Over the past month or so, I noticed an issue with my Pac-Man application, which I use for demonstrations as a basic front end that writes to a database back end, all running in Kubernetes.

- When I restored my instances using Kasten, my Pac-Man high scores were missing.

- The issue appeared after I changed my deployment files to configure MongoDB authentication using environment variables.

This blog post is a detailed walk-through of the steps I took to troubleshoot the issue, and then rectify it!

Summary if you don’t want to read the post

If you are not looking to read through this blog post, here is the summary:

- I changed MongoDB images, so I needed to configure a new mount point to match where the new image's MongoDB configuration stores its data.

- The new MongoDB image is non-root, so I had to use an Init Container to set the permissions on the PV first.

Overview of the application

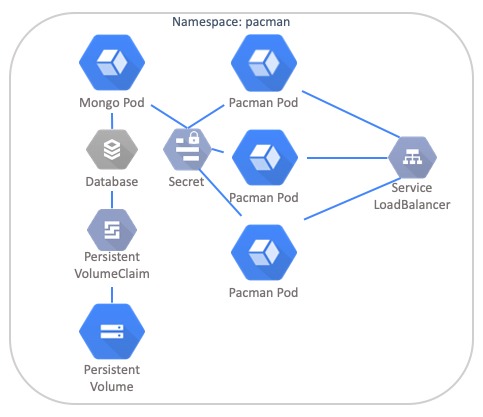

The application is made up of the following components:

- Namespace

- Deployment

  - MongoDB Pod

    - DB Authentication configured

    - Attached to a PVC

  - Pac-Man Pod

    - Node.js web front end that connects back to the MongoDB Pod by looking for the Pod DNS address internally.

- RBAC Configuration for Pod Security and Service Account

- Secret which holds the data for the MongoDB Usernames and Passwords to be configured

- Service

  - Type: LoadBalancer

  - Used to balance traffic to the Pac-Man Pods
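To make the Secret and database authentication items above a little more concrete, here is a minimal sketch of how a Secret can feed credentials to the MongoDB container through environment variables. It is not copied from my repository: the Secret name `mongodb-users-secret`, its keys, the placeholder values, the `app: mongo` label and the `bitnami/mongodb` image name are all assumptions for illustration. The `MONGODB_*` variable names are the ones the Bitnami MongoDB image (used later in this post) reads; other images use different names, so check the README of whichever image you deploy.

```yaml
# Hypothetical Secret holding the MongoDB credentials (names and values are placeholders).
apiVersion: v1
kind: Secret
metadata:
  name: mongodb-users-secret
  namespace: pacman
type: Opaque
stringData:
  database-name: pacman
  database-user: change-me
  database-password: change-me
  database-admin-password: change-me
---
# Minimal Deployment showing only the authentication wiring (no storage yet).
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mongo
  namespace: pacman
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mongo            # assumed label
  template:
    metadata:
      labels:
        app: mongo
    spec:
      containers:
      - name: mongo
        image: bitnami/mongodb   # assumed image name
        env:
        # Each variable is read from a key in the Secret above.
        - name: MONGODB_DATABASE
          valueFrom:
            secretKeyRef:
              name: mongodb-users-secret
              key: database-name
        - name: MONGODB_USERNAME
          valueFrom:
            secretKeyRef:
              name: mongodb-users-secret
              key: database-user
        - name: MONGODB_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mongodb-users-secret
              key: database-password
        - name: MONGODB_ROOT_PASSWORD
          valueFrom:
            secretKeyRef:
              name: mongodb-users-secret
              key: database-admin-password
```

Keeping the credentials in a Secret means the same values can be referenced by both the MongoDB and Pac-Man containers without repeating them in each Deployment file.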

Confirming the behaviour

The behaviour I was seeing when my application was deployed:

- Pac-Man web page – I could save a high score, and it would show in the high scores list.

  - This showed that connectivity to the database was working, as the app would hang if it could not write to the database.

- I would protect my application using Kasten. When I deleted the namespace and restored everything, my application would be running, but there were no high scores to show.

  - This was apparent when deploying branches v0.5.0 and v0.5.1 from my GitHub.

  - Deploying branch v0.2.0 would not produce the same behaviour.

    - This configuration did not have any database authentication set up, meaning MongoDB was open to anyone who could connect to it, with no username or password required.

Testing the Behaviour

First, I deployed my branch v0.2.0 code. I saved some high scores, backed up the namespace and artifacts. I then restored everything, and it worked.

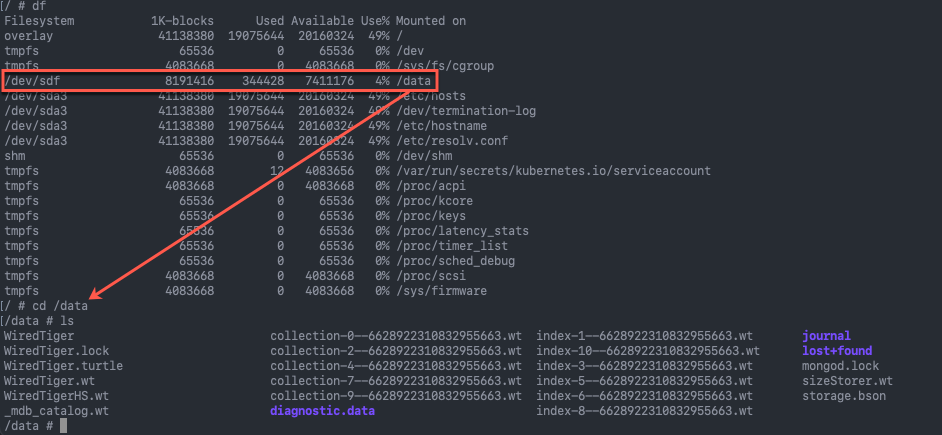

I connected to the shell of my container to look at what was happening.

kubectl exec {podname} -n {namespace} -it -- {cmd}

From here, I could see my mount point listed correctly, and when browsing the mount point, I could see the expected MongoDB files stored there.

    spec:
      serviceAccount: pacman-sa
      containers:
      - image: mongo
        name: mongo
        ports:
        - name: mongo
          containerPort: 27017
        volumeMounts:
        # The official mongo image stores its database files in /data/db
        - name: mongo-db
          mountPath: /data/db
      volumes:
      - name: mongo-db
        persistentVolumeClaim:
          claimName: mongo-storage

Next, I deleted this namespace and redeployed using my branch v0.5.1 code. I played a game of Pac-Man and saved the high score, which once again appeared to commit fine. I then backed up the data, deleted the namespace, and restored using Kasten.

I opened a shell in the pod and browsed the mount point again. There was no data.

OK, so MongoDB was not writing its data to the mounted volume, which meant the data was being kept somewhere else for some reason; at this point I assumed it was in memory.

The next steps I took to confirm behaviour:

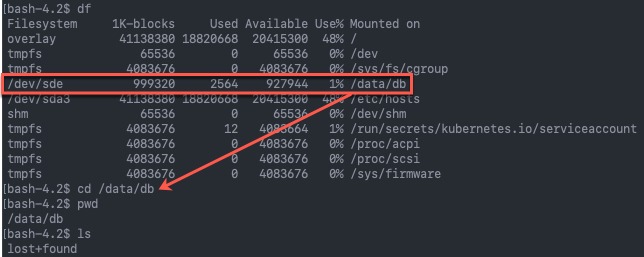

- Restore only the Persistent Volume and connect a test pod to the PV.

    apiVersion: v1
    kind: Pod
    metadata:
      name: task-pv-pod
      namespace: pacman
    spec:
      volumes:
      - name: mongo-storage
        persistentVolumeClaim:
          claimName: mongo-storage
      containers:
      - name: task-pv-container
        image: alpine:latest
        # Keep the pod alive for an hour so the volume can be inspected
        command:
        - /bin/sh
        - "-c"
        - "sleep 60m"
        volumeMounts:
        - mountPath: "/data"
          name: mongo-storage

- For the v0.2.0 deployment, this was as expected, the data is there.

- For the v0.5.1 deployment, there is no data.

I deployed both versions again and dropped the Kasten backup/restore steps.

- Deploy the version of code

- Play Pac-Man, save highscore

- Set Mongo Deployment replicas to zero

- Spin up a test pod and connect to the PVC/PV.

Confirmed same behaviour.

A few other checks I ran to ensure the volumes were being mounted correctly:

    kubectl get pod,pvc,pv -n pacman

    NAME                          READY   STATUS    RESTARTS   AGE
    pod/mongo-bdbcc7c7f-hlz6r     1/1     Running   0          77m
    pod/pacman-5dd85445bc-bvqv9   1/1     Running   1          2d3h

    NAME                                  STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
    persistentvolumeclaim/mongo-storage   Bound    pvc-36fac4ef-a09a-4cd2-b03f-eaf09c442768   1Gi        RWO            csi-sc-vmc     2d3h

    NAME                                                        CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM                      STORAGECLASS   REASON   AGE
    persistentvolume/pvc-36fac4ef-a09a-4cd2-b03f-eaf09c442768   1Gi        RWO            Delete           Bound    pacman-052/mongo-storage   csi-sc-vmc              2d3h

The Pac-Man Node.js container also has some basic logging; here we can see the successful insert of a new high score into the database.

    kubectl logs pacman-5dd85445bc-bvqv9 -n pacman

    > [email protected] start /usr/src/app
    > node .

    Listening on port 8080
    Connected to database server successfully
    Time: Thu Aug 26 2021 16:20:02 GMT+0000 (UTC)
    [GET /highscores/list]
    Time: Thu Aug 26 2021 16:20:02 GMT+0000 (UTC)
    [GET /loc/metadata]
    [getHost] HOST: pacman-5dd85445bc-bvqv9
    getCloudMetadata
    getK8sCloudMetadata
    Querying tkg-wld-01-md-0-54598b8d99-89498 for cloud data
    Request Failed. Status Code: 403
    getAWSCloudMetadata
    Time: Thu Aug 26 2021 16:20:02 GMT+0000 (UTC)
    [GET /user/id]
    Successfully inserted new user ID = 6127bf321c074a0011281673
    Time: Thu Aug 26 2021 16:20:14 GMT+0000 (UTC)
    [POST /highscores]
    body = { name: '052', cloud: '', zone: '', host: '', score: '100', level: '1' }
    host = 192.168.200.51
    user-agent = Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.107 Safari/537.36
    referer = http://192.168.200.51/
    Successfully inserted highscore
    problem with request: connect ETIMEDOUT 169.254.169.254:80
    getAzureCloudMetadata
    problem with request: connect ETIMEDOUT 169.254.169.254:80
    getGCPCloudMetadata
    problem with request: getaddrinfo ENOTFOUND metadata.google.internal metadata.google.internal:80
    getOpenStackCloudMetadata
    problem with request: connect ETIMEDOUT 169.254.169.254:80
    CLOUD: unknown
    ZONE: unknown
    HOST: pacman-5dd85445bc-bvqv9

And then finally, I checked to see the high score in Mongo by getting a shell to the Mongo container (command above):

    @mongo-bdbcc7c7f-hlz6r:/data/db$ mongo 127.0.0.1:27017/pacman -u blinky -p pinky
    MongoDB shell version v4.4.8
    connecting to: mongodb://127.0.0.1:27017/pacman?compressors=disabled&gssapiServiceName=mongodb
    Implicit session: session { "id" : UUID("a839cb26-0d6e-41ef-a730-c82ccfd3897d") }
    MongoDB server version: 4.4.8
    > show dbs
    pacman  0.000GB
    > use pacman
    switched to db pacman
    > show collections
    highscore
    userstats
    > coll = db.highscore
    pacman.highscore
    > coll.find()
    { "_id" : ObjectId("6127bf3e1c074a0011281674"), "name" : "052", "cloud" : "", "zone" : "", "host" : "", "score" : 100, "level" : 1, "date" : "Thu Aug 26 2021 16:20:14 GMT+0000 (UTC)", "referer" : "http://192.168.200.51/", "user_agent" : "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/92.0.4515.107 Safari/537.36", "hostname" : "192.168.200.51", "ip_addr" : "::ffff:100.96.2.1" }
    >

Attempting to fix the issue by changing container image

After discussing the issue with a few people in “virtual passing” (because there are no more corridor discussions when you work from home), I decided to mix things up and change the image. Everything else in the YAML files looked correct; MongoDB just wasn’t writing to disk. Maybe it was a bug in the version in use. It was also MongoDB 3.6, so I should probably try a newer release if possible.

With that, I looked at the official Mongo container, but its packaging is pretty pants in terms of initialising it for first use and the options available.

I decided to move the image to the Bitnami MongoDB image.

Moving to the Bitnami Image

I moved over to the Bitnami MongoDB image; the README file on GitHub is well produced.

I just swapped out the image in my YAML and expected it to work. It did not. Same behaviour.

I consulted another friend on the issue, and he asked one simple question, and everything fell into place:

- “Can you check the mongodb config file and make sure the data source is /data/db?”

So off I went to google where the config file is located in the container image (rather than, you know, paying attention to the documentation), so that I could check where it expects the mount point for storing the database files to be.

    # Default MongoDB Config file for Bitnami image
    /opt/bitnami/mongodb/conf/
    # If you are providing your own config file, use a mount point here
    /bitnami/mongodb/conf

Anyhow, lo and behold, the default path for the database files in the Bitnami image is:

/bitnami/mongodb/data/db

I also verified the issue by looking at the logs on the container:

{"t":{"$date":"2021-08-26T20:41:33.593+00:00"},"s":"E", "c":"STORAGE", "id":20557, "ctx":"initandlisten","msg":"DBException in initAndListen, terminating","attr":{"error":"IllegalOperation: Attempted to create a lock file on a read-only directory: /bitnami/mongodb/data/db"}}
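Putting the pieces together: my earlier `volumeMounts` entry mounted the PVC at `/data/db`, while this image keeps its data under `/bitnami/mongodb`. A minimal sketch of the adjusted Pod spec is shown here, assuming the same `pacman-sa` service account, `mongo-db` volume name and `mongo-storage` claim as the earlier spec. Mounting at the parent `/bitnami/mongodb` directory follows the persistence guidance in the Bitnami README; the `bitnami/mongodb` image name is an assumption.

```yaml
spec:
  serviceAccount: pacman-sa
  containers:
  - image: bitnami/mongodb        # assumed image name
    name: mongo
    ports:
    - name: mongo
      containerPort: 27017
    volumeMounts:
    - name: mongo-db
      # Previously /data/db, which the Bitnami image does not use for its data.
      mountPath: /bitnami/mongodb
  volumes:
  - name: mongo-db
    persistentVolumeClaim:
      claimName: mongo-storage
```

With the volume mounted here, the `/bitnami/mongodb/data/db` path from the log lands on the persistent volume rather than on the container's own file system.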

Fixing the volume mount issue and nearly winning

So I changed my Deployment file to the correct Volume Mount Point, and redeployed. This time I went straight to the logs, and I saw another error:

    # k logs mongo-9c9dcf58d-47rf6
    mongodb 20:49:49.44
    mongodb 20:49:49.44 Welcome to the Bitnami mongodb container
    mongodb 20:49:49.45 Subscribe to project updates by watching https://github.com/bitnami/bitnami-docker-mongodb
    mongodb 20:49:49.45 Submit issues and feature requests at https://github.com/bitnami/bitnami-docker-mongodb/issues
    mongodb 20:49:49.45
    mongodb 20:49:49.45 INFO ==> ** Starting MongoDB setup **
    mongodb 20:49:49.47 INFO ==> Validating settings in MONGODB_* env vars...
    mongodb 20:49:49.48 INFO ==> Initializing MongoDB...
    mongodb 20:49:49.50 INFO ==> Deploying MongoDB from scratch...
    mkdir: cannot create directory '/bitnami/mongodb/data/db': Permission denied

OK this isn’t good! Another hurdle to jump through.

Fixing the Permission Issue

The Bitnami MongoDB container image is a non-root image, meaning it doesn’t have the rights to set permissions on the mounted file system. This is to provide a more secure deployment. Helpfully, I found this listed in the Bitnami documentation, which also pointed me to the fix: an Init Container.

If you deploy the Bitnami MongoDB image using Helm, the deployment uses an Init Container to run the necessary root-level commands to prepare the environment, in this case my Persistent Volume, before running the main container. An Init Container is short-lived: it runs its prescribed task to completion and then exits before the main container starts.

So I cheated ever so slightly: I ran a Helm deployment of the Bitnami image and looked at how they were achieving this using an Init Container, along with anything else I might have missed (by this point my files were pretty complete unless I wanted to add some liveness probes).

    helm repo add bitnami https://charts.bitnami.com/bitnami
    helm install bitmongotest bitnami/mongodb --set volumePermissions.enabled=true

I then cloned over the Init Container details to my deployment files, taking careful note to change things like the Service Accounts referenced and the PVC names.
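As a rough illustration of what that looks like, here is a minimal sketch of a Deployment with an Init Container that fixes ownership of the volume before MongoDB starts. It is modelled on the approach the Bitnami chart takes when `volumePermissions.enabled=true`, but it is not a copy of the chart output or of my repository: the `busybox` and `bitnami/mongodb` image names, the `app: mongo` label and the container names are assumptions, and UID/GID `1001` is the non-root user the Bitnami images run as by default, so verify it against your image version.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: mongo
  namespace: pacman
spec:
  replicas: 1
  selector:
    matchLabels:
      app: mongo                   # assumed label
  template:
    metadata:
      labels:
        app: mongo
    spec:
      serviceAccount: pacman-sa
      initContainers:
      - name: volume-permissions   # assumed name
        image: busybox             # assumed image; any image with chown works
        # Runs once as root, hands the volume to the non-root MongoDB user, then exits.
        command: ["sh", "-c", "chown -R 1001:1001 /bitnami/mongodb"]
        securityContext:
          runAsUser: 0
        volumeMounts:
        - name: mongo-db
          mountPath: /bitnami/mongodb
      containers:
      - name: mongo
        image: bitnami/mongodb     # assumed image name
        securityContext:
          runAsUser: 1001          # assumed default non-root UID of the image
        ports:
        - name: mongo
          containerPort: 27017
        volumeMounts:
        # Same volume and path as the Init Container, now writable by UID 1001.
        - name: mongo-db
          mountPath: /bitnami/mongodb
      volumes:
      - name: mongo-db
        persistentVolumeClaim:
          claimName: mongo-storage
```

If your cluster enforces Pod Security restrictions, the service account must be permitted to run a container as root, otherwise the Init Container will not be admitted.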

Wrap Up

After using the Init Container to set the permissions, I found all my testing successful once again.

During this process, by the time I hit the Bitnami mount point issue, I had actually realised what the problem was with my original MongoDB-with-auth deployment (in branch v0.5.0): the same thing, the volume mount point. I was using a different Mongo image in that commit, as setting up auth was a lot easier with it, for the same reasons mentioned earlier in the post about the official MongoDB container.

- Example of correct Centos/mongodb-36-centos-7 mount point

    volumeMounts:
    - mountPath: /var/lib/mongodb/data
      name: mongodb-data

However, I decided to continue down the Bitnami MongoDB image path by this point, as I wanted to use a newer version of MongoDB. I put my issues down to experience, as I develop my skills and knowledge of Kubernetes and of the applications themselves. If I had taken a step back to think about things logically, I might have realised earlier that the DB configuration could be pointing at the wrong location to store the data.

Hopefully this blog post is useful to anyone reading. I just wanted to document my troubleshooting steps and what I tested. Who knows, I might forget all this, encounter the same issue again, and find my own blog whilst googling (it’s happened before).

I’ve updated my GitHub Repo, and everything from this post is captured as the working output in Branch v0.5.2.

Regards