As a vSphere administrator, you’ve built your career on understanding infrastructure at a granular level: datastores, DRS clusters, vSwitches, and HA configurations. You’re used to managing VMs at scale. Now you’re hearing about KubeVirt, and while it promises Kubernetes-native VM orchestration, it comes with a caveat: Kubernetes fluency is required. This post is designed to bridge that gap, not only explaining what KubeVirt is but also mapping its architecture, operations, and concepts directly to vSphere terminology and experience. By the end, you’ll have a mental model of KubeVirt that relates to your existing knowledge.

What is KubeVirt?

KubeVirt is a Kubernetes extension that allows you to run traditional virtual machines inside a Kubernetes cluster using the same orchestration primitives you use for containers. Under the hood, it leverages KVM (Kernel-based Virtual Machine) and QEMU to run the VMs (more on that further down).

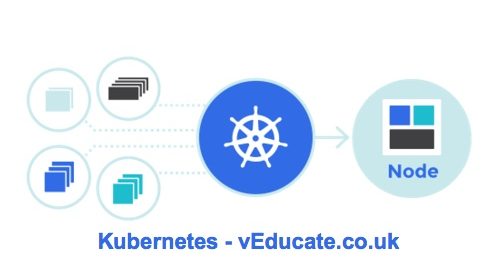

Kubernetes doesn’t replace the hypervisor; it orchestrates it. Think of Kubernetes as the vCenter equivalent here: it manages the control plane, networking, scheduling, and storage interfaces for the VMs, while KubeVirt is a plugin that adds VM resource types to this environment.
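To make "VM resource types" a little more concrete, here is a minimal sketch in Python that builds a VirtualMachine manifest as a plain dictionary and prints it as JSON (kubectl accepts JSON as well as YAML). The VM name, namespace, PVC name, and sizing are hypothetical placeholders, and the field names follow the `kubevirt.io/v1` API as commonly documented, so verify them against the docs for your KubeVirt version.

```python
import json


def build_vm_manifest(name, namespace, pvc_name, cores=2, memory="4Gi"):
    """Return a KubeVirt VirtualMachine manifest as a plain dict.

    All argument values are illustrative; nothing here talks to a cluster.
    """
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachine",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # "Always" asks KubeVirt to keep a running instance of this VM.
            "runStrategy": "Always",
            "template": {
                "metadata": {"labels": {"kubevirt.io/vm": name}},
                "spec": {
                    "domain": {
                        "cpu": {"cores": cores},
                        "resources": {"requests": {"memory": memory}},
                        "devices": {
                            "disks": [
                                {"name": "rootdisk", "disk": {"bus": "virtio"}}
                            ],
                            "interfaces": [
                                {"name": "default", "masquerade": {}}
                            ],
                        },
                    },
                    # Attach the VM to the default pod network.
                    "networks": [{"name": "default", "pod": {}}],
                    # Back the root disk with an existing PVC (assumed to exist).
                    "volumes": [
                        {
                            "name": "rootdisk",
                            "persistentVolumeClaim": {"claimName": pvc_name},
                        }
                    ],
                },
            },
        },
    }


if __name__ == "__main__":
    manifest = build_vm_manifest("demo-vm", "team-a", "demo-vm-root")
    print(json.dumps(manifest, indent=2))
```

Saving the output to a file and running `kubectl apply -f` against it is roughly the equivalent of registering a VM in vCenter: the object describes the desired state, and the cluster works to make it true.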

Tip: KubeVirt is under active development; always check the latest docs for feature support.

Core Building Blocks of KubeVirt, Mapped to vSphere

| KubeVirt Concept | vSphere Equivalent | Description |
|---|---|---|
| VirtualMachine (CRD) | VM Object in vCenter | The declarative spec for a VM in YAML. It defines the template, lifecycle behaviour, and metadata. |
| VirtualMachineInstance (VMI) | Running VM Instance | The live instance of a VM, created and managed by Kubernetes. Comparable to a powered-on VM object. |
| virt-launcher | ESXi Host Process | A pod wrapper for the VM process. Runs QEMU in a container on the node. |
| PersistentVolumeClaim (PVC) | VMFS Datastore + VMDK | Used to back VM disks. For live migration, either ReadWriteMany PVCs or RAW block-mode volumes are required, depending on the storage backend. |
| Multus + CNI | vSwitch, Port Groups, NSX | Provides networking to VMs. Multus enables multiple network interfaces. CNIs map to port groups. |
| Kubernetes Scheduler | DRS | Schedules pods (including VMIs) across nodes. Lacks fine-tuned VM-aware resource balancing unless extended. |
| Live Migration API | vMotion | Live migration of VMIs between nodes with zero downtime. Requires shared storage and certain flags. |
| Namespaces | vApp / Folder + Permissions | Isolation boundaries for VMs, including RBAC policies. |
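Several rows in the table correspond to small Kubernetes objects. The sketch below, again in Python and again only building dictionaries, shows what three of them might look like: a ReadWriteMany PVC for a migratable disk, a secondary Multus interface (the rough analogue of attaching a vNIC to a port group), and a `VirtualMachineInstanceMigration` object that requests a live migration. Every name, size, and the `vlan100-net` NetworkAttachmentDefinition is a hypothetical placeholder; treat the field names as assumptions to check against your cluster's API versions.

```python
import json


def build_rwx_pvc(name, namespace, size, storage_class=None, block=False):
    """PVC with ReadWriteMany access, so more than one node can attach it."""
    spec = {
        "accessModes": ["ReadWriteMany"],
        "volumeMode": "Block" if block else "Filesystem",
        "resources": {"requests": {"storage": size}},
    }
    if storage_class is not None:
        spec["storageClassName"] = storage_class
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name, "namespace": namespace},
        "spec": spec,
    }


def secondary_network(name, nad_name):
    """Return the (interface, network) fragments for a Multus-backed NIC.

    The fragments belong under domain.devices.interfaces and spec.networks
    of a VM template; nad_name refers to a NetworkAttachmentDefinition
    that is assumed to exist already.
    """
    interface = {"name": name, "bridge": {}}
    network = {"name": name, "multus": {"networkName": nad_name}}
    return interface, network


def build_migration(vmi_name, namespace):
    """Object that asks KubeVirt to live-migrate a running VMI."""
    return {
        "apiVersion": "kubevirt.io/v1",
        "kind": "VirtualMachineInstanceMigration",
        "metadata": {"name": f"{vmi_name}-migration", "namespace": namespace},
        "spec": {"vmiName": vmi_name},
    }


if __name__ == "__main__":
    pvc = build_rwx_pvc("demo-vm-root", "team-a", "40Gi", block=True)
    iface, net = secondary_network("vlan100", "vlan100-net")
    migration = build_migration("demo-vm", "team-a")
    for obj in (pvc, {"interface": iface, "network": net}, migration):
        print(json.dumps(obj, indent=2))
```

Note the difference in style from vMotion: rather than calling an action on a VM, you create an object that describes the migration you want, and KubeVirt's controllers carry it out and record the result on that object.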

KVM + QEMU: The Hypervisor Stack
