vSphere Orphaned First Class Disk (FCD) Cleanup Script

Orphaned First Class Disks (FCDs) in VMware vSphere environments are a surprisingly common and frustrating issue. These are virtual disks that exist on datastores but are no longer associated with any virtual machine or Kubernetes persistent volume (via CNS). They typically occur due to:

- Unexpected VM deletions without proper disk clean-up

- Kubernetes CSI driver misfires, especially during crash loops or failed PVC deletes

- vCenter restarts or failovers during CNS volume provisioning or deletion

- Manual admin operations gone slightly sideways!

Left unchecked, orphaned FCDs can consume significant storage space, cause inventory clutter, and confuse both admins and automation pipelines that expect everything to be nice and tidy.

🛠️ What does this script do?

Inspired by William Lam’s original blog post on FCD cleanup, this script takes the concept further with modern PowerCLI best practices.

You can download and use the latest version of the script from my GitHub repo:

👉 https://github.com/saintdle/PowerCLI/blob/saintdle-patch-1/Cleanup%20standalone%20FCD

Here’s what it does:

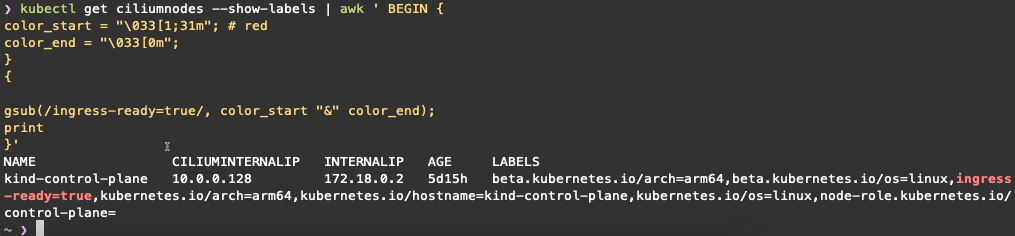

- Checks if you’re already connected to vCenter; if not, prompts you to connect

- Retrieves all existing First Class Disks (FCDs) using `Get-VDisk`

- Retrieves all Kubernetes-managed volumes using `Get-CnsVolume`

- Excludes any FCDs still managed by Kubernetes (CNS)

- For each remaining “orphaned” FCD, checks if it is mounted to any VM (even if Kubernetes doesn’t know about it)

- Generates a report (CSV + logs) of any truly orphaned FCDs (not in CNS and not attached to any VM)

- If dry-run mode is OFF, safely removes the orphaned FCDs from the datastore
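The filtering steps above boil down to a set difference: start with every FCD, then drop anything CNS still manages and anything attached to a VM. Here's a minimal sketch of that logic on mock data, not the actual script. `Select-OrphanedDisk`, its parameters, and the `Id`/`Name` properties on the mock objects are all hypothetical. In a real run the inputs would be derived from `Get-VDisk` and `Get-CnsVolume` output, and how the IDs on those objects line up is something you'd need to confirm in your own environment.

```powershell
# Hypothetical helper (not from the script): returns the disks that are
# neither CNS-managed nor attached to a VM.
function Select-OrphanedDisk {
    param(
        [object[]] $Disk = @(),            # all FCDs, e.g. derived from Get-VDisk
        [string[]] $CnsDiskId = @(),       # IDs of disks backing CNS volumes
        [string[]] $AttachedDiskId = @()   # IDs of disks attached to any VM
    )

    # Case-insensitive set for fast membership checks.
    $managed = [System.Collections.Generic.HashSet[string]]::new(
        [System.StringComparer]::OrdinalIgnoreCase)
    foreach ($id in @($CnsDiskId) + @($AttachedDiskId)) {
        if ($id) { [void]$managed.Add($id) }
    }

    # Keep only disks whose ID appears in neither list.
    $Disk | Where-Object { -not $managed.Contains([string]$_.Id) }
}

# Mock data standing in for real inventory objects (IDs are made up).
$allDisks = @(
    [pscustomobject]@{ Id = 'fcd-001'; Name = 'pvc-aaa' }   # still in CNS
    [pscustomobject]@{ Id = 'fcd-002'; Name = 'pvc-bbb' }   # nobody owns it
    [pscustomobject]@{ Id = 'fcd-003'; Name = 'old-data' }  # attached to a VM
)

$orphans = Select-OrphanedDisk -Disk $allDisks -CnsDiskId 'fcd-001' -AttachedDiskId 'fcd-003'
$orphans | Format-Table Id, Name
```

With this mock data, only `fcd-002` survives both filters, so it's the only disk that would land in the report.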

The script is intentionally designed safety-first: dry-run mode is ON by default. To allow deletions, you must explicitly pass `-DryRun:$false`, optionally together with `-AutoDelete`.
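To show what "dry-run by default" can look like in PowerShell, here's a rough sketch of the pattern. Only the `-DryRun` name comes from the script. `Remove-OrphanedDisk`, the `-RemoveAction` hook, and the mock disk are hypothetical, and `-AutoDelete` isn't modeled here at all. The hook is just there so the example runs without a vCenter; in real use it could wrap something like `Remove-VDisk -VDisk $d -Confirm:$false`.

```powershell
# Hypothetical wrapper illustrating a dry-run-by-default parameter.
function Remove-OrphanedDisk {
    param(
        [object[]] $Disk = @(),
        [bool] $DryRun = $true,               # safe default: report only
        [scriptblock] $RemoveAction = { }     # hypothetical hook doing the real delete
    )

    foreach ($d in $Disk) {
        if ($DryRun) {
            # Nothing is touched; just say what would happen.
            "[DRY-RUN] Would remove $($d.Name) ($($d.Id))"
        } else {
            & $RemoveAction $d | Out-Null
            "Removed $($d.Name) ($($d.Id))"
        }
    }
}

# Mock candidate (made-up ID and name).
$candidates = @([pscustomobject]@{ Id = 'fcd-002'; Name = 'pvc-bbb' })

# Default call: only reports.
Remove-OrphanedDisk -Disk $candidates

# Explicit opt-in: the action runs. Here it just records the ID.
$removed = [System.Collections.Generic.List[string]]::new()
Remove-OrphanedDisk -Disk $candidates -DryRun:$false -RemoveAction {
    param($d)
    $removed.Add($d.Id)
}
```

The point of defaulting to `$true` is that forgetting a parameter can never delete anything. You have to type the opt-out on purpose.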

❗ Known limitations and gotchas

Despite our best efforts, there is one notorious problem child: the dreaded locked or “current state” error.

You may still see errors like:

The operation is not allowed in the current state.

This happens when vSphere believes something (an ESXi host, a failed task, or the VASA provider) has an active reference to the FCD. These “ghost locks” can only be diagnosed and resolved by:

- Using ESXi shell commands like `vmkfstools -D` to trace lock owners

- Rebooting an ESXi host holding the lock

- Engaging VMware GSS to clear internal stale references (sometimes the only safe option)

This script does not attempt to forcibly unlock or clean these disks for obvious reasons. You really don’t want a script going full cowboy on locked production disks. 😅

So while the script works great for truly orphaned disks, ghost FCDs are a special case and remain an exercise for the reader (or your VMware TAM and GSS support team!).
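One way to stop a single locked disk from aborting the whole run is to catch the failure per disk and note it for follow-up. The sketch below illustrates that idea and isn't taken from the script. `Invoke-DiskRemoval`, the `-RemoveAction` hook, and the mock IDs are hypothetical, and the mock action simply throws the error text quoted above. With real cmdlets you'd likely need `-ErrorAction Stop` inside the action so the error becomes catchable.

```powershell
# Hypothetical helper: try each removal, record failures instead of stopping.
function Invoke-DiskRemoval {
    param(
        [object[]] $Disk = @(),
        [scriptblock] $RemoveAction = { }   # hypothetical hook doing the real delete
    )

    foreach ($d in $Disk) {
        try {
            & $RemoveAction $d | Out-Null
            [pscustomobject]@{ Id = $d.Id; Status = 'Removed'; Message = $null }
        } catch {
            # No unlock attempt here: just log it and move on to the next disk.
            [pscustomobject]@{ Id = $d.Id; Status = 'Failed'; Message = $_.Exception.Message }
        }
    }
}

# Mock disks (made-up IDs); the second one simulates a ghost lock.
$disks = @(
    [pscustomobject]@{ Id = 'fcd-002' }
    [pscustomobject]@{ Id = 'fcd-locked' }
)

$results = Invoke-DiskRemoval -Disk $disks -RemoveAction {
    param($d)
    if ($d.Id -eq 'fcd-locked') {
        throw 'The operation is not allowed in the current state.'
    }
}

# Anything listed here needs a human (or GSS), not a retry loop.
$results | Where-Object { $_.Status -eq 'Failed' } | Format-Table Id, Message
```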

⚠️ Before you copy/paste this blindly…

Let me be brutally honest: this script is just some random code stitched together by me, a PowerCLI enthusiast with far too much time on my hands, and enhanced by ChatGPT. It’s never been properly tested in a production environment.
