vRealize Log Insight (vRLI) 8.6 brings the ability to build a hybrid log management platform that combines an on-premises vRLI deployment with vRLI Cloud.

In this blog post, we’ll look at how to configure the following feature from the release notes:

- Simplify Log Archival with Non-Indexed Partitions: Use vRealize Log Insight Cloud to archive logs to meet your long-term retention requirements. vRealize Log Insight Cloud provides a no-limit logging solution at a low cost and eliminates any storage management overheads of the past. This enables easy accessibility to archived logs through on-demand queries.

For this, you will need access to a vRealize Log Insight Cloud Instance, with a cloud proxy deployed to your environment that can be accessed by the on-premises vRealize Log Insight platform.

The expectation is that you forward your on-premises vRealize Log Insight logs to the vRealize Log Insight Cloud instance and store them only in a Non-Indexed Partition (discussed below), because your on-premises deployment acts as the easy-to-analyse, near-time (within 30 days) copy of your logs. In this blog post I also explore the configuration and use of Indexed Partitions, which offer that same near-time usability and analysis of logs.

The high-level steps for the configuration discussed in this blog post are:

- Send infrastructure or application logs to your on-premises vRealize Log Insight deployment

- Set up the cloud proxy (if not already done)

- Set up log forwarding from the on-premises Log Insight instance

- In vRealize Log Insight Cloud, configure a Non-Indexed Partition to receive the forwarded logs

What are Log Partitions?

Log Partitions are a feature that allows you to ingest logs based on user-defined filters. This feature is available as a paid subscription (or Trial).

There are two types of Log Partitions:

- Indexed Partitions

  - Stores logs for up to 30 days

  - Billed only for the volume of logs ingested into the partition

  - Search and analyse logs in this partition without additional costs

- Non-Indexed Partitions

  - Stores logs for up to 7 years

  - Billed for the volume of logs ingested into the partition, and for searching the logs

  - If you need to query logs frequently, you can move logs to a recall partition for 30 days

    - No additional cost for searching and analysing logs in the recall partition

Logs that do not match the query criteria of any configured partition are stored in the Default Indexed Partition. This partition is read-only and stores logs for 30 days.

Note:

- Alerts and dashboard widgets are not operational in non-indexed partitions.

- Log partitions store logs ingested in the last 24 hours only.

- You can create a maximum of 10 log partitions in an organization.
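To make the routing behaviour easier to picture, here is a minimal Python sketch of how logs might be assigned to partitions. It is only a mental model, not how vRealize Log Insight Cloud is implemented: the `Partition` class, the `route` function, the example filter, and the first-match-wins rule are all assumptions made for illustration. Only the retention periods, the fallback to the Default Indexed Partition, and the limit of 10 partitions come from the description above.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

MAX_PARTITIONS = 10  # per-organization limit mentioned in the note above


@dataclass
class Partition:
    """Hypothetical model of a log partition (not a real vRLI Cloud object)."""
    name: str
    indexed: bool
    retention_days: int
    matches: Callable[[Dict[str, str]], bool]  # stands in for the user-defined filter


# Catch-all partition: read-only in the product, 30 days of retention.
DEFAULT_PARTITION = Partition(
    name="Default Indexed Partition",
    indexed=True,
    retention_days=30,
    matches=lambda event: True,
)


def route(event: Dict[str, str], partitions: List[Partition]) -> Partition:
    """Return the partition an event lands in.

    Assumption: the first partition whose filter matches wins. Events that
    match no user-defined partition fall back to the default partition.
    """
    if len(partitions) > MAX_PARTITIONS:
        raise ValueError(f"at most {MAX_PARTITIONS} log partitions are allowed")
    for partition in partitions:
        if partition.matches(event):
            return partition
    return DEFAULT_PARTITION


# Example: archive everything from a (hypothetical) TKG source for up to 7 years.
tkg_archive = Partition(
    name="tkg-archive",
    indexed=False,
    retention_days=7 * 365,
    matches=lambda event: event.get("source") == "tkg",
)

print(route({"source": "tkg", "text": "pod started"}, [tkg_archive]).name)
print(route({"source": "esxi", "text": "vmkernel msg"}, [tkg_archive]).name)
```

In this sketch, the first event lands in the non-indexed `tkg-archive` partition, while the second matches no filter and falls through to the Default Indexed Partition.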

Example Logs

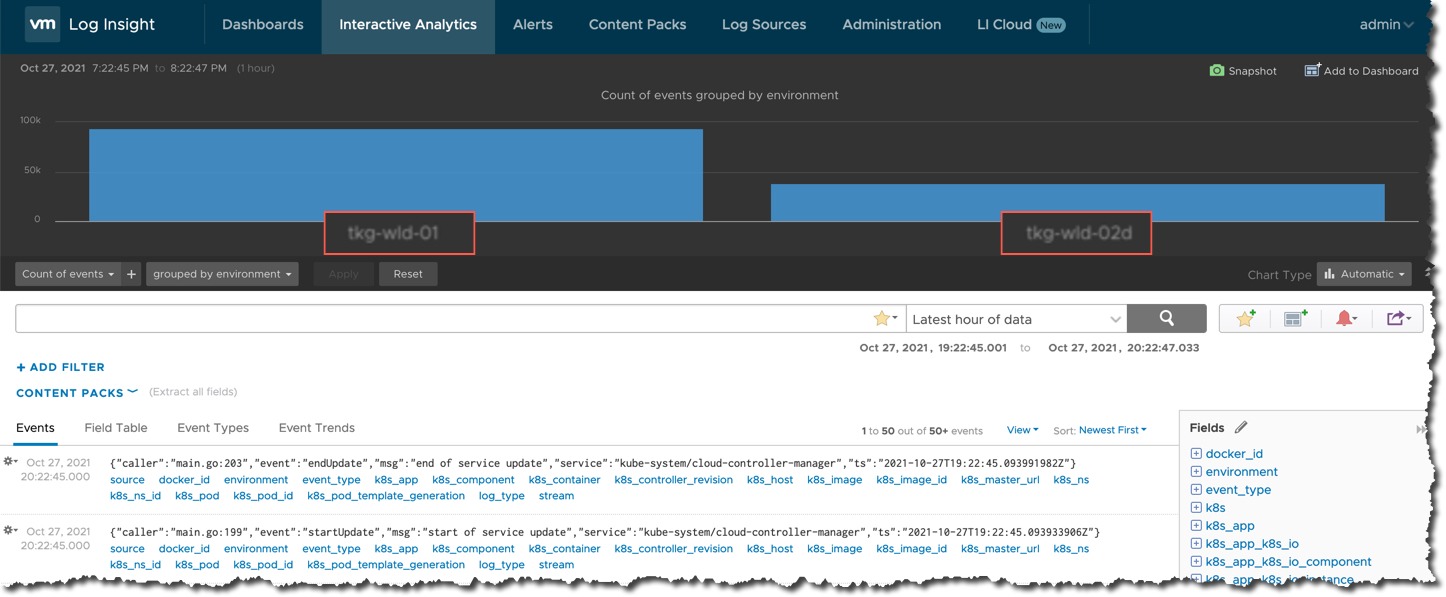

In my Log Insight environment, I have set up the Fluentd configuration to forward the Tanzu Kubernetes Grid logs from two clusters to vRealize Log Insight (on-premises deployment).

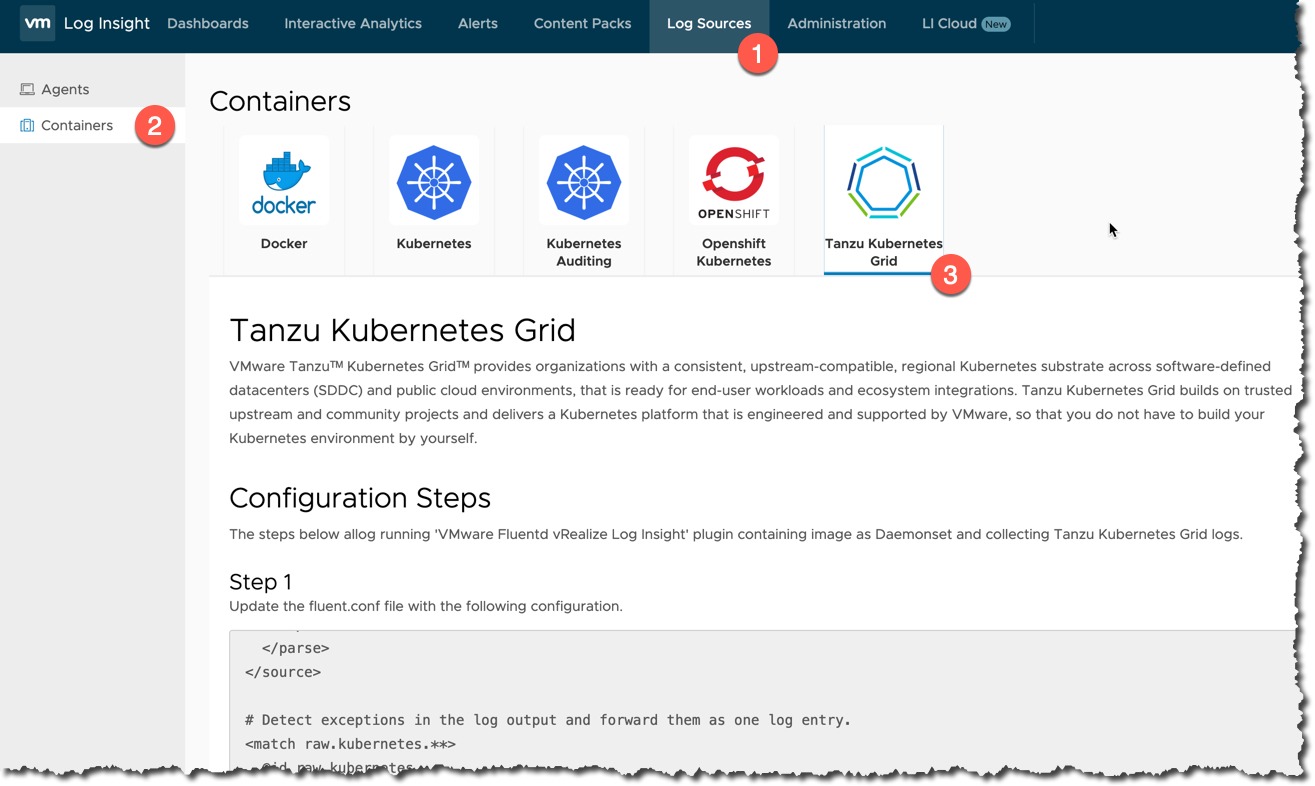

You can find the configuration settings for this within vRealize Log Insight, under the Sources Tab > Containers > Tanzu Kubernetes Grid.

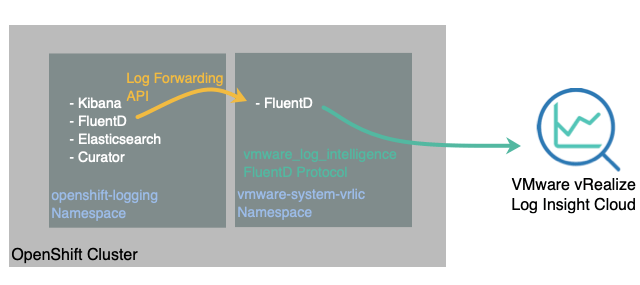
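If you don't have a Fluentd source to hand and just want a test event in the on-premises instance, the Log Insight ingestion REST API is one option. The sketch below only builds the request and does not send it. The hostname, the `build_ingest_request` helper, and the field names are hypothetical, and the port (9543) and path (`/api/v1/events/ingest/<agent-id>`) reflect my understanding of the ingestion API, so check them against the API documentation for your version before relying on them.

```python
import json
import uuid
from typing import Dict, Optional, Tuple


def build_ingest_request(
    host: str,
    text: str,
    fields: Optional[Dict[str, str]] = None,
    agent_id: Optional[str] = None,
) -> Tuple[str, bytes]:
    """Build the URL and JSON body for a single test event.

    Assumptions: HTTPS ingestion on port 9543 and an "events" payload made of
    a "text" string plus a list of name/content field pairs.
    """
    agent_id = agent_id or str(uuid.uuid4())  # any UUID identifying the sender
    url = f"https://{host}:9543/api/v1/events/ingest/{agent_id}"
    event = {
        "text": text,
        "fields": [
            {"name": name, "content": content}
            for name, content in (fields or {}).items()
        ],
    }
    body = json.dumps({"events": [event]}).encode("utf-8")
    return url, body


# Hypothetical hostname and field, for illustration only.
url, body = build_ingest_request(
    "vrli.example.local",
    "Test event for log forwarding",
    fields={"cluster": "tkg-01"},
)
print(url)
print(body.decode("utf-8"))
```

The resulting URL and body can then be posted with any HTTP client, using a `Content-Type: application/json` header. Tagging the event with a recognisable field makes it easier to find later when checking that forwarding to the cloud instance works.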

Set up the Cloud Proxy
