In this post I document the steps to upgrade the vSphere CSI Driver, especially when you have to jump several versions to reach the latest release.

Upgrade from pre-v2.3.0 CSI Driver version to v2.3.0

First, you need to figure out which version of the vSphere CSI Driver you are running.

For me this was easy, as I could look it up in the Tanzu Kubernetes Grid release notes. Otherwise, refer to the deployment manifests in your cluster. If you are still unsure, contact VMware Support for assistance.
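If the release notes are not to hand, the image tag on the running controller usually gives the version away. Below is a minimal sketch, assuming the pre-v2.3.0 driver runs as a deployment named `vsphere-csi-controller` in the `kube-system` namespace and that its images are referenced by tag rather than by digest. The helper names `csi_images` and `image_tag` are my own, not part of any tooling.

```shell
# Print every container image used by the CSI controller deployment.
# $1 = namespace (assumed to be kube-system for pre-v2.3.0 installs).
csi_images() {
  kubectl get deployment vsphere-csi-controller -n "$1" \
    -o jsonpath='{range .spec.template.spec.containers[*]}{.image}{"\n"}{end}'
}

# Strip everything up to the last colon, leaving only the tag.
# Assumes the image reference ends in ":<tag>".
image_tag() {
  printf '%s\n' "${1##*:}"
}

# Usage against a live cluster:
#   csi_images kube-system
#   image_tag "$(csi_images kube-system | grep driver)"
```

The tag on the driver image should line up with one of the release tags in the GitHub repository.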

Next, find the manifests for that version. You can do this by browsing the releases by tag in the vsphere-csi-driver GitHub repository.

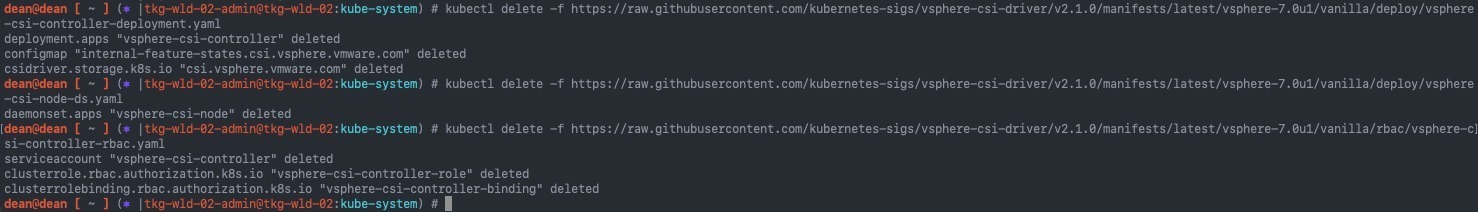

Then remove the resources created by those manifests. Below are the commands to remove the version 2.1.0 installation of the CSI driver.

kubectl delete -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.1.0/manifests/latest/vsphere-7.0u1/vanilla/deploy/vsphere-csi-controller-deployment.yaml

kubectl delete -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.1.0/manifests/latest/vsphere-7.0u1/vanilla/deploy/vsphere-csi-node-ds.yaml

kubectl delete -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.1.0/manifests/latest/vsphere-7.0u1/vanilla/rbac/vsphere-csi-controller-rbac.yaml

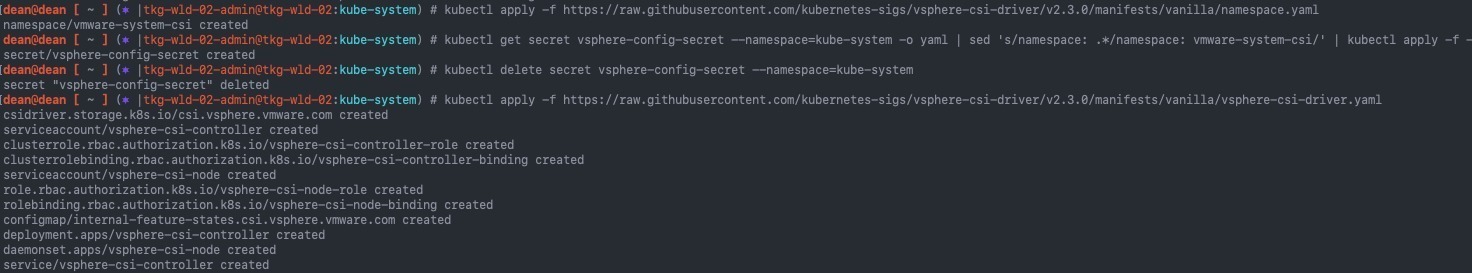

Now we need to create the new namespace, “vmware-system-csi”, where all new and future vSphere CSI Driver components will run.

kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.3.0/manifests/vanilla/namespace.yaml

Next, we migrate the existing vSphere configuration secret from its location in the “kube-system” namespace to the new “vmware-system-csi” namespace.

kubectl get secret vsphere-config-secret --namespace=kube-system -o yaml | sed 's/namespace: .*/namespace: vmware-system-csi/' | kubectl apply -f -
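To see what the `sed` step does before running it against the cluster, the same expression can be tried on a sample manifest. This is only a sketch: the secret below is a made-up, trimmed-down example with a placeholder `data` value, not real output from my environment, and `rewrite_namespace` is a hypothetical wrapper name.

```shell
# The same sed expression as in the migration command, wrapped for reuse.
rewrite_namespace() {
  sed 's/namespace: .*/namespace: vmware-system-csi/'
}

# Sample input: roughly what `kubectl get secret ... -o yaml` prints, trimmed.
rewrite_namespace <<'EOF'
apiVersion: v1
kind: Secret
metadata:
  name: vsphere-config-secret
  namespace: kube-system
type: Opaque
data:
  csi-vsphere.conf: cGxhY2Vob2xkZXI=
EOF
```

The expression rewrites any line containing `namespace: `, not just the one under `metadata`, so it is worth eyeballing the output before piping it into `kubectl apply`.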

Delete the original secret in the “kube-system” namespace.

kubectl delete secret vsphere-config-secret --namespace=kube-system
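A slightly more cautious variant of this delete step, sketched as a hypothetical helper called `remove_old_secret`: it only deletes the original once the copy can be read back from the new namespace.

```shell
# Delete the kube-system secret only if the migrated copy exists.
remove_old_secret() {
  if kubectl get secret vsphere-config-secret --namespace=vmware-system-csi >/dev/null 2>&1; then
    kubectl delete secret vsphere-config-secret --namespace=kube-system
  else
    echo "vsphere-config-secret not found in vmware-system-csi; keeping the original" >&2
    return 1
  fi
}

# Usage against a live cluster:
#   remove_old_secret
```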

Now deploy the manifest for vSphere CSI Driver version 2.3.0.

kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.3.0/manifests/vanilla/vsphere-csi-driver.yaml

Below you can see all the commands running in my environment.

You can scale the vSphere CSI Controller deployment to match the number of control-plane nodes in your environment by setting --replicas to that number. The example below uses 1.

kubectl scale deployment vsphere-csi-controller --replicas=1 -n vmware-system-csi
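Rather than hard-coding the replica count, it can be derived from the node labels. This is a sketch that assumes your control-plane nodes carry the `node-role.kubernetes.io/control-plane` label. Older clusters may use `node-role.kubernetes.io/master` instead, so check with `kubectl get nodes --show-labels` first. `scale_csi_controller` is a hypothetical helper name.

```shell
scale_csi_controller() {
  # Count the nodes that carry the control-plane role label (assumed label).
  count=$(kubectl get nodes -l node-role.kubernetes.io/control-plane --no-headers | wc -l | tr -d ' ')
  if [ "$count" -lt 1 ]; then
    echo "no control-plane nodes matched the label; not scaling" >&2
    return 1
  fi
  # Scale the controller to one replica per control-plane node.
  kubectl scale deployment vsphere-csi-controller --replicas="$count" -n vmware-system-csi
}

# Usage against a live cluster:
#   scale_csi_controller
```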

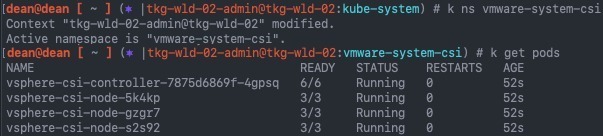

You can check the status of the pods by running the following command.

kubectl get pods -n vmware-system-csi
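If you would rather block until everything is ready than re-run the command by hand, `kubectl rollout status` can wait for you. A sketch, assuming the v2.3.0 manifest creates a deployment named `vsphere-csi-controller` and a daemonset named `vsphere-csi-node` (check the names with `kubectl get deploy,ds -n vmware-system-csi`). The 120 second timeout is an arbitrary choice and `wait_for_csi` is a hypothetical helper name.

```shell
# Wait for the controller and the node daemonset to finish rolling out.
wait_for_csi() {
  kubectl rollout status deployment/vsphere-csi-controller -n vmware-system-csi --timeout=120s &&
    kubectl rollout status daemonset/vsphere-csi-node -n vmware-system-csi --timeout=120s
}

# Usage against a live cluster:
#   wait_for_csi && kubectl get pods -n vmware-system-csi
```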

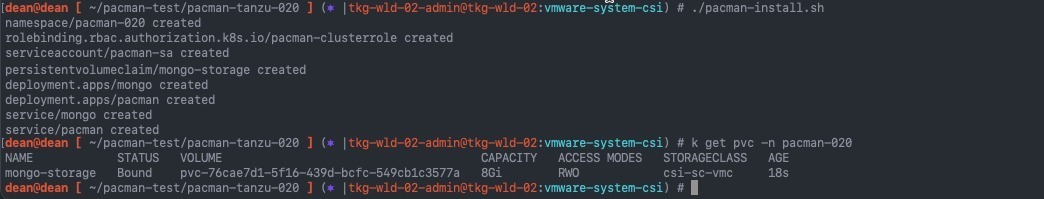

Finally, I checked that I could still create a PVC and its associated Persistent Volume on the vSphere environment. I used my trusty Pac-Man application for this test.
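If you do not have a test application handy, a throwaway PVC does the same job. This is a minimal sketch rather than what I ran: the claim name `csi-upgrade-test`, the 1Gi size and the helper `create_test_pvc` are all made up for illustration, and you need to pass in the name of a StorageClass that already exists in your cluster and is backed by the vSphere CSI driver.

```shell
# Create a small test PVC.
# $1 = name of an existing StorageClass backed by the vSphere CSI driver.
create_test_pvc() {
  kubectl apply -f - <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: csi-upgrade-test
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 1Gi
  storageClassName: $1
EOF
}

# Usage against a live cluster (replace my-storage-class with your own):
#   create_test_pvc my-storage-class
#   kubectl get pvc csi-upgrade-test
#   kubectl delete pvc csi-upgrade-test
```

With a StorageClass that binds immediately, the claim should move to Bound once the volume has been provisioned. If the StorageClass uses WaitForFirstConsumer, it stays Pending until a pod mounts it.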

Upgrade from v2.3.0 to the latest

kubectl apply -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.4.0/manifests/vanilla/vsphere-csi-driver.yaml

Summary and wrap-up

The steps follow the official documentation. The main point to remember: if you are running a version below v2.3.0, you need to upgrade to v2.3.0 first, and only then to the latest version. There will be no changes to your PVCs or PVs.
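The "get to v2.3.0 first" rule can be expressed as a small check. This is only a sketch with a hypothetical helper name, `needs_v230_first`, and it relies on `sort -V`, which is a GNU coreutils extension and may not be available everywhere.

```shell
# Succeeds (exit 0) when the given version is older than v2.3.0,
# i.e. when the intermediate upgrade to v2.3.0 is needed first.
needs_v230_first() {
  current=${1#v}   # accept "v2.1.0" as well as "2.1.0"
  [ "$current" != "2.3.0" ] &&
    [ "$(printf '%s\n' "$current" 2.3.0 | sort -V | head -n 1)" = "$current" ]
}

for v in v2.1.0 v2.3.0 v2.4.0; do
  if needs_v230_first "$v"; then
    echo "$v: upgrade to v2.3.0 first"
  else
    echo "$v: can go straight to the latest"
  fi
done
```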

If you are unsure about any configuration changes or the state of your environment, log a support call with VMware Support for assistance and validation.

Regards