You may have seen my blog post “How to Install and configure vSphere CSI Driver on OpenShift 4.x”.

In that post, I updated the vSphere CSI driver to work with the additional security constraints that are baked into OpenShift 4.x.

Since then, one of the things on my list to test has been file volumes backed by vSAN File shares. This feature is available in vSphere 7.0.

Well, I’m glad to report it does in fact work. By using my CSI driver (see the blog post above or my GitHub), you can simply deploy and consume vSAN File Services, as per the documentation here.

I’ve updated the examples in my GitHub repository to get this working.

OK just tell me what to do…

First and foremost, you need to add additional configuration to the csi conf file (csi-vsphere-for-ocp.conf).

If you do not, the defaults are assumed, which means full read-write access from any IP to the file shares created.

    [Global]
    # run the following on your OCP cluster to get the ID
    # oc get clusterversion -o jsonpath='{.items[].spec.clusterID}{"\n"}'
    cluster-id = c6d41ba1-3b67-4ae4-ab1e-3cd2e730e1f2

    [NetPermissions "A"]
    ips = "*"
    permissions = "READ_WRITE"
    rootsquash = false

    [VirtualCenter "10.198.17.253"]
    insecure-flag = "true"
    user = "[email protected]"
    password = "Admin!23"
    port = "443"
    datacenters = "vSAN-DC"
    targetvSANFileShareDatastoreURLs = "ds:///vmfs/volumes/vsan:52c229eaf3afcda6-7c4116754aded2de/"
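The `[NetPermissions "A"]` block above simply spells out the defaults (any IP, read-write, no root squash). If you want something tighter, a minimal sketch could look like the following. The subnets are placeholders I have made up for illustration, so swap in the networks your worker nodes actually use, and treat the whole thing as an untested starting point rather than a recommended policy.

```ini
# Sketch only: both subnets below are hypothetical placeholders.

# Read-write access for one subnet, with root squash enabled
[NetPermissions "A"]
ips = "10.20.20.0/24"
permissions = "READ_WRITE"
rootsquash = true

# Read-only access for a second subnet
[NetPermissions "B"]
ips = "10.30.30.0/24"
permissions = "READ_ONLY"
rootsquash = true
```

These blocks would replace the single `[NetPermissions "A"]` block in the conf file; the `[Global]` and `[VirtualCenter]` sections stay as they are.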

Next, create a storage class that is configured to consume vSAN File Services.

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: file-services-sc
      annotations:
        storageclass.kubernetes.io/is-default-class: "false"
    provisioner: csi.vsphere.vmware.com
    parameters:
      storagepolicyname: "vSAN Default Storage Policy"  # Optional Parameter
      csi.storage.k8s.io/fstype: "nfs4"  # Optional Parameter

Then create a PVC to prove it works.

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: file-pvc-1
      labels:
        name: file-pvc-1
        platform: ocp
    spec:
      accessModes:
        - ReadWriteMany
      resources:
        requests:
          storage: 1Gi
      storageClassName: file-services-sc

Finally, create a pod to consume the PVC.

    apiVersion: v1
    kind: Pod
    metadata:
      name: example-vanilla-file-pod1
    spec:
      containers:
        - name: test-container
          image: gcr.io/google_containers/busybox:1.24
          # Append a line to a file on the share, then keep the pod alive
          command: ["/bin/sh", "-c", "echo 'Hello! This is Pod1' >> /mnt/volume1/index.html && while true ; do sleep 2 ; done"]
          volumeMounts:
            - name: test-volume
              mountPath: /mnt/volume1
      restartPolicy: Never
      volumes:
        - name: test-volume
          persistentVolumeClaim:
            # Must match the name of the PVC created above
            claimName: file-pvc-1

Now what does it look like in vCenter?

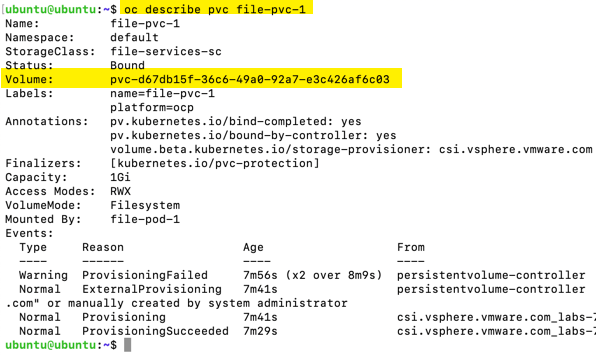

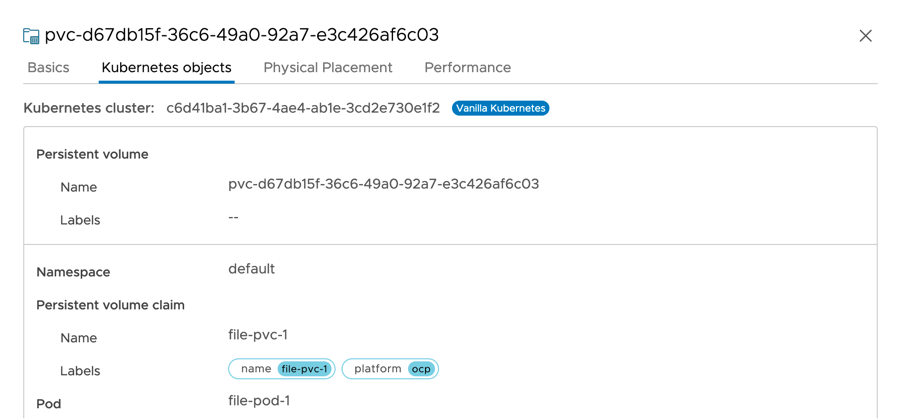

First, let’s check a file services PVC that I’ve created from the above example. We can see the pvc-{uuid} name, which is how it will appear in vCenter (I’ve used labels as well).

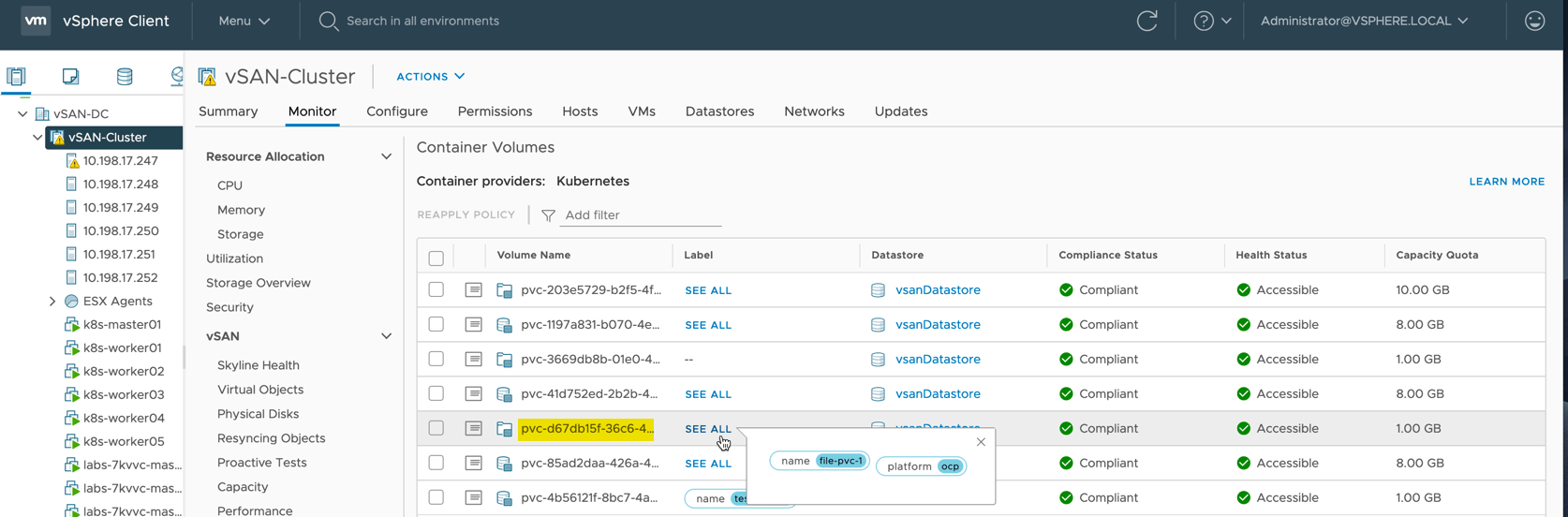

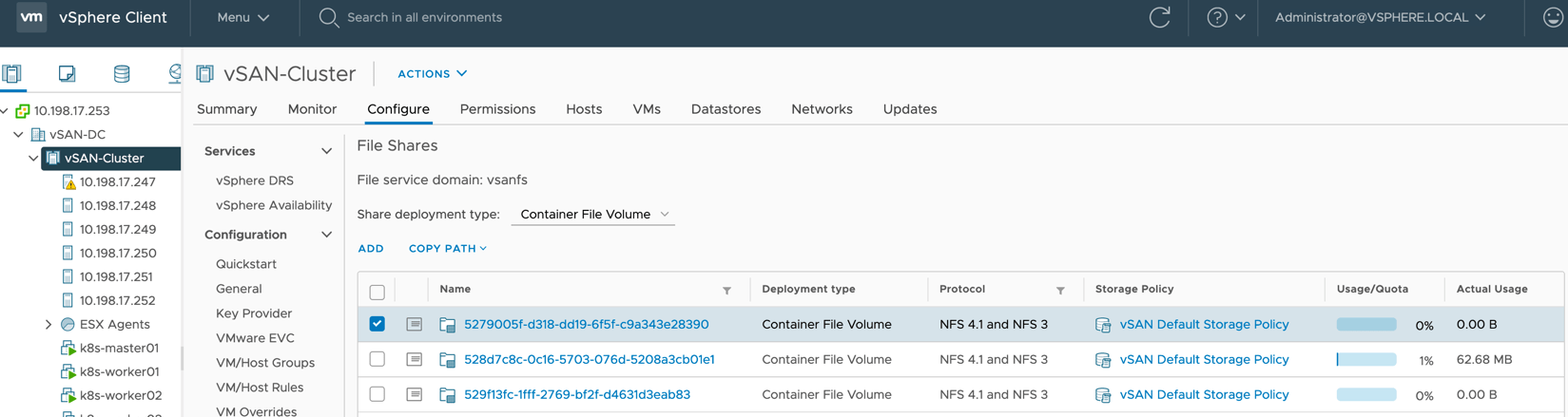
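Staying on the OpenShift side for a moment: the claim in the example uses the `ReadWriteMany` access mode, so more than one pod can mount the same file share at once. As a rough sketch, a second pod that only reads from the share could look like the manifest below. The pod name `example-vanilla-file-pod2` is a placeholder of my own choosing, and it assumes the `file-pvc-1` claim from earlier already exists.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: example-vanilla-file-pod2   # hypothetical name, not from the original examples
spec:
  containers:
    - name: test-container
      image: gcr.io/google_containers/busybox:1.24
      # Print whatever is in index.html on the shared volume every 2 seconds
      command: ["/bin/sh", "-c", "while true ; do cat /mnt/volume1/index.html ; sleep 2 ; done"]
      volumeMounts:
        - name: test-volume
          mountPath: /mnt/volume1
  restartPolicy: Never
  volumes:
    - name: test-volume
      persistentVolumeClaim:
        claimName: file-pvc-1   # same claim as the first pod
```

If both pods are running, the logs of this second pod should show the lines that other pods have written to the share.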

In vCenter, the first place to navigate to is vSphere Cluster > Monitor tab > Container Volumes.

In this view, we can see both block and file PVCs, noted by the little icons to the left of the names. You can see the matching PVC name from above.

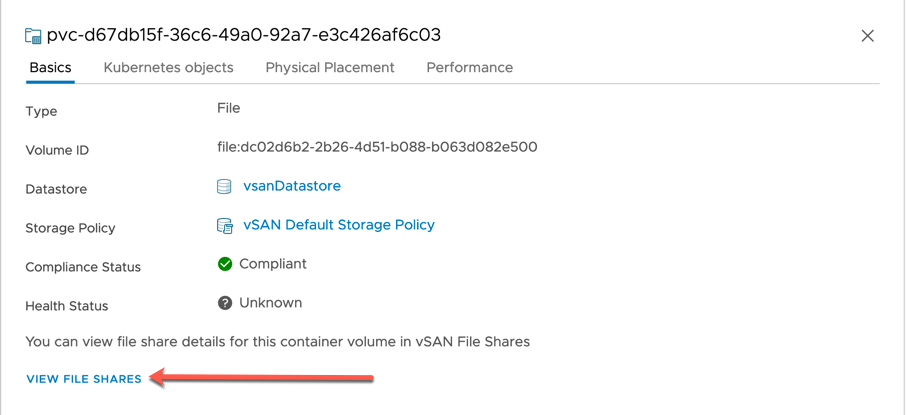

Clicking the page icon on the left-hand side brings up more information about the PVC.

- Basics Tab

  - Note the “view file shares” link; we’ll come back to that.

- Kubernetes Objects Tab

  - Here you can see I’ve attached the PVC to a pod called “file-pod-1”.

- Physical Placement Tab

  - If you’ve used vSAN before you’ll have seen this tab a lot, so I’ve skipped the screenshots.

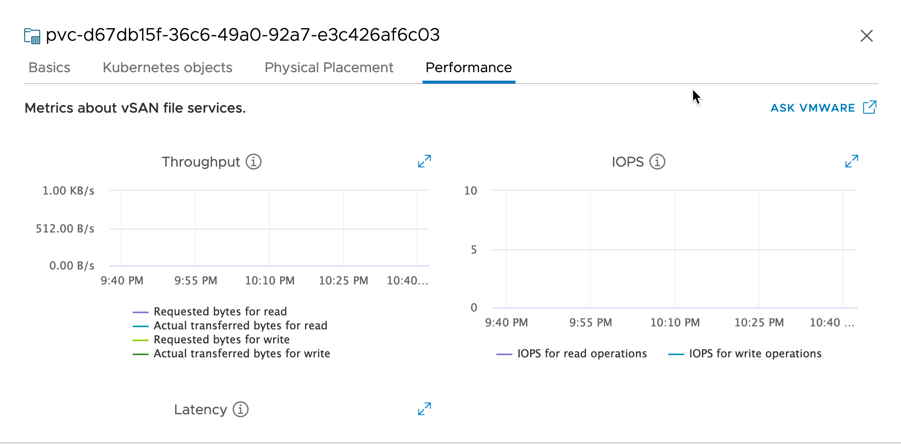

- Performance Tab

  - As I did this on the fly, no metrics are yet recorded.

OK, moving back to the “view file shares” link I pointed out earlier: clicking it takes you to the Configure > File Shares tab in vCenter and highlights the associated file share.

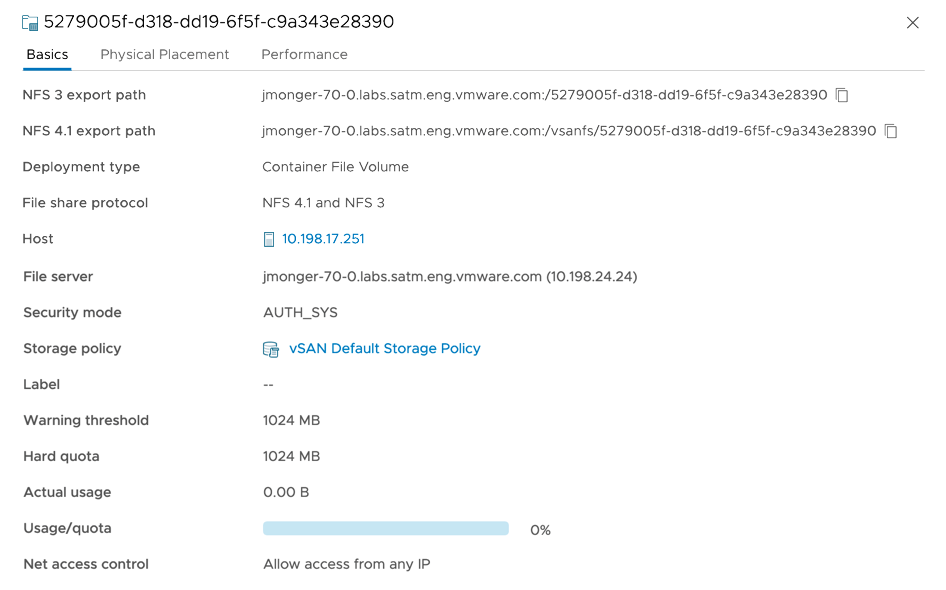

Clicking the little document icon will bring up more details, like the previous screenshots.

Below is the information for the configured file share, as per the defaults in the csi-vsphere-for-ocp.conf file.

So that is a wrap for today.

Regards