What is the vSphere Kubernetes Driver Operator (VDO)?

This Kubernetes Operator was designed and created as part of the VMware and IBM Joint Innovation Labs program, with the aim of simplifying the deployment and lifecycle of VMware storage and networking Kubernetes driver plugins on any Kubernetes platform, including Red Hat OpenShift. We also talked about it at VMworld 2021 in a joint session with IBM and Red Hat.

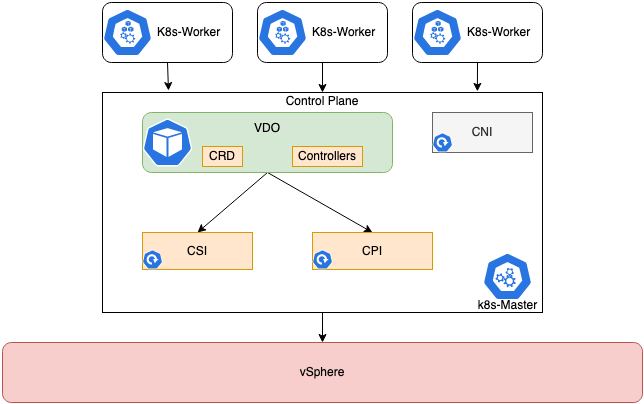

The vSphere Kubernetes Driver Operator (VDO) exposes custom resources to configure the CSI and CNS drivers and, through a Go-based CLI tool, adds validation and error checking as well, making the drivers simple to deploy and configure through the Kubernetes Operator.

The Kubernetes Operator currently covers the existing CPI and CSI drivers, which are separately maintained projects found on GitHub.

The operator will remain CNI agnostic, so CNI management is not included; Antrea, for example, already has its own operator.

Below is the high-level architecture; you can read a more detailed deep dive here.

Installation Methods

You have two main installation methods, which will also affect the pre-requisites below.

If you are using Red Hat OpenShift, you can install the Operator via Operator Hub, as this is a certified Red Hat Operator. You can also configure the CPI and CSI driver installations via the UI.

- Currently supported on OpenShift 4.9.

Alternatively, you can install manually and use the vdoctl CLI tool. This is also the route to take if you are using a vanilla Kubernetes installation.

This blog post will cover the UI method using Operator Hub.

Pre-requisites

Your Kubernetes and vSphere environment must meet the following requirements:

- vSphere 6.7 U3 (or later) is supported for VDO

- Virtual machine hardware version should be 15 (or later)

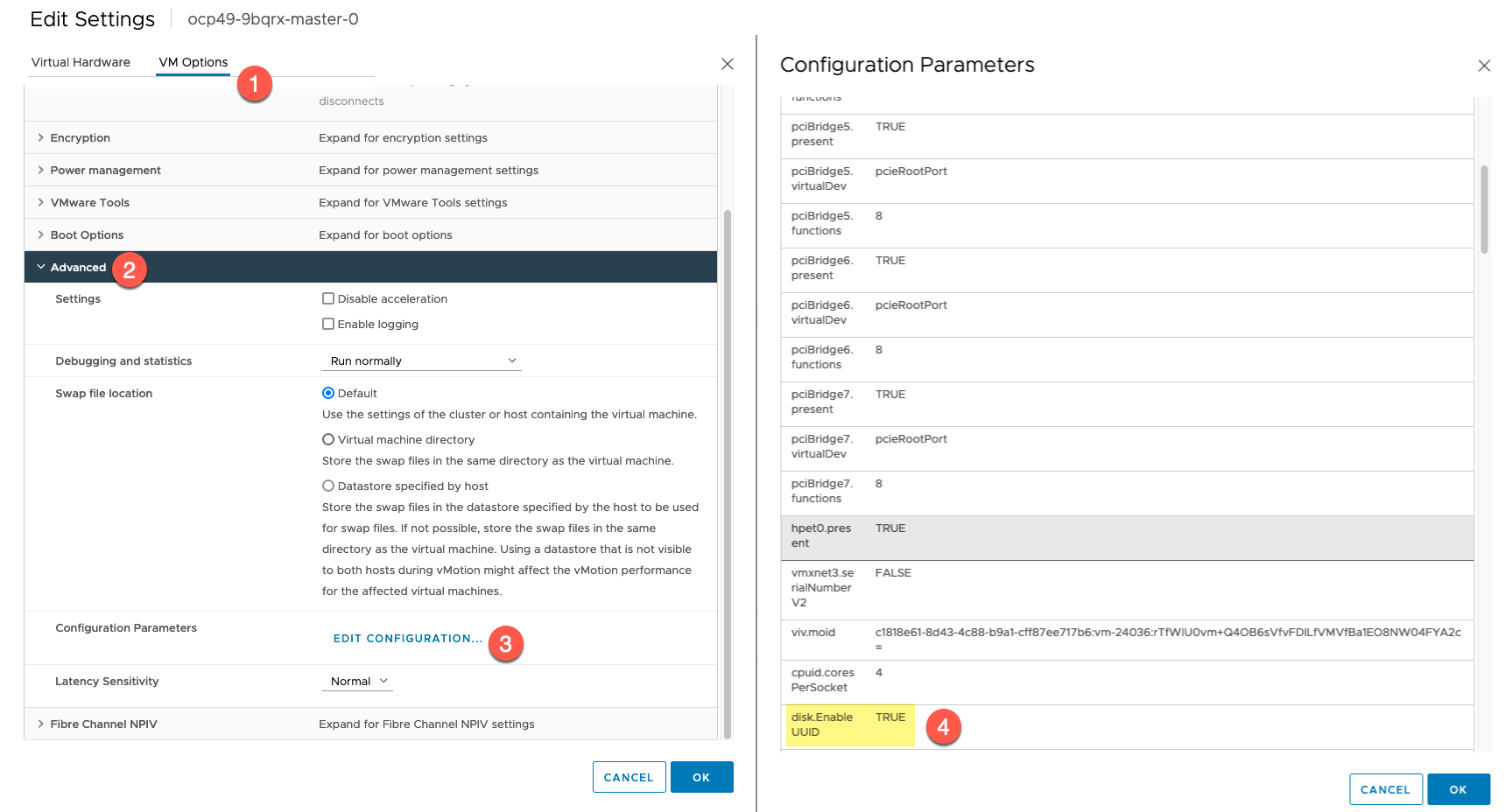

- Enable Disk UUID (disk.EnableUUID) on all node VMs

- Kubernetes master nodes must be able to communicate with the vCenter management interface

- Disable swap (swapoff -a) on all Kubernetes nodes at the guest operating system level
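The swap requirement is easy to verify on each node before you install anything. The following is a minimal sketch, not part of VDO: the function name `swap_is_off` is my own, and it assumes a Linux guest that exposes `/proc/swaps`.

```shell
# Succeeds (exit 0) when no swap area is active on this node.
# /proc/swaps always starts with a header line; every further non-empty
# line describes one active swap device or file.
# swap_is_off is an illustrative helper name, not a VDO command.
swap_is_off() {
  awk 'NR > 1 && NF { active = 1 } END { exit active }' "${1:-/proc/swaps}"
}

# Usage on a node:
#   swap_is_off && echo "swap is disabled" || echo "run: swapoff -a"
```

Keep in mind that swapoff -a only lasts until the next reboot unless the swap entry is also removed from /etc/fstab.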

If you are going to deploy this on a Vanilla Kubernetes instance or want to use the CLI tooling:

Clone the VDO GitHub Repo or download the files from the release page. This installation method will be covered separately in another blog post.

    git clone https://github.com/vmware-tanzu/vsphere-kubernetes-drivers-operator

Install Go, so that we can use the vdoctl command line tool.

- https://go.dev/doc/install

Installing and configuring the vSphere Kubernetes Driver Operator

Installation via Red Hat Operator Hub UI

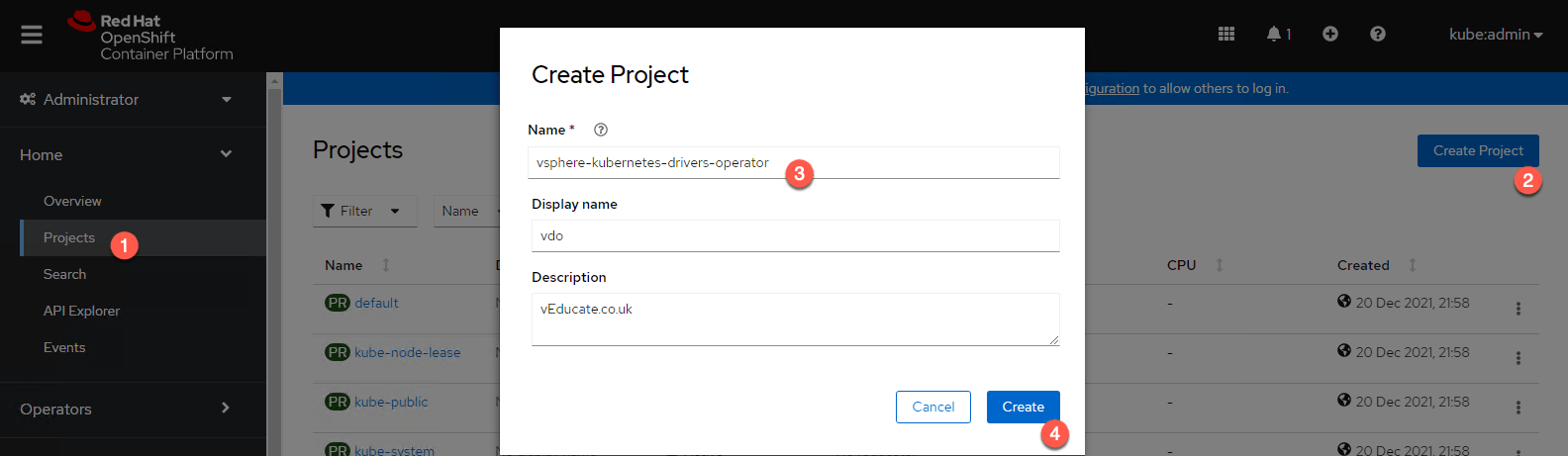

- Create a project to install the Operator into.

- In this example I have used “vsphere-kubernetes-drivers-operator”, which matches the SecurityContextConstraints example below

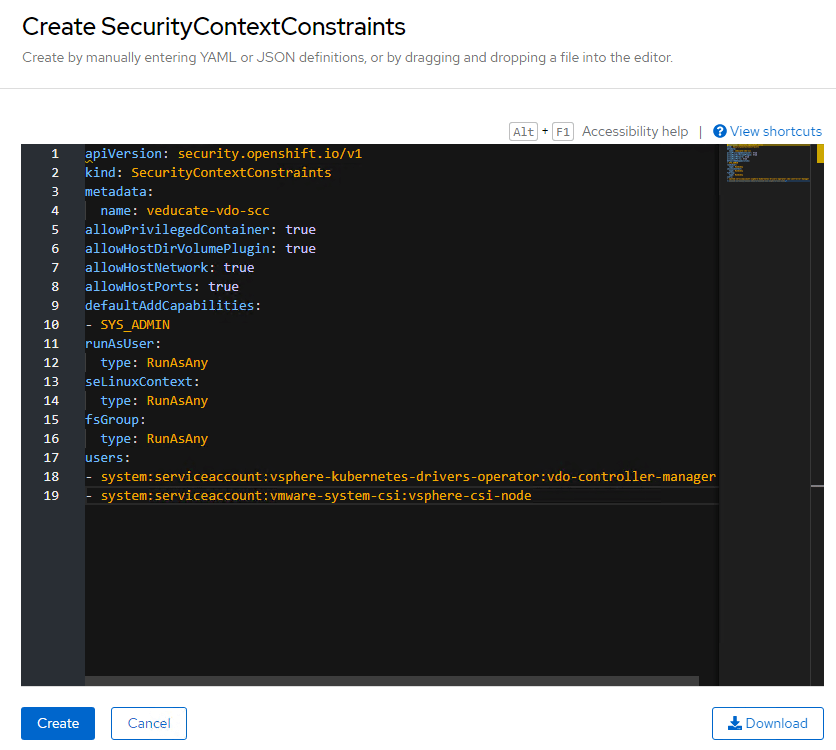

- Create the Security Context Constraint, so that the Service Account can access the relevant resources.

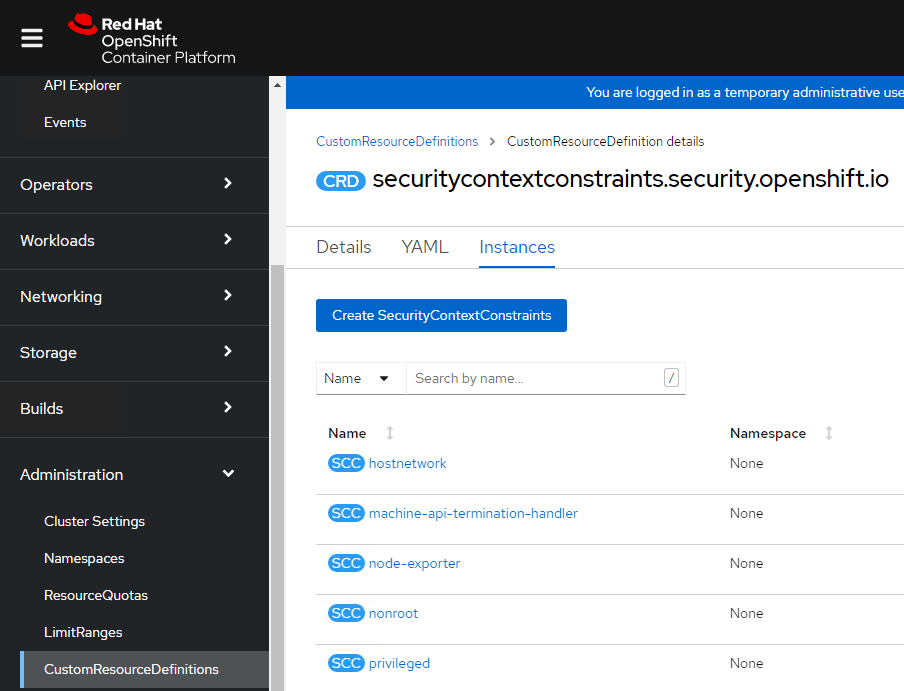

- Administration > Custom Resource Definitions > SecurityContextConstraints > Instance > Create

    # Example SCC (source and further details)
    # Used for CSI 2.3.0 and later. Ensure the namespace in the
    # vdo-controller-manager service account entry below matches the project you created earlier.
    apiVersion: security.openshift.io/v1
    kind: SecurityContextConstraints
    metadata:
      name: example
    allowPrivilegedContainer: true
    allowHostDirVolumePlugin: true
    allowHostNetwork: true
    allowHostPorts: true
    defaultAddCapabilities:
    - SYS_ADMIN
    runAsUser:
      type: RunAsAny
    seLinuxContext:
      type: RunAsAny
    fsGroup:
      type: RunAsAny
    users:
    - system:serviceaccount:vsphere-kubernetes-drivers-operator:vdo-controller-manager
    - system:serviceaccount:vmware-system-csi:vsphere-csi-node

Now we will install the Operator.

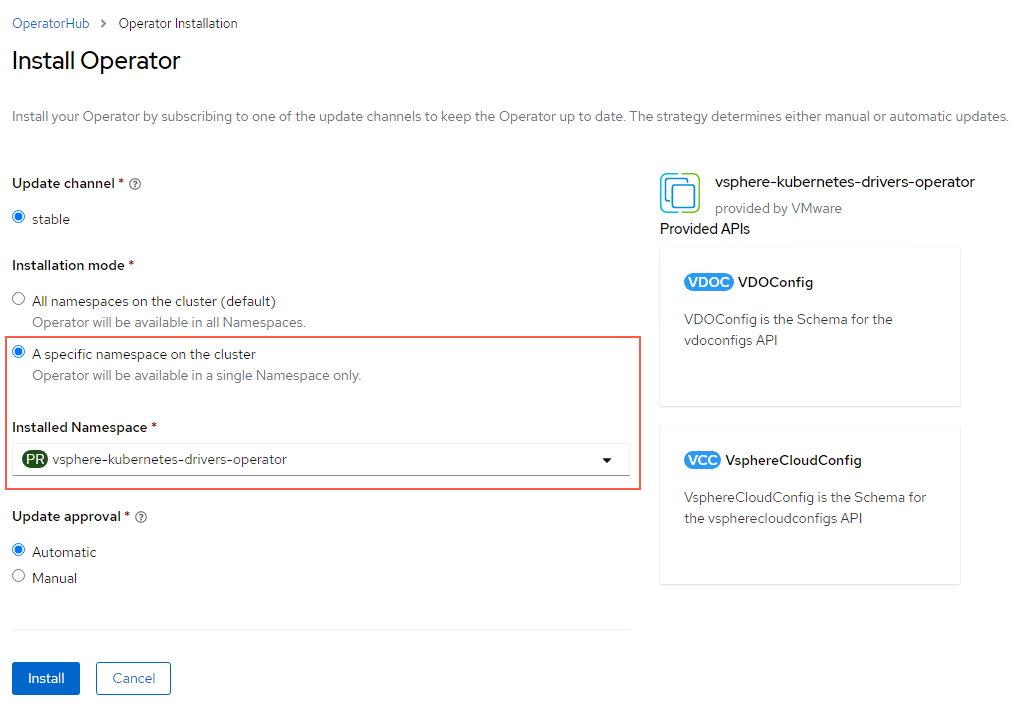

- Go to OperatorHub and search for “vsphere-kubernetes-driver-operator”

- Click the Operator to install it

- Ensure it is installed to the namespace you have created, where the SCC is linked.

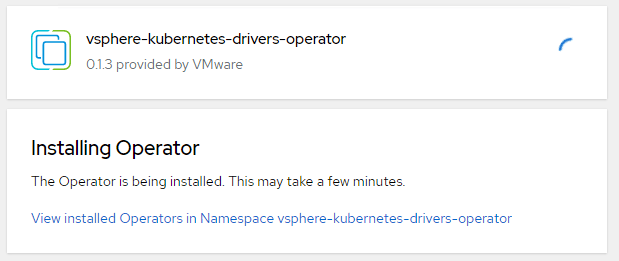

Note: I recommend installing or running Operator version 0.1.5 or higher. Some of the images in this blog post show 0.1.3; a number of enhancements were introduced after that release, and I upgraded to 0.1.5 part way through (see the section on performing upgrades below).

Once completed, click to view the Operator, and we will continue to configure the drivers.

Configuring the CPI and CSI via the Operator in the OpenShift Cluster UI

You will need the following pieces of information:

- IP address or FQDN of vCenter

- If using a secure connection to the vCenter, you will need to provide the SSL thumbprint

- Credentials for the vCenter with the appropriate permissions

- Datacenter name(s) within the vCenter. These are required by the CPI and CSI to manage the cluster
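If you plan to use a secure connection, the thumbprint can be read from the certificate that vCenter presents. This is a minimal sketch using openssl, which this post does not otherwise rely on. The helper names and the host vcenter.example.com are placeholders, and I am assuming the SHA-1 fingerprint is the format your configuration expects, so compare the result with the value shown by vCenter itself.

```shell
# Print the SHA-1 fingerprint (colon-separated hex) of a PEM certificate
# read from stdin. Helper names here are illustrative only.
cert_thumbprint() {
  openssl x509 -noout -fingerprint -sha1 | cut -d= -f2
}

# Fetch the certificate a host presents on port 443 and print its thumbprint.
vcenter_thumbprint() {
  openssl s_client -connect "$1:443" </dev/null 2>/dev/null | cert_thumbprint
}

# Usage (vcenter.example.com is a placeholder):
#   vcenter_thumbprint vcenter.example.com
```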

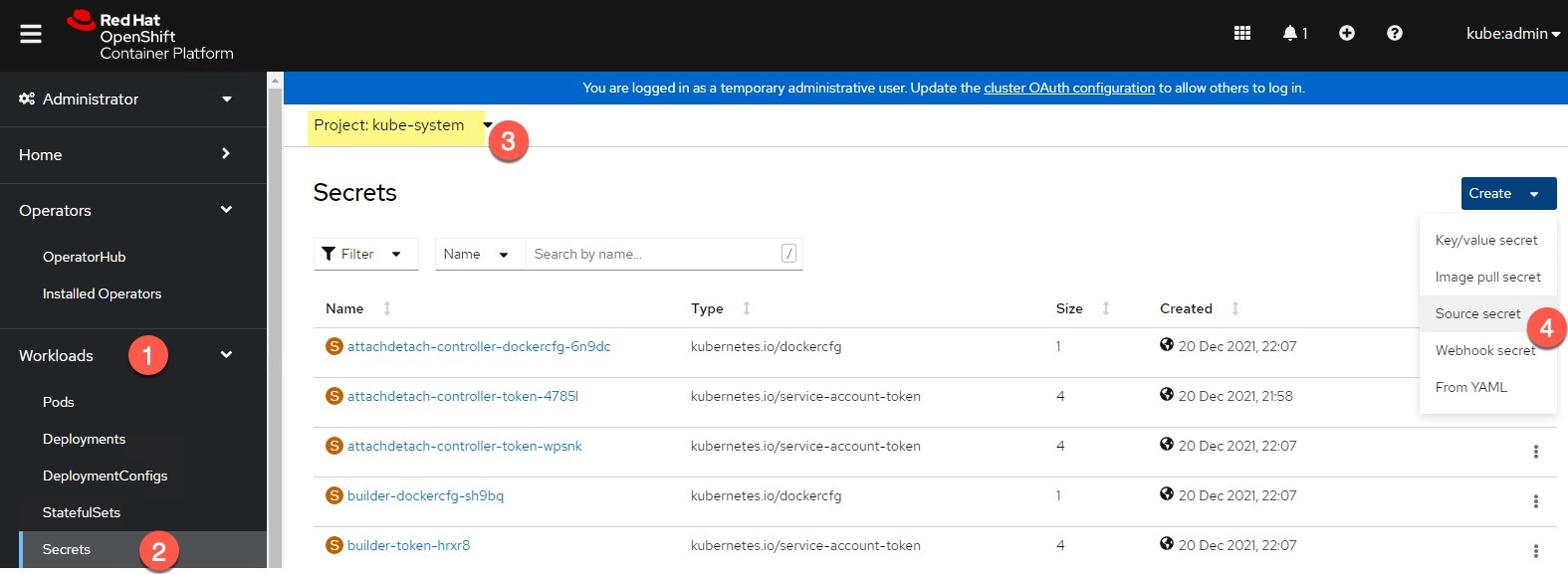

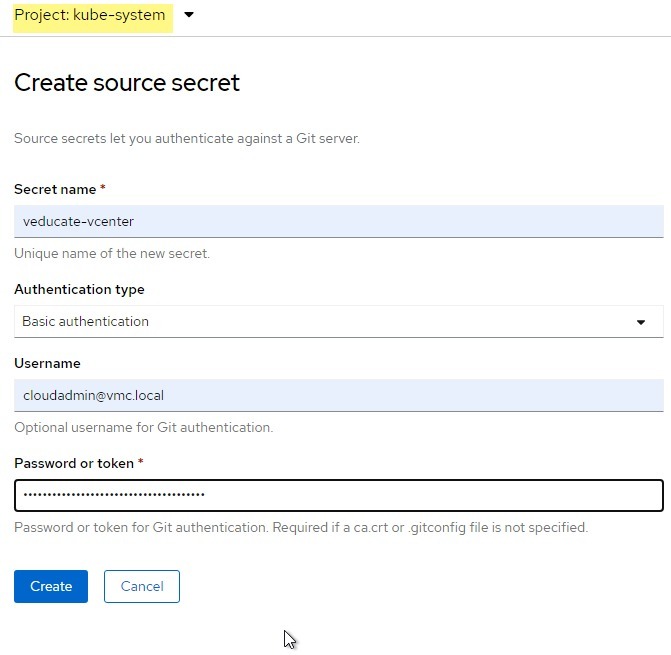

We now need to create a source secret to hold our credentials for our vCenter.

- In the OpenShift Cluster UI > Workloads > Secrets

- Ensure you are in the “kube-system” namespace

- Create > Source Secret

- Provide a name for the secret

- Authentication type should be set to “basic”

- Provide the username and password and click create
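The same secret can also be created from the command line. The sketch below assumes that the console's basic authentication source secret corresponds to a Secret of type kubernetes.io/basic-auth with username and password keys. The helper names and the secret name vcenter-creds are hypothetical, so adjust them to your environment.

```shell
# Escape a value for use inside a YAML single-quoted string ('' stands for ').
yaml_squote() {
  printf '%s' "$1" | sed "s/'/''/g"
}

# Print a basic-auth Secret manifest for the kube-system namespace.
# Arguments: secret name, vCenter username, vCenter password.
# stringData lets the API server handle the base64 encoding.
vcenter_secret_manifest() {
  cat <<EOF
apiVersion: v1
kind: Secret
metadata:
  name: $1
  namespace: kube-system
type: kubernetes.io/basic-auth
stringData:
  username: '$(yaml_squote "$2")'
  password: '$(yaml_squote "$3")'
EOF
}

# Usage (all values are placeholders):
#   vcenter_secret_manifest vcenter-creds 'administrator@vsphere.local' 'changeme' | oc apply -f -
```

Printing the manifest first, rather than applying it directly, lets you review exactly what will be created before it reaches the cluster.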

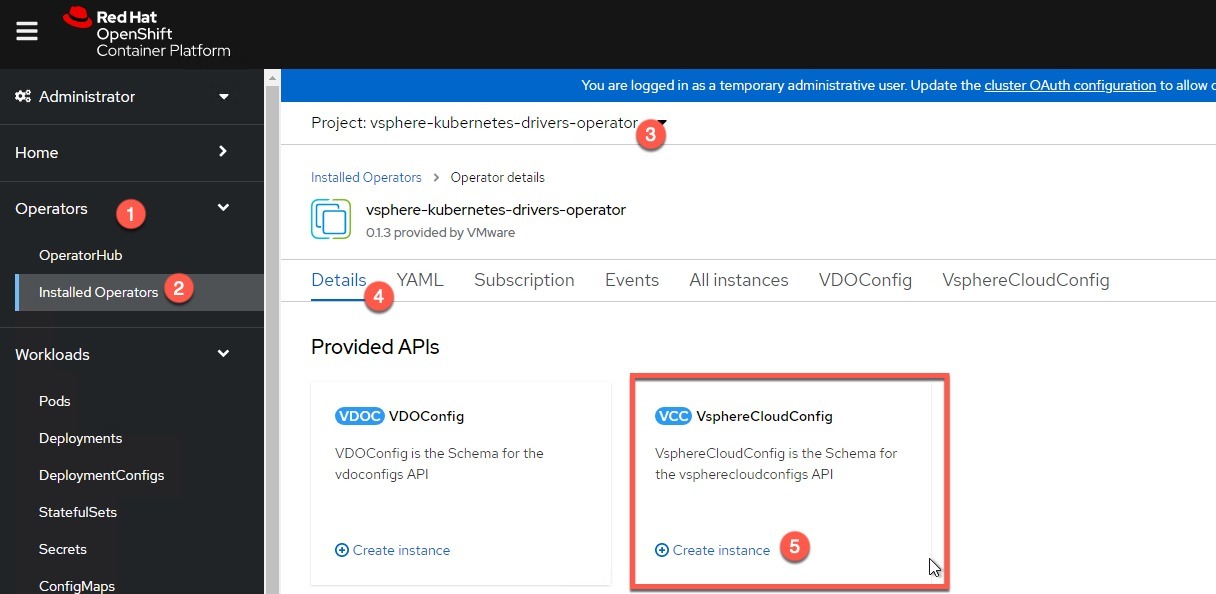

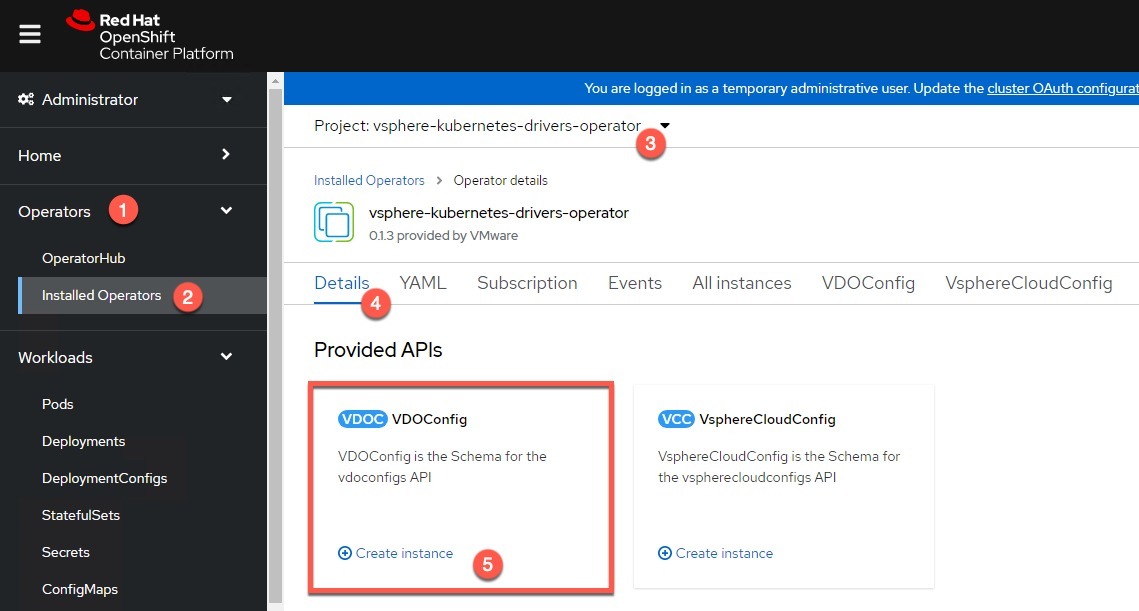

Go back to the Installed Operators page, switch to the namespace where you installed the operator, and select the operator.

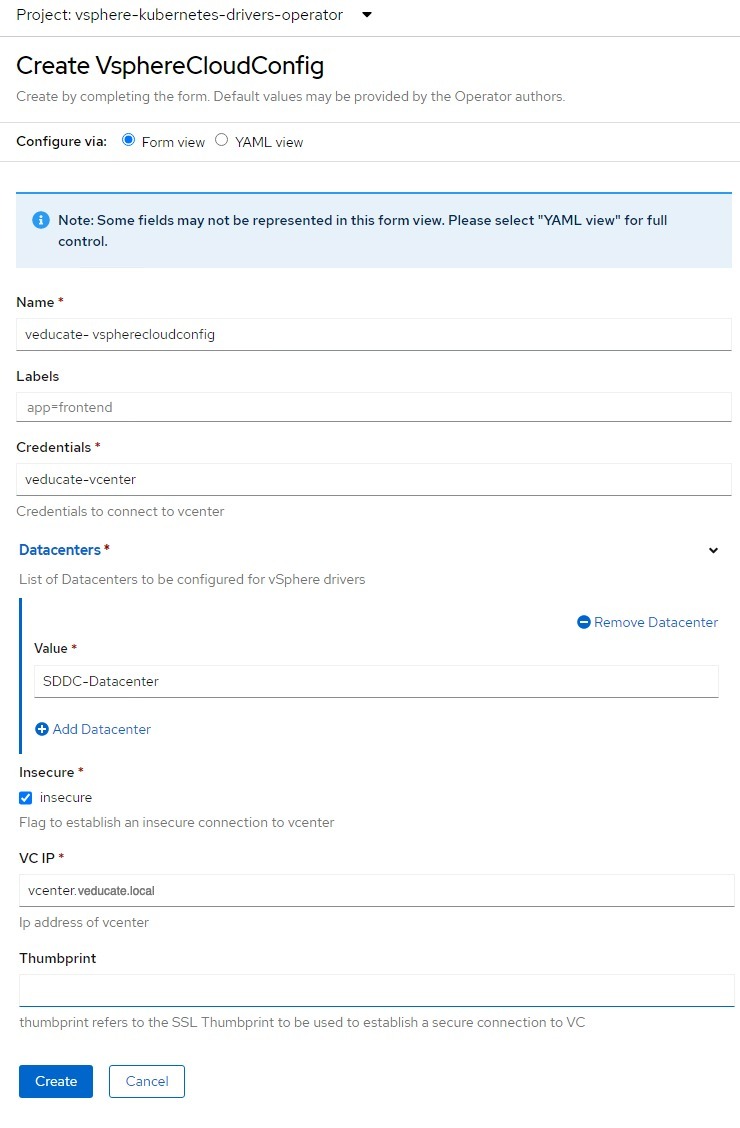

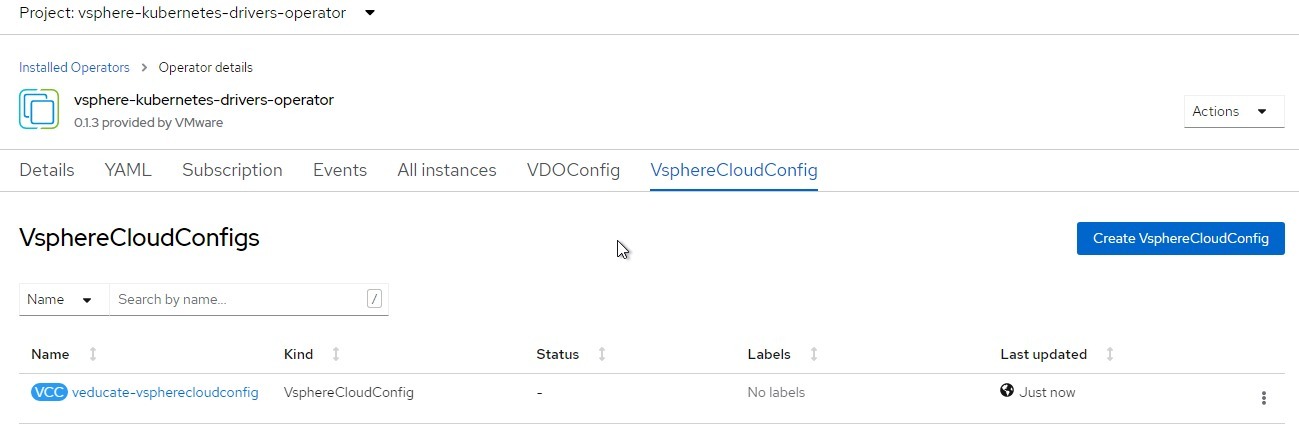

First we will create the vSphere Cloud Config, which is the connection and credential data for our vCenter.

Click Create Instance under “VsphereCloudConfig”

- Provide a name for the configuration and any labels as necessary.

- Credentials – provide the name of the source secret created earlier in the kube-system namespace

- Provide your datacenter names

- Select Insecure configuration if necessary

- If Insecure is unticked, you need to provide the thumbprint of the vCenter SSL certificate

- VC IP – you can provide either an IP address or an FQDN here

Click Create.

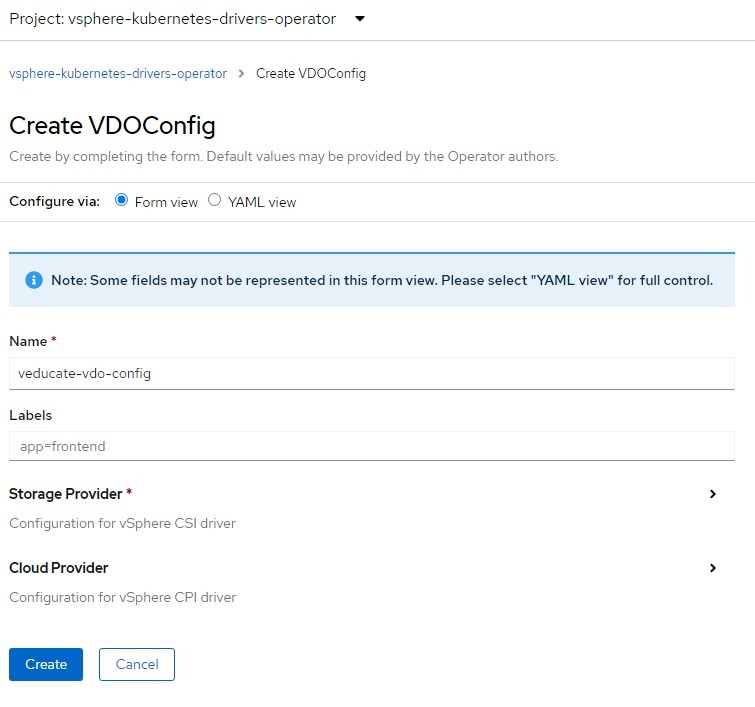

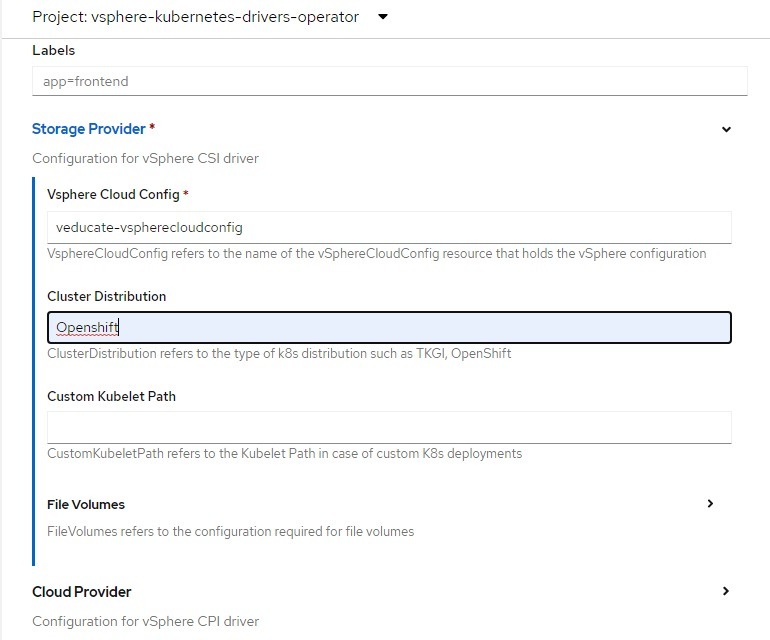

We will now configure the VDOConfig, which will control which drivers are deployed and the credentials to use.

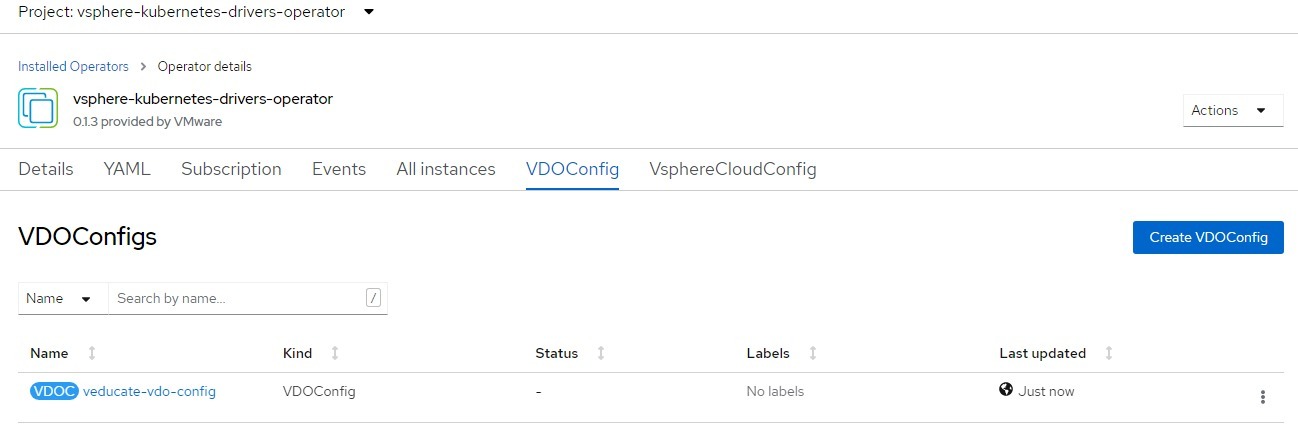

Click to create a VDOConfig instance.

- Provide a name for the configuration and any labels as necessary.

- Then open the Storage Provider heading.

- Provide the vSphere Cloud Config instance name we have just created

- Provide the ClusterDistribution name – for this blog it will of course be OpenShift

- Remember that VDO is also available for any vanilla Kubernetes setup

- Provide a custom kubelet path if applicable

- Provide configuration for vSAN File Services volume access if applicable

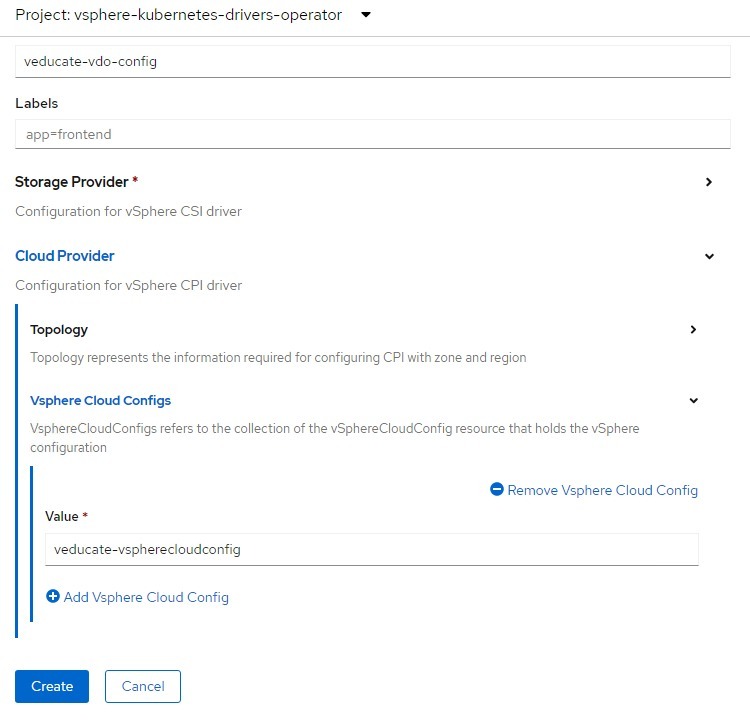

Now open the Cloud Provider configuration. If you have already installed the cloud provider in your environment, you do not need to configure this section. However, the Cloud Provider must be installed when using the vSphere CSI Driver, so if you don’t have it installed already, install it as part of this configuration.

- Provide any topology information if applicable. You can read more about deploying the vSphere CSI and CPI in a topology aware mode here.

- Provide the name for the vSphere Cloud Config

- Click Create

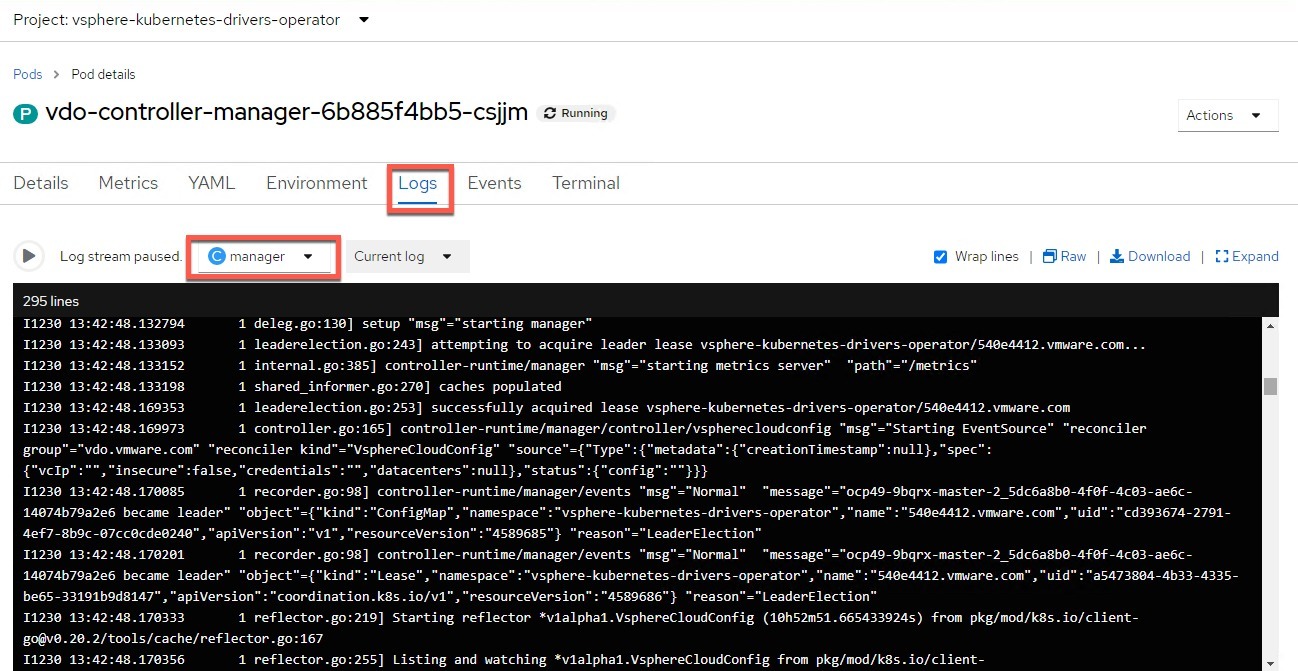

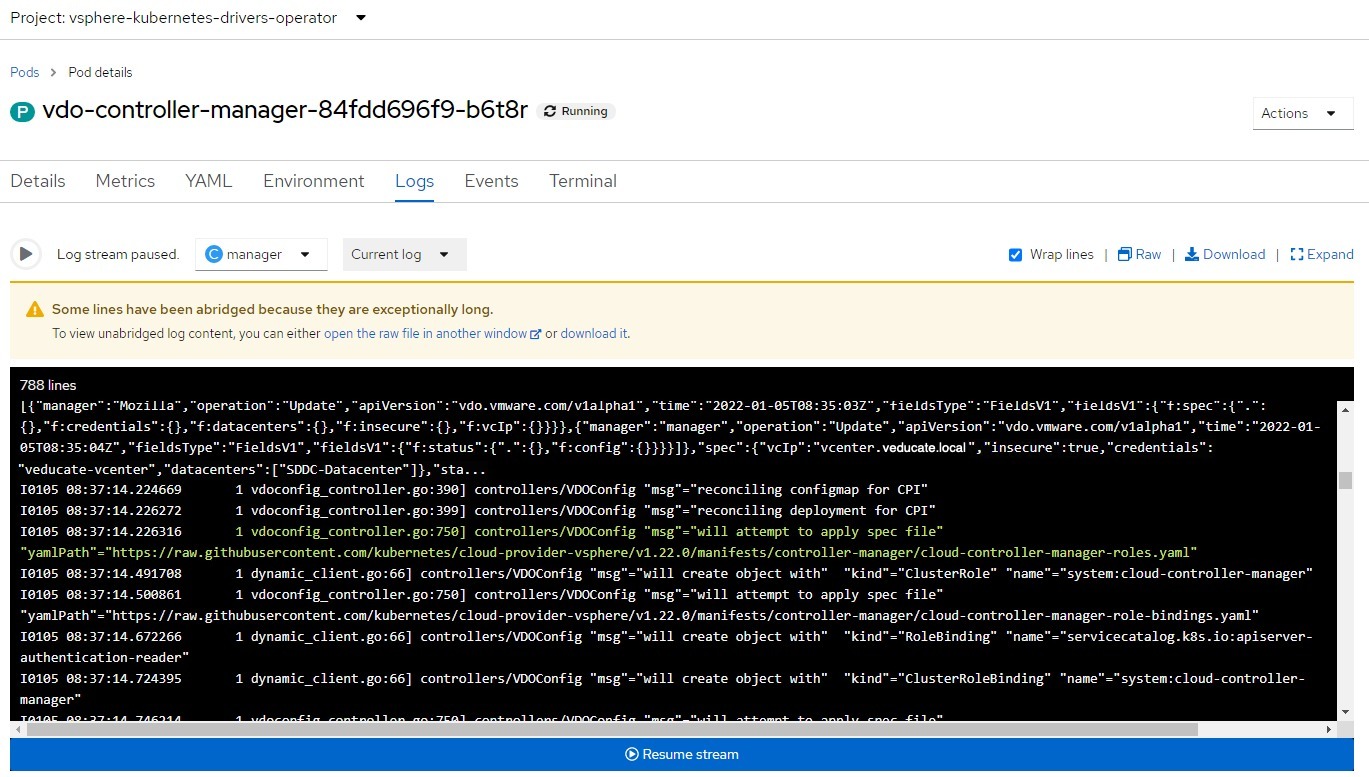

You can monitor the Operator performing its actions by going to the “vdo-controller-manager” pod, and viewing the logs from the “manager” container.

Below you can see the reconciler has picked up the configuration instances and is now attempting to install the CPI. You can follow the logs for the full status.

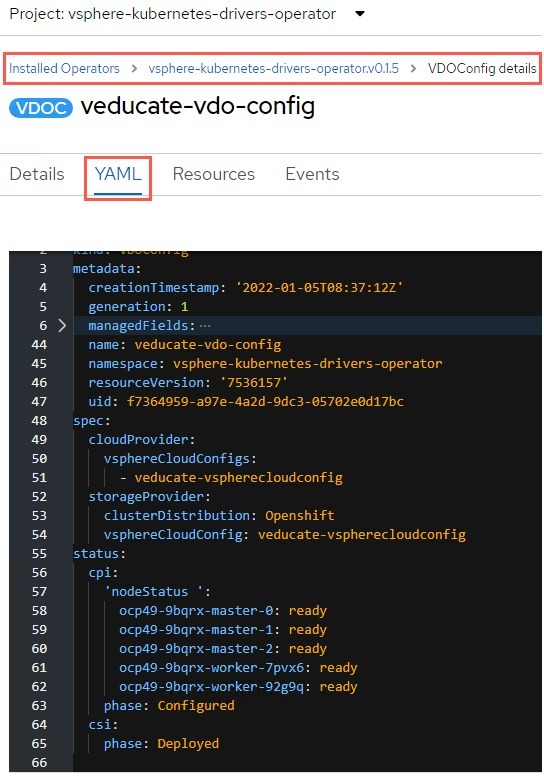

Another option is to view the VDOConfig instance in the Operator to see the status.

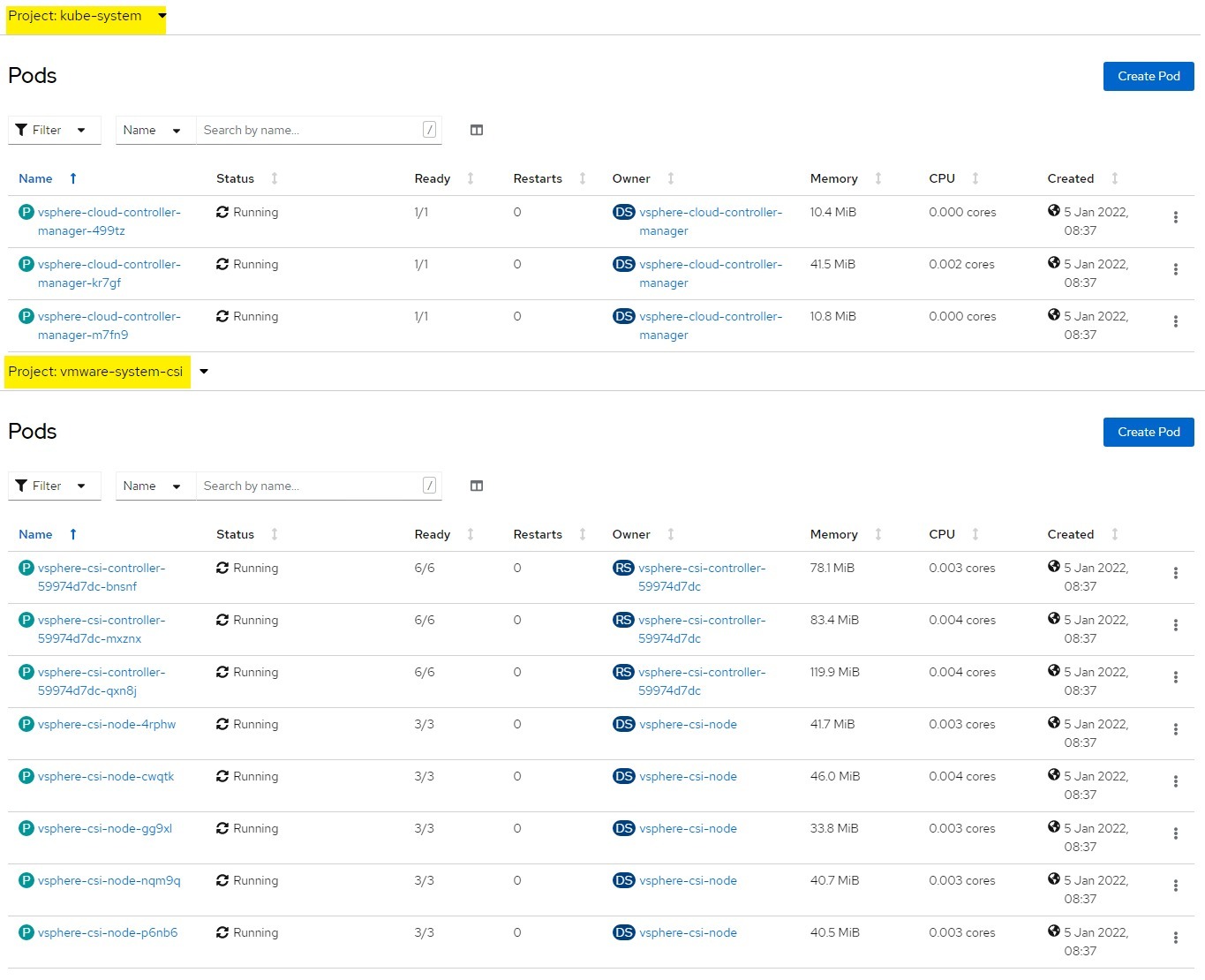

Once completed, you can check the status of the Pods for the configured CPI and CSI via the UI in the highlighted projects (kube-system for CPI, vmware-system-csi for CSI), or by running the following commands:

    # CPI
    oc get pods -n kube-system -l k8s-app=vsphere-cloud-controller-manager
    # CSI
    oc get pods -n vmware-system-csi

Testing and validating the installation

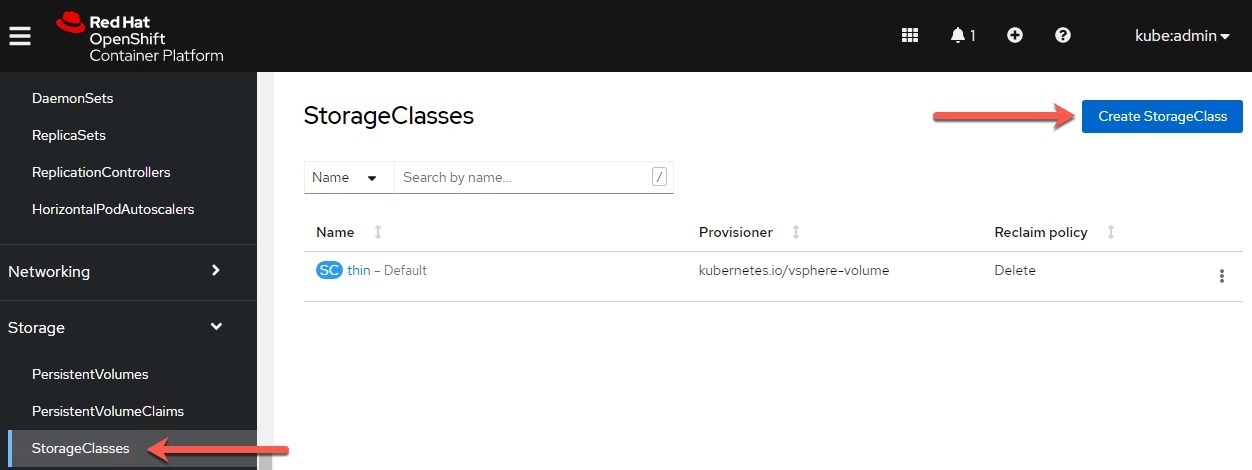

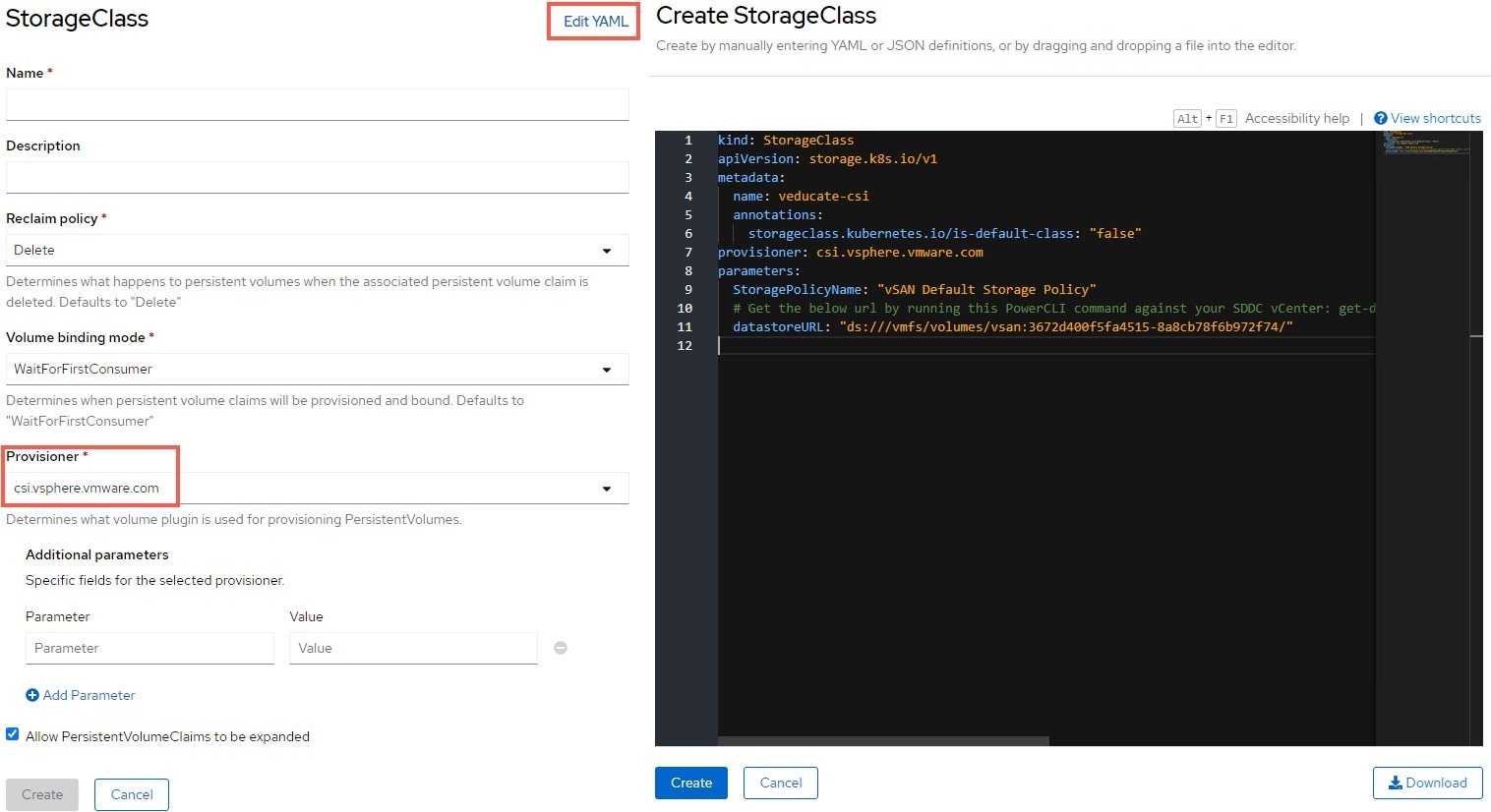

To test the installation, we will configure a StorageClass and then a Persistent Volume Claim.

- Go to StorageClasses under Storage

- Click Create StorageClass

Either fill in the form, selecting “csi.vsphere.vmware.com” as the provisioner, or use the “Edit YAML” view and paste in your configuration, such as the example below.

    kind: StorageClass
    apiVersion: storage.k8s.io/v1
    metadata:
      name: csi-sc-vmc
      annotations:
        storageclass.kubernetes.io/is-default-class: "false"
    provisioner: csi.vsphere.vmware.com
    parameters:
      StoragePolicyName: "vSAN Default Storage Policy"
      datastoreURL: "ds:///vmfs/volumes/vsan:3672d400f5fa4515-8a8cb78f6b972f74/"

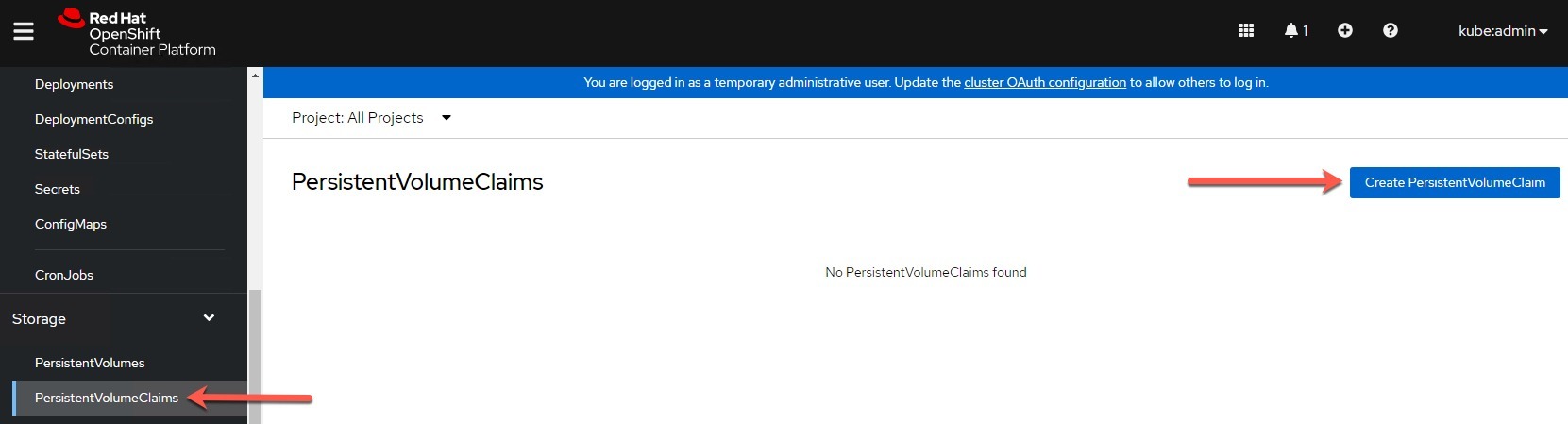

To create a Persistent Volume Claim (PVC):

- Under Storage navigation heading select PersistentVolumeClaims

- Click Create PersistentVolumeClaim

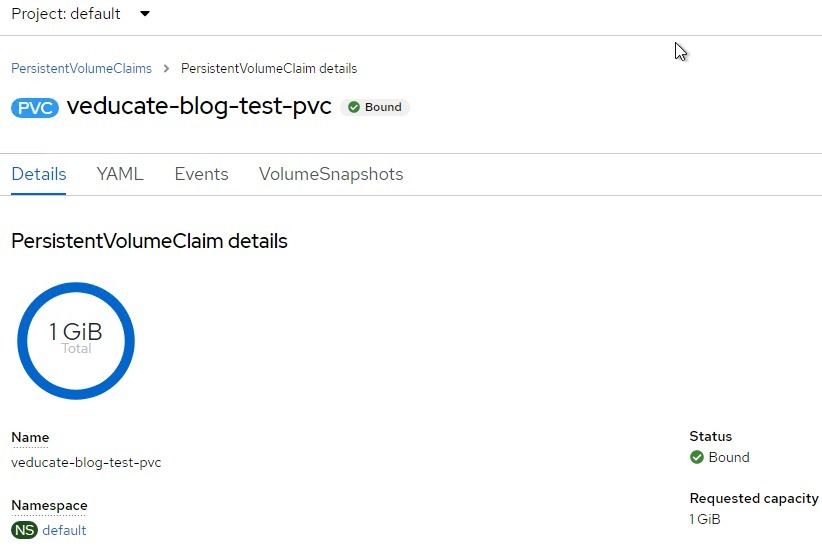

Provide the necessary details, such as selecting your Storage Class and the correct Volume mode. Again, you can use the “Edit YAML” option and provide the configuration, such as the example below. The example references a storage class named veducate-csi; change this to the name of the StorageClass you created.

    kind: PersistentVolumeClaim
    apiVersion: v1
    metadata:
      name: veducate-blog-test-pvc
      labels:
        name: veducate-blog-test-pvc
      annotations:
        volume.beta.kubernetes.io/storage-class: veducate-csi
    spec:
      accessModes:
        - ReadWriteOnce
      resources:
        requests:
          storage: 1Gi

Once the claim is created, we are looking for a status of Bound.
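If you prefer to check this from a terminal, the claim's phase can be polled until it reports Bound. This is a minimal sketch: `wait_for_bound` and the `POLL_SECONDS` variable are my own helper names, not part of oc or VDO, and the claim name is the one from the example above.

```shell
# Run the given command repeatedly until it prints exactly "Bound".
# Arguments: number of attempts, then the command to poll.
# POLL_SECONDS (default 5) sets the pause between attempts.
# wait_for_bound is an illustrative helper, not part of oc or VDO.
wait_for_bound() {
  attempts="$1"
  shift
  while [ "$attempts" -gt 0 ]; do
    if [ "$("$@" 2>/dev/null)" = "Bound" ]; then
      return 0
    fi
    attempts=$((attempts - 1))
    sleep "${POLL_SECONDS:-5}"
  done
  return 1
}

# Usage: poll the example claim for up to two minutes.
#   wait_for_bound 24 oc get pvc veducate-blog-test-pvc -o jsonpath='{.status.phase}'
```

If the claim stays in Pending, the events on the claim and the logs of the CSI pods in vmware-system-csi are the first places to look.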

Performing upgrades of the Operator and deployed CPI/CSI

The operator follows the upgrade option you chose during install: it is upgraded either automatically when a new version is released, or manually. You can read more about this behaviour on the Red Hat OpenShift Documentation site.

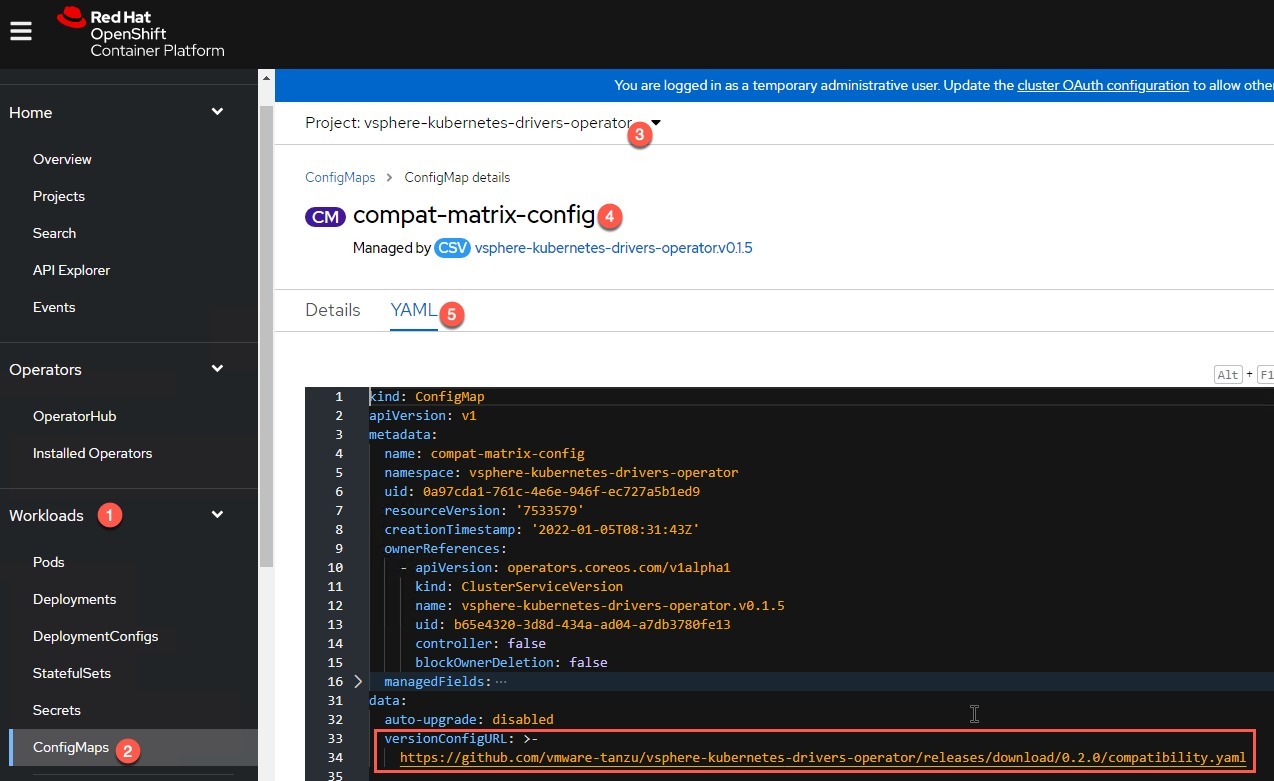

The CPI and CSI will be installed and aligned to the compatibility matrix in use. You can check which file is in use by going to:

- Workloads > Config Maps > Ensure you are in the vSphere Kubernetes Driver Operator namespace > compat-matrix-config

Drivers can be updated by updating the compatibility matrix using the following command:

    vdoctl update compatibility-matrix <path-to-updated-compat-matrix>
    # Existing pods of CloudProvider and StorageProvider are terminated and new pods are spawned according to the compatible versions of CSI and CPI
    # You can either provide your own file, such as one which is edited with the locations of your own modified vSphere CSI deployment files, or a later file from the GitHub Repo releases page.

Summary

This new operator does what it sets out to achieve: simplifying the deployment, configuration and lifecycle of the vSphere Kubernetes Drivers. And for Red Hat OpenShift customers, it’s fully supported and certified.

The VMware team has made this new “Master Operator of the Drivers” both simple and flexible to consume.

Regards