This blog post covers how to delegate DNS control for a subdomain from Cloudflare to AWS Route53. This lets you host publicly resolvable records in Route53 for services deployed into AWS, even though your primary domain is held by another provider (Cloudflare).

In my working example, I was creating an OpenShift cluster in AWS using the IPI (installer-provisioned infrastructure) installation method. With this method, the installer creates any necessary records in AWS Route53 on your behalf. I couldn’t rehost my full domain in Route53, so I decided to delegate just the subdomain.

- You will need access to your Cloudflare console and AWS console.

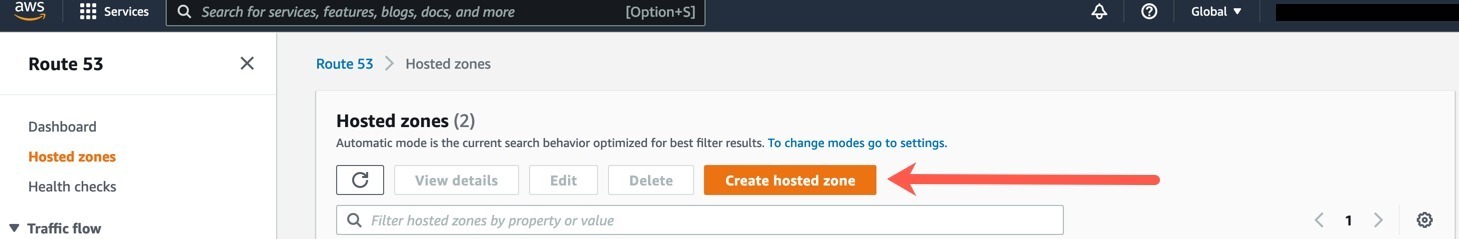

Open your AWS Console, go to Route53, and create a hosted zone.

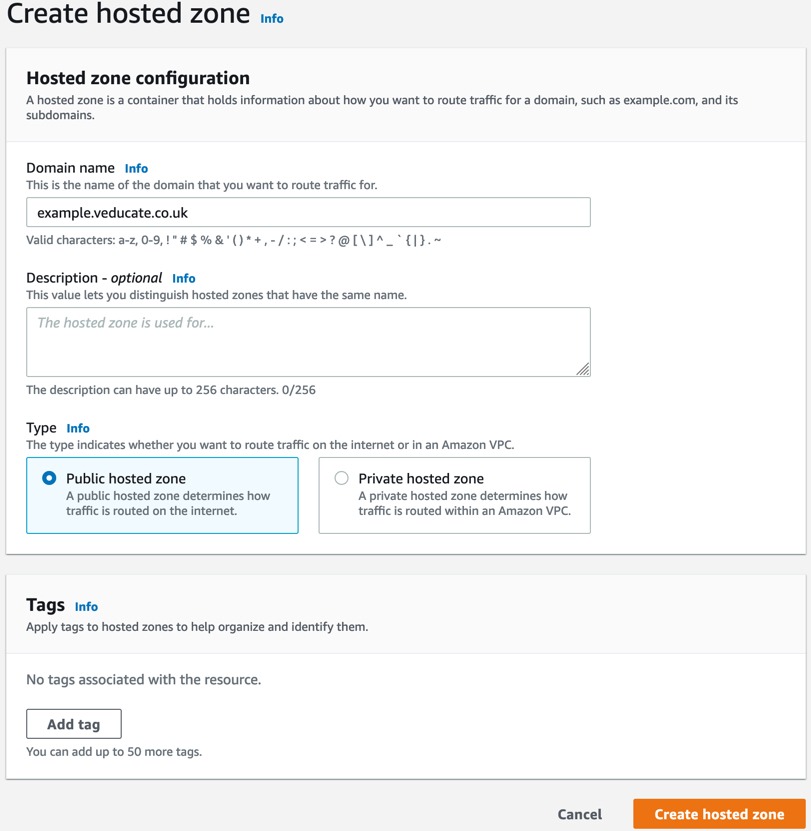

Configure a domain name. This will be along the lines of `{subdomain}.{primarydomain}`. For example, my primary domain is veducate.co.uk, and the subdomain I want AWS to manage is example.veducate.co.uk.

I’ve selected the Public type for the hosted zone, so that the records I create can be resolved publicly.
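If you would rather script this step than use the console, here is a minimal sketch in Python. It assumes boto3 (the AWS SDK for Python) is installed and AWS credentials are configured. The helper name `hosted_zone_request` is hypothetical. It only builds the keyword arguments for the boto3 `route53` client's `create_hosted_zone` call, so you can inspect them before sending anything to AWS.

```python
import uuid


def hosted_zone_request(subdomain: str, primary_domain: str, comment: str = "") -> dict:
    """Build keyword arguments for boto3's Route53 create_hosted_zone call (hypothetical helper)."""
    # e.g. "example" + "veducate.co.uk" -> "example.veducate.co.uk"
    name = f"{subdomain}.{primary_domain}".lower().rstrip(".")
    return {
        "Name": name,
        # Route53 requires a unique CallerReference per request; a random UUID satisfies that.
        "CallerReference": str(uuid.uuid4()),
        # PrivateZone=False requests a public hosted zone, matching the console choice above.
        "HostedZoneConfig": {"Comment": comment, "PrivateZone": False},
    }


# Usage (assumes boto3 is installed and AWS credentials are configured):
#   import boto3
#   request = hosted_zone_request("example", "veducate.co.uk")
#   response = boto3.client("route53").create_hosted_zone(**request)
```

The `create_hosted_zone` response also includes the name servers Route53 assigned to the new zone.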

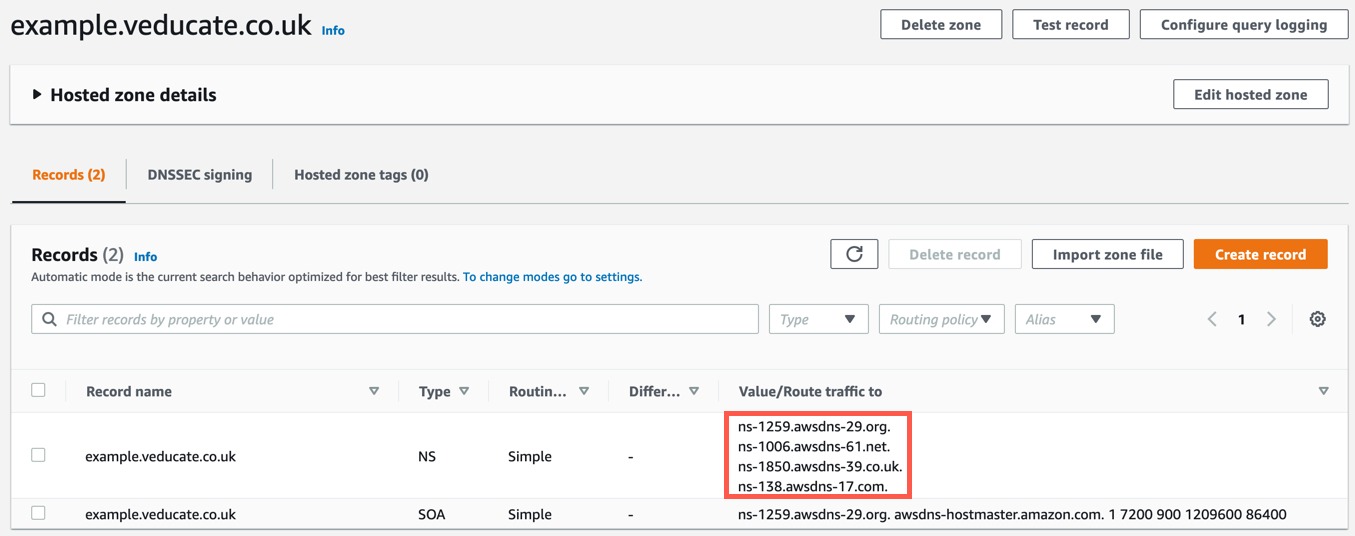

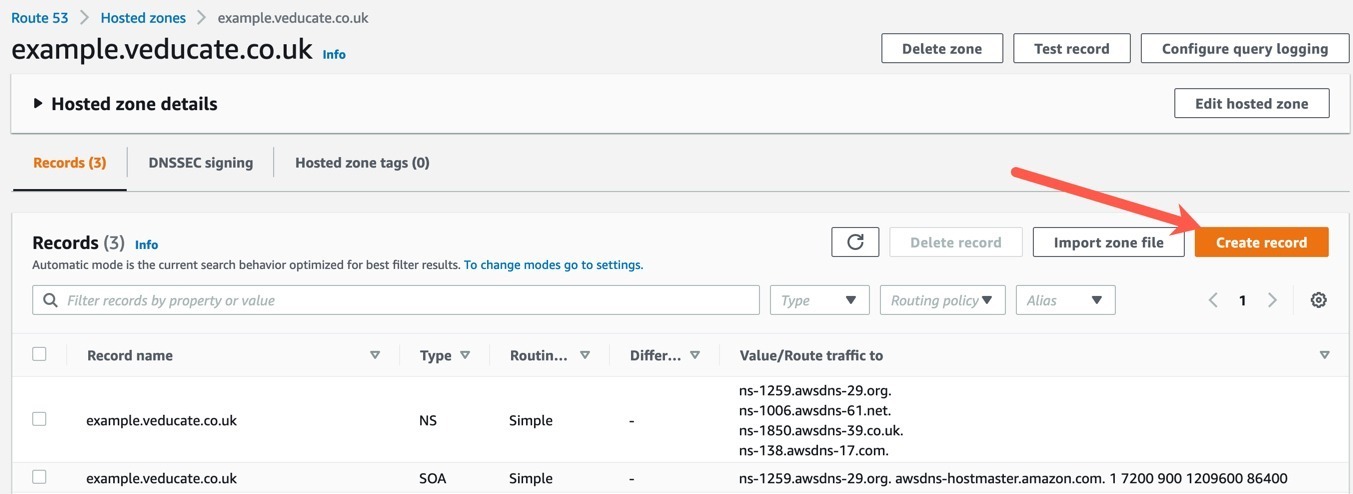

Now that my zone is created, Route53 has assigned four name servers to host it (red box). Take a copy of these.
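You can also read the name servers programmatically. This minimal sketch works on the response dictionary returned by boto3's `create_hosted_zone` or `get_hosted_zone`. Both responses include a `DelegationSet` containing a `NameServers` list. The function name `delegation_name_servers` and the `ZONE_ID` placeholder are hypothetical.

```python
from __future__ import annotations


def delegation_name_servers(response: dict) -> list[str]:
    """Return the Route53-assigned name servers from a hosted zone response (hypothetical helper)."""
    servers = response.get("DelegationSet", {}).get("NameServers", [])
    # Strip any trailing dot and sort, so the list is stable and ready to paste elsewhere.
    return sorted(server.rstrip(".") for server in servers)


# Usage (assumes boto3 and AWS credentials; ZONE_ID is a placeholder for your hosted zone ID):
#   import boto3
#   zone = boto3.client("route53").get_hosted_zone(Id="ZONE_ID")
#   for ns in delegation_name_servers(zone):
#       print(ns)
```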

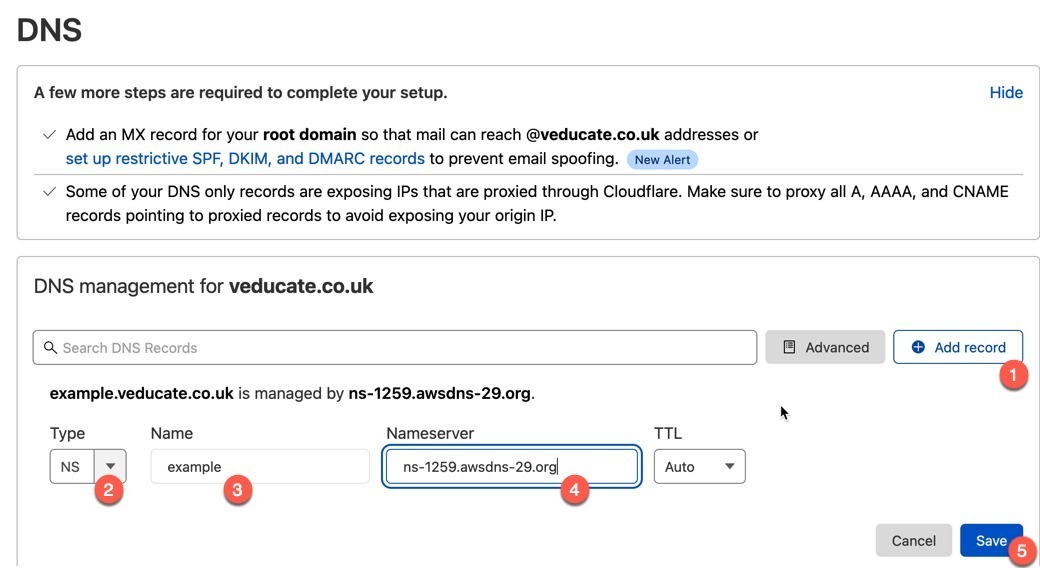

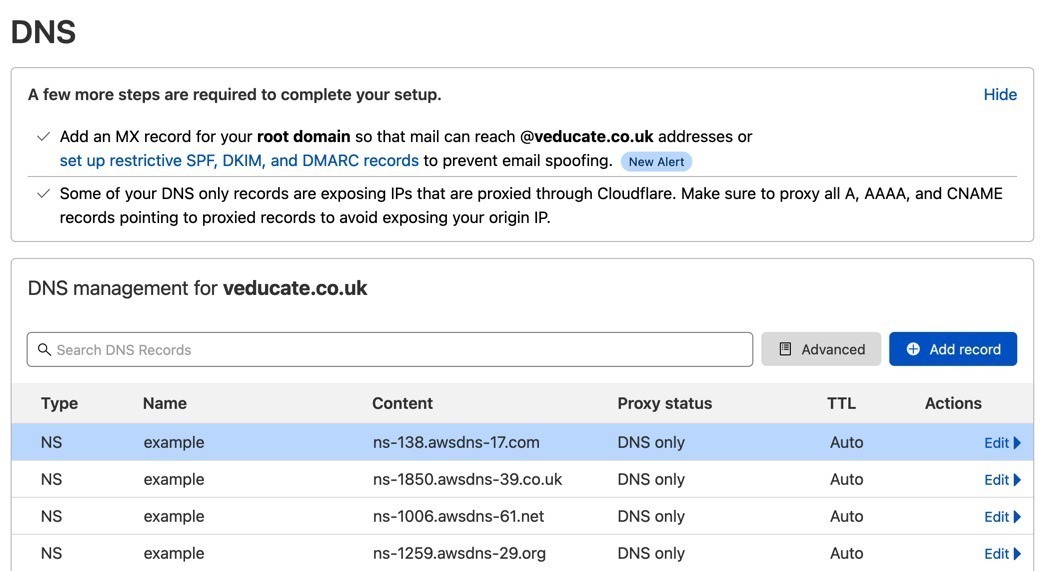

In your DNS provider (Cloudflare, in this example), create a record of type NS (Name Server). Set the record name to the subdomain (for example, `example`), and set the Name Server value to one of the four name servers provided by the Route53 hosted zone.

Repeat this for each of the four servers.

The screenshot shows the NS records I’ve created, one for each of the four AWS Route53 name servers.
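You can also create the same NS records through Cloudflare's v4 API instead of the dashboard. This minimal sketch uses only the Python standard library. It assumes you have a Cloudflare API token with DNS edit permission and the zone ID of your primary domain. The helper names, the `CLOUDFLARE_API` constant, and the TTL of 3600 seconds are my own choices and are not part of the steps above. The sketch sends one `POST /zones/{zone_id}/dns_records` request per name server, using the full record name.

```python
from __future__ import annotations

import json
import urllib.request

CLOUDFLARE_API = "https://api.cloudflare.com/client/v4"


def ns_record_payloads(record_name: str, name_servers: list[str], ttl: int = 3600) -> list[dict]:
    """Build one Cloudflare NS record body per Route53 name server (hypothetical helper)."""
    # record_name is the full subdomain, e.g. "example.veducate.co.uk".
    return [
        {"type": "NS", "name": record_name, "content": ns.rstrip("."), "ttl": ttl}
        for ns in name_servers
    ]


def create_record(zone_id: str, api_token: str, payload: dict) -> dict:
    """POST a single DNS record to Cloudflare's v4 API and return the parsed JSON reply."""
    request = urllib.request.Request(
        f"{CLOUDFLARE_API}/zones/{zone_id}/dns_records",
        data=json.dumps(payload).encode(),
        headers={"Authorization": f"Bearer {api_token}", "Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.load(response)
```

Calling `create_record` once for each payload returned by `ns_record_payloads("example.veducate.co.uk", name_servers)` does the same work as repeating the dashboard step for all four name servers.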

Now, back in the AWS Console, I can start creating records within the Route53 hosted zone.

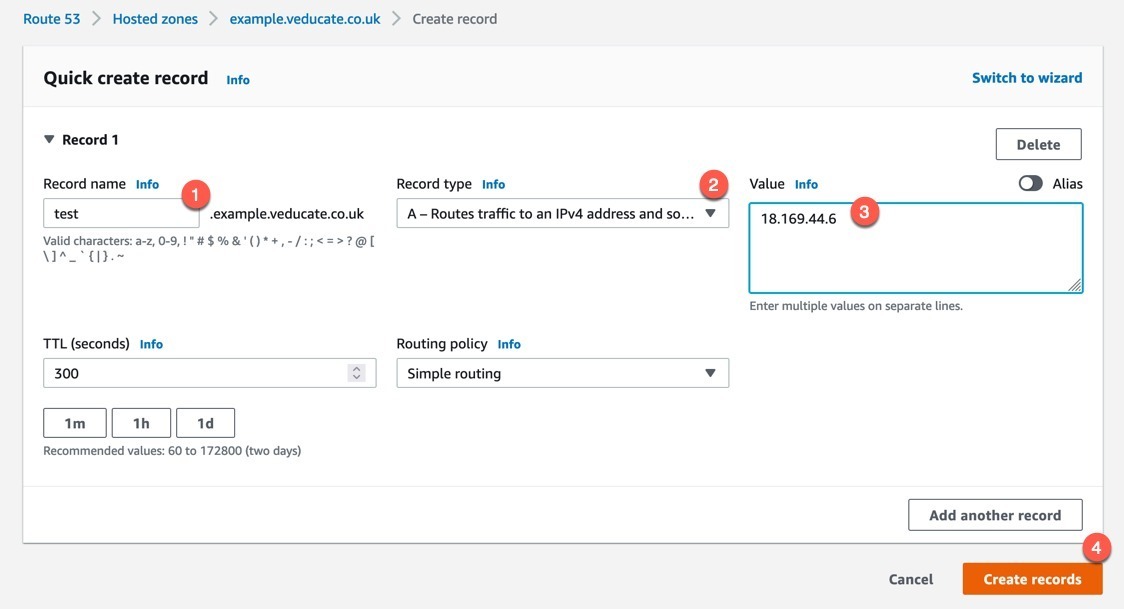

Provide the record name, type and value, then create the record.

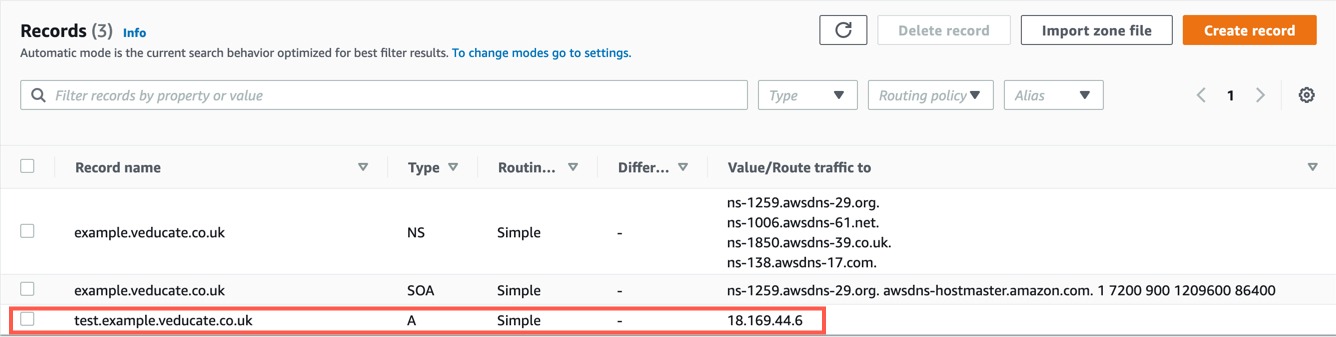

The screenshot shows that the record has been created.
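You can also create records from code. This minimal sketch assumes boto3 and builds the `ChangeBatch` argument for `change_resource_record_sets`. The helper name, the example record `test.example.veducate.co.uk`, and the documentation-range address `192.0.2.10` are hypothetical. I’ve used the `UPSERT` action, which creates the record or updates it if it already exists, so you can re-run the script safely. `CREATE` would fail on an existing record.

```python
from __future__ import annotations


def upsert_change_batch(name: str, record_type: str, values: list[str], ttl: int = 300) -> dict:
    """Build a Route53 ChangeBatch that creates or updates one record set (hypothetical helper)."""
    return {
        "Comment": f"UPSERT {record_type} {name}",
        "Changes": [
            {
                # UPSERT creates the record, or replaces it if it already exists.
                "Action": "UPSERT",
                "ResourceRecordSet": {
                    "Name": name,
                    "Type": record_type,
                    "TTL": ttl,
                    "ResourceRecords": [{"Value": value} for value in values],
                },
            }
        ],
    }


# Usage (assumes boto3 and AWS credentials; ZONE_ID is a placeholder for your hosted zone ID):
#   import boto3
#   batch = upsert_change_batch("test.example.veducate.co.uk", "A", ["192.0.2.10"])
#   boto3.client("route53").change_resource_record_sets(HostedZoneId="ZONE_ID", ChangeBatch=batch)
```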

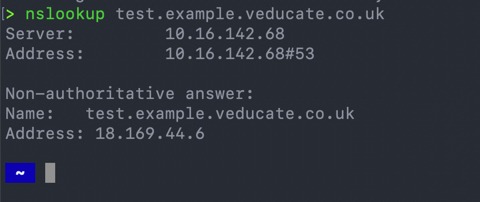

Finally, to test, you can see the DNS record resolving from my laptop.
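You can also run the same check from a script. This minimal sketch uses only the Python standard library. It asks the operating system's resolver for IPv4 addresses via `socket.getaddrinfo`, so local caching may affect the results while the new delegation propagates. The function name `resolve_ipv4` and the record name in the usage comment are hypothetical.

```python
from __future__ import annotations

import socket


def resolve_ipv4(hostname: str) -> list[str]:
    """Return the sorted, de-duplicated IPv4 addresses the local resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, None, socket.AF_INET, socket.SOCK_STREAM)
    # Each entry is (family, type, proto, canonname, sockaddr); sockaddr[0] is the address.
    return sorted({info[4][0] for info in infos})


# Usage (hypothetical record name from the hosted zone):
#   print(resolve_ipv4("test.example.veducate.co.uk"))
# socket.gaierror is raised if the name does not resolve.
```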

Regards