In this blog post, I’ll walk you through getting started with VMware Aria Hub and connecting your first public cloud account, which in this example is AWS.

What is VMware Aria Hub?

Before we dive into the technical pieces, what is VMware Aria Hub?

If we take the marketing definition:

VMware Aria Hub is a transformational multi-cloud management solution unifying cost, performance, and config and delivery automation in a single platform with a common control plane and data model for any cloud, any platform, any tool, and every persona

To make this simple, VMware Aria Hub is one of the key SaaS-based services that sits at the center of the new VMware Aria Cloud Management platform. It gives you a single control plane to access and interrogate data across the products previously known as the vRealize Suite, now rebranded as Aria [insert product name], store metadata from all of your infrastructure platforms (VMware, AWS, Azure, Google) and, in the future, bring in data from third-party systems.

This centralization of data is key. In VMware Aria, that part is called “Aria Graph”. It uses an Entity Datastore, a component derived from an existing VMware product, CloudHealth SecureState (now VMware Aria Automation for Secure Clouds). This component, which is based on GraphQL, gives the product a unique way to store data, query into other products, and let the consumer write new data into the platform as well.

Let’s take a practical example. I have an application made up of the typical three-tier components:

- Load Balancer – AWS

- 2 x Web Servers – AWS

- App Server – AWS

- Database Server – On-Prem DC – vSphere

All these components are deployed by Aria Automation (vRealize Automation), monitored by Aria Operations (vRealize Operations), with application logs sent to Aria Operations for Logs (vRealize Log Insight). The AWS environment is further secured by Aria Automation for Secure Clouds (CloudHealth SecureState), which ensures that a number of specific resource tags exist and that the resources conform to the appropriate CIS benchmark.

Now, if I need to query the following information for my application: app owner (who deployed it), cost centre, resource sizing, and active security alerts, I will pretty much need to either browse the UI or query the API of each of the products mentioned.

By leveraging the new capabilities of VMware Aria Hub, I can browse a single interface to reference all the components of my application, and where that data is stored in the other Aria products, it will pull the data through for me. The same applies if I query the VMware Aria Graph for programmatic access.
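To make the programmatic route a little more concrete, here is a minimal Python sketch of what a single GraphQL request for that application data could look like. Everything specific in it is an assumption for illustration: the endpoint URL, the query's type and field names, the application name, and the bearer-token header are all hypothetical and not taken from the Aria Graph schema or documentation. The only thing it relies on is the general GraphQL-over-HTTP convention of POSTing a JSON body with `query` and `variables`. The request is built but not sent.

```python
import json
from urllib import request

# Hypothetical endpoint: the real Aria Graph URL is not given in this post.
GRAPHQL_URL = "https://aria-hub.example.com/graphql"

# Hypothetical query: the type and field names are illustrative only and
# are NOT taken from the actual Aria Graph schema.
APPLICATION_QUERY = """
query ApplicationSummary($name: String!) {
  application(name: $name) {
    owner
    costCentre
    resources { name platform size }
    securityAlerts { severity title }
  }
}
"""


def build_graphql_request(url, query, variables, token):
    """Build (but do not send) a GraphQL-over-HTTP POST request."""
    body = json.dumps({"query": query, "variables": variables}).encode("utf-8")
    return request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Content-Type": "application/json",
            # Assumption: bearer-token auth; check the product docs for the real scheme.
            "Authorization": f"Bearer {token}",
        },
    )


# One request asks for owner, cost centre, sizing and alerts together,
# instead of one call per Aria product.
req = build_graphql_request(
    GRAPHQL_URL,
    APPLICATION_QUERY,
    {"name": "my-three-tier-app"},  # hypothetical application name
    token="<api-token>",
)
```

Passing `req` to `urllib.request.urlopen` would send it. The point of the sketch is the shape of the call: one query describing everything I want to know about the application, rather than separate API calls to each product.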

Watch the recording!

As video content is a growing trend, I’ve also produced a recording covering the same content as this blog post, so you can follow along below!

Getting Started with Aria Hub

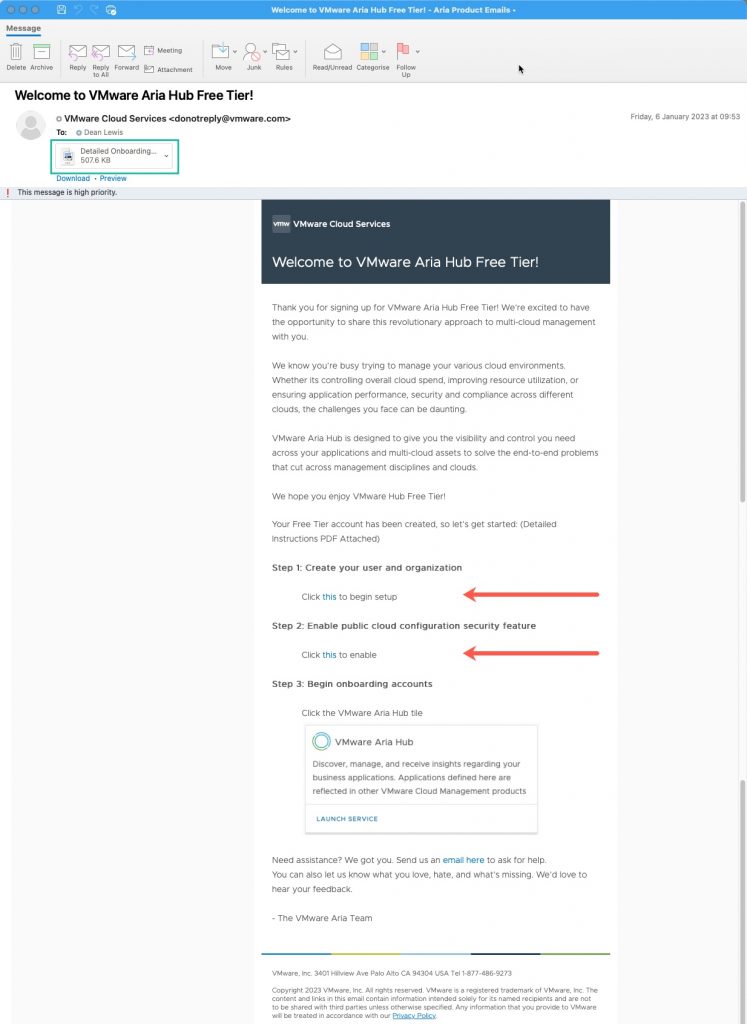

First, you should have an email from VMware welcoming you to the VMware Aria Hub Free Tier. I’ve provided a sample email here; there are a few things to note:

- You need to click the links in steps 1 and 2 to activate the VMware Aria Hub product within the VMware Cloud Services Portal, and enable the Free Tier for VMware Aria Automation for Secure Clouds, which provides the public cloud security features in the Aria Hub UI.

- To set up your VMware Cloud Services Portal organisation and enable the product, a PDF attached to the email provides step-by-step instructions and screenshots if needed (shown in the green box).

Once enabled, in the VMware Cloud Services Portal, click the VMware Aria Hub tile (as in the above email screenshot, step 3).

This will present you with the below opening page.

To get started, you only have one option here:

- Click the “Connect your first data source” blue button.
