The Issue

When deploying a vSphere with Tanzu guest cluster via the command line, I hit the following error:

kubectl apply -f cluster.yaml

Error from server (spec.settings.network.pods.cidrBlocks intersects with the network range of the external ip pools in network provider's configuration, spec.settings.network.pods.cidrBlocks intersects with the network range of the external ip pools LB in network provider's configuration): error when creating "cluster.yaml": admission webhook "default.validating.tanzukubernetescluster.run.tanzu.vmware.com" denied the request: spec.settings.network.pods.cidrBlocks intersects with the network range of the external ip pools in network provider's configuration, spec.settings.network.pods.cidrBlocks intersects with the network range of the external ip pools LB in network provider's configuration

The Cause

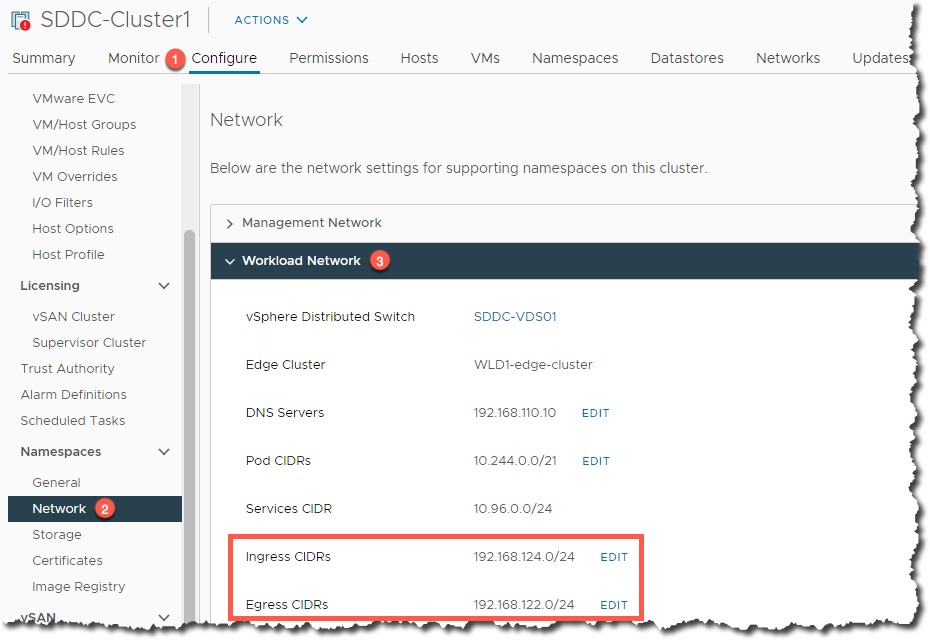

The default CIDR block used by vSphere with Tanzu for pod networking is 192.168.0.0/16, and for services networking it is 10.96.0.0/12. If either range overlaps with anything in your Workload Management setup (in my case, the load balancing configuration from the NSX-T integration), the cluster deployment will fail.
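To make the overlap concrete, here is a minimal sketch using Python's standard `ipaddress` module to compare the two default ranges above against ranges already in use. The `find_overlaps` helper and the sample ranges are hypothetical and purely illustrative; substitute the ingress, egress and load balancer ranges from your own Workload Management configuration.

```python
import ipaddress

# Defaults used by vSphere with Tanzu, as stated above.
DEFAULT_PODS = ipaddress.ip_network("192.168.0.0/16")
DEFAULT_SERVICES = ipaddress.ip_network("10.96.0.0/12")


def find_overlaps(existing_ranges):
    """Return (default_name, existing_cidr, default_cidr) for every clash."""
    clashes = []
    for cidr in existing_ranges:
        net = ipaddress.ip_network(cidr)
        for name, default in (("pods", DEFAULT_PODS), ("services", DEFAULT_SERVICES)):
            # overlaps() is True when the two networks share any address.
            if net.overlaps(default):
                clashes.append((name, str(net), str(default)))
    return clashes


if __name__ == "__main__":
    # Hypothetical ranges, NOT taken from a real environment.
    workload_ranges = ["192.168.200.0/24", "10.30.0.0/24"]
    for name, existing, default in find_overlaps(workload_ranges):
        print(f"{existing} overlaps the default {name} range {default}")
```

With these sample values only the first range is reported, because it sits inside the default pod range. That is the same kind of clash the admission webhook rejects.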

The failure occurs if you use a cluster deployment YAML like the one below. It specifies no pod or service networking settings, so the defaults are chosen.

apiVersion: run.tanzu.vmware.com/v1alpha1
kind: TanzuKubernetesCluster
metadata:
  name: veducate-cluster
  namespace: deanl
spec:
  distribution:
    version: v1.18.15
  topology:
    controlPlane:
      class: best-effort-small
      count: 1
      storageClass: management-storage-policy-thin
    workers:
      class: best-effort-small
      count: 3
      storageClass: management-storage-policy-thin
  settings:
    network:
      cni:
        name: calico
    storage:
      defaultClass: management-storage-policy-thin