This walk-through details the technical configuration for using vRA Code Stream to deploy Google Kubernetes Engine (GKE) clusters and then:

- Register them as Kubernetes endpoints in vRA Cloud Assembly and Code Stream

- Attach them to Tanzu Mission Control

- Onboard them to Tanzu Service Mesh

This post mirrors my other blog posts, which follow similar concepts.

Requirement

After covering EKS and AKS, I thought it was worthwhile to finish off the gang and deploy GKE clusters using Code Stream.

Building on my previous work, I also added the extra steps to onboard the cluster into Tanzu Service Mesh.

High Level Steps

- Create a Code Stream Pipeline

- Create a Google GKE Cluster

- Register the GKE cluster as an endpoint in both vRA Code Stream and Cloud Assembly

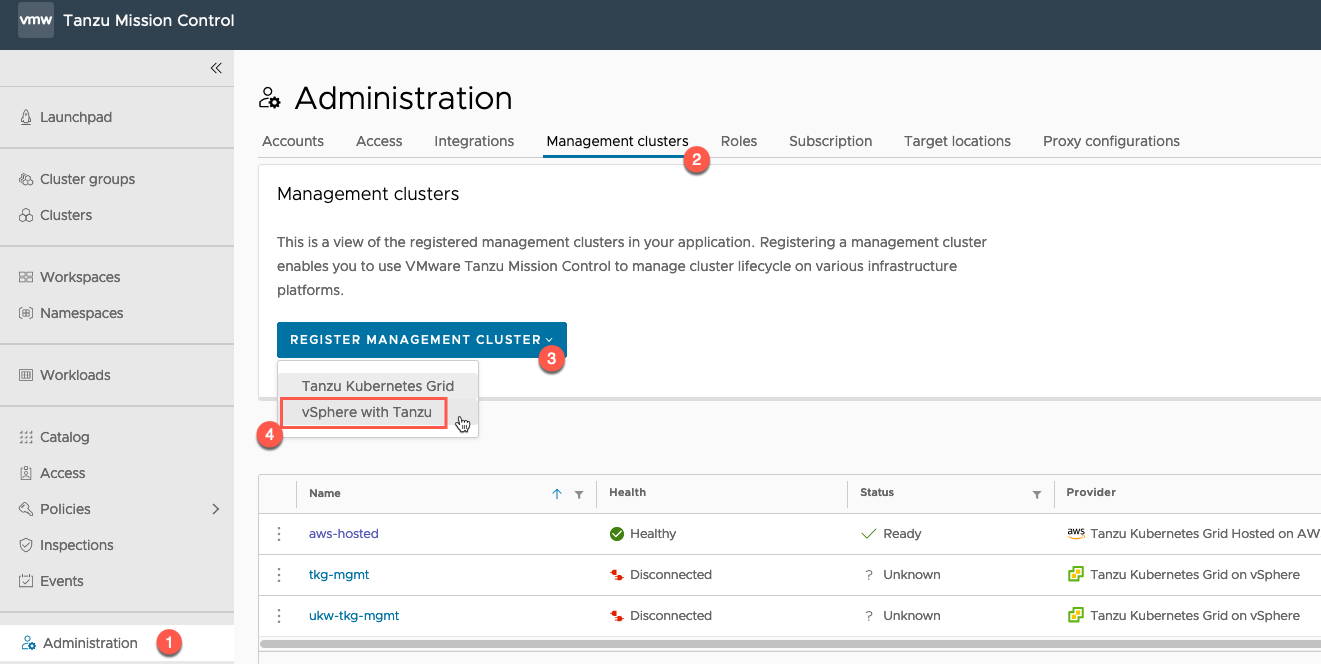

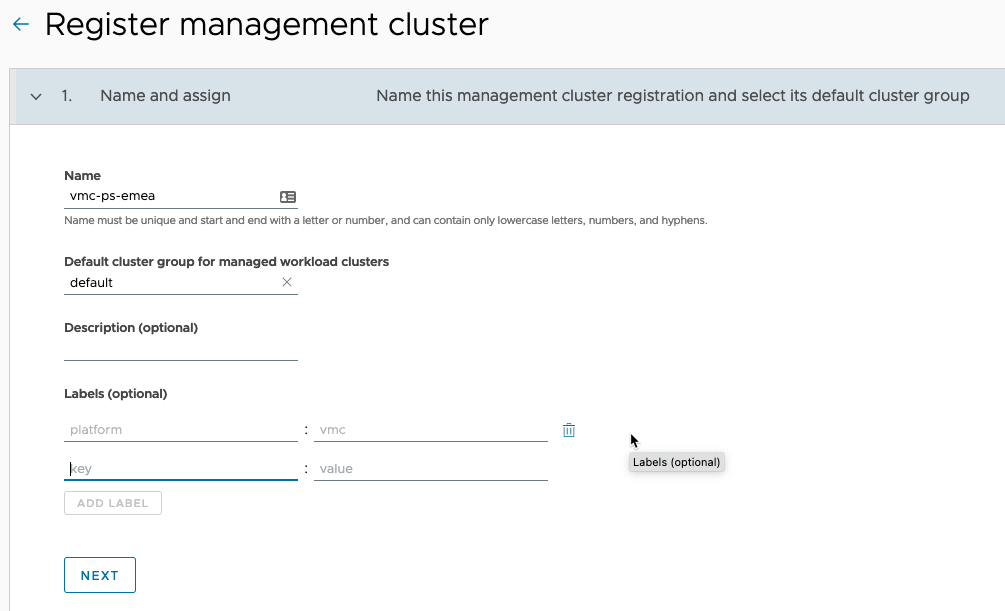

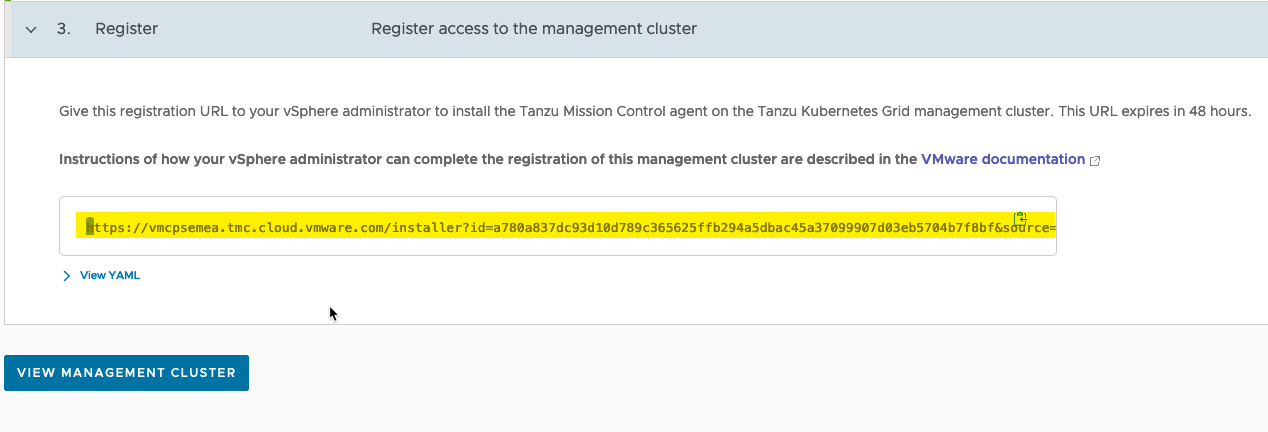

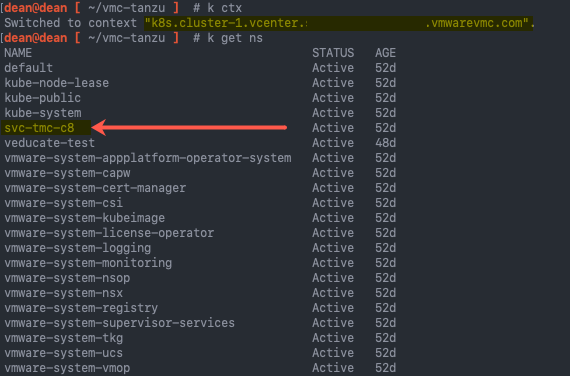

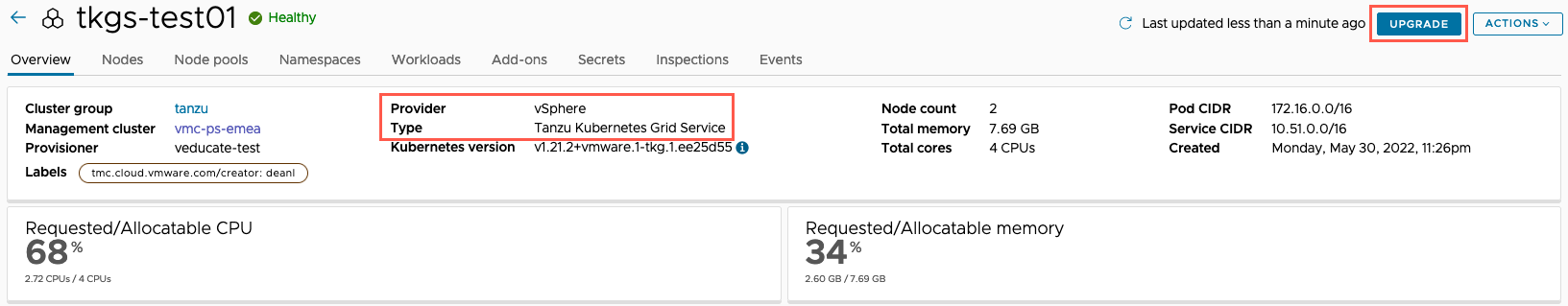

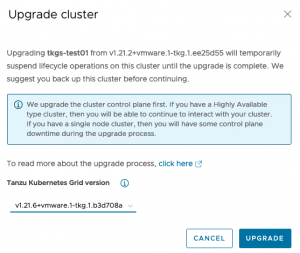

- Register GKE cluster in Tanzu Mission Control

- Onboard the cluster to Tanzu Service Mesh
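
As a rough sketch of what the "Create a Google GKE Cluster" step involves, the gcloud commands look something like the following. The project ID, cluster name, zone, node count and key file path are placeholder assumptions for illustration, as is the `run` helper, which only prints each command unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
set -e

# Placeholder values (assumptions for illustration); override via environment.
PROJECT_ID="${PROJECT_ID:-my-gcp-project}"
CLUSTER_NAME="${CLUSTER_NAME:-codestream-gke-01}"
ZONE="${ZONE:-europe-west2-a}"
NODE_COUNT="${NODE_COUNT:-3}"
KEY_FILE="${KEY_FILE:-./service-account-key.json}"

# DRY_RUN=1 (the default) prints each command; DRY_RUN=0 executes it.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Authenticate the gcloud CLI with the service account key.
run gcloud auth activate-service-account --key-file "$KEY_FILE"

# Create the GKE cluster.
run gcloud container clusters create "$CLUSTER_NAME" \
  --project "$PROJECT_ID" --zone "$ZONE" --num-nodes "$NODE_COUNT"

# Fetch kubeconfig credentials so later steps can talk to the cluster.
run gcloud container clusters get-credentials "$CLUSTER_NAME" \
  --project "$PROJECT_ID" --zone "$ZONE"
```

Running it as-is shows the three commands without touching your Google Cloud account, which is a handy way to check the values before setting `DRY_RUN=0`.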

Pre-Requisites

- vRA Cloud access

- The pipeline can be changed easily for use with vRA on-premises

- Google Cloud account that can provision GKE clusters

- The Kubernetes Engine API needs to be enabled

- Basic knowledge of deploying GKE

- This is a good beginner's guide if you need one

- You will need to create a Service Account that the gcloud CLI tool can use for authentication

- A Docker host to be used by vRA Code Stream

- Ability to run the container image: gcr.io/google.com/cloudsdktool/google-cloud-cli

- Tanzu Mission Control account that can register new clusters

- VMware Cloud Console Tokens for vRA Cloud, Tanzu Mission Control and Tanzu Service Mesh API access

- The configuration files for the pipeline can be found in this GitHub repository
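
If you have not set up the Google Cloud side yet, the prerequisites above can be sketched with the gcloud and Docker CLIs as follows. The project ID, service account name, key file path and the `roles/container.admin` role are assumptions on my part for illustration, so adjust them to your own environment and security policy. As written, the `run` helper only prints the commands unless `DRY_RUN=0` is set.

```shell
#!/bin/sh
set -e

# Placeholder values (assumptions for illustration); override via environment.
PROJECT_ID="${PROJECT_ID:-my-gcp-project}"
SA_NAME="${SA_NAME:-codestream-gke}"
SA_EMAIL="${SA_NAME}@${PROJECT_ID}.iam.gserviceaccount.com"
KEY_FILE="${KEY_FILE:-./service-account-key.json}"
IMAGE="gcr.io/google.com/cloudsdktool/google-cloud-cli"

# DRY_RUN=1 (the default) prints each command; DRY_RUN=0 executes it.
DRY_RUN="${DRY_RUN:-1}"
run() {
  if [ "$DRY_RUN" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Enable the Kubernetes Engine API on the project.
run gcloud services enable container.googleapis.com --project "$PROJECT_ID"

# Create a service account for the gcloud CLI to authenticate with.
run gcloud iam service-accounts create "$SA_NAME" \
  --project "$PROJECT_ID" --display-name "Code Stream GKE"

# Grant it a role that can manage GKE clusters (assumed role, adjust as needed).
run gcloud projects add-iam-policy-binding "$PROJECT_ID" \
  --member "serviceAccount:${SA_EMAIL}" --role "roles/container.admin"

# Download a JSON key for the service account.
run gcloud iam service-accounts keys create "$KEY_FILE" --iam-account "$SA_EMAIL"

# Confirm the Docker host can pull the Cloud SDK container image.
run docker pull "$IMAGE"
```

Treat the generated key file as a secret; it grants whatever access the service account has.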