In this blog post, I am going to cover the new Tanzu Mission Control (TMC) support for Tanzu Kubernetes Grid (TKG) management clusters on both VMware Cloud on AWS (VMC) and Azure VMware Solution (AVS). This functionality also allows new Tanzu Kubernetes workload clusters (TKC) to be provisioned to the relevant platform using the lifecycle management controls within Tanzu Mission Control.

Below are the other blog posts I’ve written covering Tanzu Mission Control.

Tanzu Mission Control:
- Getting Started
- Cluster Inspections
- Workspaces and Policies
- Data Protection
- Deploying TKG clusters to AWS
- Upgrading a provisioned cluster
- Delete a provisioned cluster
- TKG Management support and provisioning new clusters
- TMC REST API - Postman Collection
- Using custom policies to ensure Kasten protects a deployed application

Release Notes

Below are the relevant release notes for the features I’ll cover. In this blog post, I’ll only be showing screenshots from a VMC environment; however, the same steps apply to AVS.

What's New May 26, 2021

New Features and Improvements

(New Feature update): Tanzu Mission Control now supports the ability to register Tanzu Kubernetes Grid (1.3 & later) management clusters running in vSphere on Azure VMware Solution.

What's New April 30, 2021

New Features and Improvements

(New Feature update): Tanzu Mission Control now supports the ability to register Tanzu Kubernetes Grid (1.2 & later) management clusters running in vSphere on VMware Cloud on AWS. For a list of supported environments, see Requirements for Registering a Tanzu Kubernetes Cluster with Tanzu Mission Control in VMware Tanzu Mission Control Concepts.

Prerequisites

- You must have already deployed a Tanzu Kubernetes Grid management cluster to either VMC or AVS.

TMC cannot perform this initial management cluster deployment for you. Nor can a management cluster deploy workload clusters across platforms: for example, a management cluster running in AWS cannot deploy workload clusters to VMC, AVS or Azure.

The following requirements are from the product documentation.

- The management cluster must be deployed as a production cluster with multiple control plane nodes.
  - However, in my demo lab I was able to run this successfully using a development deployment.

- Tanzu Kubernetes Grid workload clusters need at least 4 CPUs and 8 GB of memory.
  - Again, I deployed a small instance type (2 vCPU, 4 GB RAM) and this didn’t seem to be an issue.

- Tanzu Kubernetes Grid management clusters (version 1.3 or later) running in vSphere on Azure VMware Solution (AVS).

- Tanzu Kubernetes Grid management clusters (version 1.2 or later) running in vSphere, including vSphere on VMware Cloud on AWS (version 1.12 or 1.14).

- Do not attempt to register any other kind of management cluster with Tanzu Mission Control.

- Tanzu Mission Control does not support the registration of Tanzu Kubernetes Grid management clusters prior to version 1.2.
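To make the requirements above concrete, here is a minimal Python sketch that encodes them as simple checks: TKG 1.2 or later on VMC, TKG 1.3 or later on AVS, a multi-node (production) control plane, no cross-platform provisioning, and the quoted 4 CPU / 8 GB workload minimum. The platform keys (`"vmc"`, `"avs"`), the function names and the version format are my own illustrative assumptions, not anything TMC exposes. The control plane check follows the documentation even though, as noted above, a development deployment worked in my lab.

```python
from dataclasses import dataclass

# Minimum TKG management cluster version per platform, taken from the
# documented requirements. The platform keys are illustrative names only.
MIN_TKG_VERSION = {
    "vmc": (1, 2),  # vSphere on VMware Cloud on AWS
    "avs": (1, 3),  # vSphere on Azure VMware Solution
}

# Documented minimum resources for TKG workload clusters.
MIN_CPUS = 4
MIN_MEMORY_GB = 8


@dataclass
class ManagementCluster:
    platform: str             # hypothetical key, e.g. "vmc" or "avs"
    tkg_version: str          # e.g. "1.3.1" or "v1.3.1"
    control_plane_nodes: int


def parse_version(version: str) -> tuple:
    """Turn "v1.3.1" into (1, 3, 1) so versions compare numerically."""
    core = version.lstrip("v").split("+")[0]
    return tuple(int(part) for part in core.split("."))


def registration_problems(cluster: ManagementCluster) -> list:
    """Return reasons the cluster falls outside the documented
    requirements; an empty list means it looks eligible."""
    problems = []
    minimum = MIN_TKG_VERSION.get(cluster.platform)
    if minimum is None:
        problems.append(f"unsupported platform: {cluster.platform}")
    elif parse_version(cluster.tkg_version) < minimum:
        wanted = ".".join(str(part) for part in minimum)
        problems.append(f"TKG {cluster.tkg_version} is older than {wanted}")
    if cluster.control_plane_nodes < 2:
        problems.append("expected multiple control plane nodes (production plan)")
    return problems


def can_provision_to(mgmt_platform: str, target_platform: str) -> bool:
    """A management cluster only provisions workload clusters to its own platform."""
    return mgmt_platform == target_platform


def meets_workload_minimum(cpus: int, memory_gb: int) -> bool:
    """The quoted workload cluster minimum: 4 CPUs and 8 GB of memory."""
    return cpus >= MIN_CPUS and memory_gb >= MIN_MEMORY_GB
```

For example, `registration_problems(ManagementCluster("avs", "1.2.0", 3))` flags the TKG version, because AVS support starts at TKG 1.3.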

Registering our Tanzu Kubernetes Grid Management Cluster

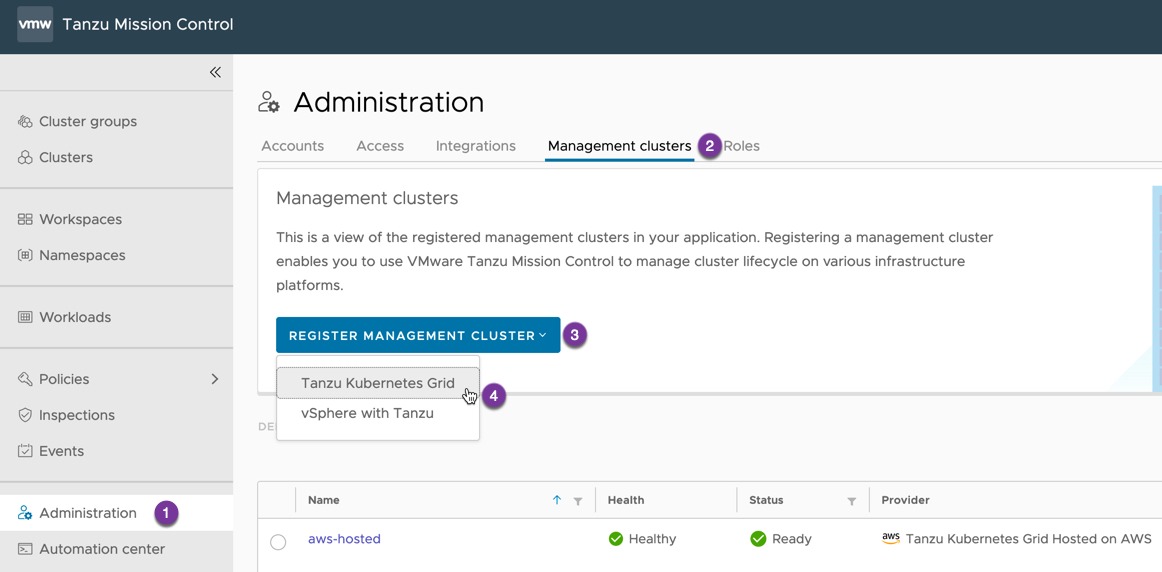

- Go to Administration > Management Clusters > Register Management Cluster > Tanzu Kubernetes Grid

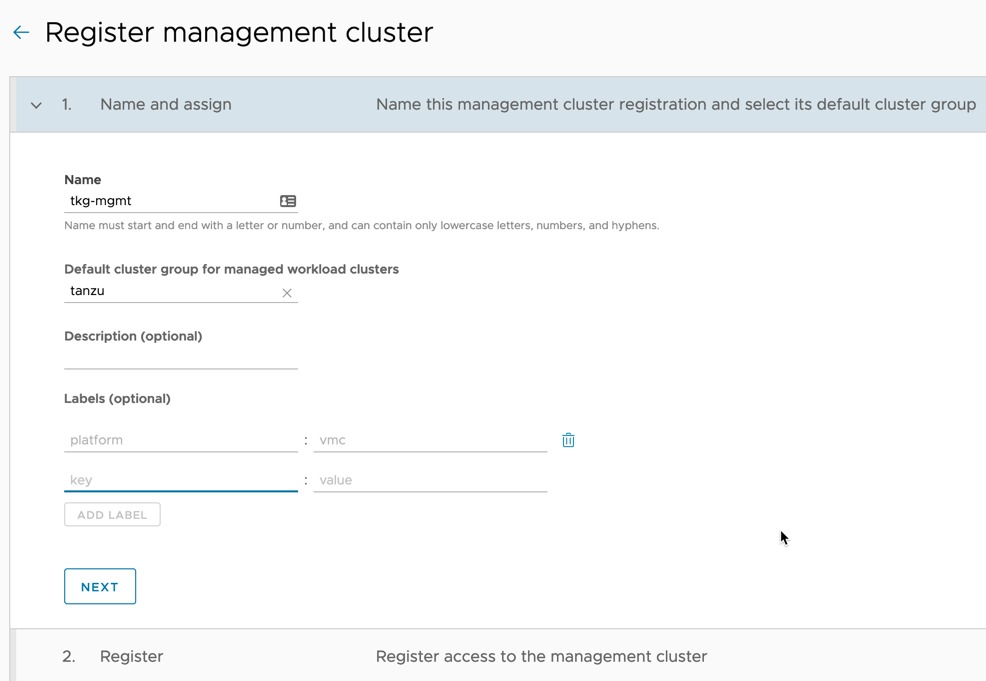

- Provide a friendly name for the cluster in TMC, then fill in the remaining details as required.

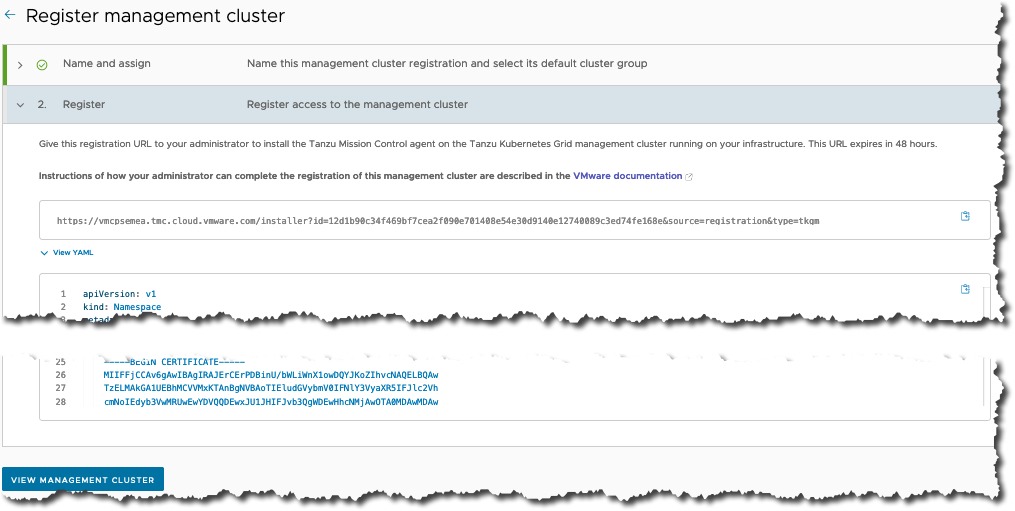

- Copy the registration YAML URL (or download the YAML file from the UI) and apply it to your TKG management cluster.

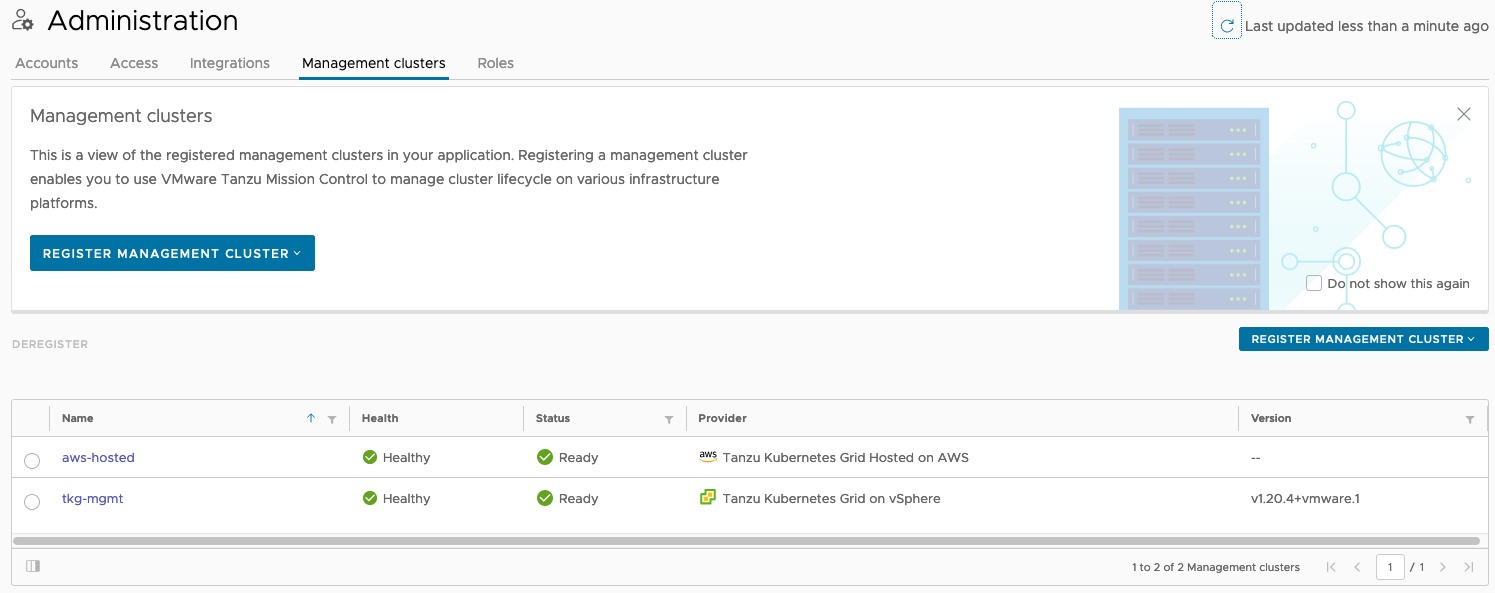

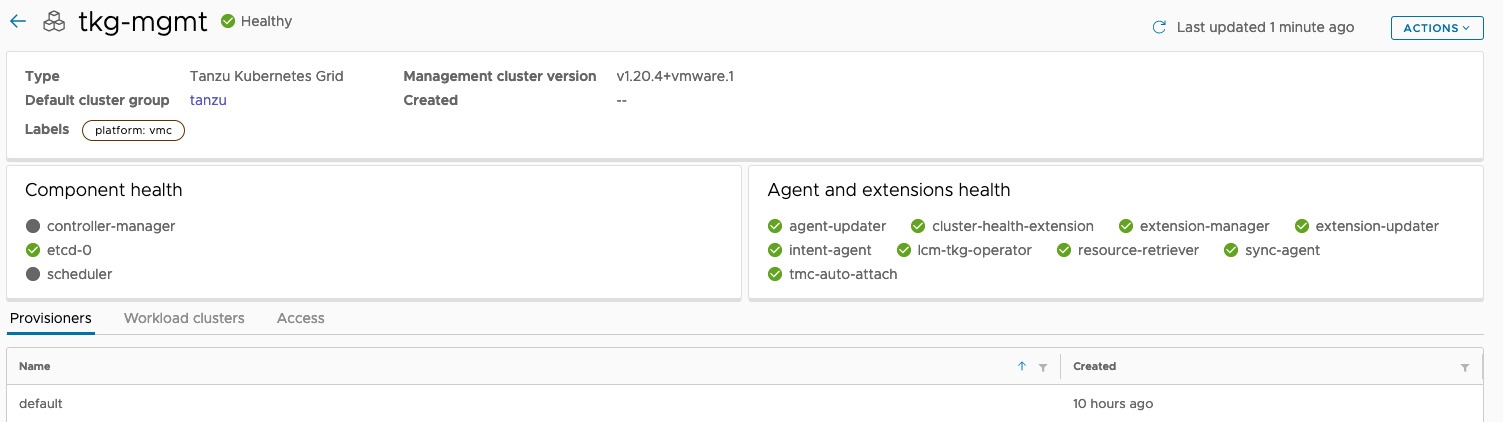
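If you prefer to script this step, applying the registration YAML is a single `kubectl apply -f` against the management cluster, since kubectl accepts a URL for `-f`. Below is a minimal Python sketch that builds and runs that command. It assumes kubectl is installed and that your kubeconfig contains a context for the management cluster; the context name and URL in the example are placeholders, not values from TMC.

```python
import shlex
import subprocess


def build_apply_command(manifest_url: str, context: str) -> list:
    """Build the kubectl command that applies the TMC registration YAML
    to the management cluster selected by the given kubeconfig context."""
    return ["kubectl", "--context", context, "apply", "-f", manifest_url]


def apply_registration(manifest_url: str, context: str) -> None:
    """Run the command; raises CalledProcessError if kubectl fails."""
    subprocess.run(build_apply_command(manifest_url, context), check=True)


if __name__ == "__main__":
    # Placeholders: paste the URL shown in TMC and use your own management
    # cluster context ("tkg-mgmt-admin@tkg-mgmt" is hypothetical).
    cmd = build_apply_command(
        "https://example.invalid/registration.yaml", "tkg-mgmt-admin@tkg-mgmt"
    )
    print(shlex.join(cmd))  # dry run: print instead of executing
```

The command is built separately from running it so you can print and review it first, then call `apply_registration` once you are happy.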

Once your cluster comes online, you will see it appear with a Healthy Status in the Management Clusters page.

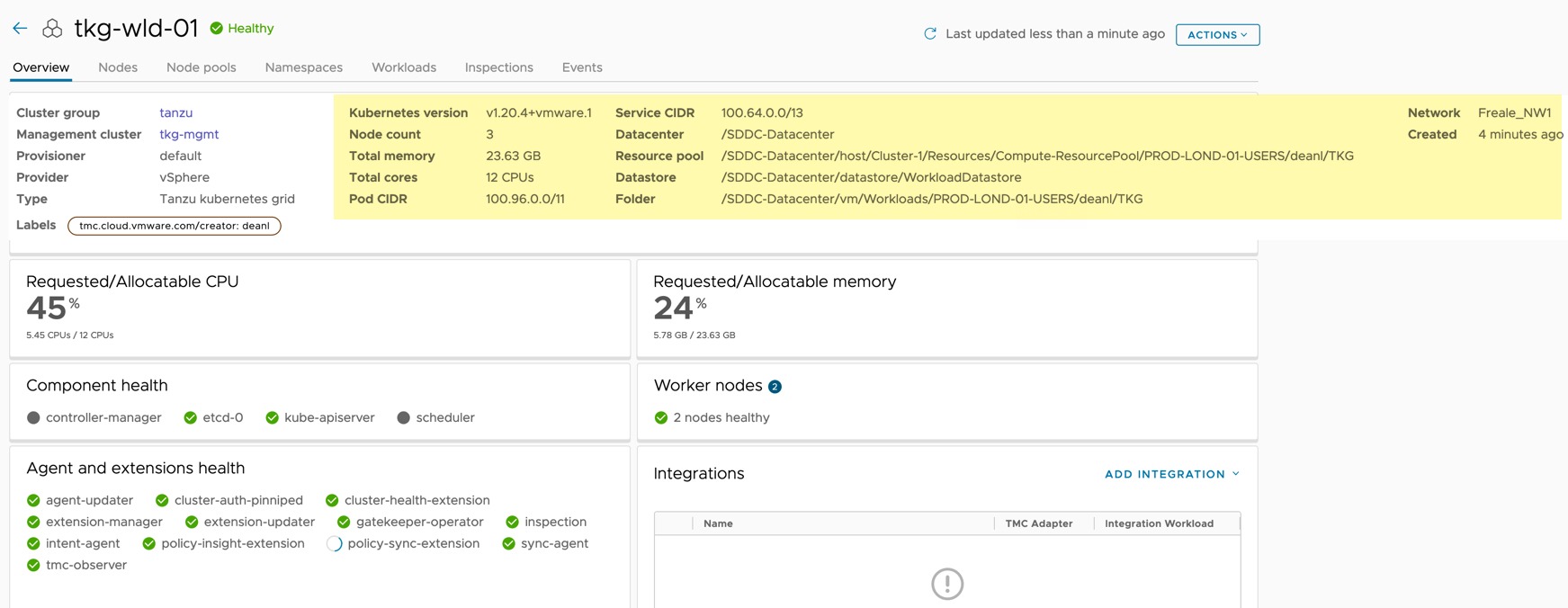

Clicking into the cluster shows its overview information. The provisioner list will always show a single entry, default, which is linked to the platform the TKG management cluster is deployed into.

Registering existing workload clusters

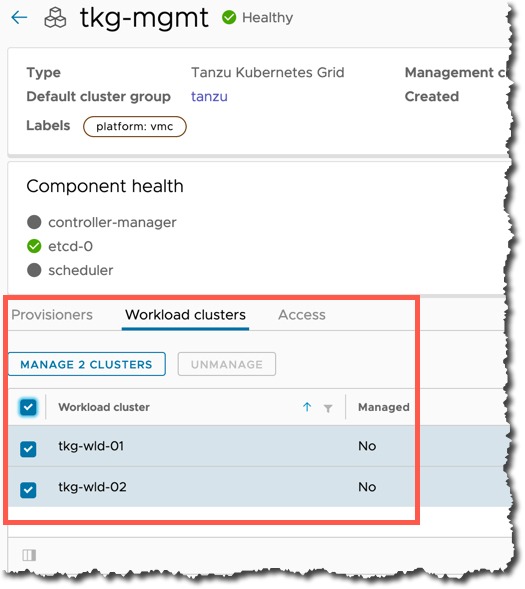

On the Workload Clusters tab, you can see the provisioned clusters that this management cluster manages, and you can register them straight into TMC.

- Select the necessary clusters, and click the manage button

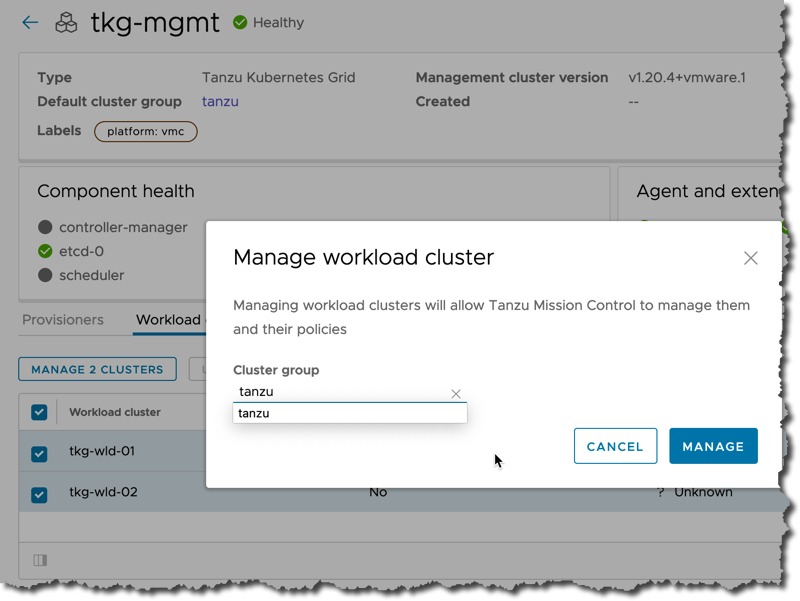

- Select which TMC cluster group to place them into and click the blue manage button

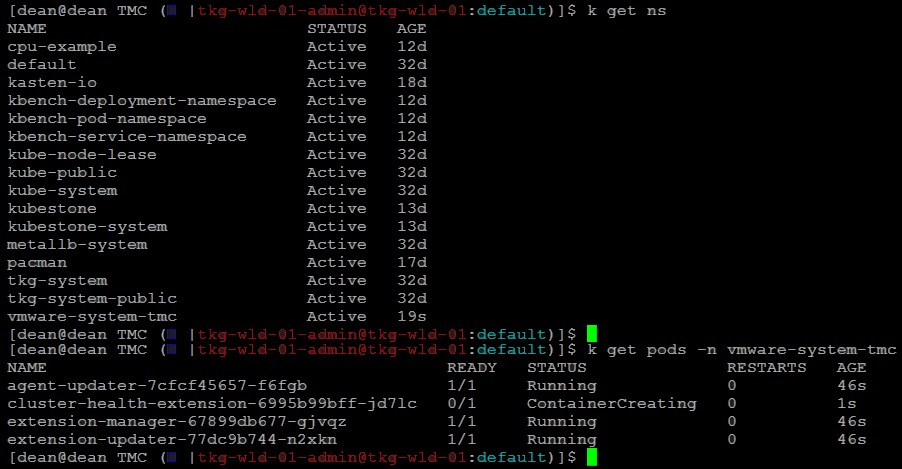

Looking at one of my workload clusters, you can see that the “vmware-system-tmc” namespace has been created and the pods for the TMC services are being created.
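To follow the same rollout without the UI, you can query the namespace with `kubectl get pods -n vmware-system-tmc -o json` and report any pods that haven't reached the Running or Succeeded phase. This is a minimal Python sketch assuming kubectl access to the workload cluster; the function names are my own, and pod phase is only a coarse signal (a Running pod can still have containers that aren't ready).

```python
import json
import subprocess

NAMESPACE = "vmware-system-tmc"


def pending_pods(pod_list: dict) -> list:
    """Given parsed `kubectl get pods -o json` output, return the names of
    pods whose phase is neither Running nor Succeeded."""
    pending = []
    for pod in pod_list.get("items", []):
        phase = pod.get("status", {}).get("phase")
        if phase not in ("Running", "Succeeded"):
            pending.append(pod["metadata"]["name"])
    return pending


def fetch_pods(context: str) -> dict:
    """Read the TMC pods from the workload cluster selected by `context`."""
    result = subprocess.run(
        ["kubectl", "--context", context, "get", "pods",
         "-n", NAMESPACE, "-o", "json"],
        check=True, capture_output=True, text=True,
    )
    return json.loads(result.stdout)
```

Calling `pending_pods(fetch_pods("<your-workload-context>"))` in a loop until it returns an empty list gives a rough "agents are up" check.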

After a few minutes, your clusters should register and start to show a status in Tanzu Mission Control.

This also pulls through additional information about the vSphere environment the cluster is deployed into.

Scaling an existing workload cluster

One of the features of this integration is the ability to scale your existing workload clusters from TMC, rather than having to log on to the management cluster directly.

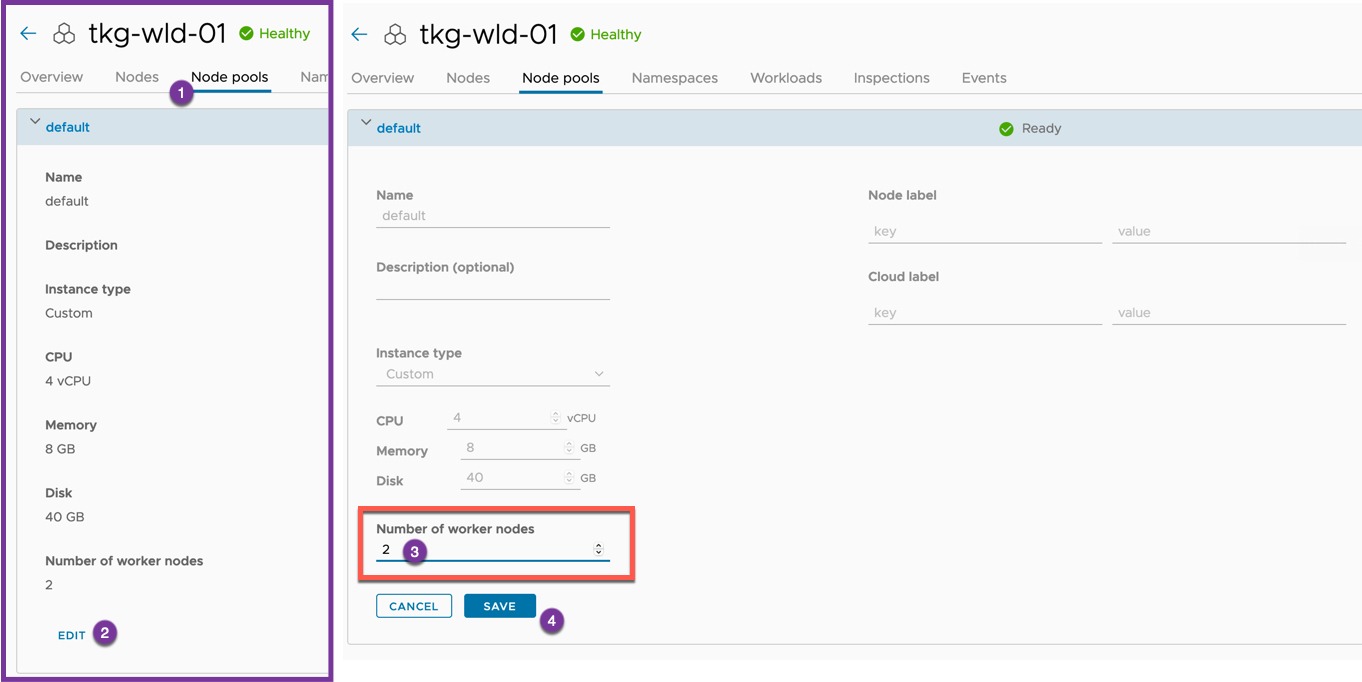

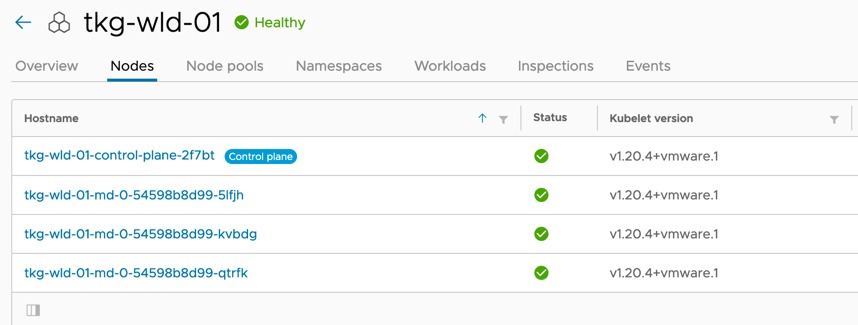

Within your workload cluster, click the Node Pools tab. Edit the default node pool, scale your worker nodes up or down, and click Save.

TMC will then communicate with the management cluster to scale the workload cluster.

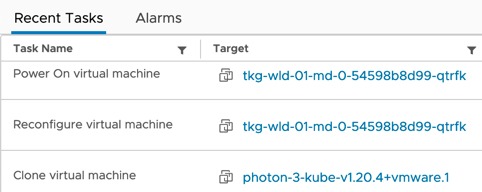

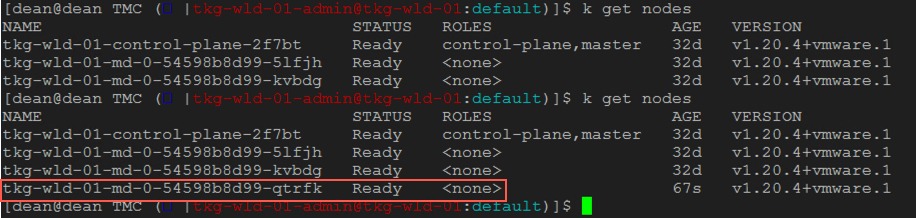

These screenshots show the additional virtual machines built in vCenter, the updated node list from kubectl and, finally, the additional node in TMC.
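To check the kubectl side of a scale operation programmatically, here is a minimal Python sketch that takes the parsed output of `kubectl get nodes -o json` for the workload cluster and counts ready control plane and worker nodes, so you can compare the worker count with the value you set on the node pool. It assumes control plane nodes carry the standard `node-role.kubernetes.io/control-plane` or `node-role.kubernetes.io/master` label; every other node is counted as a worker.

```python
# Standard kubeadm role labels; "master" is the label older releases used.
CONTROL_PLANE_LABELS = (
    "node-role.kubernetes.io/control-plane",
    "node-role.kubernetes.io/master",
)


def is_ready(node: dict) -> bool:
    """True if the node reports a Ready condition with status "True"."""
    for condition in node.get("status", {}).get("conditions", []):
        if condition.get("type") == "Ready":
            return condition.get("status") == "True"
    return False


def count_ready_nodes(node_list: dict) -> dict:
    """Count ready control plane and worker nodes from parsed
    `kubectl get nodes -o json` output; nodes that aren't ready are skipped."""
    counts = {"control_plane": 0, "workers": 0}
    for node in node_list.get("items", []):
        if not is_ready(node):
            continue
        labels = node.get("metadata", {}).get("labels", {})
        is_control_plane = any(label in labels for label in CONTROL_PLANE_LABELS)
        counts["control_plane" if is_control_plane else "workers"] += 1
    return counts
```

Pass it the result of `json.loads` on the kubectl output; after scaling from two to three workers, you would expect the `workers` count to reach three once the new VM has joined and gone Ready.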

Provisioning a new workload cluster via the management cluster

The last piece I am going to cover is using TMC to provision a new TKG workload cluster to my VMC environment, proxied via the management cluster we registered.

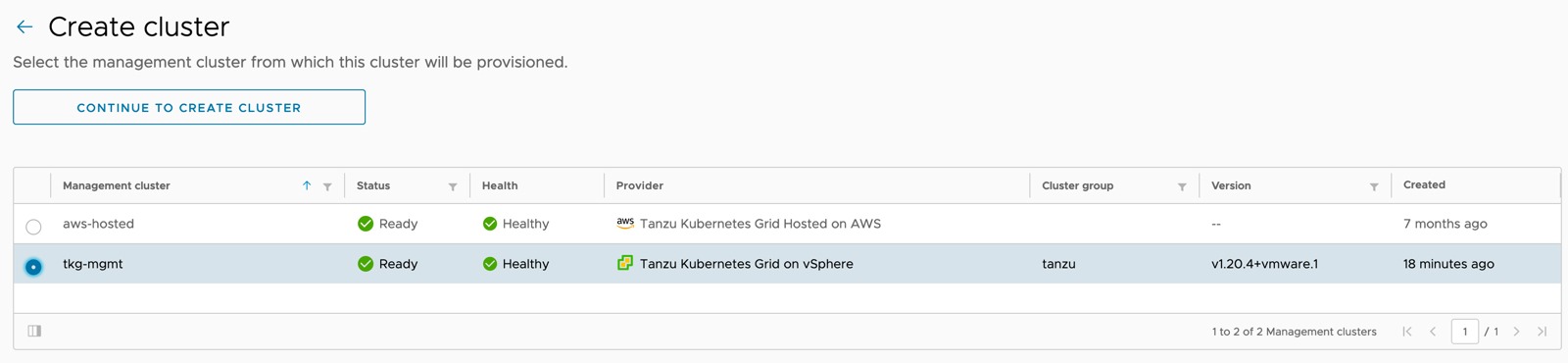

- First, on the Clusters page, click Create Cluster.

- Select the management cluster from which this new cluster will be provisioned.

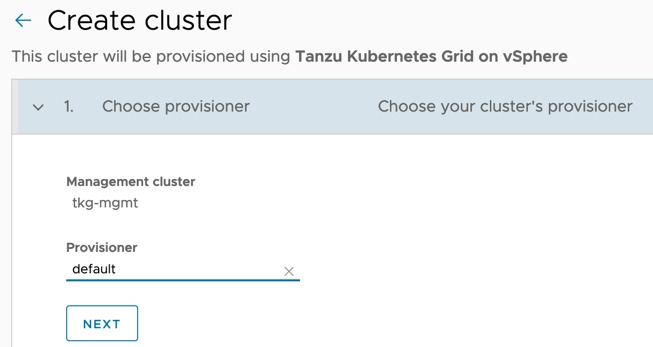

- Select the provisioner (you’re only going to have default as an available option)

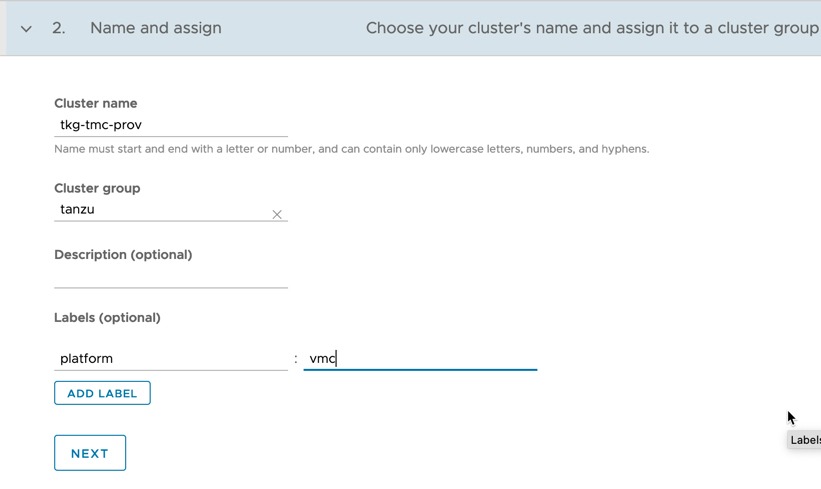

- Provide a cluster name, the cluster group to place it in, and optionally a description and labels.

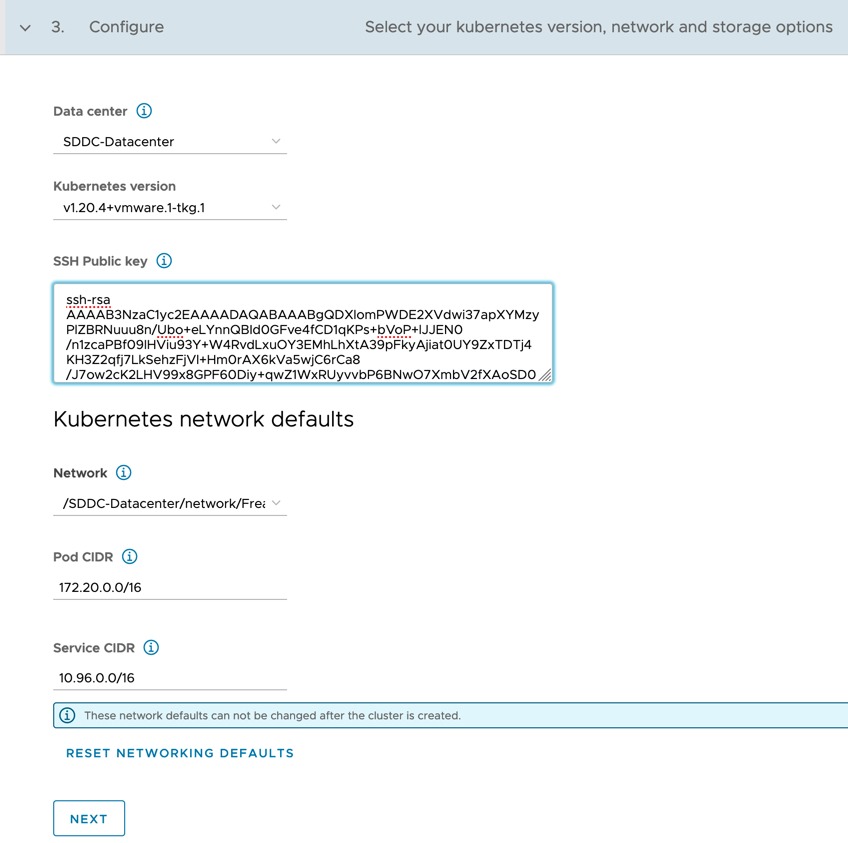

- Select your datacenter, Kubernetes version and network configuration, and provide an SSH public key.

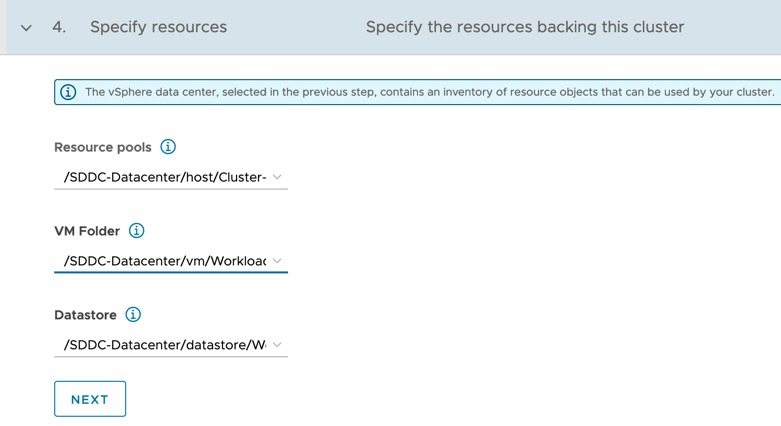

- Specify the resource pool, VM folder and datastore that the new workload cluster should be provisioned to.

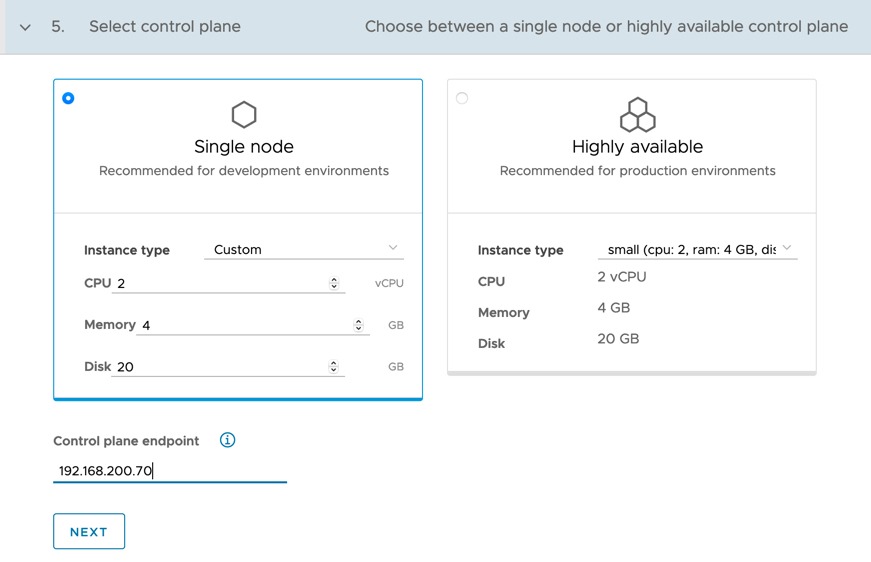

- Choose your control plane type (single node or highly available) and the instance type (size of the nodes), then provide a control plane endpoint.

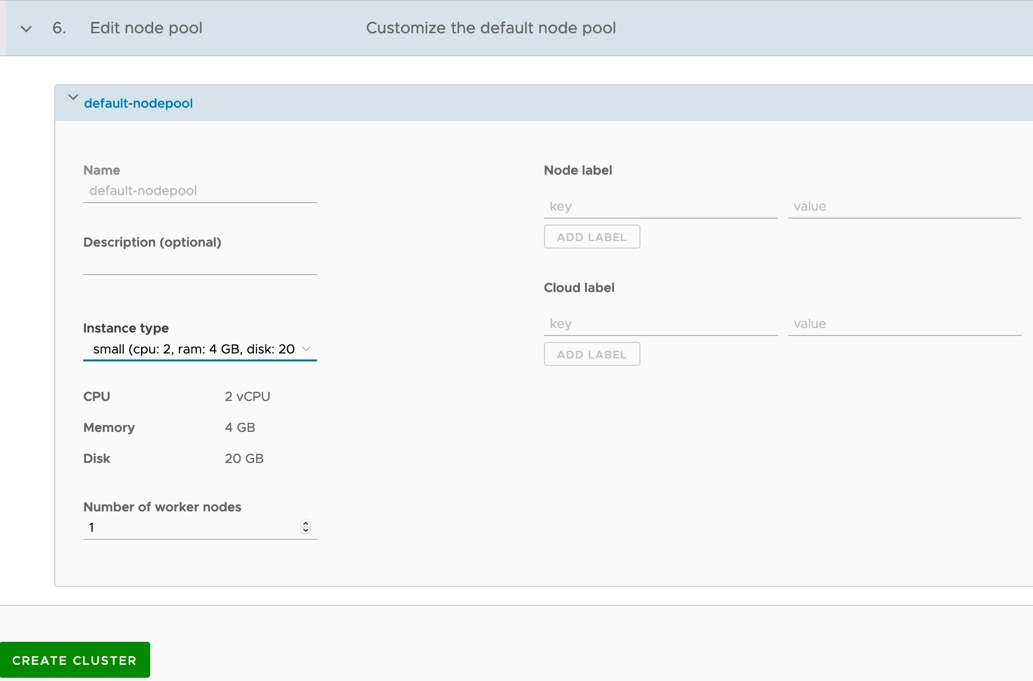

- Customize the default node pool for the worker nodes: you can select an existing instance type (node size) or specify a custom selection of resources. Select the number of worker nodes for the cluster and, optionally, any labels you want to apply.

  - Node labels are useful as selectors when you want to target application deployments at specific nodes.

- Click Create Cluster
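As an example of the node label point above, here is a minimal Python sketch that builds a Kubernetes Deployment manifest using `spec.template.spec.nodeSelector`, so its pods are only scheduled onto nodes that carry all of the given labels. The `workload=frontend` label, the deployment name and the image are hypothetical; substitute whatever labels you set on the node pool in TMC.

```python
import json


def deployment_with_node_selector(name: str, image: str, node_labels: dict,
                                  replicas: int = 1) -> dict:
    """Build an apps/v1 Deployment whose pods only schedule onto nodes
    carrying every label in `node_labels` (via nodeSelector)."""
    pod_labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": replicas,
            # The selector must match the pod template's labels.
            "selector": {"matchLabels": dict(pod_labels)},
            "template": {
                "metadata": {"labels": dict(pod_labels)},
                "spec": {
                    "nodeSelector": dict(node_labels),
                    "containers": [{"name": name, "image": image}],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical node pool label "workload=frontend" set in TMC.
    manifest = deployment_with_node_selector(
        "web", "nginx:1.21", {"workload": "frontend"}
    )
    # kubectl accepts JSON manifests, e.g. pipe this to `kubectl apply -f -`.
    print(json.dumps(manifest, indent=2))
```

A `nodeSelector` is the simplest form of node targeting; node affinity offers richer matching if you need it, but plain label equality is enough for the node pool labels described here.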

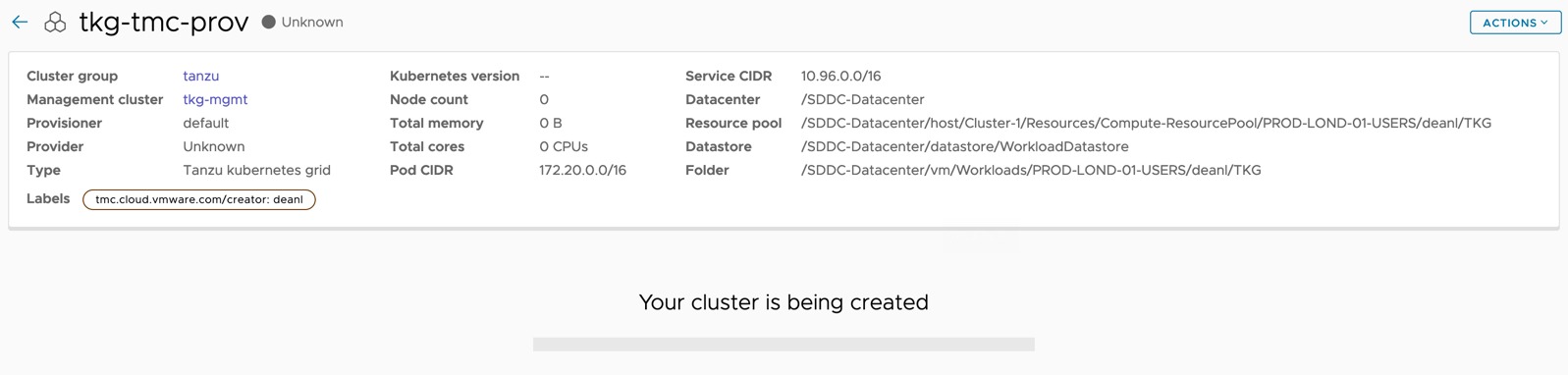

You’ll be shown your new cluster overview page as the cluster is being created.

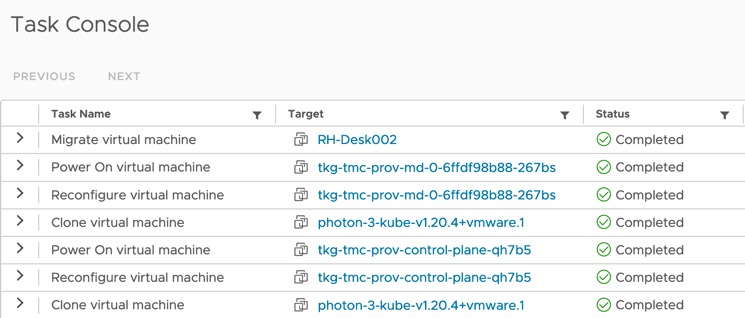

Here you can see the new cluster virtual machines being provisioned in my vCenter.

Finally, once the cluster has been provisioned and is online, it shows as healthy in TMC. As in the previous section, I have the option to scale my worker nodes, and if I delete the cluster in TMC, I can choose to have TMC also de-provision and delete the cluster from my VMC environment.

Wrap-up and resources

This is a simple walkthrough, but that is the point: it’s quick and easy to use TMC to manage your TKG deployments on VMC and AVS. There is also support for the Tanzu Kubernetes Grid Service (TKGS) in vSphere with Tanzu, the integrated native Kubernetes offering built into vSphere itself.

As a reminder, to take real advantage of TMC I recommend you read the following posts:

Tanzu Mission Control:
- Getting Started
- Cluster Inspections
- Workspaces and Policies
- Data Protection
- Deploying TKG clusters to AWS
- Upgrading a provisioned cluster
- Delete a provisioned cluster
- TKG Management support and provisioning new clusters
- TMC REST API - Postman Collection
- Using custom policies to ensure Kasten protects a deployed application

You can get hands on experience of Tanzu Mission Control yourself over on the VMware Hands-on-Lab website, which is always free!

HOL-2032-01-CNA – VMware Tanzu Mission Control

- In this lab you will be exposed to various aspects of VMware’s Tanzu Mission Control including Kubernetes cluster lifecycle management, health checks, environment at-a-glance monitoring, access policies, and conformance testing.

And I’ll sign off with links to the official resources.

- Tanzu Mission Control

Regards