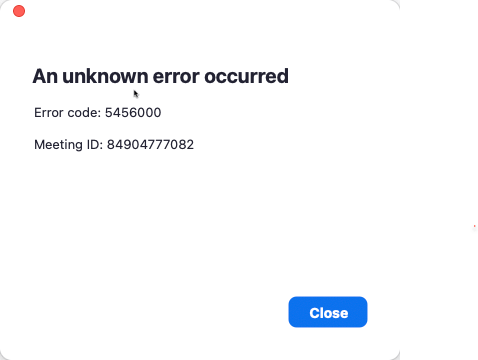

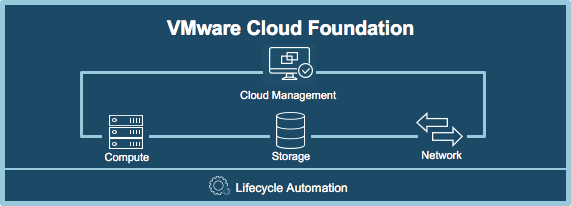

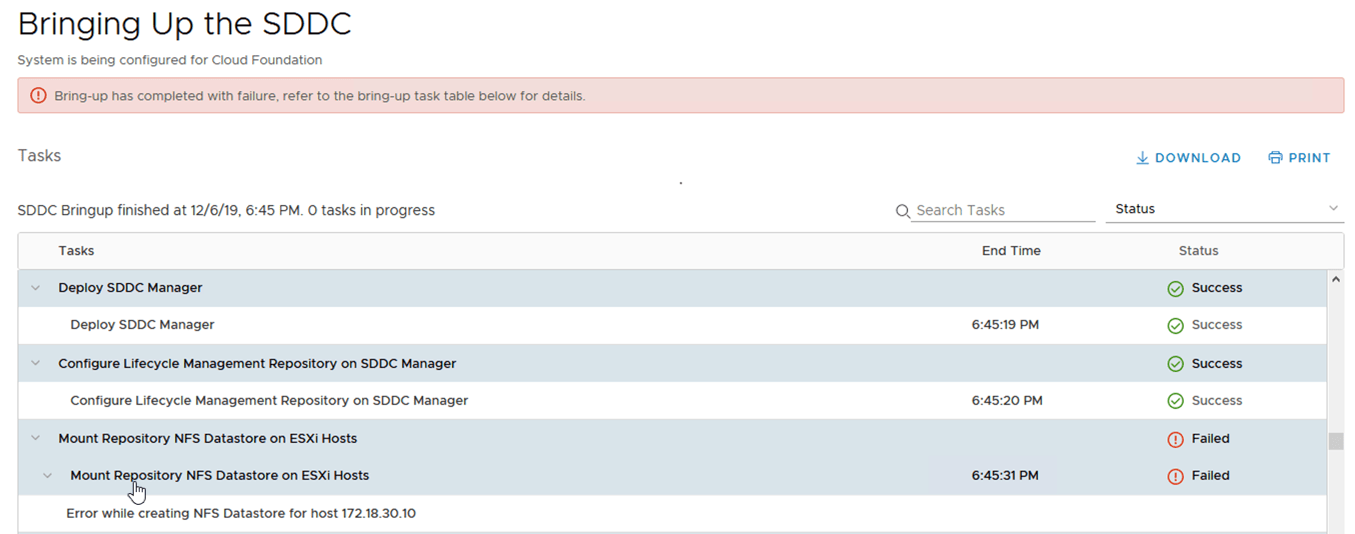

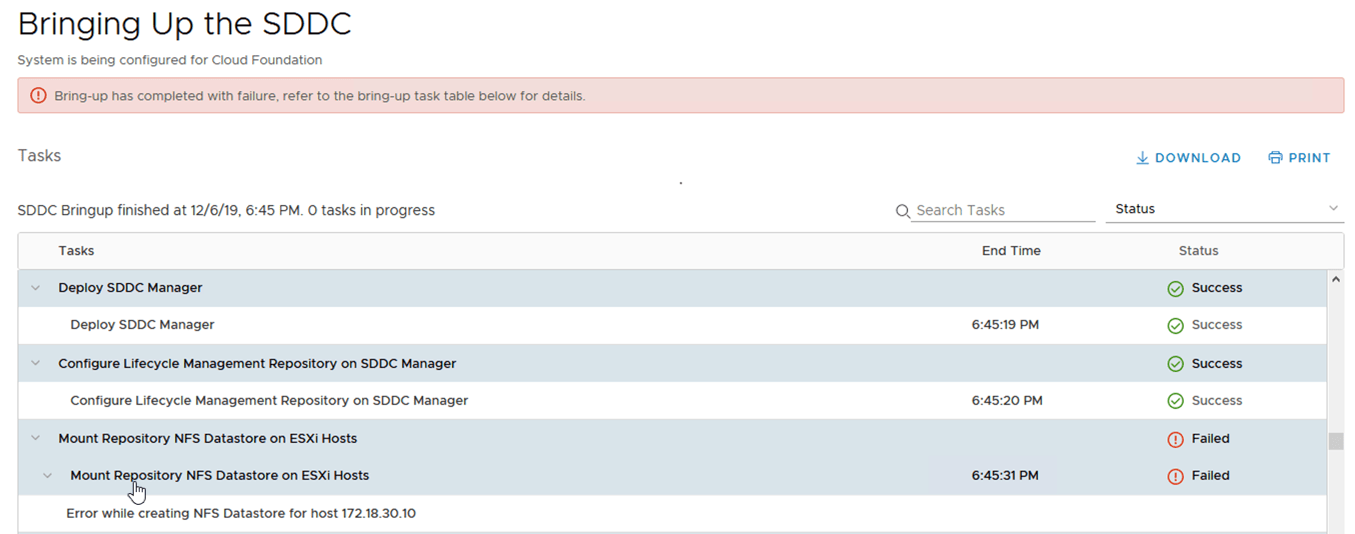

Whilst deploying my nested VCF environment for my home lab, I kept hitting the same issue over and over again, even when I rolled the environment back and redeployed it.

Error while creating NFS Datastore for host XXX.XXX.XXX.XXX

Looking into the debug log files on the Cloud Builder appliance found in the below location;

| vcf-bringup-debug.log |

/var/log/vmware/vcf/bringup/ |

You can see basically the same error message, and not much help.

ERROR [c.v.e.s.o.model.error.ErrorFactory,pool-3-thread-7] [TP9EK1] VCF_HOST_CREATE_NFS_DATASTORE_FAILED

And the log ends with the below comments, I’ve left my Task ID numbers in, but obviously these are unique to my bring up;

DEBUG [c.v.e.s.o.c.ProcessingTaskSubscriber,pool-3-thread-7] Collected the following errors for task with name CreateNFSDatastoreOnHostsAction and ID 7f000001-6ed0-12cd-816e-d1f7a33f006f: [ExecutionError [errorCode=null, errorResponse=LocalizableErrorResponse(messageBundle=com.vmware.vcf.common.fsm.plugins.action.hostmessages)]]

DEBUG [c.v.e.s.o.c.ProcessingTaskSubscriber,pool-3-thread-19] Invoking task CreateNFSDatastoreOnHostsAction.UNDO Description: Mount Repository NFS Datastore on ESXi Hosts, Plugin: HostPlugin, ParamBuilder null, Input map: {hosts=SDDCManagerConfiguration____13__hosts, nasDatastoreName=SDDCManagerConfiguration____13__nasDatastoreName, nfsRepoDirPath=SDDCManagerConfiguration____13__nfsRepoDirPath, repoVMIp=SDDCManagerConfiguration____13__repoVMIp}, Id: 7f000001-6ed0-12cd-816e-d1f7a33f006e ...

DEBUG [c.v.e.s.o.c.c.ContractParamBuilder,pool-3-thread-19] Contract task Mount Repository NFS Datastore on ESXi Hosts input: {"hosts":[{"address":"172.18.30.10","username":"root","password":"*****"},{"address":"172.18.30.11","username":"root","password":"*****"},{"address":"172.18.30.12","username":"root","password":"*****"},{"address":"172.18.30.13","username":"root","password":"*****"}],"nasDatastoreName":"lcm-bundle-repo","nfsRepoDirPath":"/nfs/vmware/vcf/nfs-mount","repoVMIp":"172.18.30.50"}

DEBUG [c.v.e.s.o.c.ProcessingTaskSubscriber,pool-3-thread-19] Collected the following errors for task with name CreateNFSDatastoreOnHostsAction and ID 7f000001-6ed0-12cd-816e-d1f7a33f006f: [ExecutionError [errorCode=null, errorResponse=LocalizableErrorResponse(messageBundle=com.vmware.vcf.common.fsm.plugins.action.hostmessages)]]

WARN [c.v.e.s.o.c.ProcessingOrchestratorImpl,pool-3-thread-10] Processing State completed with failure

INFO [c.v.e.s.o.core.OrchestratorImpl,pool-3-thread-15] End of Orchestration with FAILURE for Execution ID 8c9c5ab1-e48a-414e-9c4d-8936e6f12c91

The Fix

I struggled with this one for a while, at first I considered maybe an IP address conflict with the SDDC manager appliance, but it wasn’t that, I had the same issue after trying again with a different IP address.

I discussed this with our internal support, and I was pointed to the direction of KB 1005948.

When I followed the article, I noticed that the default vmkernel used to access my subnet and the subnet of my SDDC manager was VMK2, which is assigned for VSAN traffic; Continue reading Nested VCF Lab – Error while creating NFS datastore →