Did you know you can use the -f argument with kubectl get? Yep, me neither.

It’s pretty handy actually, as it shows the status of all the Kubernetes resources deployed using that file, or even a file at a URL!

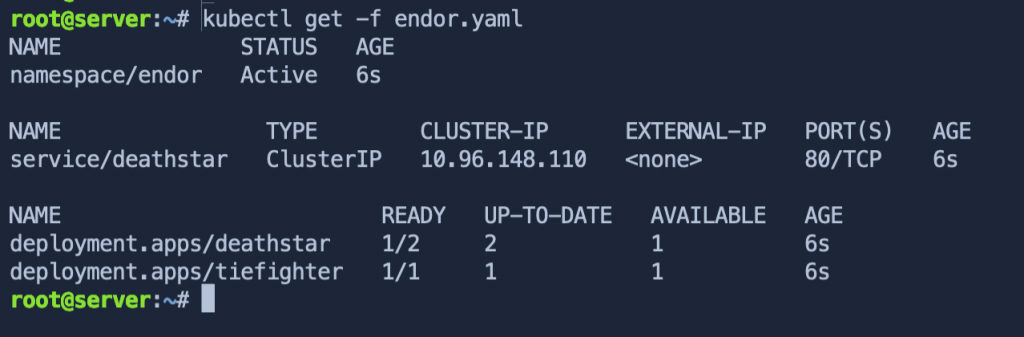

Below is a screenshot example using a file.

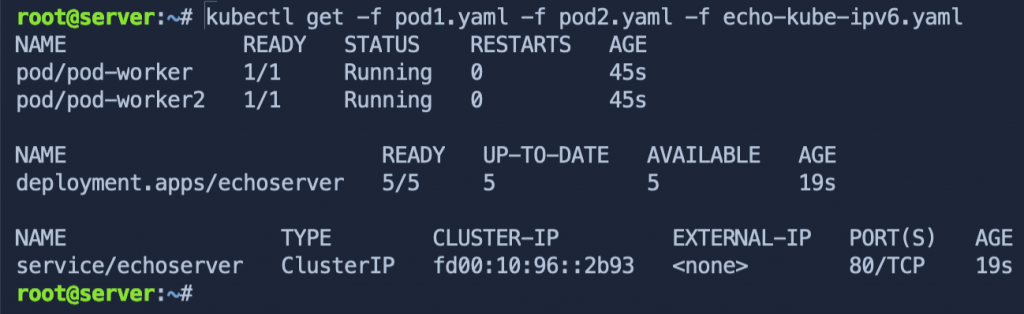
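For anyone who prefers text to screenshots, here is a rough sketch of the same idea. The manifest, the demo-web name and the nginx image are all made up for illustration, and the snippet only prints the kubectl commands rather than running them, since running them needs a live cluster.

```shell
# Hypothetical manifest: a minimal Deployment written to a temp directory.
workdir="$(mktemp -d)"
cat > "$workdir/deployment.yaml" <<'EOF'
apiVersion: apps/v1
kind: Deployment
metadata:
  name: demo-web
spec:
  replicas: 1
  selector:
    matchLabels:
      app: demo-web
  template:
    metadata:
      labels:
        app: demo-web
    spec:
      containers:
        - name: web
          image: nginx
EOF

# Print the commands instead of running them (they need a cluster).
# The same file is passed to both apply and get.
echo "kubectl apply -f $workdir/deployment.yaml"
echo "kubectl get -f $workdir/deployment.yaml"
```

Copy the two printed commands into a terminal that is pointed at your cluster to deploy the file and then check on what it created.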

You can also specify multiple files by adding -f {file} for each file you want to check (this works when deploying resources too!).

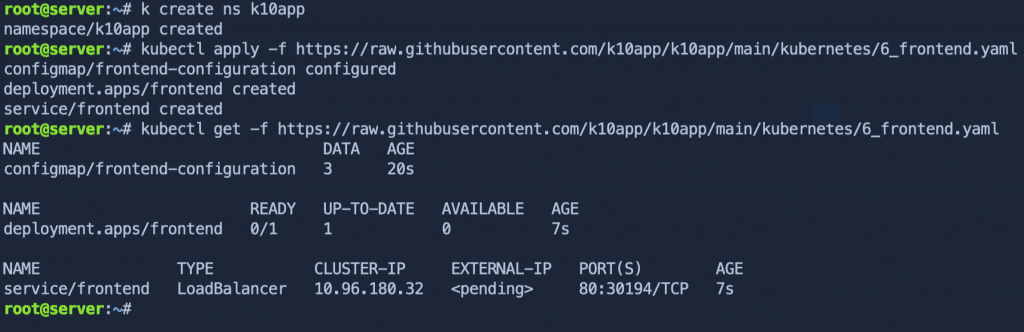
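If you have a handful of files, typing -f over and over gets old. Below is a small sketch of a helper that builds the command for you. The function name kubectl_get_cmd and the file names are my own inventions, and it assumes file names without spaces.

```shell
# Print a `kubectl get` command with one -f flag per manifest.
# Nothing is executed here; the function only builds the command line.
kubectl_get_cmd() {
  cmd="kubectl get"
  for manifest in "$@"; do
    cmd="$cmd -f $manifest"
  done
  printf '%s\n' "$cmd"
}

# Hypothetical file names.
kubectl_get_cmd deployment.yaml service.yaml
# prints: kubectl get -f deployment.yaml -f service.yaml
```

Once the printed command looks right, paste it into a terminal connected to your cluster.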

And another example, using a hyperlink as the file location.
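In text form, the URL variant might look something like the sketch below. The address is a placeholder on example.com, not a real manifest, so swap in the link to your own file.

```shell
# Hypothetical URL; replace it with the address of a real manifest.
manifest_url="https://example.com/manifests/deployment.yaml"

# Build and print the command; run it yourself against a live cluster.
get_from_url_cmd="kubectl get -f $manifest_url"
printf '%s\n' "$get_from_url_cmd"
```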

Hope this little tip helps someone!

Just for fun, here’s the ChatGPT write-up!

The command “kubectl get -f” is used in Kubernetes to retrieve information about Kubernetes resources defined in a file or multiple files. Let’s break down the command and its components:

- “kubectl”: It is the command-line interface (CLI) tool for interacting with Kubernetes clusters. It allows you to manage and control Kubernetes resources.

- “get”: It is the action or subcommand used to retrieve information about Kubernetes resources.

- “-f”: It is the short form of the “--filename” flag. It tells kubectl to take the resources from a file, a directory, or a URL rather than from a resource type and name typed on the command line. It is followed by the location of the Kubernetes resource definitions.

When you use “kubectl get -f <file>”, kubectl reads the file(s) provided and asks the cluster for the current state of the resources defined within them. The information can include the names, statuses, and other details of the resources.

For example, if you have a file named “deployment.yaml” that defines a Kubernetes Deployment resource, you can use the command “kubectl get -f deployment.yaml” to retrieve information about that specific Deployment resource.

You can also provide multiple files by separating them with commas or specifying a directory containing multiple resource files. For instance, “kubectl get -f file1.yaml,file2.yaml” or “kubectl get -f /path/to/files” (where /path/to/files is the directory path).
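As a rough sketch of the comma and directory forms described above, the snippet below joins a list of files with commas and prints the resulting commands. The join_manifests helper is a made-up name, the paths are the placeholders from the paragraph above, and the last line uses kubectl's -R (--recursive) flag to include subdirectories as well.

```shell
# Join manifest names with commas, e.g. "a.yaml,b.yaml".
join_manifests() {
  joined=""
  for manifest in "$@"; do
    # Add a comma before every name except the first.
    joined="${joined:+$joined,}$manifest"
  done
  printf '%s\n' "$joined"
}

# Print the commands rather than running them (they need a cluster).
echo "kubectl get -f $(join_manifests file1.yaml file2.yaml)"
echo "kubectl get -f /path/to/files"
echo "kubectl get -R -f /path/to/files"
```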

By using this command, you can quickly check on the Kubernetes resources defined in your files without needing to type out each resource type and name on the command line.

Regards
