The Issue

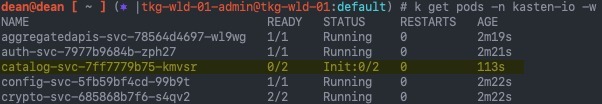

When I updated my Kasten application in my Kubernetes cluster, I found that one of the pods was stuck in “init” status.

```
dean@dean [ ~ ] (⎈ |tkg-wld-01-admin@tkg-wld-01:default) # k get pods -n kasten-io -w
NAME                                  READY   STATUS     RESTARTS   AGE
aggregatedapis-svc-78564d4697-wl9wg   1/1     Running    0          3m9s
auth-svc-7977b9684b-zph27             1/1     Running    0          3m11s
catalog-svc-7ff7779b75-kmvsr          0/2     Init:0/2   0          2m43s
```

Running kubectl describe on that pod showed that its volume could not be attached.

```
Events:
  Type     Reason       Age    From               Message
  ----     ------       ----   ----               -------
  Normal   Scheduled    2m58s  default-scheduler  Successfully assigned kasten-io/catalog-svc-7ff7779b75-kmvsr to tkg-wld-01-md-0-54598b8d99-rpqjf
  Warning  FailedMount  55s    kubelet            Unable to attach or mount volumes: unmounted volumes=[catalog-persistent-storage], unattached volumes=[k10-k10-token-lbqpw catalog-persistent-storage]: timed out waiting for the condition
```
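To see which PersistentVolume sits behind the failing catalog-persistent-storage volume, a small helper like the one below can be used. This is a minimal sketch, not part of kubectl: it assumes kubectl is configured for the affected cluster and that the pod volume is backed by a PersistentVolumeClaim, and pv_for_pod_volume is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper (sketch): print the PersistentVolume bound to a pod volume.
# Assumes kubectl is configured for the affected cluster and that the named
# pod volume is backed by a PersistentVolumeClaim.
#
# Usage: pv_for_pod_volume POD NAMESPACE VOLUME
pv_for_pod_volume() {
  local pod="$1" ns="$2" volume="$3" claim

  # Look up the claim name used by the named volume in the pod spec.
  claim="$(kubectl get pod "$pod" -n "$ns" \
    -o jsonpath="{.spec.volumes[?(@.name==\"$volume\")].persistentVolumeClaim.claimName}")" || return 1
  [ -n "$claim" ] || return 1

  # The bound PersistentVolume name is recorded in the claim's spec.volumeName.
  kubectl get pvc "$claim" -n "$ns" -o jsonpath='{.spec.volumeName}'
}

# Example, using the names from the pod events:
#   pv_for_pod_volume catalog-svc-7ff7779b75-kmvsr kasten-io catalog-persistent-storage
```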

The Cause

Somewhere along the line, stale VolumeAttachments had been left behind, linked to a Kubernetes node that no longer exists in my cluster. This appears to confuse the cluster about which node should be attaching the volume.

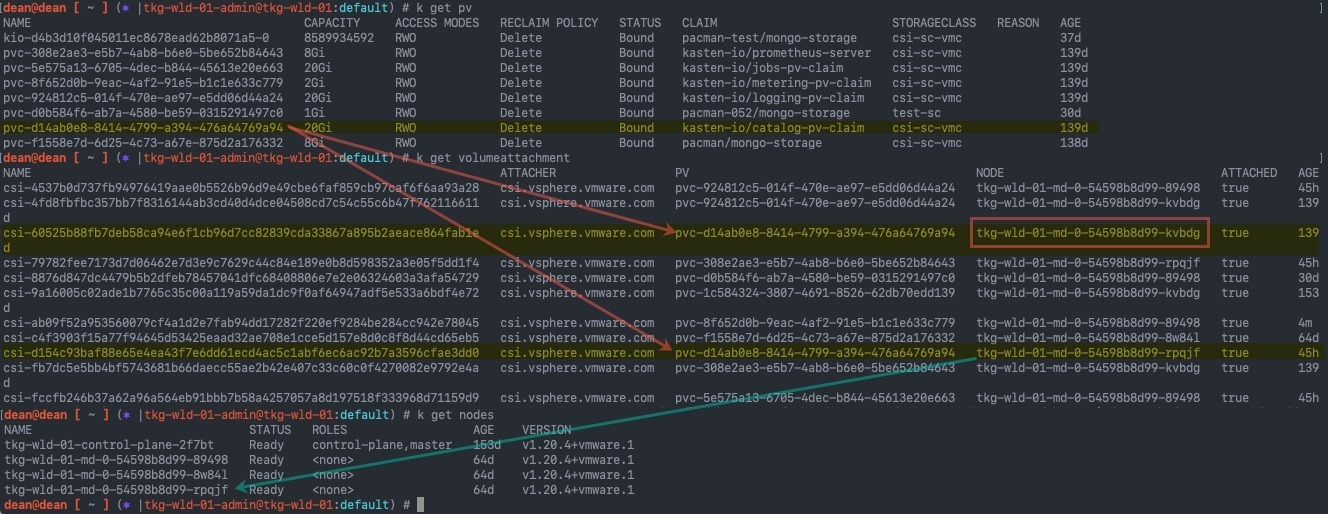

The image shows how I traced the problem:

- Find the name of the PersistentVolume bound to the claim behind the volume that failed to mount in the pod events (catalog-persistent-storage)

- Match that PersistentVolume to the available VolumeAttachments

- Compare the node referenced by each VolumeAttachment with the nodes that actually exist in the cluster

- I’ve highlighted the missing node in the red box
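The steps above can be scripted. The sketch below assumes kubectl is configured for the affected cluster; it lists every VolumeAttachment whose spec.nodeName does not match an existing Node object, optionally narrowed to a single PersistentVolume. find_stale_volumeattachments is a hypothetical helper, and its output is meant to be reviewed before anything is deleted.

```shell
#!/bin/sh
# Hypothetical helper (sketch): list VolumeAttachments that reference a node
# which no longer exists. Assumes kubectl is configured for the affected cluster.
#
# Usage:  find_stale_volumeattachments [PV_NAME]
# Output: one "<volumeattachment> <node> <persistentvolume>" line per stale entry.
find_stale_volumeattachments() {
  local pv_filter="${1:-}" nodes

  # Names of the nodes that currently exist, one per line.
  nodes="$(kubectl get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"\n"}{end}')" || return 1

  # For each VolumeAttachment, print its name, node and PersistentVolume.
  kubectl get volumeattachments \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.spec.nodeName}{" "}{.spec.source.persistentVolumeName}{"\n"}{end}' |
    while read -r va node pv; do
      # Optionally keep only attachments for one PersistentVolume.
      if [ -n "$pv_filter" ] && [ "$pv" != "$pv_filter" ]; then
        continue
      fi
      # Stale: the referenced node is not in the current node list.
      if ! printf '%s\n' "$nodes" | grep -qxF -- "$node"; then
        printf '%s %s %s\n' "$va" "$node" "$pv"
      fi
    done
}

# Example:
#   find_stale_volumeattachments            # all stale attachments
#   find_stale_volumeattachments <pv_name>  # only those for one volume
```

Comparing against the live node list, rather than guessing from names, keeps the check tied to what the API server actually knows about.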

The Fix

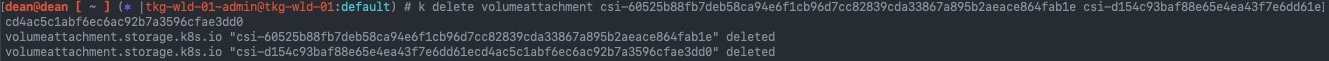

The fix is to remove the stale VolumeAttachment.

```shell
kubectl delete volumeattachment [volumeattachment_name]
```
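If you want a guard rail before deleting, the sketch below only deletes a VolumeAttachment when the node it references can no longer be found. It assumes kubectl is configured for the affected cluster; delete_volumeattachment_if_stale is a hypothetical helper, not a kubectl feature.

```shell
#!/bin/sh
# Hypothetical helper (sketch): delete a VolumeAttachment only if its node is gone.
# Assumes kubectl is configured for the affected cluster.
#
# Usage: delete_volumeattachment_if_stale VOLUMEATTACHMENT_NAME
delete_volumeattachment_if_stale() {
  local va="$1" node found

  # Read the node this attachment says the volume is attached to.
  node="$(kubectl get volumeattachment "$va" -o jsonpath='{.spec.nodeName}')" || return 1
  [ -n "$node" ] || return 1

  # --ignore-not-found prints nothing when the node is missing; any other
  # error (e.g. API unreachable) aborts instead of deleting.
  found="$(kubectl get node "$node" --ignore-not-found -o name)" || return 1
  if [ -n "$found" ]; then
    echo "node $node still exists; not deleting $va" >&2
    return 1
  fi

  kubectl delete volumeattachment "$va"
}
```

Checking for an explicit "not found" rather than any failure avoids deleting an attachment just because the node lookup itself failed.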

After this, your pod should eventually retry the attachment and start. Alternatively, you can delete the pod and let Kubernetes replace it for you (as long as it is managed by a Deployment or another controller that manages your application).

Regards

![kubelet: Unable to attach or mount volumes: timed out waiting for the condition](https://veducate.co.uk/wp-content/uploads/2021/09/kubelet-Unable-to-attach-or-mount-volumes-unmounted-volumescatalog-persistent-storage-unattached-volumesk10-k10-token-lbqpw-catalog-persistent-storage-timed-out-waiting-for-the-condition.jpg)