The Issue

I had to remove a demo EKS Cluster where I had screwed up an install of a Service Mesh. Unfortunately, it was left in a rather terrible state to clean up, hence the need to just delete it.

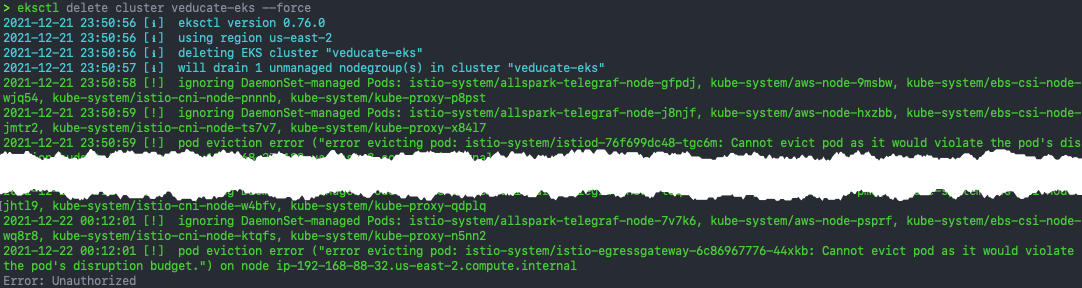

When I tried the usual eksctl delete command, including with the force argument, I was hitting errors such as:

2021-12-21 23:52:22 [!] pod eviction error ("error evicting pod: istio-system/istiod-76f699dc48-tgc6m: Cannot evict pod as it would violate the pod's disruption budget.") on node ip-192-168-27-182.us-east-2.compute.internal

With a final error output of:

Error: Unauthorized

The Cause

Well, the error message does call out the cause: moving the existing pods to other nodes is failing because of the configured settings. Essentially, EKS will try to drain all the nodes and shut everything down nicely when it deletes the cluster. It doesn’t just shut everything down and wipe it. This is because inside Kubernetes there are several finalizers that call out to AWS components (thanks to the integrations) and nicely clean things up (in theory).

To get around this, I first tried the following command, thinking that if I deleted the nodegroup without waiting for a drain, it would bypass the issue:

eksctl delete nodegroup standard --cluster veducate-eks --drain=false --disable-eviction

However, this didn’t allow me to delete the cluster; I still got the same error messages.

The Fix

So, back to the error message, where I realised the answer was staring me in the face!

Cannot evict pod as it would violate the pod's disruption budget

What is a Pod Disruption Budget? It’s essentially a way to protect the availability of your pods against someone accidentally killing them.

A PDB limits the number of Pods of a replicated application that are down simultaneously from voluntary disruptions. For example, a quorum-based application would like to ensure that the number of replicas running is never brought below the number needed for a quorum. A web front end might want to ensure that the number of replicas serving load never falls below a certain percentage of the total.

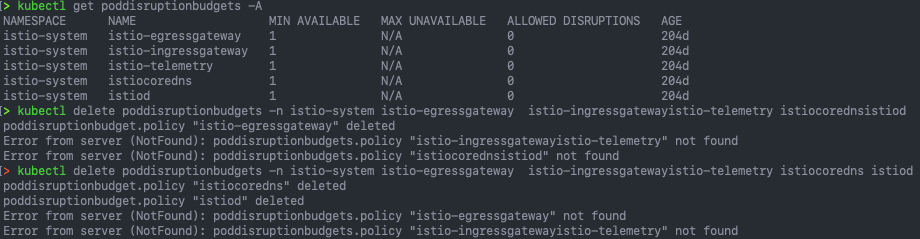
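To make the eviction error more concrete, here is a minimal sketch of the arithmetic behind a PDB, assuming a budget expressed as an integer `minAvailable`. The function name `allowed_disruptions` and the replica counts are illustrative assumptions, not values read from my cluster. The idea is that the number of evictions Kubernetes will permit is the count of healthy pods minus the minimum that must stay up, and never less than zero.

```shell
# Hypothetical helper: how many voluntary evictions a minAvailable-style
# PDB would permit right now.
#   $1 = currently healthy pods, $2 = minAvailable
allowed_disruptions() {
  allowed=$(( $1 - $2 ))
  # A budget can never allow a negative number of disruptions.
  if [ "$allowed" -lt 0 ]; then
    allowed=0
  fi
  echo "$allowed"
}

# One healthy replica with minAvailable=1: nothing may be evicted,
# so a node drain that needs to move this pod cannot proceed.
allowed_disruptions 1 1   # prints 0

# Three healthy replicas with minAvailable=2: one pod may be evicted at a time.
allowed_disruptions 3 2   # prints 1
```

A single-replica workload with a budget of one is the case that blocks a drain outright, which matches the kind of error shown above.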

To find all configured Pod Disruption Budgets:

kubectl get poddisruptionbudget -A
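If the list is long, it may help to narrow it down to the budgets that are actually blocking evictions. The following is a rough sketch, assuming each input row has the form "namespace name disruptionsAllowed"; the helper name `blocking_pdbs` and the sample rows are made up for illustration.

```shell
# Hypothetical helper: reads "namespace name disruptionsAllowed" rows on
# stdin and prints only the budgets that currently allow zero disruptions.
blocking_pdbs() {
  awk '$3 == 0 { print $1, $2 }'
}

# Sample rows stand in for real cluster output so the sketch runs anywhere.
printf '%s\n' 'istio-system istiod 0' 'default web 1' | blocking_pdbs

# Against a live cluster, the rows could come from something like:
# kubectl get poddisruptionbudget -A --no-headers \
#   -o custom-columns=NS:.metadata.namespace,NAME:.metadata.name,ALLOWED:.status.disruptionsAllowed \
#   | blocking_pdbs
```

With the sample rows, only `istio-system istiod` is printed, since it is the only budget with no disruptions left to give.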

Then delete as necessary:

kubectl delete poddisruptionbudget {name} -n {namespace}

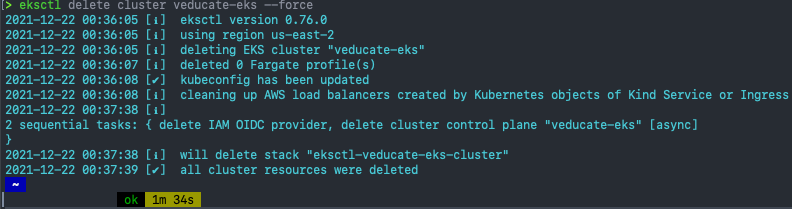
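When there are several budgets to remove, typing each delete by hand gets tedious. As a cautious sketch, the helper below only prints the delete commands so they can be reviewed before anything is run. The name `pdb_delete_commands` is hypothetical, and the input is assumed to be "namespace name" rows, one per line.

```shell
# Hypothetical helper: turns "namespace name" rows on stdin into
# kubectl delete commands, printed for review rather than executed.
pdb_delete_commands() {
  while read -r ns name; do
    # Skip blank or incomplete rows.
    if [ -z "$ns" ] || [ -z "$name" ]; then
      continue
    fi
    printf 'kubectl delete poddisruptionbudget %s -n %s\n' "$name" "$ns"
  done
}

# Sample row stands in for real cluster output.
printf '%s\n' 'istio-system istiod' | pdb_delete_commands

# Against a live cluster, the rows could come from something like:
# kubectl get poddisruptionbudget -A --no-headers \
#   -o custom-columns=NS:.metadata.namespace,NAME:.metadata.name \
#   | pdb_delete_commands
```

Printing first is a deliberate choice: on a demo cluster that is about to be deleted it hardly matters, but on anything shared you would want to read the list before piping it to a shell.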

Finally, you should be able to delete your cluster.
