This walkthrough details the technical configuration for using vRA Code Stream to deploy vSphere with Tanzu supervisor namespaces and guest clusters.

Requirement

For a recent customer proof-of-concept, we wanted to show the full automation capabilities of vRealize Automation and combine them with the consumption of vSphere with Tanzu.

The end goal was to use Cloud Assembly and Code Stream to cover several automation tasks, and then offer them as a self-service capability via a catalog item for an end user to consume.

High Level Steps

To achieve our requirements, we’ll be configuring the following:

- Cloud Assembly

- VCF SDDC Manager Integration

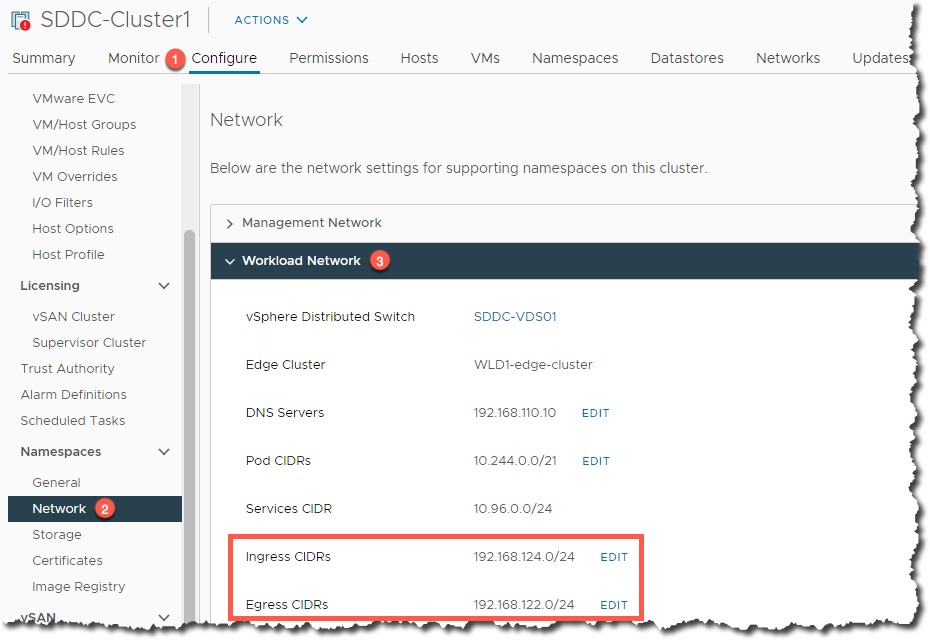

- Kubernetes Cloud Zone – Tanzu Supervisor Cluster

- Cloud Template to deploy a new Tanzu Supervisor Namespace

- Code Stream

- Tasks to provision a new Supervisor Namespace using the Cloud Assembly Template

- Tasks to provision a new Tanzu Guest Cluster inside of the Supervisor namespace using CI Tasks and the kubectl command line tool

- Tasks to create a service account inside of the Tanzu Guest Cluster

- Tasks to create Kubernetes endpoint for the new Tanzu Guest Cluster in both Cloud Assembly and Code Stream

- Service Broker

- Catalog Item to allow End-Users to provision a brand new Tanzu Guest Cluster in its own Supervisor Namespace
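
To make the guest cluster step more concrete, here is a minimal sketch of the kind of manifest a Code Stream CI task could hand to kubectl. It is not the code from the repository mentioned in this post. It assumes the `run.tanzu.vmware.com/v1alpha1` `TanzuKubernetesCluster` API that ships with this generation of vSphere with Tanzu, and the function name, cluster name, namespace, version, VM class and storage class are all hypothetical placeholders you would replace with values from your own environment.

```python
import json


def build_guest_cluster_manifest(name, namespace, k8s_version,
                                 vm_class, storage_class,
                                 control_plane_count=1, worker_count=2):
    """Return a TanzuKubernetesCluster manifest as a plain dict.

    Assumption: the v1alpha1 schema (spec.distribution / spec.topology).
    Check the API version exposed by your Supervisor Cluster before use.
    """
    def node_pool(count):
        # Control plane and workers share the same shape in this schema.
        return {"count": count, "class": vm_class, "storageClass": storage_class}

    return {
        "apiVersion": "run.tanzu.vmware.com/v1alpha1",
        "kind": "TanzuKubernetesCluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "distribution": {"version": k8s_version},
            "topology": {
                "controlPlane": node_pool(control_plane_count),
                "workers": node_pool(worker_count),
            },
        },
    }


if __name__ == "__main__":
    # All values below are illustrative placeholders, not lab values.
    manifest = build_guest_cluster_manifest(
        name="demo-cluster",
        namespace="demo-namespace",
        k8s_version="v1.18",
        vm_class="best-effort-small",
        storage_class="example-storage-policy",
    )
    # kubectl accepts JSON as well as YAML, so no YAML library is needed.
    print(json.dumps(manifest, indent=2))
```

Once authenticated against the Supervisor Cluster, the printed JSON could be piped into `kubectl apply -f -` from the CI task. Generating the manifest from parameters rather than keeping a static file makes it easier to feed in the values an end user picks in the catalog request.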

Prerequisites

In my lab environment I have the following deployed:

- VMware Cloud Foundation 4.2

- With Workload Management enabled (vSphere with Tanzu)

- vRealize Automation 8.3

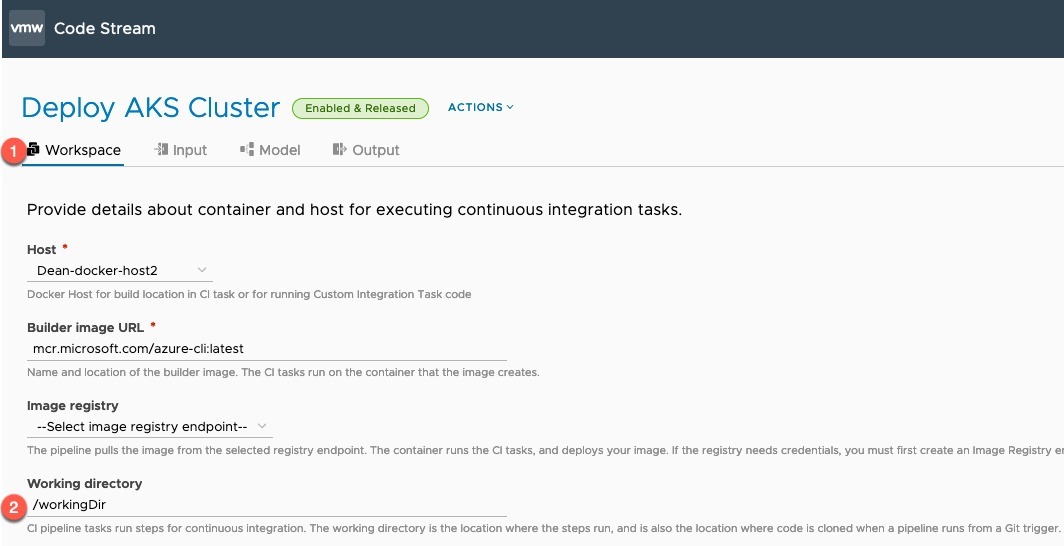

- A Docker host to be used by Code Stream

I have placed the various bits of code in my GitHub repository here.

Configuring Cloud Assembly to deploy Tanzu supervisor namespaces

This configuration is detailed in this blog post, so I'll just cover the high-level configuration below.

- Configure an integration for SDDC Manager under the Infrastructure tab > Integrations
