OpenShift Container Platform defaults to using an in-tree (non-CSI) plug-in to provision vSphere storage.

What’s New?

In OpenShift 4.9, the out-of-the-box installation of the vSphere CSI driver was a Technology Preview feature. In OpenShift 4.10, it has now moved to general availability (GA)!

This means that when you bring up a cluster using installer-provisioned infrastructure (IPI), the vSphere CSI driver is enabled automatically.

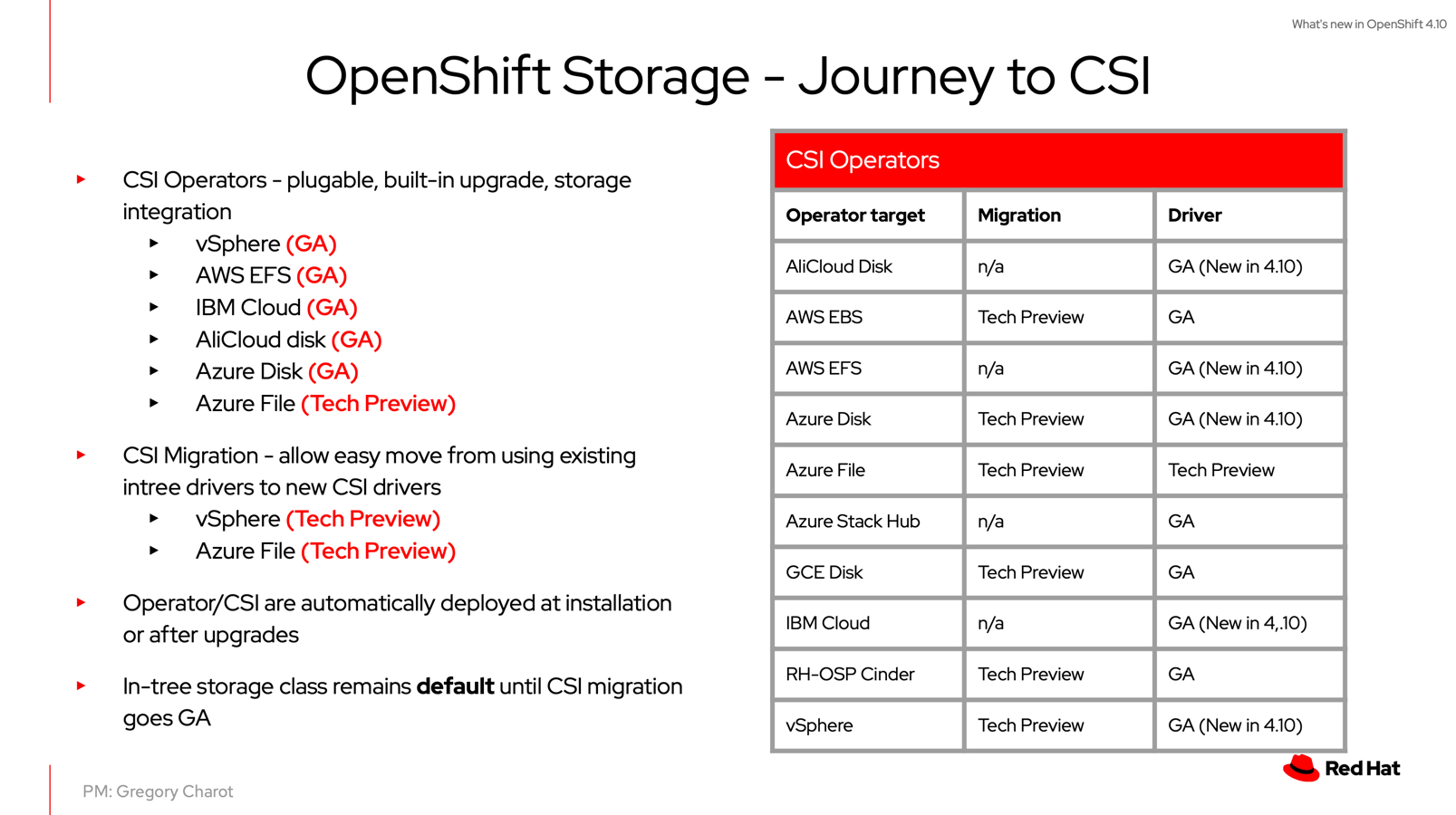

This is part of OpenShift’s ongoing “journey” to CSI drivers. As you may be aware, the original storage implementations, known as “in-tree” drivers, will be removed from future versions of Kubernetes. They are making way for CSI drivers, which are maintained outside the core Kubernetes code and provide a better way to integrate storage.

Therefore, the Red Hat team have been working with the VMware storage team on the open-source, upstream vSphere CSI driver, to integrate it into the OpenShift installation.

The aim here is two-fold: to take further advantage of the VMware platform, and to enable CSI Migration, so that it is easier for customers to migrate their existing persistent data from in-tree provisioned storage constructs to CSI provisioned constructs.

How do I enable this?

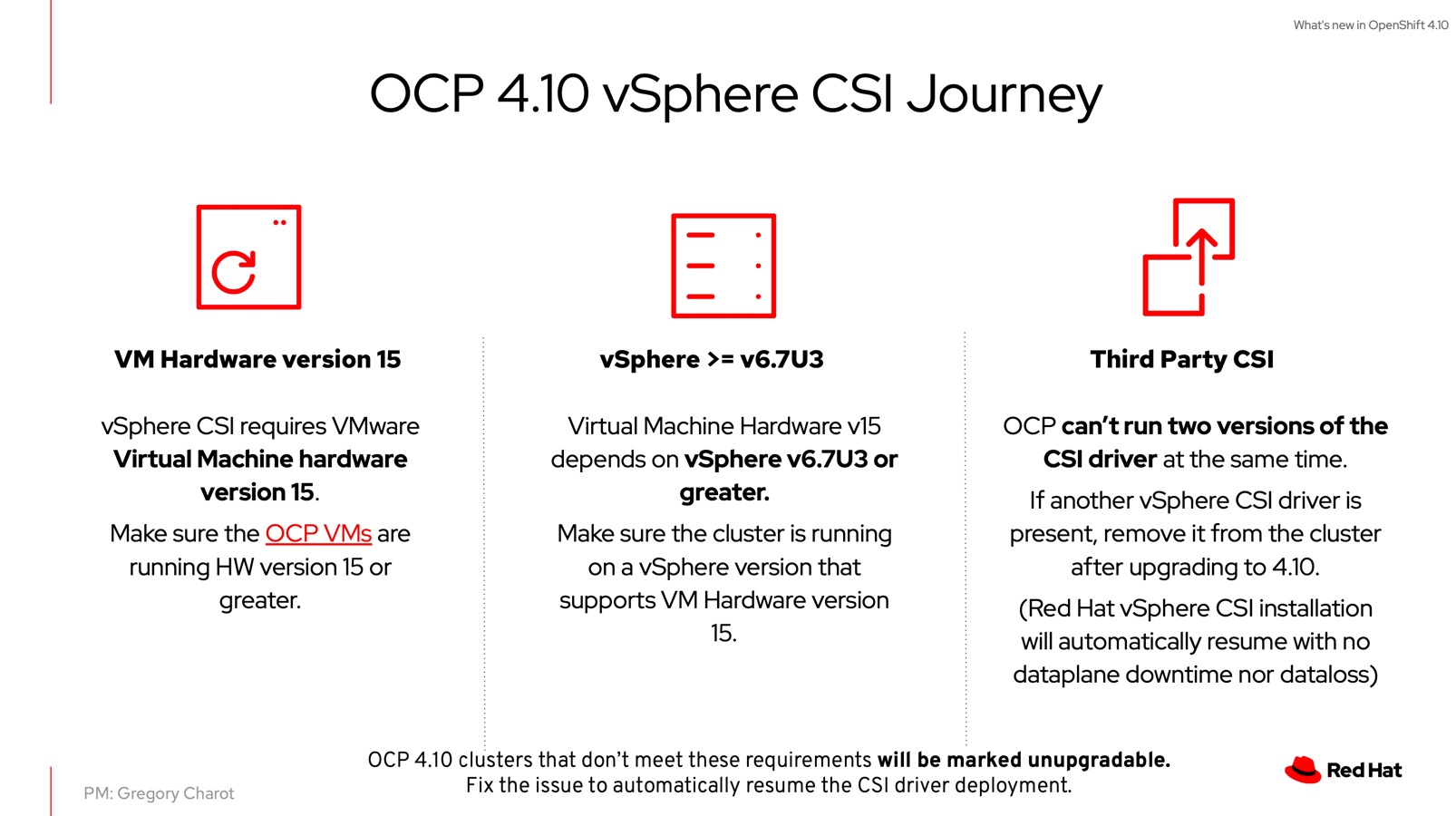

Below are some of the high-level requirements for the vSphere CSI Driver and OpenShift.

As mentioned before, Red Hat have used the upstream vSphere CSI Driver here. (You will even see commits from Red Hat for code improvements and enhancements.)

During your IPI installation, the CSI driver will be configured using the same credentials used to bring up the cluster on your VMware vSphere platform.
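For reference, those credentials live in the `platform.vsphere` section of `install-config.yaml`, which is also where the installation's datastore is defined. The sketch below is a minimal, hypothetical excerpt of that section, built as a Python dict and printed as JSON. All values are placeholders, and only the credential and datastore fields are shown, so check the full field list against the installation documentation for your OpenShift version.

```python
import json


def vsphere_platform_excerpt(vcenter: str, username: str, password: str,
                             datacenter: str, datastore: str) -> dict:
    """Return a partial install-config.yaml 'platform' section for vSphere IPI.

    Only the fields relevant to the CSI driver are included; a real
    install-config.yaml needs more (cluster, network, VIPs and so on).
    """
    return {
        "platform": {
            "vsphere": {
                "vCenter": vcenter,
                # The same credentials are reused to configure the CSI driver.
                "username": username,
                "password": password,
                "datacenter": datacenter,
                # The datastore that the installation uses for storage.
                "defaultDatastore": datastore,
            }
        }
    }


if __name__ == "__main__":
    # Hypothetical placeholder values; never commit real credentials.
    excerpt = vsphere_platform_excerpt(
        "vcenter.example.com", "administrator@vsphere.local", "changeme",
        "Datacenter", "Datastore1")
    print(json.dumps(excerpt, indent=2))
```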

What does it look like?

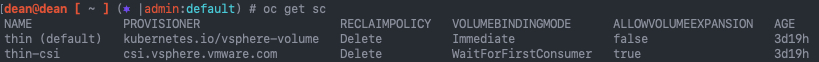

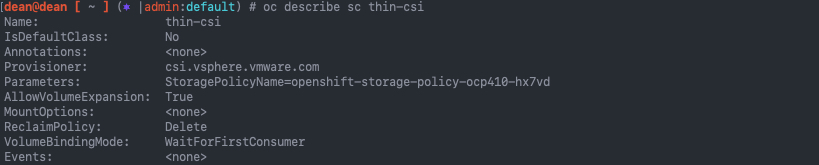

Once the cluster is up and running, you will see a second Storage Class, “thin-csi”, alongside the default in-tree “thin” class.


Below is the configuration. There are two things to note here:

- The parameters reference a vSphere storage policy, which defines the datastore to use when creating persistent volumes.

- The volume binding mode is set to “WaitForFirstConsumer”.

  - This means a volume is not created until a Pod that uses a persistent volume claim (PVC) from this storage class is scheduled.

You can also create your own Storage Class using the provisioner “csi.vsphere.vmware.com”, such as in this example.
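As a rough sketch of such a custom class, the Python below builds a StorageClass manifest and prints it as JSON, which `oc apply -f -` accepts just as it does YAML. It mirrors the two settings noted above: a `storagepolicyname` parameter (the key used in the upstream vSphere CSI driver documentation) and `WaitForFirstConsumer` binding. The class name `gold-csi` and policy name `gold-policy` are hypothetical, and the policy is assumed to already exist in vCenter.

```python
import json


def vsphere_csi_storage_class(name: str, storage_policy: str) -> dict:
    """Build a StorageClass manifest that uses the vSphere CSI provisioner."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": "csi.vsphere.vmware.com",
        "parameters": {
            # vSphere storage policy that decides which datastore backs new volumes.
            "storagepolicyname": storage_policy,
        },
        "reclaimPolicy": "Delete",
        # Do not provision a volume until a Pod using the claim is scheduled.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


if __name__ == "__main__":
    # Hypothetical names; the storage policy must already exist in vCenter.
    print(json.dumps(vsphere_csi_storage_class("gold-csi", "gold-policy"), indent=2))
```

Saved as, say, `storage_class.py` (a hypothetical file name), it could be applied with `python storage_class.py | oc apply -f -`.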

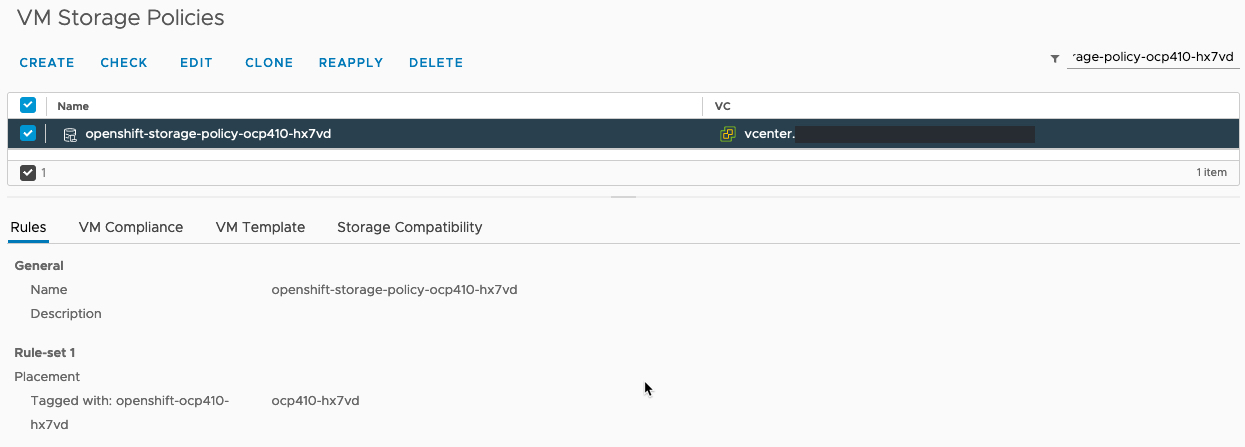

In your vCenter, you will find a storage policy that was created automatically by the OpenShift Installer.

It is linked to the datastore you provided in the “install-config.yaml” file.

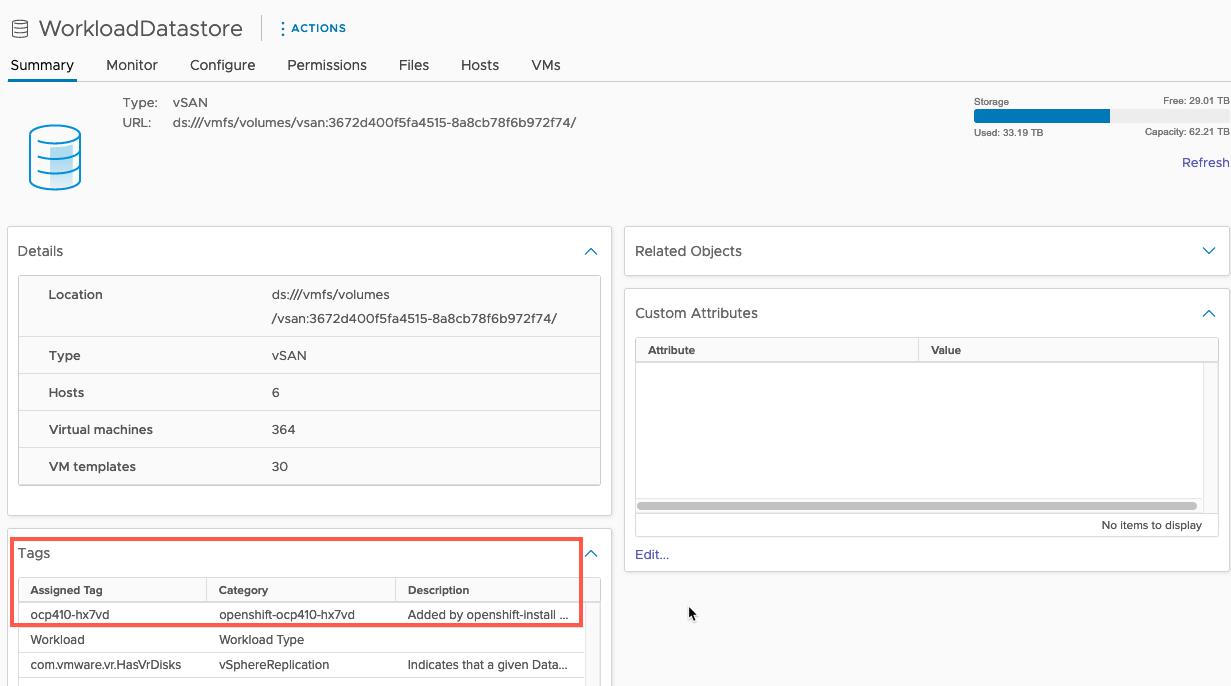

Below you can see that the datastore provided for this installation now has a vSphere tag applied automatically. If you have used the IPI method with earlier versions of OpenShift on VMware, you will have noticed that the VMs it created also had tags applied.

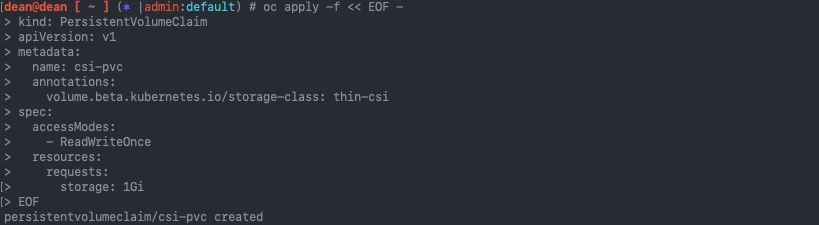

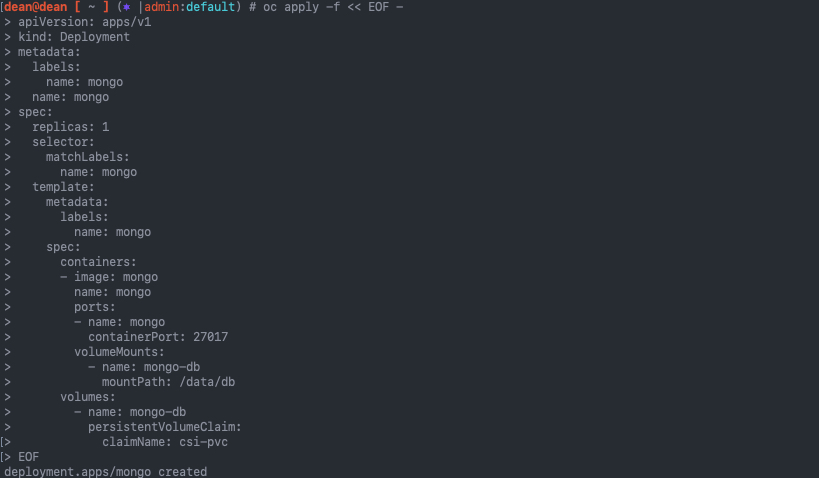

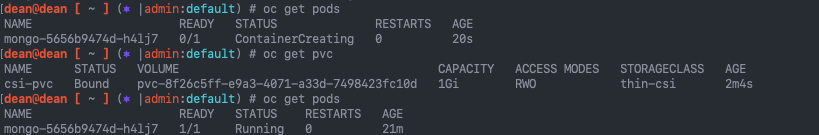

Back in our OpenShift cluster, I’ve created a persistent volume claim (PVC) using the “thin-csi” Storage Class.

Because of the “WaitForFirstConsumer” binding mode, the PVC status stays “Pending” until we create a Pod that uses it.
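A claim like this one can be sketched as follows. The claim name `csi-pvc-demo`, the `1Gi` size and the `ReadWriteOnce` access mode are assumptions for illustration; only the `thin-csi` class name comes from this cluster.

```python
import json


def thin_csi_pvc(name: str, size: str = "1Gi", storage_class: str = "thin-csi") -> dict:
    """Build a PersistentVolumeClaim manifest that requests a CSI storage class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            # ReadWriteOnce: the volume can be mounted read-write by a single node.
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": storage_class,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # "csi-pvc-demo" is a hypothetical claim name.
    print(json.dumps(thin_csi_pvc("csi-pvc-demo"), indent=2))
```

Once applied with `oc apply -f -`, the claim should sit in “Pending” until a Pod consumes it.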

Below is an example Pod.
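In text form, a Pod that consumes such a claim might look like the sketch below. The Pod name, the UBI minimal image, the `sleep` command and the `/data` mount path are all assumptions. The important part is the `persistentVolumeClaim.claimName` reference, because scheduling a Pod that references the claim is what triggers provisioning under “WaitForFirstConsumer”.

```python
import json


def pod_with_claim(name: str, claim_name: str,
                   image: str = "registry.access.redhat.com/ubi8/ubi-minimal") -> dict:
    """Build a Pod manifest that mounts an existing PersistentVolumeClaim at /data."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "containers": [{
                "name": "app",
                "image": image,
                # Keep the container running so the volume stays mounted.
                "command": ["sleep", "3600"],
                "volumeMounts": [{"name": "data", "mountPath": "/data"}],
            }],
            "volumes": [{
                "name": "data",
                # The claim reference is what ties this Pod to the pending PVC.
                "persistentVolumeClaim": {"claimName": claim_name},
            }],
        },
    }


if __name__ == "__main__":
    # Hypothetical Pod and claim names.
    print(json.dumps(pod_with_claim("csi-pod-demo", "csi-pvc-demo"), indent=2))
```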

Now we can see that once the Pod is created, the PVC status changes to “Bound”, as expected.
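To check the same thing from a script rather than the console, one option is to read `status.phase` from the JSON that `oc get pvc <name> -o json` returns. The helper below is a minimal sketch that only parses that output; how you obtain the JSON (a saved file, `subprocess`, and so on) is up to you, and the "Unknown" fallback is an assumption of this sketch.

```python
import json


def pvc_phase(pvc_json: str) -> str:
    """Return status.phase from `oc get pvc <name> -o json` output."""
    claim = json.loads(pvc_json)
    # A claim with no status block yet falls back to "Unknown" here.
    return claim.get("status", {}).get("phase", "Unknown")


def is_bound(pvc_json: str) -> bool:
    """True once the claim has been bound to a provisioned volume."""
    return pvc_phase(pvc_json) == "Bound"


if __name__ == "__main__":
    # Abbreviated sample outputs, before and after the Pod is created.
    pending = '{"kind": "PersistentVolumeClaim", "status": {"phase": "Pending"}}'
    bound = '{"kind": "PersistentVolumeClaim", "status": {"phase": "Bound"}}'
    print(pvc_phase(pending), is_bound(bound))  # prints: Pending True
```

For a quick manual check, `oc get pvc <name> -o jsonpath='{.status.phase}'` prints just the phase.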

What happens if I upgrade my existing OpenShift Cluster to 4.10?

If you already have the vSphere CSI driver installed, nothing changes: your original CSI configuration stays in place. However, make sure the deployed driver version supports your version of OpenShift.

If you are using the vSphere Driver Operator to manage the vSphere CSI deployment, the same applies.

If you have no vSphere CSI driver installed at all, OpenShift will provision it for you.

Who do I go to for support?

If you have an issue:

- Inside your OpenShift cluster > Red Hat.

- With your vSphere environment > VMware.

If you need both companies to work together, that’s fine: there are agreements in place for both companies to provide the best support for joint customers. As a customer, however, you may need to open a ticket with each vendor yourself and share the ticket numbers with both. This is standard practice across most vendors I’ve worked with in my time in technology.

Regards