The Issue

Yep, I forgot my password. So I followed the official documentation to reset my local admin password.

- SSH to your vRLI appliance (primary node if it’s a cluster), as the root user

- Run this script, which will output a new password:

li-reset-admin-passwd.sh

The issue continued: even after I was presented with the new password, I still couldn’t log in!

The Cause

Essentially, after several failed attempts to remember my password, I had locked out the local admin account.

However, the vRealize Log Insight UI doesn’t tell you this; it just continues to report invalid credentials.

The Fix

- SSH to your vRLI appliance (primary node if it’s a cluster), as the root user

First, we will check that the local user account is indeed locked out.

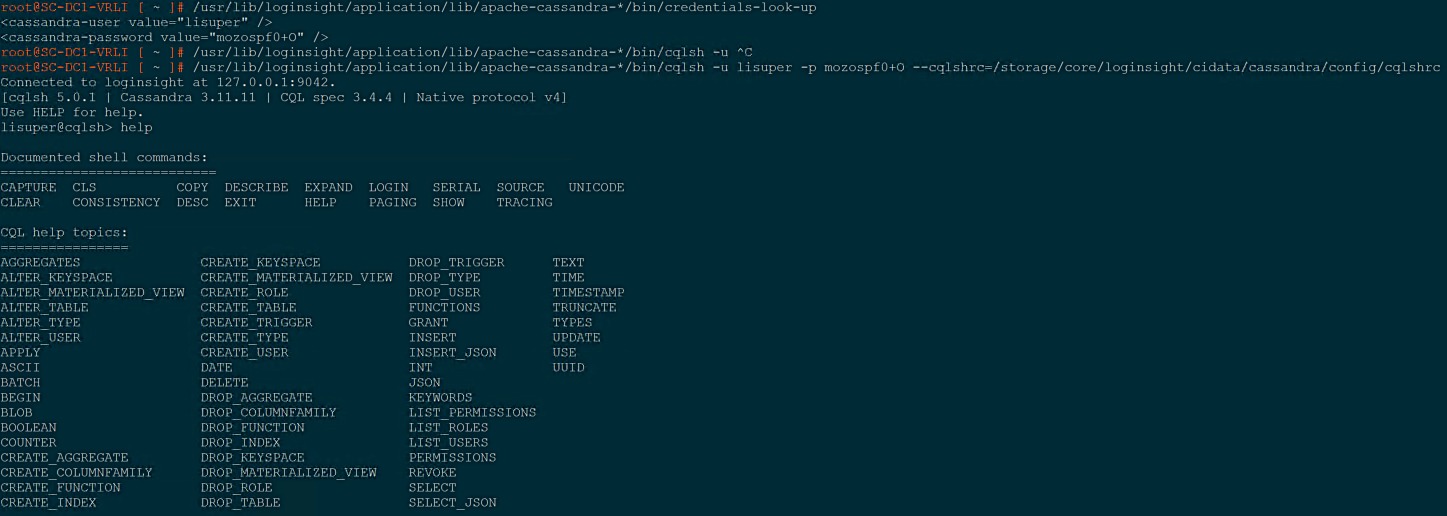

# We need to get the Cassandra DB credentials and log in

root@SC-DC1-VRLI [ ~ ]# /usr/lib/loginsight/application/lib/apache-cassandra-*/bin/credentials-look-up

# The output will look something like this

<cassandra-user value="lisuper" />

<cassandra-password value="mozospf0+O" />

# We log in with the following command, replacing {password} with the value from the output above

root@SC-DC1-VRLI [ ~ ]# /usr/lib/loginsight/application/lib/apache-cassandra-*/bin/cqlsh -u lisuper -p {password} --cqlshrc=/storage/core/loginsight/cidata/cassandra/config/cqlshrc

# Change to use the correct database

lisuper@cqlsh:logdb> USE logdb;

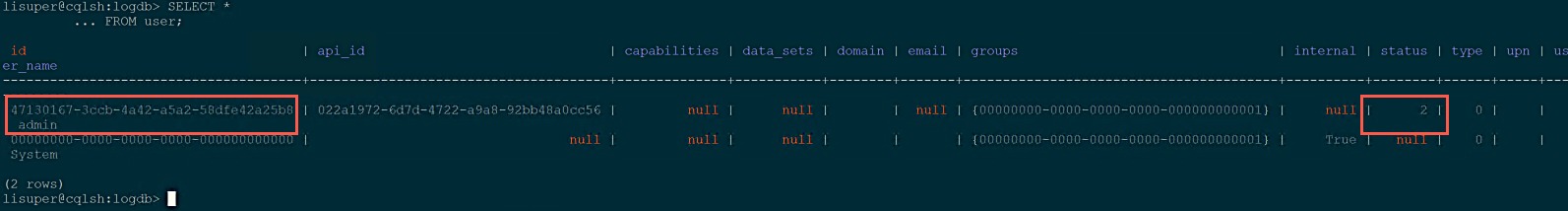
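If you would rather not copy the credentials by hand, the lookup output can be parsed with `sed`. This is only a minimal sketch: it assumes the output has exactly the `<tag value="..." />` shape shown above, and `cred_value` is a hypothetical helper name, not something shipped with vRLI.

```shell
# Hypothetical helper (not part of vRLI): read credentials-look-up style
# output on stdin and print the value="..." attribute of the given tag.
cred_value() {
  sed -n "s/.*<$1 value=\"\([^\"]*\)\".*/\1/p" | head -n 1
}

# Possible usage on the appliance (left commented out, untested here):
# out=$(/usr/lib/loginsight/application/lib/apache-cassandra-*/bin/credentials-look-up)
# user=$(printf '%s\n' "$out" | cred_value cassandra-user)
# pass=$(printf '%s\n' "$out" | cred_value cassandra-password)
```

The extracted values could then be passed to `cqlsh -u` and `-p` in place of the hand-typed ones.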

Now check the account status to see whether it is locked out:

- Status = 1 – Account is active

- Status = 2 – Account is locked out

# Run the below command to get all the rows from the user table

lisuper@cqlsh:logdb> SELECT *

... FROM user;

# The output will look like the following. Note the id (first column) and confirm that the status is set to 2

id | api_id | capabilities | data_sets | domain | email | groups | internal | status | type | upn | user_name

--------------------------------------+--------------------------------------+--------------+-----------+--------+-------+----------------------------------------+----------+--------+------+-----+-----------

47130167-3ccb-4a42-a5a2-58dfe42a25b8 | 022a1972-6d7d-4722-a9a8-92bb48a0cc56 | null | null | | null | {00000000-0000-0000-0000-000000000001} | null | 2 | 0 | | admin

00000000-0000-0000-0000-000000000000 | null | null | null | | | {00000000-0000-0000-0000-000000000001} | True | null | 0 | | System

(2 rows)

lisuper@cqlsh:logdb>

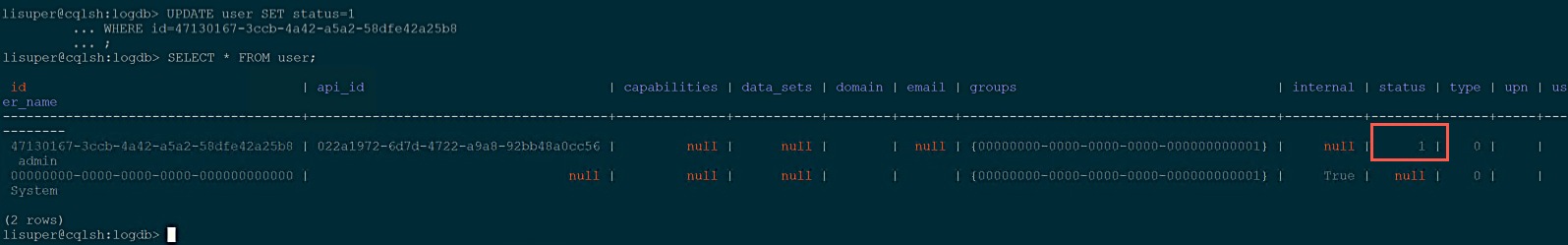
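With more than a couple of users, the wide table gets hard to read. The sketch below filters cqlsh's pipe-delimited output down to the locked accounts (status 2, as listed above). It assumes the output layout shown above, with a header row naming the `id`, `status` and `user_name` columns. `locked_users` is a hypothetical name for illustration.

```shell
# Hypothetical filter: read "SELECT * FROM user;" output on stdin and print
# "<id> <user_name>" for every row whose status column is 2 (locked out).
locked_users() {
  awk -F'|' '
    function trim(s) { gsub(/^[ \t]+|[ \t]+$/, "", s); return s }
    # Header row: remember the position of each column by name.
    !have_header && /status/ && /user_name/ {
      for (i = 1; i <= NF; i++) col[trim($i)] = i
      have_header = 1
      next
    }
    # Data rows: separator and "(N rows)" lines have too few fields and are skipped.
    have_header && NF >= col["user_name"] && trim($(col["status"])) == "2" {
      print trim($(col["id"])), trim($(col["user_name"]))
    }
  '
}
```

Looking the columns up by header name rather than by fixed position means the filter should keep working if a different version adds or reorders columns, as long as those three names are still present.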

Now to re-enable the account

# Run the update command, substituting your own user's id

lisuper@cqlsh:logdb> UPDATE user SET status=1

... WHERE id=47130167-3ccb-4a42-a5a2-58dfe42a25b8

... ;

# Confirm the user status is now 1

lisuper@cqlsh:logdb> SELECT * FROM user;

id | api_id | capabilities | data_sets | domain | email | groups | internal | status | type | upn | user_name

--------------------------------------+--------------------------------------+--------------+-----------+--------+-------+----------------------------------------+----------+--------+------+-----+-----------

47130167-3ccb-4a42-a5a2-58dfe42a25b8 | 022a1972-6d7d-4722-a9a8-92bb48a0cc56 | null | null | | null | {00000000-0000-0000-0000-000000000001} | null | 1 | 0 | | admin

00000000-0000-0000-0000-000000000000 | null | null | null | | | {00000000-0000-0000-0000-000000000001} | True | null | 0 | | System

(2 rows)

lisuper@cqlsh:logdb>
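To avoid typos when pasting the id, the UPDATE statement above can also be generated. The sketch below only builds the statement text, and only if the argument looks like a UUID. `unlock_cql` is a hypothetical helper, and the keyspace-qualified `logdb.user` form is used so that the statement does not depend on a preceding `USE logdb;`.

```shell
# Hypothetical helper: print the UPDATE statement that sets status back to 1
# for the given user id. Anything that is not a plain UUID is rejected.
unlock_cql() {
  if ! printf '%s\n' "$1" | grep -Eq '^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}$'; then
    echo "not a UUID: $1" >&2
    return 1
  fi
  printf 'UPDATE logdb.user SET status=1 WHERE id=%s;\n' "$1"
}

# Possible usage (commented out; assumes the bundled cqlsh accepts the
# standard -e/--execute option):
# cqlsh -u lisuper -p {password} --cqlshrc=/storage/core/loginsight/cidata/cassandra/config/cqlshrc \
#   -e "$(unlock_cql 47130167-3ccb-4a42-a5a2-58dfe42a25b8)"
```

Either way, re-run the SELECT afterwards to confirm the status is 1 before trying to log in again.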

Regards