In this blog post, I’m going to dive into how you can create a Tanzu Kubernetes Grid cluster with your own container network interface (CNI), in this case Cilium. Beyond the installation, I’ll also cover installing a load balancer service, deploying a demo app, and showing some of Cilium’s observability features.

What is Cilium?

Cilium is an open source software for providing, securing and observing network connectivity between container workloads - cloud native, and fueled by the revolutionary Kernel technology eBPF

Let’s unpack that tagline from the official website.

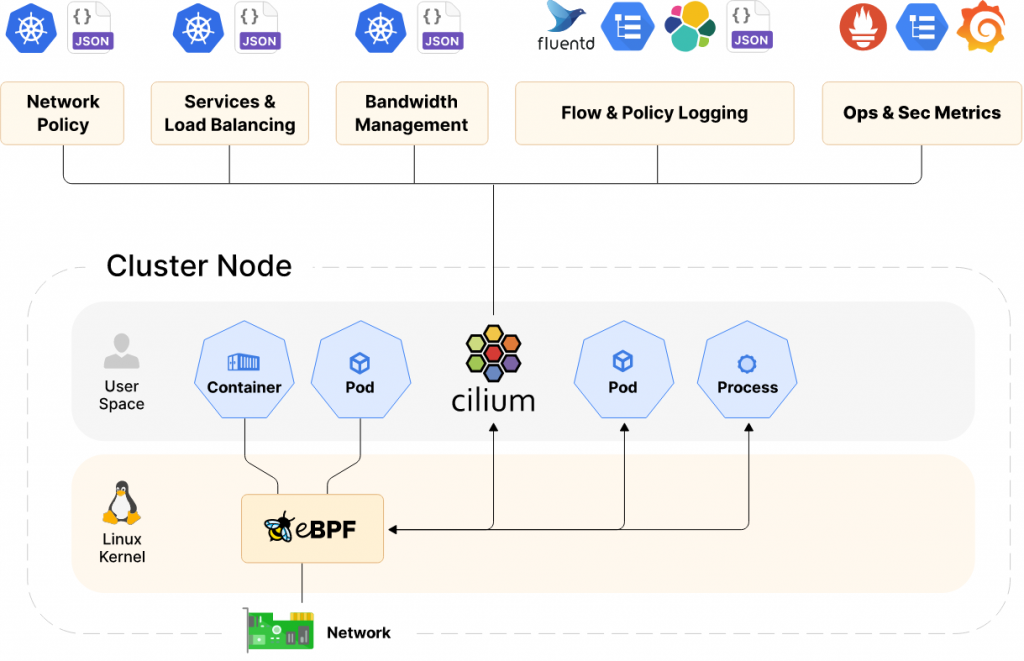

Cilium is a container network interface (CNI) for Kubernetes and other container platforms (apparently there are others still out there!) that provides the cluster networking functionality. It goes one step further than other commonly used CNIs by building on eBPF, a Linux kernel technology that allows security, visibility, and networking control logic to be inserted into the kernel of your container nodes.

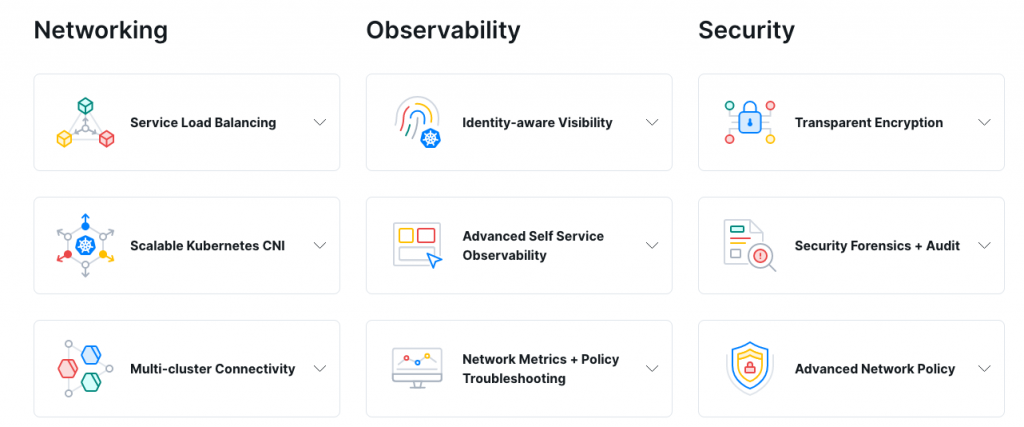

Below is a high-level overview of the features.

And a high-level architecture overview.

Is running Cilium in a Tanzu Kubernetes cluster supported?

Tanzu Kubernetes Grid allows you to bring your own Kubernetes CNI as part of the cluster bring-up. This type of deployment requires a few extra steps when building the cluster, which are described later in this post.

As for support, Antrea and Calico are the CNIs supported with Tanzu Kubernetes Grid; for any other CNI, you as the customer/consumer must provide that support yourself. If you are using Cilium, for example, you can get enterprise-level support for the CNI from vendors such as Isovalent.


How to deploy a Tanzu Kubernetes cluster with Cilium

Before we get started, we need to download the Cilium CLI tool, which is used to install Cilium into our cluster.

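The commands below download the latest stable Linux release of the Cilium CLI, verify its checksum, and install it to /usr/local/bin. You can find more installation options in the Cilium documentation.

```shell
# Look up the latest stable Cilium CLI version
CILIUM_CLI_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/cilium-cli/master/stable.txt)
# Default to amd64, switching to arm64 on ARM Linux hosts
CLI_ARCH=amd64
if [ "$(uname -m)" = "aarch64" ]; then CLI_ARCH=arm64; fi
# Download the tarball plus its checksum file, then verify the download
curl -L --fail --remote-name-all https://github.com/cilium/cilium-cli/releases/download/${CILIUM_CLI_VERSION}/cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}
sha256sum --check cilium-linux-${CLI_ARCH}.tar.gz.sha256sum
# Extract the cilium binary into /usr/local/bin and clean up
sudo tar xzvfC cilium-linux-${CLI_ARCH}.tar.gz /usr/local/bin
rm cilium-linux-${CLI_ARCH}.tar.gz{,.sha256sum}
```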
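The commands above target Linux only. If you run the same steps from a Mac, a small sketch like the one below can derive the release asset name from `uname` output instead of hard-coding it. It assumes the assets follow the `cilium-<os>-<arch>.tar.gz` naming used above, with `darwin` as the macOS OS string; the helper function names are hypothetical.

```shell
# Sketch: choose the Cilium CLI release asset for the current platform.
# Assumption: assets are named cilium-<os>-<arch>.tar.gz (linux/darwin).

# Map `uname -s` output to the OS string used in asset names.
cli_os() {
  case "$1" in
    Linux)  echo linux ;;
    Darwin) echo darwin ;;
    *)      echo "unsupported OS: $1" >&2; return 1 ;;
  esac
}

# Map `uname -m` output to the CPU architecture used in asset names.
# Linux reports aarch64 on ARM, while macOS reports arm64.
cli_arch() {
  case "$1" in
    x86_64|amd64)  echo amd64 ;;
    aarch64|arm64) echo arm64 ;;
    *)             echo "unsupported arch: $1" >&2; return 1 ;;
  esac
}

# Build the tarball name, e.g. cilium-linux-amd64.tar.gz
cilium_cli_tarball() {
  local os arch
  os=$(cli_os "$1") || return 1
  arch=$(cli_arch "$2") || return 1
  echo "cilium-${os}-${arch}.tar.gz"
}
```

For example, `CLI_TARBALL=$(cilium_cli_tarball "$(uname -s)" "$(uname -m)")` could then stand in for the hard-coded `cilium-linux-${CLI_ARCH}.tar.gz` in the download, checksum, and extract commands. Failing loudly on an unknown platform is deliberate, so a bad guess never downloads the wrong binary.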

Essentially, there are two steps for creating a Tanzu Kubernetes Grid cluster with your own CNI. First, specify the following setting in the cluster configuration file:

```yaml
CNI: none
```

Second, install the CNI before the cluster reaches Ready status. You can find the details in the official documentation.

First, let’s create a cluster configuration. I copied and altered an existing file in my clusterconfigs folder; note the `CNI: none` setting below.

```yaml
# cat ~/.config/tanzu/tkg/clusterconfigs/tkg-cilium-2.yaml
AVI_ENABLE: "false"
CLUSTER_CIDR: 100.96.0.0/11
CLUSTER_NAME: tkg-cilium-2
CLUSTER_PLAN: dev
CNI: none
ENABLE_CEIP_PARTICIPATION: "true"
ENABLE_MHC: "true"
IDENTITY_MANAGEMENT_TYPE: none
INFRASTRUCTURE_PROVIDER: vsphere
OS_NAME: ubuntu
SERVICE_CIDR: 100.64.0.0/13
TKG_HTTP_PROXY_ENABLED: "false"
VSPHERE_CONTROL_PLANE_DISK_GIB: "20"
VSPHERE_CONTROL_PLANE_ENDPOINT: 192.168.200.71
VSPHERE_CONTROL_PLANE_MEM_MIB: "4096"
VSPHERE_CONTROL_PLANE_NUM_CPUS: "2"
VSPHERE_DATACENTER: /Datacenter
VSPHERE_DATASTORE: /Datacenter/datastore/Datastore
VSPHERE_FOLDER: /Datacenter/vm/TKG
VSPHERE_NETWORK: vEducate_NW1
VSPHERE_PASSWORD: VMware1!
VSPHERE_RESOURCE_POOL: /Datacenter/host/Cluster/Resources/TKG
VSPHERE_SERVER: vcenter.veducate.co.uk
VSPHERE_SSH_AUTHORIZED_KEY: ssh-rsa AAA....
VSPHERE_TLS_THUMBPRINT: ....
VSPHERE_USERNAME: ....
VSPHERE_WORKER_DISK_GIB: "20"
VSPHERE_WORKER_MEM_MIB: "4096"
VSPHERE_WORKER_NUM_CPUS: "2"
```
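If `CNI: none` is missing, TKG would install its default CNI (Antrea), so a quick pre-flight check before `tanzu cluster create` can save a rebuild. This is a minimal sketch; the function name `has_cni_none` is hypothetical, and it only looks for a top-level `CNI: none` line (optionally quoted) rather than fully parsing the YAML.

```shell
# Sketch: fail fast if a TKG cluster config file does not set CNI: none.
# Returns 0 when a top-level "CNI: none" line is present, 1 when it is
# absent, and 2 when the file cannot be read.
has_cni_none() {
  local file="$1"
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 2; }
  grep -Eq '^CNI:[[:space:]]*"?none"?[[:space:]]*$' "$file"
}
```

For example: `has_cni_none ~/.config/tanzu/tkg/clusterconfigs/tkg-cilium-2.yaml && tanzu cluster create tkg-cilium-2 -f ~/.config/tanzu/tkg/clusterconfigs/tkg-cilium-2.yaml`.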

One more thing to call out before we proceed: I found that creating and bootstrapping a Photon OS node with Cilium failed, whereas Ubuntu worked fine. A friend who works with Cilium confirmed that they have not tested Photon OS as a deployment target, so consider it unsupported from the Cilium side.

Create your Tanzu Kubernetes cluster using the configuration file you just created:

```shell
tanzu cluster create {cluster_name} -f {filename}
```

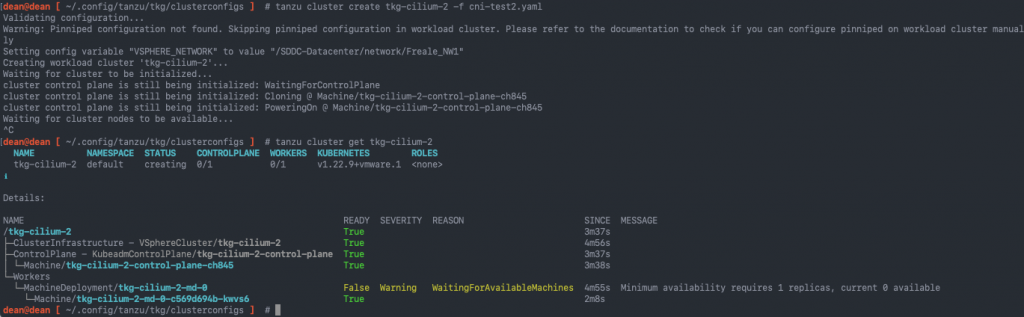

Because the cluster configuration does not install a CNI, the nodes cannot become Ready, so the console output will sit at “Waiting for cluster nodes to be available...”, as shown below.

```text
Validating configuration...
Warning: Pinniped configuration not found. Skipping pinniped configuration in workload cluster. Please refer to the documentation to check if you can configure pinniped on workload cluster manually
Setting config variable "VSPHERE_NETWORK" to value "/SDDC-Datacenter/network/Freale_NW1"
Creating workload cluster 'tkg-cilium-2'...
Waiting for cluster to be initialized...
cluster control plane is still being initialized: WaitingForControlPlane
cluster control plane is still being initialized: Cloning @ Machine/tkg-cilium-2-control-plane-ch845
cluster control plane is still being initialized: PoweringOn @ Machine/tkg-cilium-2-control-plane-ch845
Waiting for cluster nodes to be available...
```

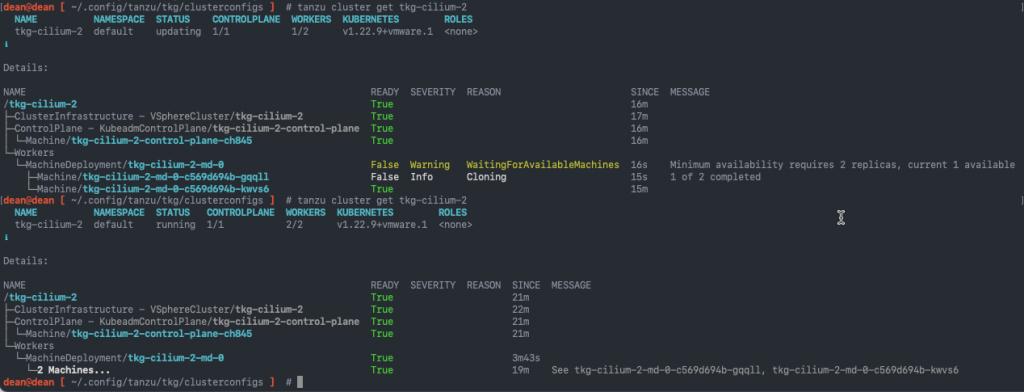

At this point, you need to break out of the process (for example with Ctrl+C) so that you can get the kubeconfig file, change to that cluster’s context, and install the Cilium CNI. The screenshot below also shows the output of the “tanzu cluster get” command.

Get the admin kubeconfig and change your context:

```shell
tanzu cluster kubeconfig get {cluster_name} --admin
kubectl config use-context {kube_context}
```

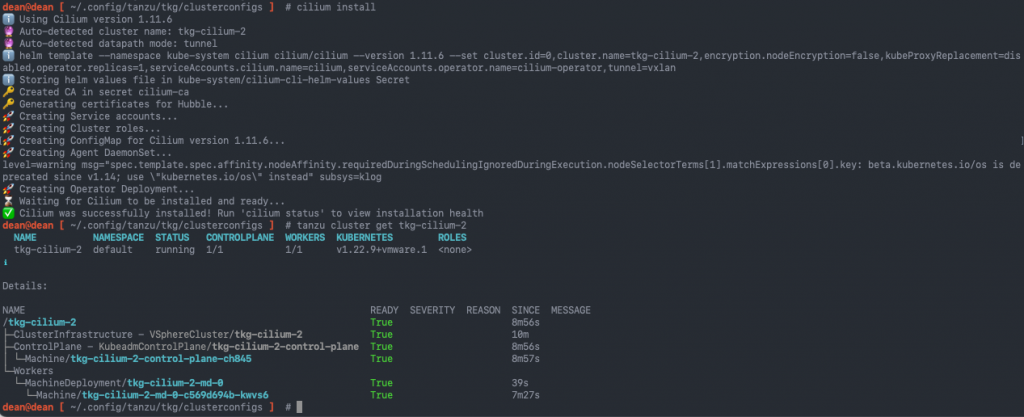
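Rather than breaking out, you could also run something like the sketch below from a second terminal: it retries fetching the admin kubeconfig until the command succeeds, then switches context. It assumes TKG’s usual admin context naming of `<cluster>-admin@<cluster>` (check `kubectl config get-contexts` if yours differs); the function names, retry count, and delay are hypothetical defaults.

```shell
# Sketch: wait for the admin kubeconfig, then switch to its context.
# Assumption: the admin context is named <cluster>-admin@<cluster>.

tkg_admin_context() {
  echo "${1}-admin@${1}"
}

# Arguments: cluster name, max attempts (default 30), delay in seconds (default 20).
fetch_admin_kubeconfig() {
  local cluster="$1" attempts="${2:-30}" delay="${3:-20}" i=1
  while [ "$i" -le "$attempts" ]; do
    if tanzu cluster kubeconfig get "$cluster" --admin; then
      # Kubeconfig merged locally; switch to the new cluster's context
      kubectl config use-context "$(tkg_admin_context "$cluster")"
      return
    fi
    echo "kubeconfig not ready yet (attempt $i/$attempts)" >&2
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}
```

For this post’s cluster, that would be `fetch_admin_kubeconfig tkg-cilium-2`, after which `cilium install` targets the new cluster.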

Now install the Cilium CNI using the Cilium CLI.

```text
cilium install
ℹ️ Using Cilium version 1.11.6
Auto-detected cluster name: tkg-cilium-2
Auto-detected datapath mode: tunnel
ℹ️ helm template --namespace kube-system cilium cilium/cilium --version 1.11.6 --set cluster.id=0,cluster.name=tkg-cilium-2,encryption.nodeEncryption=false,kubeProxyReplacement=disabled,operator.replicas=1,serviceAccounts.cilium.name=cilium,serviceAccounts.operator.name=cilium-operator,tunnel=vxlan
ℹ️ Storing helm values file in kube-system/cilium-cli-helm-values Secret
Created CA in secret cilium-ca
Generating certificates for Hubble...
Creating Service accounts...
Creating Cluster roles...
Creating ConfigMap for Cilium version 1.11.6...
Creating Agent DaemonSet...
level=warning msg="spec.template.spec.affinity.nodeAffinity.requiredDuringSchedulingIgnoredDuringExecution.nodeSelectorTerms[1].matchExpressions[0].key: beta.kubernetes.io/os is deprecated since v1.14; use \"kubernetes.io/os\" instead" subsys=klog
Creating Operator Deployment...
⌛ Waiting for Cilium to be installed and ready...
✅ Cilium was successfully installed! Run 'cilium status' to view installation health
```

The screenshot below also includes the output of the “tanzu cluster get” command, which now shows the cluster as running after the CNI installation.

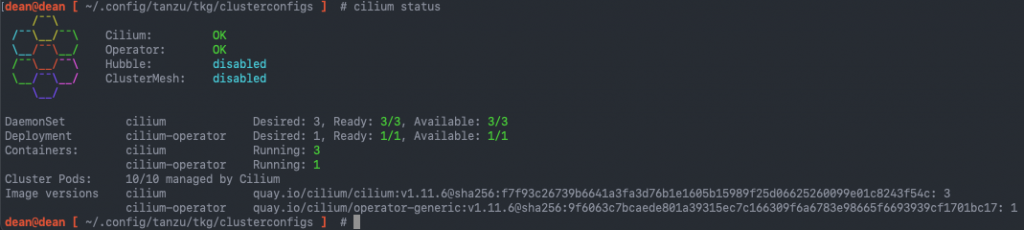
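Instead of re-running “tanzu cluster get” by hand, a small wrapper can wait for Cilium first. This sketch uses `cilium status --wait` and a `kubectl rollout status` check on the `cilium` DaemonSet in `kube-system` (the names shown in the install output above); the five-minute timeout and the function name are assumptions.

```shell
# Sketch: block until Cilium is healthy, then show the TKG view of the cluster.
wait_for_cilium() {
  # Wait until the Cilium agent and operator report ready
  cilium status --wait || return 1
  # Independent kubectl view: all agent pods of the DaemonSet rolled out
  kubectl -n kube-system rollout status daemonset/cilium --timeout=5m || return 1
  # The cluster should now report as running
  tanzu cluster get "$1"
}
```

Usage: `wait_for_cilium tkg-cilium-2`.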

You can also validate the Cilium installation with the CLI tool:

```text
dean@dean [ ~/.config/tanzu/tkg/clusterconfigs ] # cilium status
    /¯¯\
 /¯¯\__/¯¯\    Cilium:         OK
 \__/¯¯\__/    Operator:       OK
 /¯¯\__/¯¯\    Hubble:         disabled
 \__/¯¯\__/    ClusterMesh:    disabled
    \__/

DaemonSet         cilium             Desired: 3, Ready: 3/3, Available: 3/3
Deployment        cilium-operator    Desired: 1, Ready: 1/1, Available: 1/1
Containers:       cilium             Running: 3
                  cilium-operator    Running: 1
Cluster Pods:     10/10 managed by Cilium
Image versions    cilium             quay.io/cilium/cilium:v1.11.6@sha256:f7f93c26739b6641a3fa3d76b1e1605b15989f25d06625260099e01c8243f54c: 3
                  cilium-operator    quay.io/cilium/operator-generic:v1.11.6@sha256:9f6063c7bcaede801a39315ec7c166309f6a6783e98665f6693939cf1701bc17: 1
```

Validating the Cilium CNI

First, we’ll run the tests with a single worker node in my cluster. The Cilium CLI provides a built-in command for testing: `cilium connectivity test`.

```text
dean@dean [ ~/.config/tanzu/tkg/clusterconfigs ] # cilium connectivity test
ℹ️ Single-node environment detected, enabling single-node connectivity test
ℹ️ Monitor aggregation detected, will skip some flow validation steps
✨ [tkg-cilium-2] Creating namespace for connectivity check...
✨ [tkg-cilium-2] Deploying echo-same-node service...
✨ [tkg-cilium-2] Deploying DNS test server configmap...
✨ [tkg-cilium-2] Deploying same-node deployment...
✨ [tkg-cilium-2] Deploying client deployment...
✨ [tkg-cilium-2] Deploying client2 deployment...
⌛ [tkg-cilium-2] Waiting for deployments [client client2 echo-same-node] to become ready...
⌛ [tkg-cilium-2] Waiting for deployments [] to become ready...
⌛ [tkg-cilium-2] Waiting for CiliumEndpoint for pod cilium-test/client-6488dcf5d4-hnpvp to appear...
⌛ [tkg-cilium-2] Waiting for CiliumEndpoint for pod cilium-test/client2-5998d566b4-qwx8k to appear...
⌛ [tkg-cilium-2] Waiting for pod cilium-test/client-6488dcf5d4-hnpvp to reach DNS server on cilium-test/echo-same-node-764cf88fd-hb8x9 pod...
⌛ [tkg-cilium-2] Waiting for pod cilium-test/client2-5998d566b4-qwx8k to reach DNS server on cilium-test/echo-same-node-764cf88fd-hb8x9 pod...
⌛ [tkg-cilium-2] Waiting for pod cilium-test/client-6488dcf5d4-hnpvp to reach default/kubernetes service...
⌛ [tkg-cilium-2] Waiting for pod cilium-test/client2-5998d566b4-qwx8k to reach default/kubernetes service...
⌛ [tkg-cilium-2] Waiting for CiliumEndpoint for pod cilium-test/echo-same-node-764cf88fd-hb8x9 to appear...
⌛ [tkg-cilium-2] Waiting for Service cilium-test/echo-same-node to become ready...
⌛ [tkg-cilium-2] Waiting for NodePort 192.168.200.194:32289 (cilium-test/echo-same-node) to become ready...
⌛ [tkg-cilium-2] Waiting for NodePort 192.168.200.188:32289 (cilium-test/echo-same-node) to become ready...
ℹ️ Skipping IPCache check
Enabling Hubble telescope...
⚠️ Unable to contact Hubble Relay, disabling Hubble telescope and flow validation: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial tcp 127.0.0.1:4245: connect: connection refused"
ℹ️ Expose Relay locally with:
   cilium hubble enable
   cilium hubble port-forward&
ℹ️ Cilium version: 1.11.6
Running tests...
[=] Test [no-policies] ......................
[=] Test [allow-all-except-world] ........
[=] Test [client-ingress] ..
[=] Test [echo-ingress] ..
[=] Test [client-egress] ..
[=] Test [to-entities-world] ......
[=] Test [to-cidr-1111] ....
[=] Test [echo-ingress-l7] ..
[=] Test [client-egress-l7] ........
[=] Test [dns-only] ........
[=] Test [to-fqdns] ......
✅ All 11 tests (70 actions) successful, 0 tests skipped, 0 scenarios skipped.
```

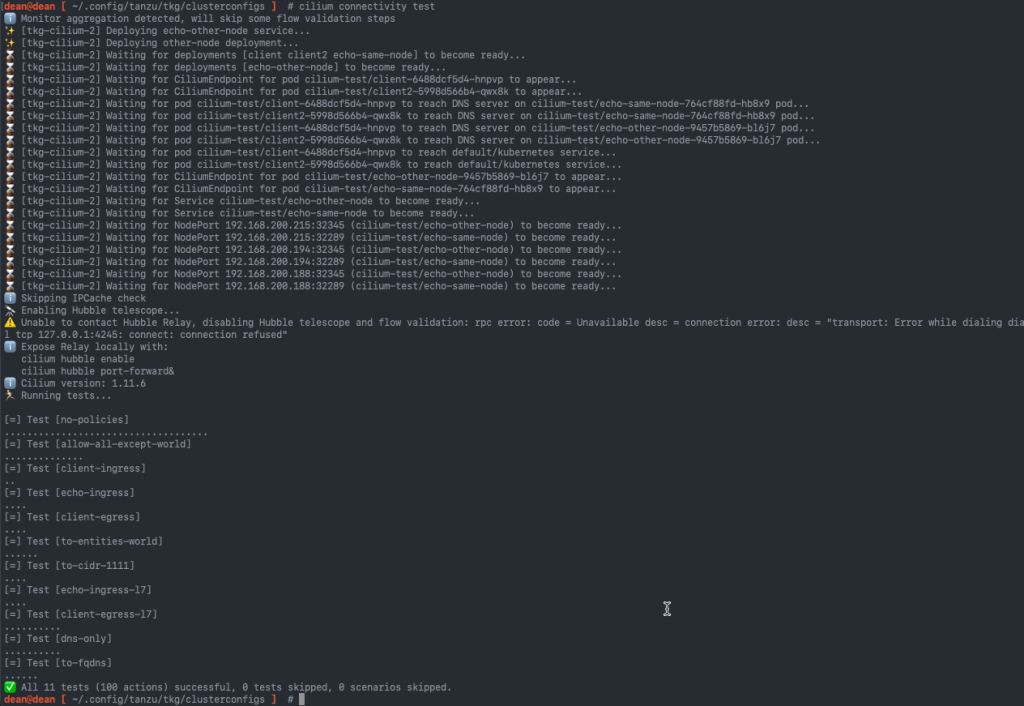

Next, I scaled my cluster to two worker nodes:

```shell
tanzu cluster scale tkg-cilium-2 -w 2
```

Running `cilium connectivity test` again, the tool detects that multiple nodes are present and also deploys an echo-other-node service to exercise cross-node traffic. The same 11 tests now perform 100 actions instead of 70.

```text
ℹ️ Monitor aggregation detected, will skip some flow validation steps
✨ [tkg-cilium-2] Deploying echo-other-node service...
✨ [tkg-cilium-2] Deploying other-node deployment...
⌛ [tkg-cilium-2] Waiting for deployments [client client2 echo-same-node] to become ready...
⌛ [tkg-cilium-2] Waiting for deployments [echo-other-node] to become ready...
⌛ [tkg-cilium-2] Waiting for CiliumEndpoint for pod cilium-test/client-6488dcf5d4-hnpvp to appear...
⌛ [tkg-cilium-2] Waiting for CiliumEndpoint for pod cilium-test/client2-5998d566b4-qwx8k to appear...
⌛ [tkg-cilium-2] Waiting for pod cilium-test/client-6488dcf5d4-hnpvp to reach DNS server on cilium-test/echo-same-node-764cf88fd-hb8x9 pod...
⌛ [tkg-cilium-2] Waiting for pod cilium-test/client2-5998d566b4-qwx8k to reach DNS server on cilium-test/echo-same-node-764cf88fd-hb8x9 pod...
⌛ [tkg-cilium-2] Waiting for pod cilium-test/client-6488dcf5d4-hnpvp to reach DNS server on cilium-test/echo-other-node-9457b5869-bl6j7 pod...
⌛ [tkg-cilium-2] Waiting for pod cilium-test/client2-5998d566b4-qwx8k to reach DNS server on cilium-test/echo-other-node-9457b5869-bl6j7 pod...
⌛ [tkg-cilium-2] Waiting for pod cilium-test/client-6488dcf5d4-hnpvp to reach default/kubernetes service...
⌛ [tkg-cilium-2] Waiting for pod cilium-test/client2-5998d566b4-qwx8k to reach default/kubernetes service...
⌛ [tkg-cilium-2] Waiting for CiliumEndpoint for pod cilium-test/echo-other-node-9457b5869-bl6j7 to appear...
⌛ [tkg-cilium-2] Waiting for CiliumEndpoint for pod cilium-test/echo-same-node-764cf88fd-hb8x9 to appear...
⌛ [tkg-cilium-2] Waiting for Service cilium-test/echo-other-node to become ready...
⌛ [tkg-cilium-2] Waiting for Service cilium-test/echo-same-node to become ready...
⌛ [tkg-cilium-2] Waiting for NodePort 192.168.200.215:32345 (cilium-test/echo-other-node) to become ready...
⌛ [tkg-cilium-2] Waiting for NodePort 192.168.200.215:32289 (cilium-test/echo-same-node) to become ready...
⌛ [tkg-cilium-2] Waiting for NodePort 192.168.200.194:32345 (cilium-test/echo-other-node) to become ready...
⌛ [tkg-cilium-2] Waiting for NodePort 192.168.200.194:32289 (cilium-test/echo-same-node) to become ready...
⌛ [tkg-cilium-2] Waiting for NodePort 192.168.200.188:32345 (cilium-test/echo-other-node) to become ready...
⌛ [tkg-cilium-2] Waiting for NodePort 192.168.200.188:32289 (cilium-test/echo-same-node) to become ready...
ℹ️ Skipping IPCache check
Enabling Hubble telescope...
⚠️ Unable to contact Hubble Relay, disabling Hubble telescope and flow validation: rpc error: code = Unavailable desc = connection error: desc = "transport: Error while dialing dial tcp 127.0.0.1:4245: connect: connection refused"
ℹ️ Expose Relay locally with:
   cilium hubble enable
   cilium hubble port-forward&
ℹ️ Cilium version: 1.11.6
Running tests...
[=] Test [no-policies] ....................................
[=] Test [allow-all-except-world] ..............
[=] Test [client-ingress] ..
[=] Test [echo-ingress] ....
[=] Test [client-egress] ....
[=] Test [to-entities-world] ......
[=] Test [to-cidr-1111] ....
[=] Test [echo-ingress-l7] ....
[=] Test [client-egress-l7] ..........
[=] Test [dns-only] ..........
[=] Test [to-fqdns] ......
✅ All 11 tests (100 actions) successful, 0 tests skipped, 0 scenarios skipped.
```

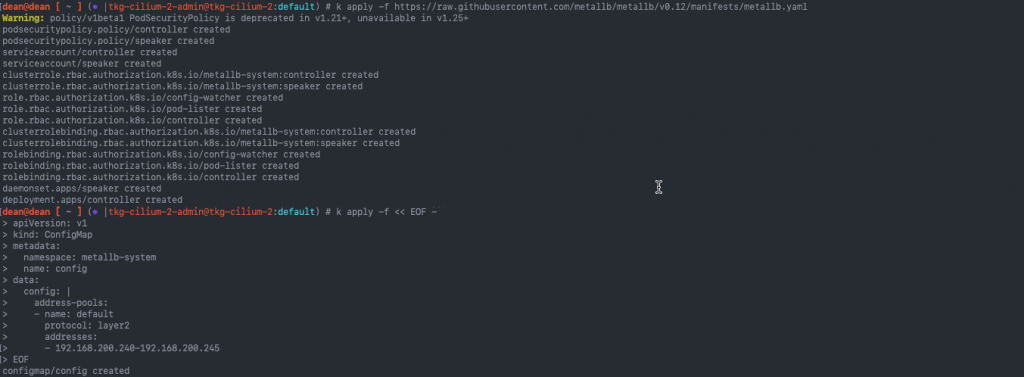
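If you want to record these results, for example in a CI job, the summary line can be parsed. The sketch below assumes the “All N tests (M actions) successful” wording shown in the output above, which may change between Cilium CLI versions; the function name is hypothetical.

```shell
# Sketch: print "<tests> <actions>" from a `cilium connectivity test`
# summary line; prints nothing if the line does not match.
parse_connectivity_summary() {
  printf '%s\n' "$1" |
    sed -nE 's/.*All ([0-9]+) tests \(([0-9]+) actions\) successful.*/\1 \2/p'
}
```

Applied to the two summaries above, it yields `11 70` and `11 100`, which makes it easy to see that scaling out added actions (cross-node checks) rather than new tests. For a saved log, something like `parse_connectivity_summary "$(grep 'actions) successful' ct.log)"` would work, where `ct.log` is a hypothetical file captured with `tee`.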

Deploying MetalLB as a Kubernetes Load Balancer

For the next components, I need access to the services running in the cluster, so I deployed MetalLB to provide external connectivity to those services.

I had to use v0.12 in this environment, as I found that the latest stable release at the time, v0.13.5, wouldn’t pass traffic into the Kubernetes cluster.

```shell
kubectl apply -f https://github.com/metallb/metallb/raw/v0.12/manifests/namespace.yaml
kubectl apply -f https://github.com/metallb/metallb/raw/v0.12/manifests/metallb.yaml
```

Then add the IP address pool that MetalLB will assign LoadBalancer addresses from:

```shell
kubectl apply -f - <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 192.168.200.240-192.168.200.245
EOF
```

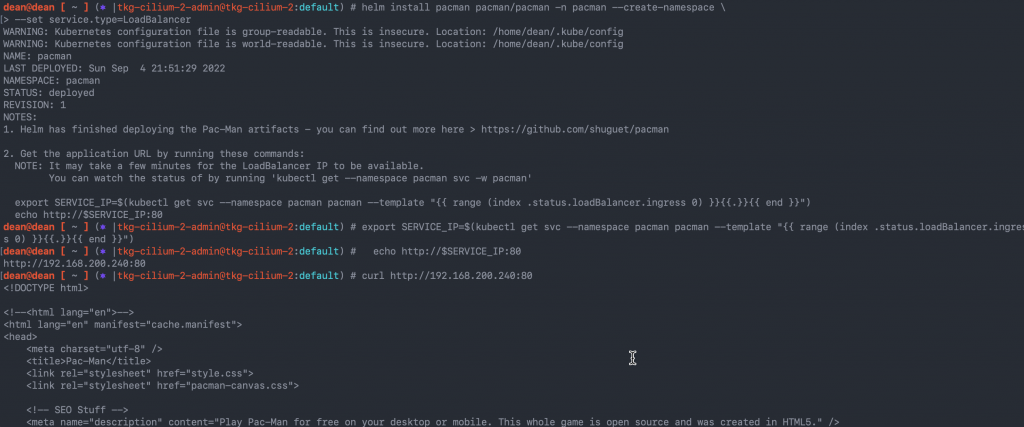
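Once Services start receiving addresses, it can be handy to confirm they come from this pool. The POSIX shell sketch below converts dotted IPv4 addresses to integers and compares them with the pool bounds from the ConfigMap above; the function names are hypothetical. Note that MetalLB v0.13 and later is configured with `IPAddressPool` and `L2Advertisement` custom resources instead of this ConfigMap, so the ConfigMap format applies to the v0.12 install used here.

```shell
# Sketch: check whether an IPv4 address falls inside the MetalLB pool.

# Convert a dotted-quad IPv4 address to a single integer.
ip_to_int() {
  printf '%s\n' "$1" | {
    IFS=. read -r a b c d
    echo $(( $a * 16777216 + $b * 65536 + $c * 256 + $d ))
  }
}

# Succeed if $1 lies between $2 and $3, inclusive.
ip_in_range() {
  local ip start end
  ip=$(ip_to_int "$1"); start=$(ip_to_int "$2"); end=$(ip_to_int "$3")
  [ "$ip" -ge "$start" ] && [ "$ip" -le "$end" ]
}

# Pool bounds from the address-pools entry above
POOL_START=192.168.200.240
POOL_END=192.168.200.245
```

For instance, `ip_in_range 192.168.200.242 "$POOL_START" "$POOL_END"` succeeds, while the control plane endpoint `192.168.200.71` from the cluster config falls outside the pool.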

Installing the Pac-Man application

To test some of the observability pieces that Cilium provides, I’ll install my trusty Pac-Man application.

This time, I’m going to use the fork by Sylvain Huguet, who took the original app code, updated it, and packaged it as a Helm chart.

I installed the application with the options below, which include creating a Service of type LoadBalancer:

```shell
helm repo add pacman https://shuguet.github.io/pacman/
helm install pacman pacman/pacman -n pacman --create-namespace \
  --set service.type=LoadBalancer

# Get the IP address for your app by running the below
export SERVICE_IP=$(kubectl get svc --namespace pacman pacman --template "{{ range (index .status.loadBalancer.ingress 0) }}{{.}}{{ end }}")
echo http://$SERVICE_IP:80
```

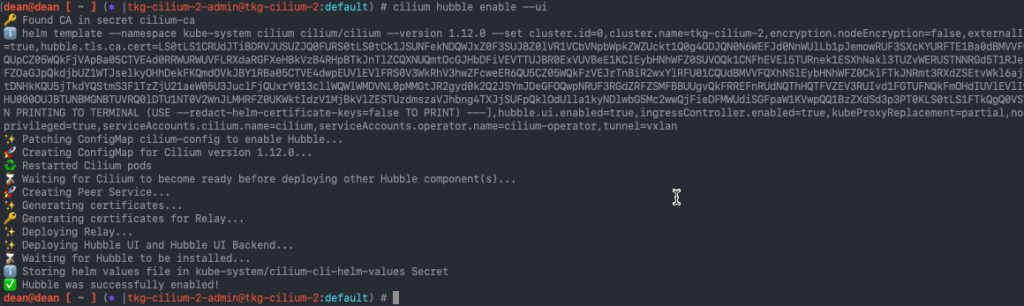
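MetalLB can take a few seconds to assign an address, so the `export SERVICE_IP=...` line above may come back empty if run immediately after `helm install`. Below is a small polling sketch that reads only the `.ip` field of the first ingress entry via jsonpath; the function name, retry count, and five-second interval are hypothetical, and it assumes the app is served on port 80, as in the `echo` above.

```shell
# Sketch: poll a LoadBalancer Service until it has an external IP,
# then print the app URL. Arguments: namespace, service, max tries.
wait_for_lb_ip() {
  local ns="$1" svc="$2" tries="${3:-30}" i=1 ip
  while [ "$i" -le "$tries" ]; do
    ip=$(kubectl get svc "$svc" -n "$ns" \
      -o jsonpath='{.status.loadBalancer.ingress[0].ip}' 2>/dev/null)
    if [ -n "$ip" ]; then
      echo "http://${ip}:80"
      return 0
    fi
    sleep 5
    i=$((i + 1))
  done
  echo "no external IP for $ns/$svc after $tries attempts" >&2
  return 1
}
```

Usage: `wait_for_lb_ip pacman pacman`.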

Deploying Hubble to provide observability

Hubble is the observability offering tied to the Cilium CNI providing monitoring, alerting, security, and application dependency mapping.

Run the following command to install the Hubble components, including the UI:

```shell
cilium hubble enable --ui
```

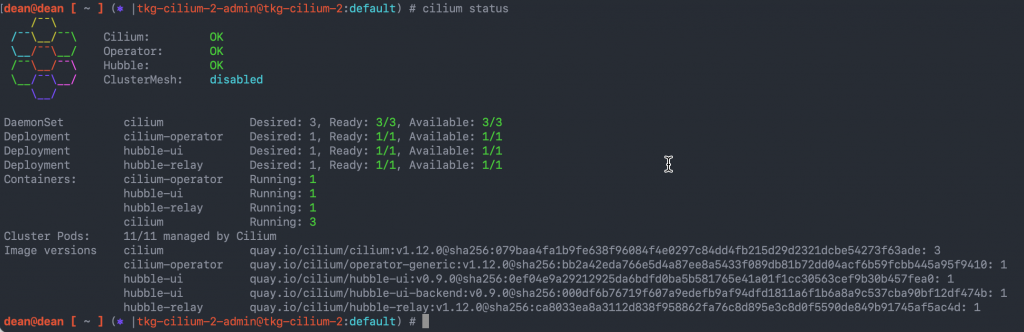

Running `cilium status` again should now show that Hubble is installed and reporting correctly.

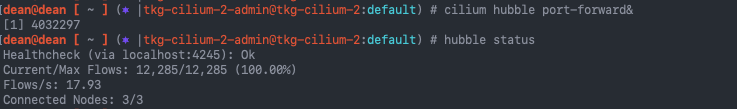

First, we are going to use the Hubble CLI tool to view the observability data. You need to download the Hubble CLI tool separately from the Cilium CLI tool.

```shell
# Download the Hubble CLI. macOS example below; see the Hubble installation docs for other operating systems.
export HUBBLE_VERSION=$(curl -s https://raw.githubusercontent.com/cilium/hubble/master/stable.txt)
HUBBLE_ARCH=amd64
if [ "$(uname -m)" = "arm64" ]; then HUBBLE_ARCH=arm64; fi
curl -L --fail --remote-name-all https://github.com/cilium/hubble/releases/download/$HUBBLE_VERSION/hubble-darwin-${HUBBLE_ARCH}.tar.gz{,.sha256sum}
shasum -a 256 -c hubble-darwin-${HUBBLE_ARCH}.tar.gz.sha256sum
sudo tar xzvfC hubble-darwin-${HUBBLE_ARCH}.tar.gz /usr/local/bin
rm hubble-darwin-${HUBBLE_ARCH}.tar.gz{,.sha256sum}

# Port forward the Hubble Relay API so the Hubble CLI can reach it on port 4245
cilium hubble port-forward&

# Validate the status of Hubble and that all your nodes show as connected
hubble status
```

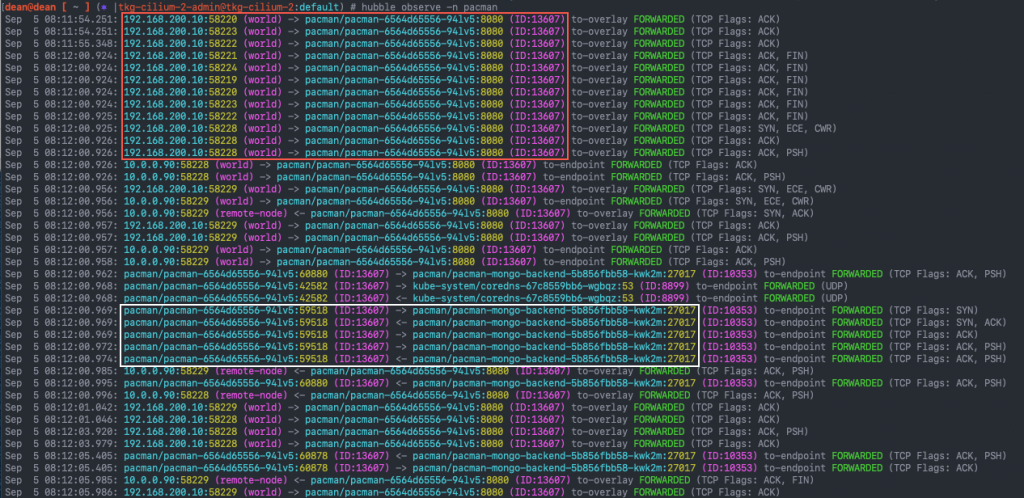
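The Linux and macOS download snippets in this post verify checksums with different tools (`sha256sum --check` versus `shasum -a 256 -c`). If you script the downloads for both platforms, a helper along these lines picks whichever tool is available. It is a sketch with a hypothetical function name, and it must run from the directory holding the tarball, because the `.sha256sum` file refers to the tarball by its relative name.

```shell
# Sketch: verify a download against its .sha256sum file using
# sha256sum (typical on Linux) or shasum (typical on macOS).
verify_sha256() {
  local sumfile="$1"
  if command -v sha256sum >/dev/null 2>&1; then
    sha256sum --check "$sumfile"
  elif command -v shasum >/dev/null 2>&1; then
    shasum -a 256 -c "$sumfile"
  else
    echo "no SHA-256 tool found" >&2
    return 1
  fi
}
```

For example, `verify_sha256 hubble-darwin-${HUBBLE_ARCH}.tar.gz.sha256sum` could replace the `shasum` line in the block above.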

In the background, I reloaded the Pac-Man app in my web browser to generate some traffic and saved a high score, which is written to the MongoDB pod. To view the flows using the Hubble CLI, run:

```shell
hubble observe --namespace {namespace}

# Example
hubble observe -n pacman
```

In the screenshot below, the red box highlights the web browser traffic from my machine hitting the Pac-Man front-end UI, and the white box shows the Pac-Man pod communicating with the MongoDB backend to save the high score.

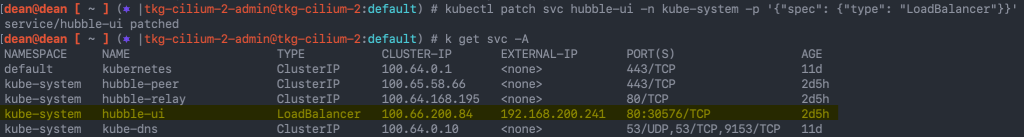
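The Hubble CLI can narrow this view with flow filters. The sketch below wraps three `hubble observe` filters (`--port`, `--verdict`, and `--follow`) in hypothetical helper functions; it assumes MongoDB listens on its default port, 27017, in the Pac-Man chart, so check the chart’s Service if yours differs.

```shell
# Sketch: focused Hubble queries. Argument $1 is the namespace.

# Flows in the namespace to or from a given port (either direction).
flows_on_port() {
  hubble observe --namespace "$1" --port "$2"
}

# Only flows that were dropped, e.g. by a network policy.
dropped_flows() {
  hubble observe --namespace "$1" --verdict DROPPED
}

# Stream new flows as they happen, similar to `tail -f`.
follow_flows() {
  hubble observe --namespace "$1" --follow
}
```

For example, `flows_on_port pacman 27017` would be expected to isolate the Pac-Man to MongoDB traffic from the white box above, and `dropped_flows pacman` becomes useful once you start applying network policies.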

Now we’ll view the same data via the Hubble UI, which also provides a service dependency map. To access the Hubble UI, you can use the kubectl port-forward command or, alternatively, expose it externally via the load balancer. The command below changes the hubble-ui Service to type LoadBalancer:

```shell
kubectl patch svc hubble-ui -n kube-system -p '{"spec": {"type": "LoadBalancer"}}'
```

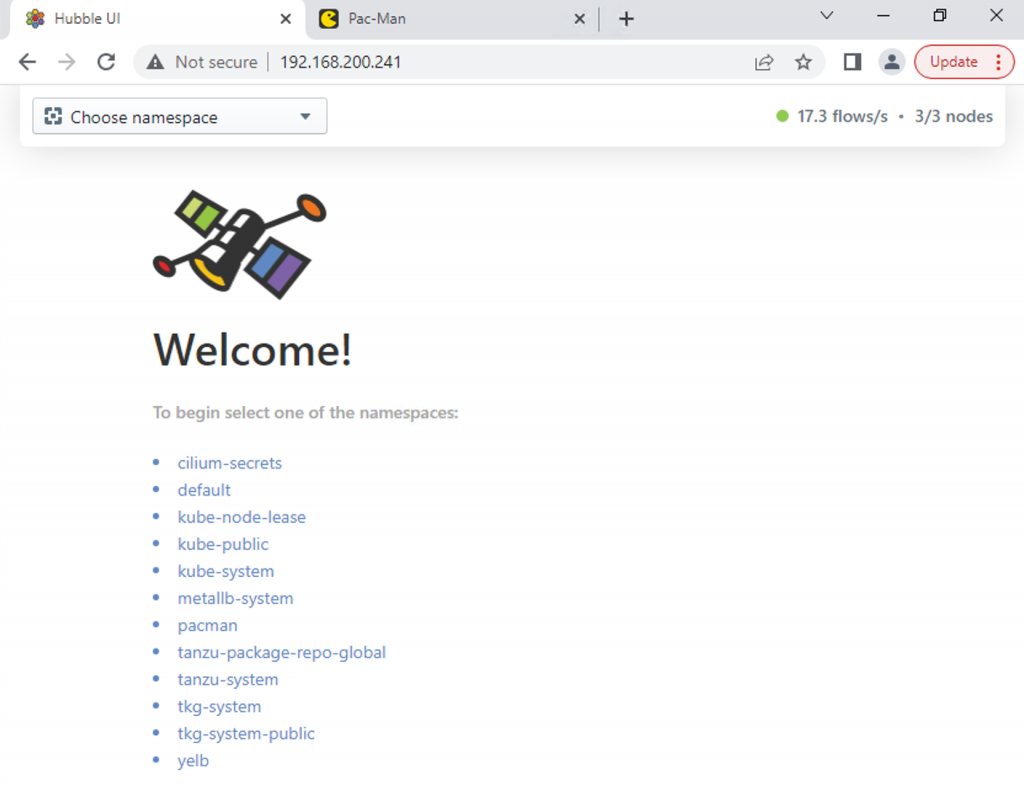

Loading the assigned IP address in a web browser brings up the Hubble UI. In the top right-hand corner, we can see that 17.3 flows per second are being collected from my 3 cluster nodes.

To continue, select a namespace to view.

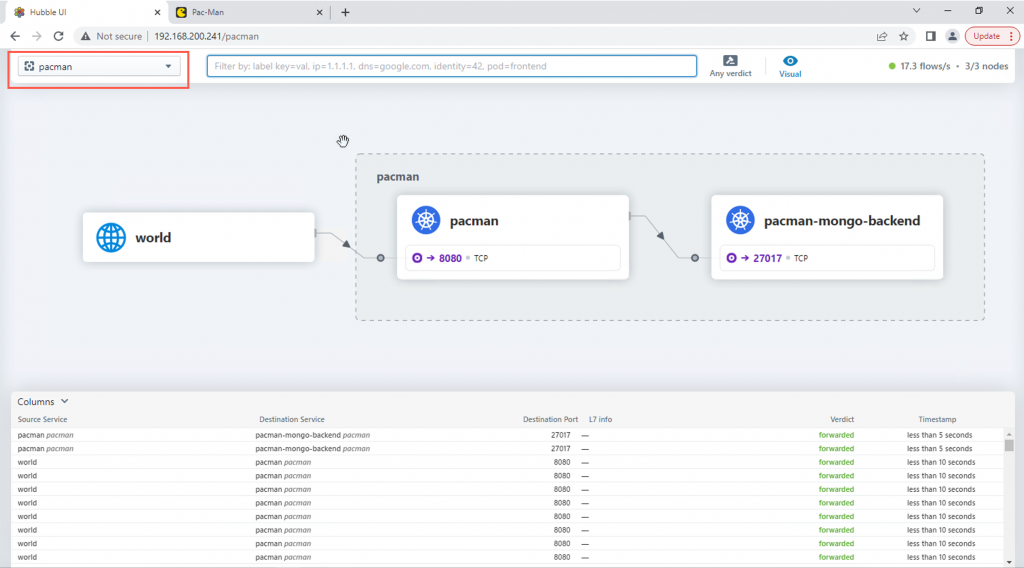

Below you can see the service dependency mapping for all the traffic observed within the namespace, with the individual flows in the bottom table.

Next to the namespace, in the red box, you can filter the traffic flows shown, which also filters the service map.
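If you would rather not expose the Hubble UI through the load balancer, the kubectl port-forward route can be wrapped as below. This sketch assumes the `hubble-ui` Service in `kube-system` listens on port 80, which I believe is the default for `cilium hubble enable --ui`; the local port 12000 and the function name are arbitrary choices. The Cilium CLI also provides `cilium hubble ui`, which sets up a port-forward and opens the UI in your browser.

```shell
# Sketch: reach the Hubble UI on localhost without a LoadBalancer.
# Assumption: the hubble-ui Service exposes port 80.
# Optional argument: local port (default 12000).
hubble_ui_port_forward() {
  local port="${1:-12000}"
  kubectl -n kube-system port-forward svc/hubble-ui "${port}:80"
}
```

With the forward running, the UI would be at `http://localhost:12000`.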

Summary and wrap-up

I’m going to end this blog post here, having covered building a cluster with the Cilium CNI, deploying a demo app that consumes a load balancer service, and then using Cilium’s observability features.

The only hurdle I hit was not Cilium related: MetalLB would not pass traffic when using the latest version. Everything else was slick, quick, and easy to set up and consume.

From here, you can continue with the official Cilium documentation for next steps, such as setting network security policies, service mesh and multi-cluster connectivity, and more!

Regards