Following on from the setup guide for the Nimble Secondary Flash Array (SFA), I am going to go through the deployment options and the settings needed to implement it with Veeam Backup and Replication.

What will be covered in this blog post?

- Quick overview of the SFA

- Deployment Options

- Utilizing features of Veeam with the SFA

- Using a backup repository LUN

- Best practices for use as a backup repository

- Veeam Proxy – Direct SAN Access

- Creating your LUN on the SFA for use as a backup repository

- Setting up your backup repository in Veeam

- vPower NFS Service on the mount server

- Backup Job settings

- SureBackup / SureReplica

- Backup Job – Nimble Storage Primary Snapshot – Configure Secondary destinations for this job

- Encryption – Don’t do it in Veeam!

- Viewing data reduction savings on the Nimble Secondary Storage

- Summary

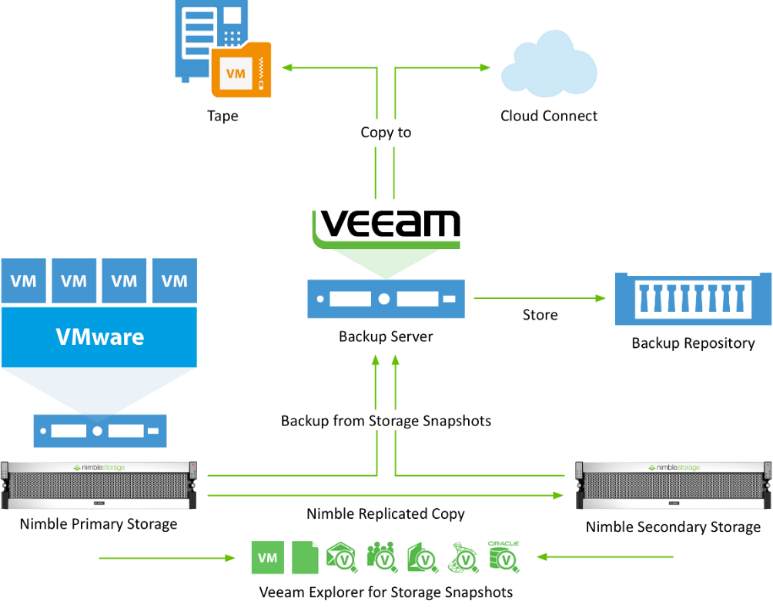

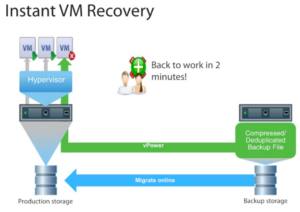

My test lab looks similar to the diagram provided by Veeam (Benefits of using Nimble SFA with Veeam).

Quick overview of the SFA

The SFA is essentially the same as the Nimble Storage arrays that came before it, running the same hardware and software, with one key difference: the software has been optimized for data reduction and space efficiency rather than for performance. In practice, you would purchase the Nimble CS/AF range for production workloads that need high IOPS and low latency, and use the SFA for your DR environment and backup solution, where it still provides the same low latency for high-speed recovery, as well as for long-term archival of data.

Deployment options

With an SFA deployment, you are looking at roughly the same options as with a CS/AF array for use with Veeam (This blog, Veeam Blog). However, with the high expected deduplication ratio, you are able to store a hell of a lot more data!
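
To put "a lot more data" into rough numbers: the logical data you can store is approximately the usable capacity multiplied by the data-reduction ratio. The Python sketch below is a deliberately simplified model. The 50 TB usable capacity, 10:1 ratio, and 40 TB full / 4 TB incremental backup sizes are hypothetical figures rather than Nimble specifications, and the model assumes a single uniform reduction ratio across every restore point, which real backup data will not follow exactly.

```python
import math


def effective_capacity_tb(usable_tb: float, reduction_ratio: float) -> float:
    """Logical data that fits on the array, assuming one uniform N:1 reduction ratio."""
    if usable_tb < 0:
        raise ValueError("usable_tb must be non-negative")
    if reduction_ratio < 1:
        raise ValueError("reduction_ratio is N in N:1 and must be >= 1")
    return usable_tb * reduction_ratio


def restore_points(effective_tb: float, full_tb: float, incremental_tb: float) -> int:
    """Restore points in a simple chain: one full backup followed by incrementals.

    Sizes are logical (pre-reduction) sizes as reported by the backup job.
    Returns 0 if even the first full backup does not fit.
    """
    if full_tb <= 0 or incremental_tb <= 0:
        raise ValueError("backup sizes must be positive")
    if effective_tb < full_tb:
        return 0
    # The full counts as one restore point; each incremental adds another.
    return 1 + math.floor((effective_tb - full_tb) / incremental_tb)


# Hypothetical example figures (not vendor specifications).
capacity = effective_capacity_tb(usable_tb=50, reduction_ratio=10)
print(capacity)                          # 500
print(restore_points(capacity, 40, 4))   # 116
```

In practice, repeated full backups of the same VMs tend to deduplicate very well against each other on a dedupe-optimized array, so treat this as a floor-style estimate rather than a sizing tool.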

So the options are as follows:

- iSCSI or FC LUN to your server as a Veeam Backup Repo.

  - Instant VM Recovery

  - Backup Repository

  - SureBackup / SureReplica

  - Virtual Labs

- Replication Target for an existing Nimble.

  - Utilizing Veeam Storage Integration

    - Backup VMs from Secondary Storage Snapshot

    - Control Nimble Storage Snapshot schedules and replication of volumes

If we take option one, we open up several capabilities directly within Veeam. You can use the high IOPS and low latency for features such as Instant VM Recovery, whereby the Veeam Backup and Replication server hosts an NFS datastore for your virtual environment and quickly spins up a running copy of your recovered virtual machine with little fuss.
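
Instant VM Recovery only works if your hosts can mount that NFS datastore, so a firewall blocking NFS traffic between the hosts and the Veeam server is a common stumbling block. As a rough pre-check, the Python sketch below tests TCP reachability of the standard portmapper (111) and NFS (2049) ports. It rests on several assumptions: Veeam's vPower NFS service also uses other ports (check Veeam's port reference for your version), UDP is not tested, and running it from a workstation only approximates what the ESXi hosts themselves can reach.

```python
import socket

# Assumption: only the standard NFS ports are checked. Veeam's vPower NFS
# service uses additional ports, so consult Veeam's port list for your version.
NFS_PORTS = {111: "rpcbind/portmapper", 2049: "nfs"}


def port_open(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout seconds."""
    try:
        # create_connection resolves the name and attempts a TCP handshake.
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name-resolution failures.
        return False


def check_mount_server(host: str, timeout: float = 2.0) -> dict:
    """Map each standard NFS port to whether it accepted a TCP connection."""
    return {port: port_open(host, port, timeout) for port in NFS_PORTS}
```

For example, `check_mount_server("veeam-mount01.lab.local")` (a hypothetical host name) returns `{111: True, 2049: True}` when both ports accept TCP connections; any `False` value is worth investigating before relying on Instant VM Recovery.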

Continue reading First Look – Leveraging the Nimble Secondary Flash Array with Veeam – Setup guide
