So I’m in the middle of upgrading an environment that has:

- 2 x Hosts on ESX 4.0

- SAN using SAS connections to Hosts

- No resources available for failover and testing

- Limited network ports

I’m installing:

- 3 x Hosts on ESXi 5.5

- Nimble SAN – iSCSI

So to move all the virtual machines from the SAS SAN to the Nimble, I need to move the VMs whilst they are powered on (Storage vMotion).

I’ve set up the iSCSI networking on the ESX host by:

- Creating VMkernel ports with IP addresses

- Mapping one vmnic directly to each VMkernel port

- Setting the MTU to 9000 (jumbo frames)
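For reference, the networking steps above could be scripted on an ESX 4.0 host roughly as follows. This is a minimal sketch, not necessarily how it was done here: it assumes a separate vSwitch per vmnic so that each VMkernel port has exactly one physical NIC, and the function name `print_vmk_cmds` and every vSwitch, port group, vmnic and IP value are hypothetical. It only prints the `esxcfg-vswitch` and `esxcfg-vmknic` commands, so you can review them before running anything.

```shell
#!/bin/sh
# Hypothetical helper (ESX 4.x service console syntax): print the commands
# for one iSCSI VMkernel port with jumbo frames. Assumes one vSwitch per
# vmnic, so each VMkernel port ends up with a single physical uplink.
print_vmk_cmds() {
  vswitch="$1"    # e.g. vSwitch1 (hypothetical)
  vmnic="$2"      # physical uplink, e.g. vmnic2 (hypothetical)
  portgroup="$3"  # e.g. iSCSI1 (hypothetical)
  ip="$4"         # VMkernel IP address on the iSCSI subnet
  netmask="$5"
  echo "esxcfg-vswitch -a $vswitch"                 # create the vSwitch
  echo "esxcfg-vswitch -m 9000 $vswitch"            # jumbo frames on the vSwitch
  echo "esxcfg-vswitch -L $vmnic $vswitch"          # link the single uplink
  echo "esxcfg-vswitch -A $portgroup $vswitch"      # add the port group
  echo "esxcfg-vmknic -a -i $ip -n $netmask -m 9000 $portgroup"  # VMkernel port, MTU 9000
}
```

For example, `print_vmk_cmds vSwitch1 vmnic2 iSCSI1 10.0.0.11 255.255.255.0` (all made-up values) prints the five commands for the first iSCSI port; repeat with the next vSwitch, vmnic and IP for each additional port.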

Next up:

- Add your iSCSI initiator

- Configure it

- Ensure your datastores have been added to your ESX host

- Set your paths to use Round Robin

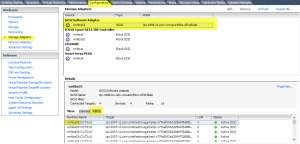
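If you would rather set Round Robin from the command line than through the vSphere Client, ESX 4.x has `esxcli nmp device setpolicy`, which works per device. A minimal sketch that just prints the command for each device ID you pass in; the helper name `print_rr_policy_cmds` is hypothetical, and the device IDs are the ones reported by `esxcli nmp device list`.

```shell
#!/bin/sh
# Hypothetical helper (ESX 4.x syntax): print the command that sets the
# Round Robin path selection policy (VMW_PSP_RR) for each device ID given.
print_rr_policy_cmds() {
  for dev in "$@"; do
    echo "esxcli nmp device setpolicy --device $dev --psp VMW_PSP_RR"
  done
}
```

Printing rather than executing keeps a typo in a device ID from being applied straight to the host; once the output looks right, it can be piped to `sh`.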

Mapping the VMkernel Ports to the iSCSI Software Adapter

This needs to be done from the CLI.

You need the vmhba number of your iSCSI Software Adapter, which you can find under:

Configuration > Storage Adapters > Paths

At the CLI prompt, run:

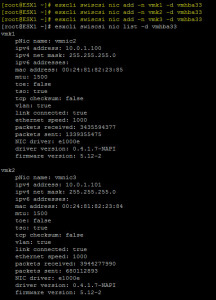

esxcli swiscsi nic list -d vmhba(No.)

This confirms that no VMkernel ports have been “bound” to the iSCSI Software Adapter yet (I use that term loosely, as “port binding” is the name it gets from ESXi 5.0 onwards).

Find the names of your iSCSI VMkernel ports (vmk1, vmk2 and so on), add each one to the adapter with the command below, and then run the list command above again to check the result.

esxcli swiscsi nic add -n vmk(No.) -d vmhba(No.)

For example:

esxcli swiscsi nic add -n vmk3 -d vmhba33
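With several VMkernel ports to bind, a small loop saves retyping. This is a minimal sketch using the ESX 4.x `esxcli swiscsi nic` syntax shown above; the helper name `print_bind_cmds` is hypothetical, and the vmhba and vmk names you pass are your own. It prints one `add` per VMkernel port, followed by the `list` command to confirm the result.

```shell
#!/bin/sh
# Hypothetical helper (ESX 4.x syntax): print the commands that bind each
# VMkernel port to the software iSCSI adapter, then list the bindings.
# Usage: print_bind_cmds <vmhba> <vmk> [<vmk> ...]
print_bind_cmds() {
  hba="$1"
  shift
  for vmk in "$@"; do
    echo "esxcli swiscsi nic add -n $vmk -d $hba"
  done
  echo "esxcli swiscsi nic list -d $hba"
}
```

For example, `print_bind_cmds vmhba33 vmk1 vmk2 vmk3` prints three `add` commands and one `list`; review them, then pipe the output to `sh` on the host.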

The Advanced Settings

Log in to the host CLI so we can configure some additional settings for better performance.

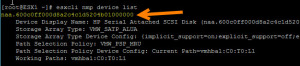

Get your LUN device IDs using the following:

esxcli nmp device list

Take your LUN device IDs and run the following for each one:

esxcli nmp roundrobin setconfig --useANO 1 --device DeviceID

esxcli nmp roundrobin setconfig --device DeviceID --iops 0 --type iops

esxcli nmp roundrobin setconfig --device DeviceID --type bytes --bytes 0

For example:

esxcli nmp roundrobin setconfig --useANO 1 --device eui.ba4cf0185e6840966c9ce900709442aa

esxcli nmp roundrobin setconfig --device eui.ba4cf0185e6840966c9ce900709442aa --iops 0 --type iops

esxcli nmp roundrobin setconfig --device eui.ba4cf0185e6840966c9ce900709442aa --type bytes --bytes 0
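With more than a couple of LUNs, the three commands can be generated from the device list. A minimal sketch with two hypothetical helpers: `list_nimble_ids` assumes that `esxcli nmp device list` prints each device ID at the start of an unindented line (with its properties indented beneath) and that the Nimble volumes use `eui.` IDs, as in the example above; `print_rr_cmds` then prints the same three `setconfig` commands for every ID it reads. Check both assumptions against your own output before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: read `esxcli nmp device list` output on stdin and keep
# only unindented lines starting with "eui." (assumed to be the Nimble IDs).
list_nimble_ids() {
  grep '^eui\.'
}

# Hypothetical helper: for each device ID on stdin, print the three
# roundrobin setconfig commands from above (ESX 4.x syntax).
print_rr_cmds() {
  while read -r dev; do
    [ -n "$dev" ] || continue   # skip blank lines
    echo "esxcli nmp roundrobin setconfig --useANO 1 --device $dev"
    echo "esxcli nmp roundrobin setconfig --device $dev --iops 0 --type iops"
    echo "esxcli nmp roundrobin setconfig --device $dev --type bytes --bytes 0"
  done
}
```

On the host this might be used as `esxcli nmp device list | list_nimble_ids | print_rr_cmds`, reviewing the printed commands before piping them to `sh`.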

What the commands do

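--useANO 1: tells the Round Robin policy to also use active non-optimized (ANO) paths to the storage, not only the optimized ones (the default is 0, which means off).

--iops 0: sets how many I/O operations are sent down one path (and so one NIC) before the host switches to the next path.

--bytes 0: sets how many bytes are sent down one path before the host switches to the next path.

--type chooses which of these two limits triggers the path switch, so whichever of the last two commands runs last decides the type in effect.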
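To make the IOPS limit concrete, here is a deliberately simplified model of Round Robin with an I/O count limit: the first `limit` I/Os go down path 0, the next `limit` down path 1, and so on, wrapping back to the first path. The function `rr_paths` is hypothetical and is not how ESX is implemented internally; it also only accepts limits of 1 or more, so it does not model the `--iops 0` value used above.

```shell
#!/bin/sh
# Simplified model: print which path (0-based) each of $count I/Os would use
# when the host switches paths after every $limit I/Os across $paths paths.
rr_paths() {
  limit="$1"; paths="$2"; count="$3"
  if [ "$limit" -lt 1 ]; then
    echo "limit must be >= 1" >&2
    return 1
  fi
  i=0
  out=""
  while [ "$i" -lt "$count" ]; do
    out="$out$(( (i / limit) % paths )) "   # path for I/O number i
    i=$((i + 1))
  done
  echo "${out% }"                           # drop the trailing space
}
```

For example, with a limit of 3 I/Os across 2 paths, `rr_paths 3 2 8` prints `0 0 0 1 1 1 0 0`; lowering the limit makes the host alternate between paths (and NICs) more often.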

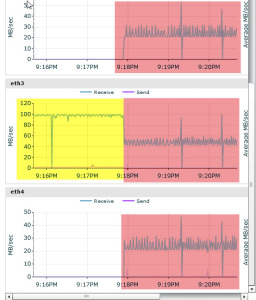

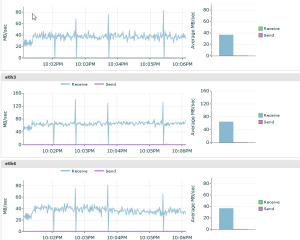

Real World Screenshots

The first screenshot shows, in yellow, the ESX host using only one port to send traffic. At this point the only change I had made was creating a VMkernel port that could talk to the iSCSI discovery address. On the storage device you can see that only one port is in use there too, as if it were a one-to-one mapping.

The red section is after I bound the VMkernel ports (each with one physical NIC assigned) to the iSCSI Software Adapter. As you can see, both storage interfaces start to receive traffic; however, the combined throughput is lower than it was with a single NIC. So the next step was to enable the non-optimized paths and set the IOPS and byte limits.

After this, throughput increases, though it is still slightly uneven at around 50MB/s, 80MB/s and 50MB/s across the NICs. That totals about 180MB/s, around 50MB/s more than when I was using one NIC at the start of all this!

Regards

Dean Lewis