I had the pleasure of working with a customer who wanted to use property groups within vRealize Automation to provide various configuration data to drive their deployments. They asked several questions about using property groups that went beyond the documentation, so I thought the answers would make a good blog post.

What are property groups?

Property groups were introduced in vRealize Automation 7.x and sorely missed when the 8.x version was shipped. They were reintroduced in vRA 8.3.

When you have several properties that always appear together in your Cloud Templates, you can create a property group to store them together.

This allows you to re-use the same properties over and over again across Cloud Templates from a central construct, rather than replicating the same information directly in each Cloud Template.

The benefit of doing this is that if you update any information, the change is pushed to all linked Cloud Templates. This can also be a disadvantage, so once you use property groups in production, be mindful of any updates to in-use groups.

There are two types of property groups. When creating a property group, you select the type. You do not have the ability to change or convert the type once the group has been created.

- Inputs

Input property groups gather and apply a consistent set of properties at user request time. Input property groups can include entries for the user to add or select, or they might include read-only values that are needed by the design.

Properties for the user to edit or select can be readable or encrypted. Read-only properties appear on the request form but can’t be edited. If you want read-only values to remain totally hidden, use a constant property group instead.

- Constants

Constant property groups silently apply known properties. In effect, constant property groups are invisible metadata. They provide values to your Cloud Assembly designs in a way that prevents a requesting user from reading those values or even knowing that they’re present. Examples might include license keys or domain account credentials.
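As a rough sketch of how a constant property group might be consumed, the Cloud Template below binds one of its values to a machine property. This assumes the `${propgroup.<group>.<property>}` binding form described in the VMware documentation. The group name `licensing`, the property `license_key`, the custom property `licenseKey`, and the `ubuntu` and `small` mappings are hypothetical names used only for illustration.

```yaml
# Hypothetical Cloud Template: "licensing" and "license_key" are
# illustrative names for a constant property group and one of its properties.
formatVersion: 1
inputs: {}
resources:
  Cloud_Machine_1:
    type: Cloud.Machine
    properties:
      image: ubuntu        # assumes an image mapping named "ubuntu" exists
      flavor: small        # assumes a flavor mapping named "small" exists
      # The value comes from the constant property group at deployment time;
      # the requesting user is never shown it on the request form.
      licenseKey: '${propgroup.licensing.license_key}'
```

Because nothing is declared under `inputs`, the request form stays empty while the design still receives the value.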

Getting Started with an Input Property Group

Ultimately, an Input Property Group works in exactly the same way as inputs you specify directly on the Cloud Template. The group simply provides a way to centralise these inputs for use across Cloud Templates.
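To make the comparison concrete, here is a minimal sketch of a Cloud Template that pulls its inputs from a property group instead of defining them inline. It assumes the `$ref: /ref/property-groups/<name>` form described in the VMware documentation. The group name `machine`, its properties `image` and `flavor`, and the input name `pgmachine` are hypothetical.

```yaml
# Hypothetical Cloud Template: the property group "machine" and its
# properties "image" and "flavor" are assumptions for illustration.
formatVersion: 1
inputs:
  pgmachine:
    type: object
    title: Machine settings
    # Reference the centrally defined input property group by name.
    $ref: /ref/property-groups/machine
resources:
  Cloud_Machine_1:
    type: Cloud.Machine
    properties:
      # Group properties are read like any other input, one level deeper.
      image: '${input.pgmachine.image}'
      flavor: '${input.pgmachine.flavor}'
```

The only difference from a regular input is the `$ref` line. The definitions of `image` and `flavor` live in the property group, so any other template that references the same group receives the same definitions.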

Create an Input Property Group

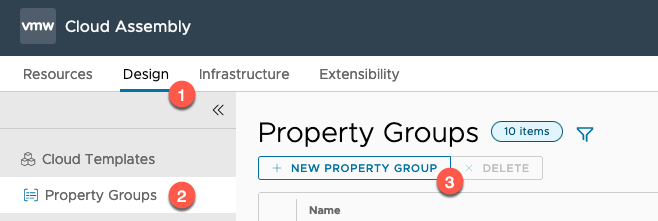

- Click the Design tab

- Click Property Groups from the left-hand navigation pane

- Select New Property Group
