In this blog post we will cover the following topics:

- Restoring a Backup
  - Viewing protected data
  - File Level Recovery
    - File Level Recovery Session Log
  - Virtual Machine Disk Restore
  - Full VM Restore

The follow-up blog posts are:

- Getting started with Veeam Backup for Azure
  - Configuring the backup infrastructure
  - Monitoring
  - Protecting your installation
  - System and session logs
- Configuring a backup policy
  - How a backup policy works
  - Creating a Backup Policy
  - Viewing and Running a Backup Policy
- Integrating with Veeam Backup and Replication
  - Adding your Azure Repository to Veeam Backup and Replication
  - Viewing your protected data
  - What can you do with your data?
    - Restore/Recover/Protect

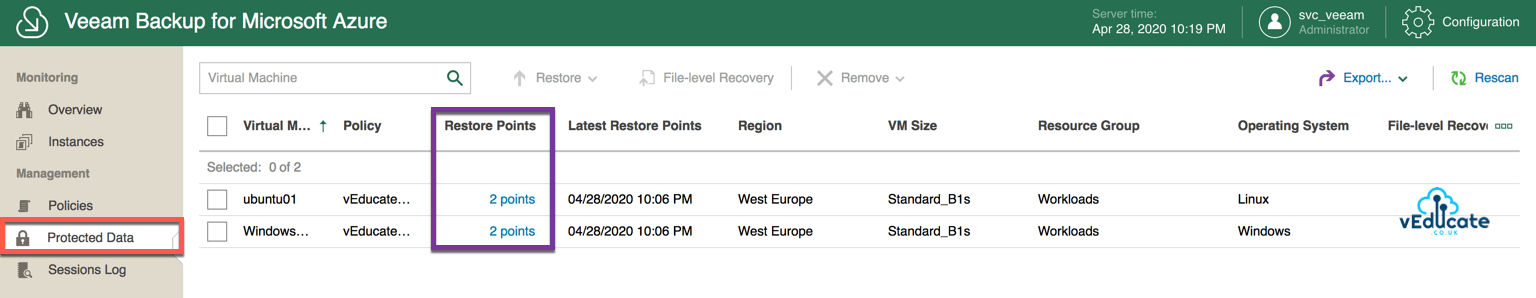

Viewing Protected Data

Once a backup policy has run successfully, navigate to “Protected Data” in the left-hand navigation pane to see details of your protected workloads and the backups stored.

Highlighted in the purple box above, we are able to click on each of our protected virtual machines and see the details of the restore points held.

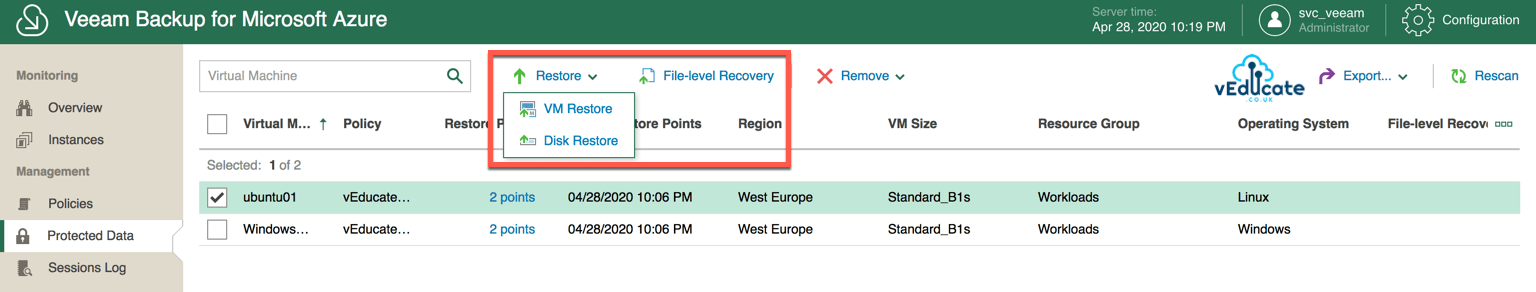

The available restore options are:

- VM Restore
  - Restore a full virtual machine to the same or a different location. This restore uses both the VM configuration and VHD backups.
- Disk Restore
  - Restore only a virtual machine's hard drives to the same or a different location. The restored disks are not attached to any virtual machine when the restore completes.
- File-Level Recovery
  - Restore files and folders from protected instances and download them to your local machine.

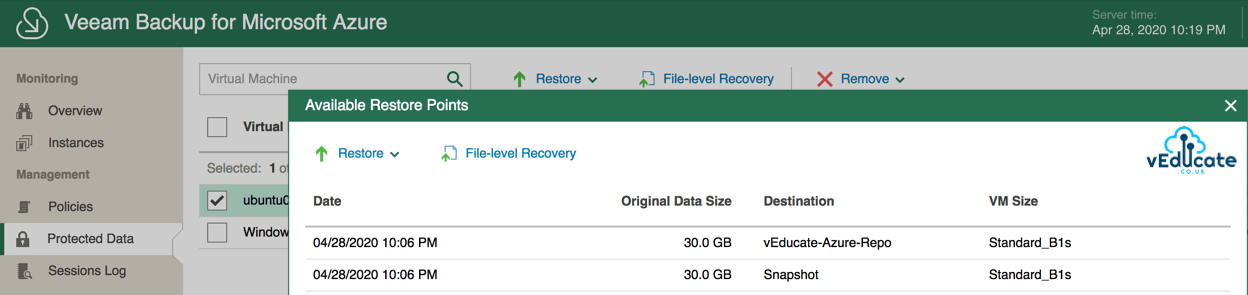

Below, we can see the available restore points for my “Ubuntu01” virtual machine. As the backup policy has only run once, I have a single snapshot held with the VM itself, and a single backup of the full virtual machine (VHDs and VM configuration), which is located in my configured Repository.

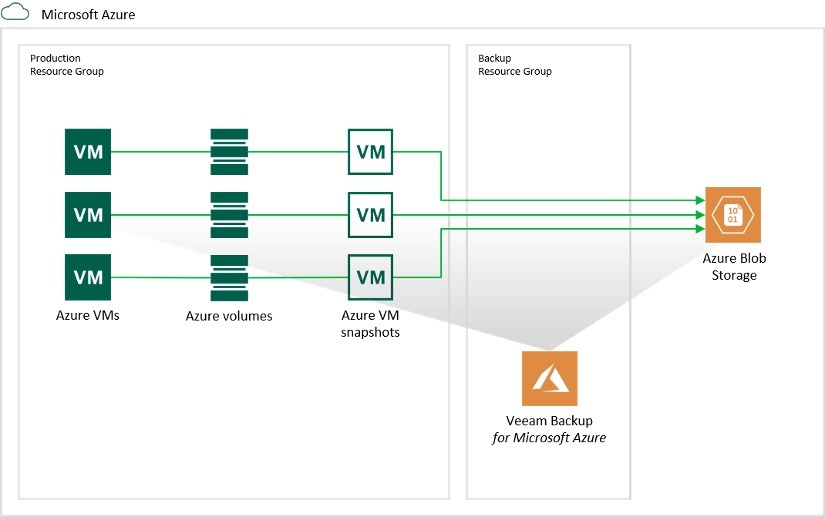

- Backups – Both managed and unmanaged VHDs are saved to the configured Backup Repository.
- Snapshots
  - Managed VHDs – snapshots are saved to the resource group of the source VM.
  - Unmanaged VHDs – snapshots are saved to the Azure Storage Account of the source VHD.
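To make the storage rules above easier to reason about, here is a minimal Python sketch of them as a lookup. It is purely illustrative: `DiskType`, `SourceDisk`, `snapshot_location` and `backup_location` are hypothetical names made up for this example and are not part of any Veeam or Azure API.

```python
from dataclasses import dataclass
from enum import Enum


class DiskType(Enum):
    MANAGED = "managed"
    UNMANAGED = "unmanaged"


@dataclass(frozen=True)
class SourceDisk:
    """Hypothetical description of a protected VHD (not a Veeam API type)."""
    disk_type: DiskType
    resource_group: str   # resource group of the source VM
    storage_account: str  # storage account of the source VHD (unmanaged disks)


def snapshot_location(disk: SourceDisk) -> str:
    """Return where a snapshot of this disk is kept, per the rules above."""
    if disk.disk_type is DiskType.MANAGED:
        # Managed VHD: snapshot sits in the source VM's resource group.
        return f"resource group: {disk.resource_group}"
    # Unmanaged VHD: snapshot sits in the source VHD's storage account.
    return f"storage account: {disk.storage_account}"


def backup_location(disk: SourceDisk, repository: str) -> str:
    """Backups of either disk type go to the configured Backup Repository."""
    return f"backup repository: {repository}"


if __name__ == "__main__":
    # Example values are invented for illustration only.
    disk = SourceDisk(DiskType.MANAGED, "rg-example", "")
    print(snapshot_location(disk))
    print(backup_location(disk, "repo-example"))
```

The point of the sketch is simply that the snapshot location depends on the disk type, while the backup location does not.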

From this view, we can restore the full VM or individual VHDs under the Restore option, or perform a file-level recovery under the second, self-named option.

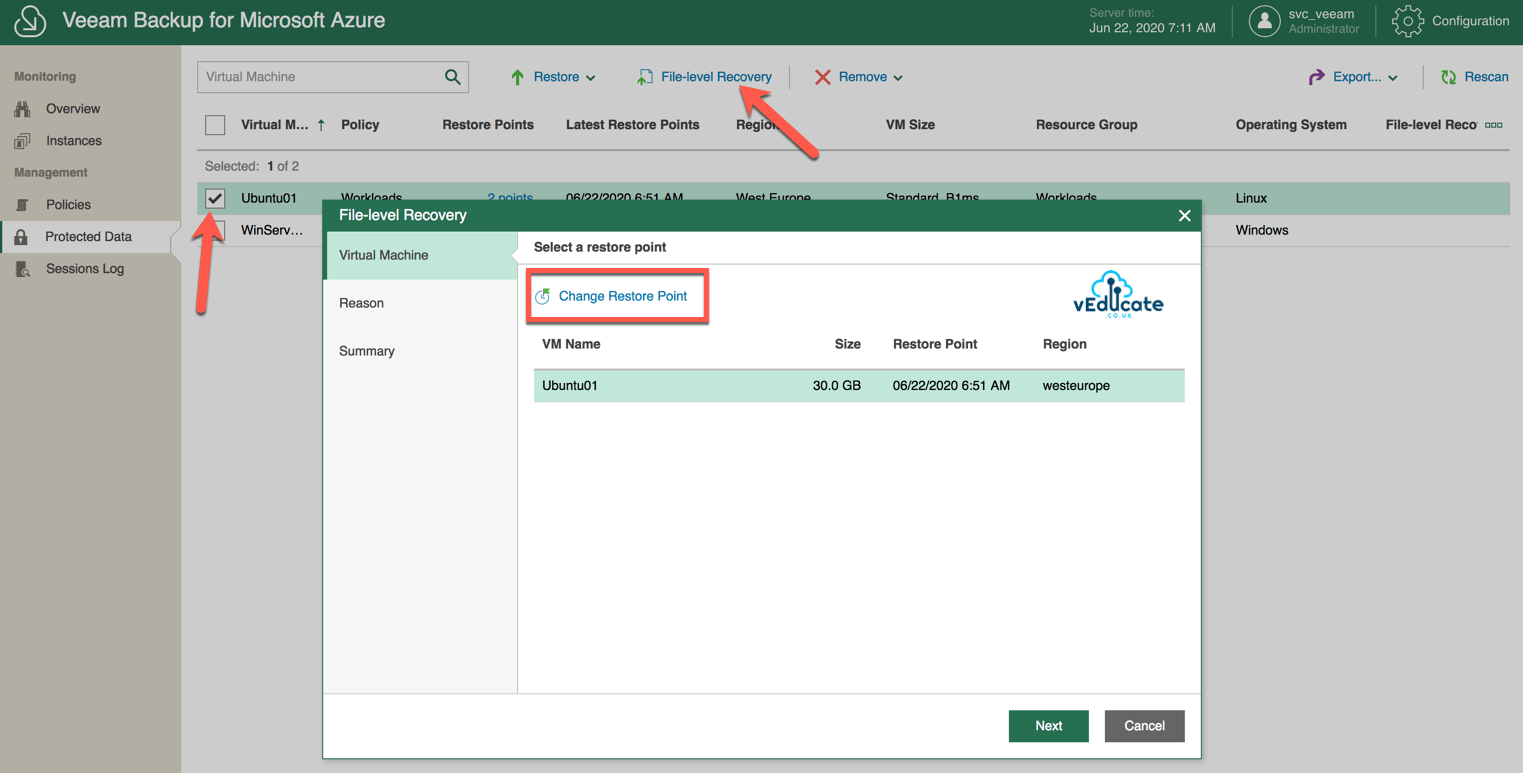

File Level Recovery

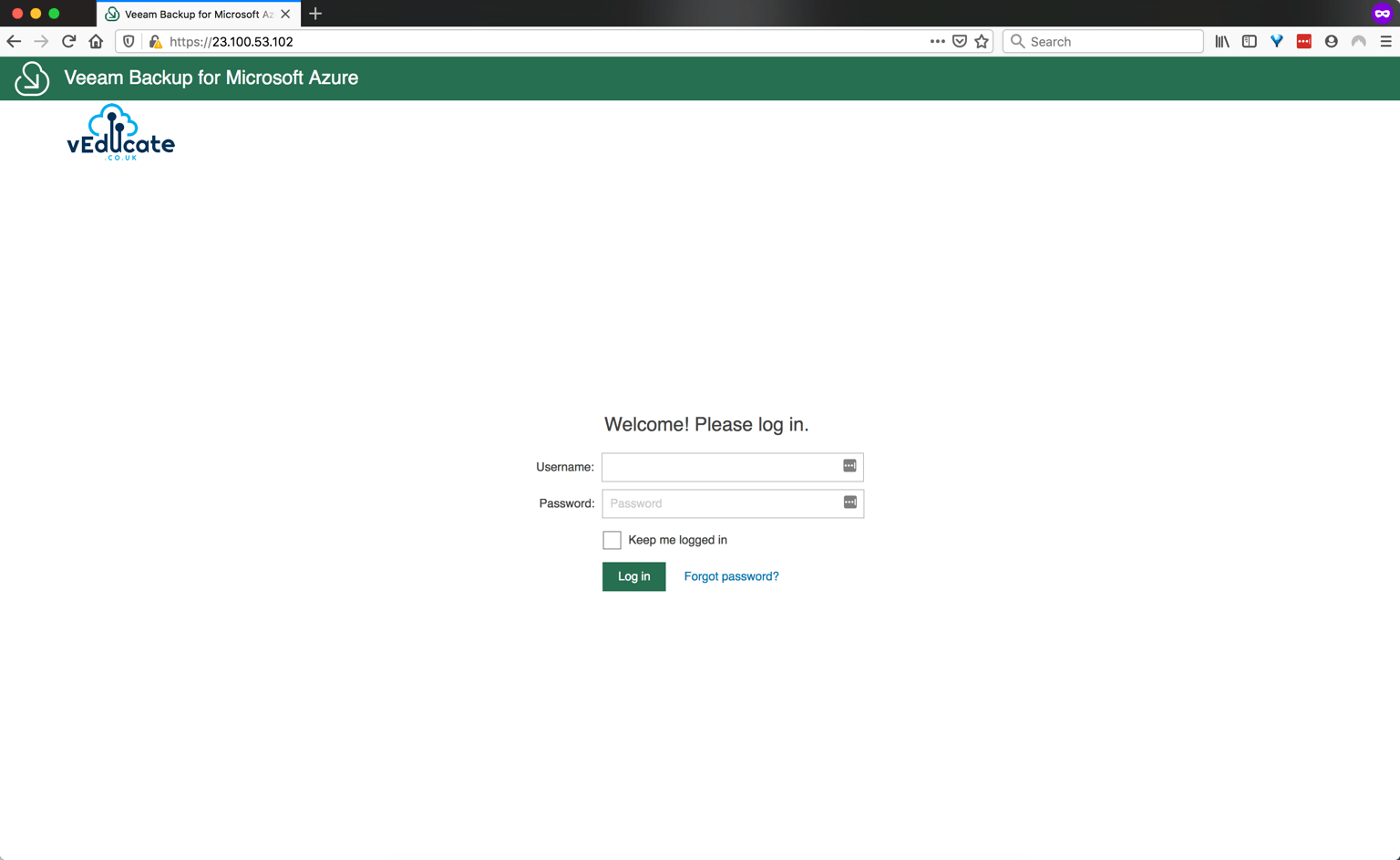

You can start a file-level recovery as shown in the screenshot above, or from the main screen by highlighting your protected VMs and clicking File-Level Recovery.

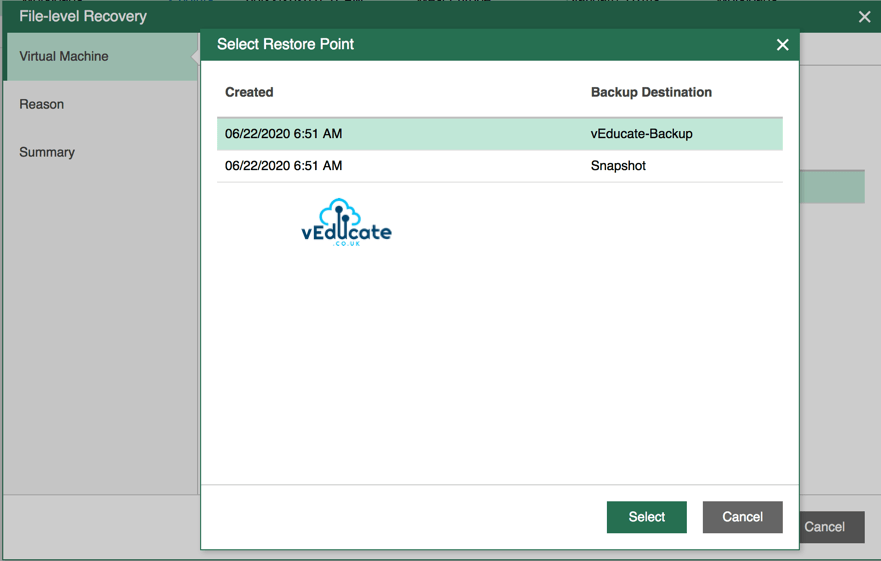

Clicking “Change Restore Point” shows the various points in time available.

Continue reading Veeam Backup for Microsoft Azure – Restoring a Backup