In this post I’m just documenting the steps on how to upgrade the vSphere CSI Driver, especially if you must make a jump in versioning to the latest version.

Upgrade from pre-v2.3.0 CSI Driver version to v2.3.0

You need to figure out what version of the vSphere CSI Driver you are running.

For me it was easy as I could look up the Tanzu Kubernetes Grid release notes. Please refer to your deployment manifests in your cluster. If you are still unsure, contact VMware Support for assistance.

Then you need to find your manifests for your associated version. You can do this by viewing the releases by tag.

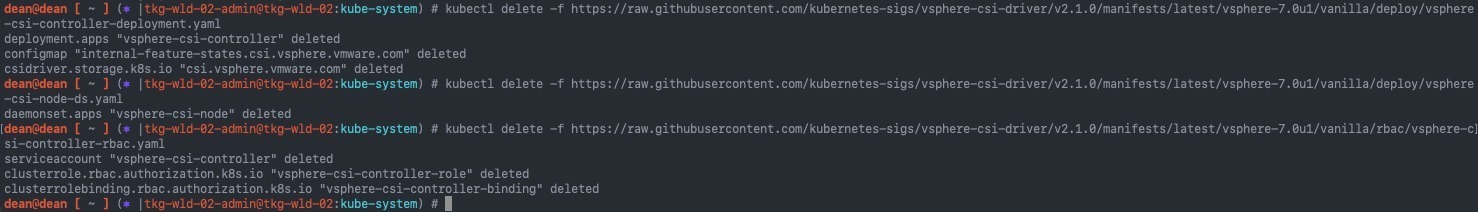

Then remove the resources created by the associated manifests. Below are the commands to remove the version 2.1.0 installation of the CSI.

kubectl delete -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.1.0/manifests/latest/vsphere-7.0u1/vanilla/deploy/vsphere-csi-controller-deployment.yaml kubectl delete -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.1.0/manifests/latest/vsphere-7.0u1/vanilla/deploy/vsphere-csi-node-ds.yaml kubectl delete -f https://raw.githubusercontent.com/kubernetes-sigs/vsphere-csi-driver/v2.1.0/manifests/latest/vsphere-7.0u1/vanilla/rbac/vsphere-csi-controller-rbac.yaml

Now we need to create the new namespace, “vmware-system-csi”, where all new and future vSphere CSI Driver components will run. Continue reading Upgrading the vSphere CSI Driver (Storage Container Plugin) from v2.1.0 to latest