The Issue

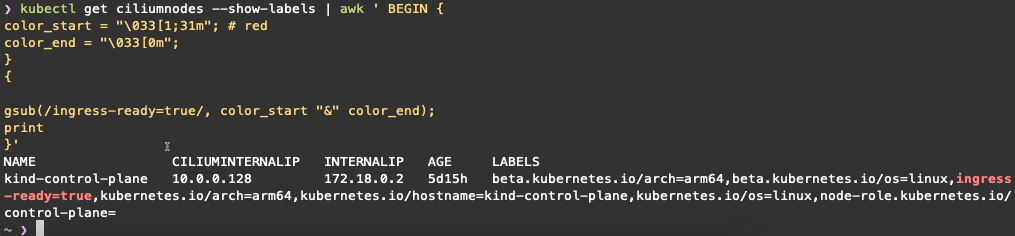

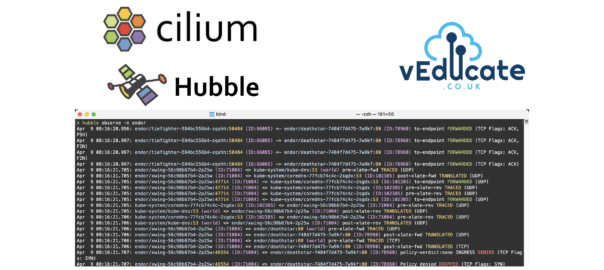

On a platform running Cilium, you may have noticed a field called event_type that holds an integer. It shows up when you view Hubble flows as full JSON output, and when you configure which events are captured using the allowlist or denylist.

Below is an example allowlist that uses event_type to define which flows are captured. When I first saw this, I was confused: where do these numbers come from, and how do I map them back to a friendly name that I understand?

    allowlist:
    - '{"source_pod":["kube-system/"],"event_type":[{"type":1}]}'
    - '{"destination_pod":["kube-system/"],"event_type":[{"type":1}]}'
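
Each allowlist entry is a single-line JSON object, so it can be generated rather than typed by hand. The following is a minimal sketch in Go: the `flowFilter` and `eventTypeFilter` structs and the `allowlistEntry` helper are hypothetical names made up for this illustration, and they model only the three fields used in the entries above, not the full Hubble filter schema.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// eventTypeFilter mirrors one element of the "event_type" list in a filter.
type eventTypeFilter struct {
	Type int `json:"type"`
}

// flowFilter is a hypothetical, partial model of a Hubble flow filter.
// Only the fields that appear in the allowlist above are included.
type flowFilter struct {
	SourcePod      []string          `json:"source_pod,omitempty"`
	DestinationPod []string          `json:"destination_pod,omitempty"`
	EventType      []eventTypeFilter `json:"event_type,omitempty"`
}

// allowlistEntry renders a filter as a single-line JSON string.
func allowlistEntry(f flowFilter) (string, error) {
	b, err := json.Marshal(f)
	if err != nil {
		return "", err
	}
	return string(b), nil
}

func main() {
	filters := []flowFilter{
		{SourcePod: []string{"kube-system/"}, EventType: []eventTypeFilter{{Type: 1}}},
		{DestinationPod: []string{"kube-system/"}, EventType: []eventTypeFilter{{Type: 1}}},
	}
	for _, f := range filters {
		entry, err := allowlistEntry(f)
		if err != nil {
			panic(err)
		}
		// Print each entry as a quoted YAML list item.
		fmt.Printf("- '%s'\n", entry)
	}
}
```

Running this prints the same two list items as the allowlist above, because `encoding/json` emits struct fields in declaration order and drops the empty ones marked `omitempty`.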

Here is an example Hubble dynamic exporter configuration:

    hubble:
      export:
        dynamic:
          enabled: true
          config:
            enabled: true
            content:
            - name: "test001"
              filePath: "/var/run/cilium/hubble/test001.log"
              fieldMask: []
              includeFilters: []
              excludeFilters: []
              end: "2023-10-09T23:59:59-07:00"
            - name: "test002"
              filePath: "/var/run/cilium/hubble/test002.log"
              fieldMask: ["source.namespace", "source.pod_name", "destination.namespace", "destination.pod_name", "verdict"]
              includeFilters:
              - source_pod: ["default/"]
                event_type:
                - type: 1
              - destination_pod: ["frontend/webserver-975996d4c-7hhgt"]

And finally, here is a Hubble flow in full JSON output, with event_type showing towards the end of the flow object:

    {
      "flow": {
        "time": "2024-07-08T10:09:24.173232166Z",
        "uuid": "755b0203-d456-452d-b399-4fa136cdb4fd",
        "verdict": "FORWARDED",
        "ethernet": {
          "source": "06:29:73:4e:0a:c5",
          "destination": "26:50:d8:4a:94:d2"
        },
        "IP": {
          "source": "10.0.2.163",
          "destination": "130.211.198.204",
          "ipVersion": "IPv4"
        },
        "l4": {
          "TCP": {
            "source_port": 37736,
            "destination_port": 443,
            "flags": {
              "PSH": true,
              "ACK": true
            }
          }
        },
        "source": {
          "ID": 2045,
          "identity": 14398,
          "namespace": "endor",
          "labels": [
            "k8s:app.kubernetes.io/name=tiefighter"
          ],
          "pod_name": "tiefighter-6b56bdc869-2t6wn",
          "workloads": [
            {
              "name": "tiefighter",
              "kind": "Deployment"
            }
          ]
        },
        "destination": {
          "identity": 16777217,
          "labels": [
            "cidr:130.211.198.204/32",
            "reserved:world"
          ]
        },
        "Type": "L3_L4",
        "node_name": "kind-worker",
        "destination_names": [
          "disney.com"
        ],
        "event_type": {
          "type": 4,
          "sub_type": 3
        },
        "traffic_direction": "EGRESS",
        "trace_observation_point": "TO_STACK",
        "is_reply": false,
        "Summary": "TCP Flags: ACK, PSH"
      },
      "node_name": "kind-worker",
      "time": "2024-07-08T10:09:24.173232166Z"
    }

The Explanation

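Cilium event types are defined as constants in a Go package in the Cilium source (pkg/monitor/api). The first constant is set to iota, which starts at 0 and then increments by one for each constant that follows, so drop = 1, debug = 2, and so on. The agent-level events are assigned explicit values instead (129 and 130).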

    const (
        // 0-128 are reserved for BPF datapath events
        MessageTypeUnspec = iota

        // MessageTypeDrop is a BPF datapath notification carrying a DropNotify
        // which corresponds to drop_notify defined in bpf/lib/drop.h
        MessageTypeDrop

        // MessageTypeDebug is a BPF datapath notification carrying a DebugMsg
        // which corresponds to debug_msg defined in bpf/lib/dbg.h
        MessageTypeDebug

        // MessageTypeCapture is a BPF datapath notification carrying a DebugCapture
        // which corresponds to debug_capture_msg defined in bpf/lib/dbg.h
        MessageTypeCapture

        // MessageTypeTrace is a BPF datapath notification carrying a TraceNotify
        // which corresponds to trace_notify defined in bpf/lib/trace.h
        MessageTypeTrace

        // MessageTypePolicyVerdict is a BPF datapath notification carrying a PolicyVerdictNotify
        // which corresponds to policy_verdict_notify defined in bpf/lib/policy_log.h
        MessageTypePolicyVerdict

        // MessageTypeRecCapture is a BPF datapath notification carrying a RecorderCapture
        // which corresponds to capture_msg defined in bpf/lib/pcap.h
        MessageTypeRecCapture

        // MessageTypeTraceSock is a BPF datapath notification carrying a TraceNotifySock
        // which corresponds to trace_sock_notify defined in bpf/lib/trace_sock.h
        MessageTypeTraceSock

        // 129-255 are reserved for agent level events

        // MessageTypeAccessLog contains a pkg/proxy/accesslog.LogRecord
        MessageTypeAccessLog = 129

        // MessageTypeAgent is an agent notification carrying a AgentNotify
        MessageTypeAgent = 130
    )

    const (
        MessageTypeNameDrop          = "drop"
        MessageTypeNameDebug         = "debug"
        MessageTypeNameCapture       = "capture"
        MessageTypeNameTrace         = "trace"
        MessageTypeNameL7            = "l7"
        MessageTypeNameAgent         = "agent"
        MessageTypeNamePolicyVerdict = "policy-verdict"
        MessageTypeNameRecCapture    = "recorder"
        MessageTypeNameTraceSock     = "trace-sock"
    )
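
To make the numbering concrete, the two constant blocks above can be combined into a small lookup table. This is a self-contained sketch that copies the values instead of importing the Cilium package; the lower-case constant names, the `eventTypeNames` map and the `eventTypeName` helper are hypothetical. Pairing 129 with "l7" and 130 with "agent" is an assumption based on the constant names (MessageTypeAccessLog and MessageTypeAgent).

```go
package main

import "fmt"

// Datapath event types, numbered by iota exactly as in the listing above.
const (
	messageTypeUnspec        = iota // 0
	messageTypeDrop                 // 1
	messageTypeDebug                // 2
	messageTypeCapture              // 3
	messageTypeTrace                // 4
	messageTypePolicyVerdict        // 5
	messageTypeRecCapture           // 6
	messageTypeTraceSock            // 7
)

// Agent-level event types use explicit values.
const (
	messageTypeAccessLog = 129
	messageTypeAgent     = 130
)

// eventTypeNames maps each number to its friendly name.
// The l7 and agent pairings are assumptions inferred from the constant names.
var eventTypeNames = map[int]string{
	messageTypeDrop:          "drop",
	messageTypeDebug:         "debug",
	messageTypeCapture:       "capture",
	messageTypeTrace:         "trace",
	messageTypePolicyVerdict: "policy-verdict",
	messageTypeRecCapture:    "recorder",
	messageTypeTraceSock:     "trace-sock",
	messageTypeAccessLog:     "l7",
	messageTypeAgent:         "agent",
}

// eventTypeName returns the friendly name for a numeric event type,
// or a placeholder when the number is not in the table.
func eventTypeName(t int) string {
	if name, ok := eventTypeNames[t]; ok {
		return name
	}
	return fmt.Sprintf("unknown(%d)", t)
}

func main() {
	for _, t := range []int{1, 4, 5, 129} {
		fmt.Printf("%d = %s\n", t, eventTypeName(t))
	}
}
```

With this table, the `"type": 1` used in the allowlist resolves to drop, and the `"type": 4` in the JSON flow resolves to trace.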

Therefore, in the JSON output above (the last example), event type 4 is trace. This event type also has a sub_type; in that flow, sub_type 3 lines up with the trace_observation_point value TO_STACK. The available types and sub-types are listed in the Hubble CLI help output shown here, and you can see the definitions in the Go package.

    -t, --type filter   Filter by event types TYPE[:SUBTYPE]. Available types and subtypes:
                        TYPE             SUBTYPE
                        capture          n/a
                        drop             n/a
                        l7               n/a
                        policy-verdict   n/a
                        trace            from-endpoint
                                         from-host
                                         from-network
                                         from-overlay
                                         from-proxy
                                         from-stack
                                         to-endpoint
                                         to-host
                                         to-network
                                         to-overlay
                                         to-proxy
                                         to-stack
                        trace-sock       n/a
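
The TYPE[:SUBTYPE] syntax from the help output is easy to check in a script before passing it on. The sketch below is a hypothetical validator, not how the Hubble CLI parses the flag: `parseTypeFilter`, `knownTypes` and `traceSubTypes` are names made up here, and the allowed values are simply copied from the table above.

```go
package main

import (
	"fmt"
	"strings"
)

// knownTypes lists the event types from the help output above.
var knownTypes = map[string]bool{
	"capture": true, "drop": true, "l7": true,
	"policy-verdict": true, "trace": true, "trace-sock": true,
}

// traceSubTypes lists the sub-types that the help output shows for trace.
var traceSubTypes = map[string]bool{
	"from-endpoint": true, "from-host": true, "from-network": true,
	"from-overlay": true, "from-proxy": true, "from-stack": true,
	"to-endpoint": true, "to-host": true, "to-network": true,
	"to-overlay": true, "to-proxy": true, "to-stack": true,
}

// parseTypeFilter splits a TYPE[:SUBTYPE] string and checks both parts
// against the tables above. Only trace accepts a sub-type.
func parseTypeFilter(s string) (string, string, error) {
	typ, sub, hasSub := strings.Cut(s, ":")
	if !knownTypes[typ] {
		return "", "", fmt.Errorf("unknown event type %q", typ)
	}
	if !hasSub {
		return typ, "", nil
	}
	if typ != "trace" || !traceSubTypes[sub] {
		return "", "", fmt.Errorf("unknown sub-type %q for event type %q", sub, typ)
	}
	return typ, sub, nil
}

func main() {
	for _, arg := range []string{"drop", "trace:to-stack", "drop:to-stack"} {
		typ, sub, err := parseTypeFilter(arg)
		if err != nil {
			fmt.Println("invalid:", err)
			continue
		}
		fmt.Printf("type=%s subtype=%q\n", typ, sub)
	}
}
```

Here "drop" and "trace:to-stack" are accepted, while "drop:to-stack" is rejected because the table lists no sub-types for drop.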

I hope this helps!

Regards

Dean Lewis