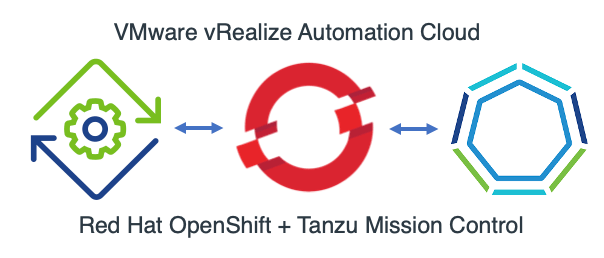

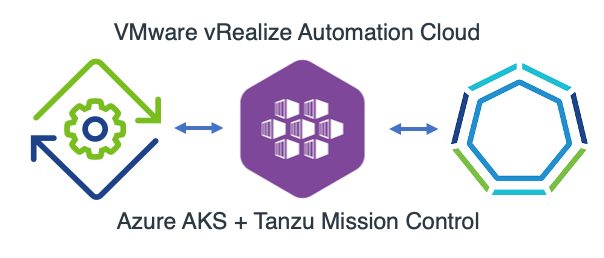

This walk-through will detail the technical configurations for using vRA Code Stream to deploy Red Hat OpenShift Clusters, register them as Kubernetes endpoints in vRA Cloud Assembly and Code Stream, and finally register the newly created cluster in Tanzu Mission Control.

The deployment uses the Installer Provisioned Infrastructure (IPI) method for deploying OpenShift to vSphere, which means the installation tool “openshift-install” provisions the virtual machines and configures them for you, with the cluster using internal load balancing for its API interfaces.

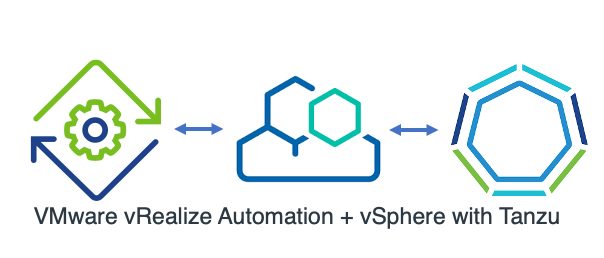

This post mirrors my original blog post on using vRA to deploy AWS EKS clusters.

Pre-reqs

- Red Hat Cloud Account

- With the ability to download and use a Pull Secret for creating OpenShift Clusters

- vRA access to create Code Stream Pipelines and associated objects inside the pipeline when it runs.

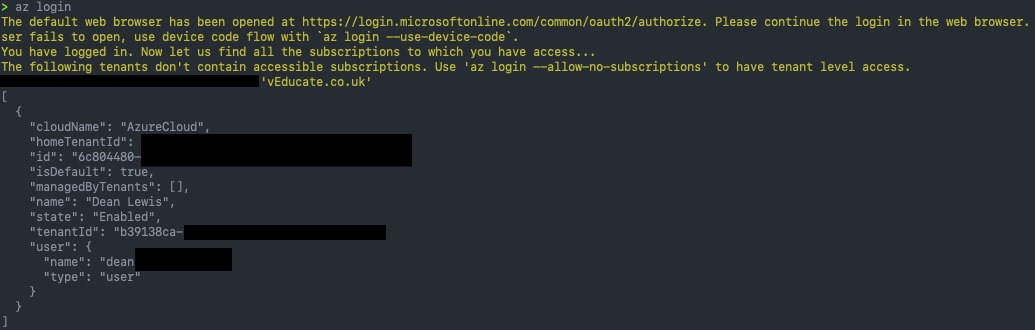

- Get CSP API access token for vRA Cloud or on-premises edition.

- Tanzu Mission Control access with ability to attach new clusters

- Get an CSP API access token for TMC

- vRA Code Stream configured with an available Docker Host that can connect to the network you will deploy the OpenShift clusters to.

- This Docker container is used for the pipeline

- You can find the Dockerfile here, and alter per your needs, including which versions of OpenShift you want to deploy.

- SSH Key for a bastion host access to your OpenShift nodes.

- vCenter account with appropriate permissions to deploy OpenShift

- You can use this PowerCLI script to create the role permissions.

- DNS records created for OpenShift Cluster

- api.{cluster_id}.{base_domain}

- *.apps.{cluster_id}.{base_domain}

- Files to create the pipeline are stored in either of these locations:
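As a rough pre-flight check for the DNS prerequisite above, the two record patterns can be resolved from the Docker host before the pipeline runs. The sketch below is only an illustration: the function names, the `dns-check` probe label, and the example cluster values are assumptions made for this example and are not part of the pipeline files.

```python
import socket


def required_dns_names(cluster_id, base_domain, app_probe="dns-check"):
    """Return the API record plus one probe name covered by the *.apps wildcard.

    app_probe is an arbitrary, hypothetical label; any name under
    *.apps.{cluster_id}.{base_domain} should resolve if the wildcard exists.
    """
    suffix = f"{cluster_id}.{base_domain}"
    return [f"api.{suffix}", f"{app_probe}.apps.{suffix}"]


def unresolved(names, resolver=socket.gethostbyname):
    """Return the subset of names that the resolver fails to resolve."""
    missing = []
    for name in names:
        try:
            resolver(name)
        except OSError:  # socket.gaierror is a subclass of OSError
            missing.append(name)
    return missing
```

Calling `unresolved(required_dns_names("ocp01", "example.com"))` returns any names that still need a record; an empty list suggests both the API record and the wildcard are in place.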

High Level Steps of this Pipeline

- Create an OpenShift Cluster

  - Build an install-config.yaml file to be used by the openshift-install command line tool

  - Create the cluster based on a number of user-provided inputs and vRA Variables

- Register OpenShift Cluster with vRA

  - Create a service account on the cluster

  - Collect details of the cluster

  - Register the cluster as a Kubernetes endpoint for Cloud Assembly and Code Stream using the vRA API

- Register OpenShift Cluster with Tanzu Mission Control

  - Using the API
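To make the first step more concrete, here is a minimal sketch of how an install-config.yaml could be rendered from pipeline inputs. It is not the pipeline's actual task code. The field names follow the vSphere IPI layout used by earlier OpenShift 4.x releases; newer releases have changed parts of the `platform.vsphere` section, so check the installer documentation for your version. The placeholder names (`$base_domain`, `$vc_cluster`, and so on) are assumptions chosen for this example.

```python
from string import Template

# Assumed layout: vSphere IPI install-config as used by earlier OpenShift 4.x
# releases. Placeholder names are hypothetical and map to pipeline inputs/variables.
INSTALL_CONFIG_TEMPLATE = Template("""\
apiVersion: v1
baseDomain: $base_domain
compute:
- name: worker
  replicas: $worker_count
controlPlane:
  name: master
  replicas: $control_plane_count
metadata:
  name: $cluster_id
platform:
  vsphere:
    vcenter: $vcenter
    username: $vc_username
    password: '$vc_password'
    datacenter: $datacenter
    defaultDatastore: $datastore
    cluster: $vc_cluster
    network: $network
    apiVIP: $api_vip
    ingressVIP: $ingress_vip
pullSecret: '$pull_secret'
sshKey: '$ssh_key'
""")


def render_install_config(**values):
    """Fill the template; raises KeyError if any placeholder has no value.

    Values containing a single quote would break the quoted YAML strings,
    so this sketch assumes they do not.
    """
    return INSTALL_CONFIG_TEMPLATE.substitute(values)
```

The rendered text would be written to `install-config.yaml` in a working directory, which is then passed to `openshift-install create cluster --dir <directory>`. Using `substitute` rather than `safe_substitute` is deliberate here: a missing input fails early instead of producing a half-filled file.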

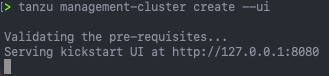

Creating a Code Stream Pipeline to deploy an OpenShift Cluster and register the endpoints with vRA and Tanzu Mission Control

Create the variables to be used

First, we will create several variables in Code Stream. You could change the pipeline tasks to use inputs instead if you wanted.
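The CSP API tokens from the prerequisites are likely candidates for such variables. Before a task can call the vRA Cloud or Tanzu Mission Control APIs, the API token is normally exchanged for a short-lived access token. The sketch below shows that exchange against the VMware Cloud Services endpoint; it covers the cloud-hosted case only (the on-premises vRA login flow differs), and the function names and the injectable `opener` parameter are assumptions for illustration, not the pipeline's own code.

```python
import json
import urllib.parse
import urllib.request

# VMware Cloud Services token exchange endpoint (cloud-hosted case only).
CSP_AUTHORIZE_URL = (
    "https://console.cloud.vmware.com"
    "/csp/gateway/am/api/auth/api-tokens/authorize"
)


def build_token_request(api_token):
    """Build the form-encoded POST that trades an API token for an access token."""
    body = urllib.parse.urlencode({"refresh_token": api_token}).encode("ascii")
    return urllib.request.Request(
        CSP_AUTHORIZE_URL,
        data=body,
        headers={"Content-Type": "application/x-www-form-urlencoded"},
        method="POST",
    )


def fetch_access_token(api_token, opener=urllib.request.urlopen):
    """Send the request and return the access_token field of the JSON reply.

    opener is injectable (hypothetical design choice) so the call can be
    exercised without network access.
    """
    with opener(build_token_request(api_token)) as response:
        return json.load(response)["access_token"]
```

The returned value would then be sent as a bearer token on later API calls. Keeping the API token in a secret-type variable rather than a plain input avoids exposing it in pipeline run details.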