The Issue

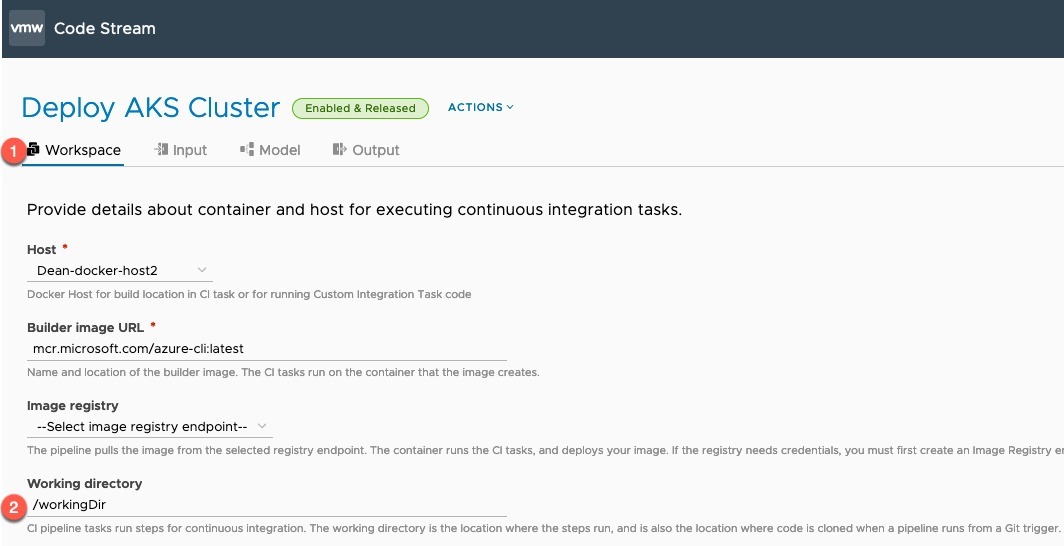

Whilst working with a vRA Code Stream CI task, I needed to build a YAML file in my container to provide values to the CLI tool I was running. Within this YAML file, there is a section of JSON input (yep, I know, it’s a Red Hat thing!).

I wanted to pass in this JSON section as a vRA variable, as it contains my authentication details for the Red Hat Cloud website.

So my vRA variable would be as below:

{"auths":{"cloud.openshift.com":{"auth":"token-key","email":"[email protected]"},"registry.connect.redhat.com":{"auth":"token-key","email":"[email protected]"},"registry.redhat.io":{"auth":"token-key","email":"[email protected]"}}}

So my CI Task looked something like this:

cat << EOF > install-config.yaml
apiVersion: v1
baseDomain: simon.local
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 1
  platform:
    vsphere:
      cpus: 4
      coresPerSocket: 1
      memoryMB: 8192
      osDisk:
        diskSizeGB: 120
PullSecret: '${var.pullSecret}'
EOF

When running the Pipeline, I kept hitting an issue where the task would fail with a message similar to the below.

com.google.gson.stream.MalformedJsonException: Unterminated array at line 1 column 895 path $[39]

The Cause

I believe this is because the tasks are passed to the Docker host running the container via the Docker API in JSON format. The payload then contains my outer wrapping of YAML and, within that, more JSON, so the parser gets confused by the unescaped JSON nested inside the JSON payload.
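To illustrate the suspected failure mode, here is a minimal sketch. It is not the real Code Stream or Docker API payload: the `script` field name and the shortened secret are made up purely for illustration. It shows how pasting raw JSON into a JSON string without escaping ends the string at the first inner double quote.

```shell
#!/bin/sh
# Hypothetical illustration only: "script" is a made-up field name,
# not the real Code Stream or Docker API payload format.
secret='{"auths":{"registry.redhat.io":{"auth":"token-key"}}}'

# Naive templating: the raw JSON is pasted into a JSON string unescaped.
payload="{\"script\":\"pullSecret: '${secret}'\"}"
printf '%s\n' "$payload"

# Splitting on double quotes shows where a parser would consider the
# string value finished: at the first quote inside the pasted JSON.
seen=$(printf '%s\n' "$payload" | cut -d'"' -f4)
printf 'string value ends early: %s\n' "$seen"
```

Everything after that point (`auths`, and so on) is left dangling outside the string, which is the kind of input that could plausibly trigger a malformed JSON error like the one above.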

The Fix

To get around this issue, I encoded my JSON data in Base64 and saved the Base64 string to the vRA variable. Then, in my CI task, I added a line before the file is created that decodes the Base64 value from the vRA variable into an environment variable.
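Producing the Base64 value to store in the variable could look something like the sketch below. The shortened secret and the variable names are placeholders, and it assumes a `base64` command that accepts `-d` for decoding (as GNU coreutils and BusyBox do).

```shell
#!/bin/sh
# Placeholder secret; substitute the real pull secret JSON.
secret='{"auths":{"registry.redhat.io":{"auth":"token-key"}}}'

# Encode, then strip newlines so the value is a single line
# (some base64 builds wrap their output at 76 characters).
encoded=$(printf '%s' "$secret" | base64 | tr -d '\n')
printf '%s\n' "$encoded"

# Round-trip check: decoding should give back the original JSON.
decoded=$(printf '%s' "$encoded" | base64 -d)
if [ "$decoded" = "$secret" ]; then
  echo "round trip OK"
fi
```

The encoded string contains only letters, digits, `+`, `/` and `=`, so it has no quotes or braces that could clash with the surrounding payload.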

Below is my new CI Task code.

export pullSecret="$(echo "${var.pullSecret}" | base64 -d)"

cat << EOF > install-config.yaml
apiVersion: v1
baseDomain: simon.local
compute:
- hyperthreading: Enabled
  name: worker
  replicas: 1
  platform:
    vsphere:
      cpus: 4
      coresPerSocket: 1
      memoryMB: 8192
      osDisk:
        diskSizeGB: 120
PullSecret: '$pullSecret'
EOF
