Intro

This blog post is an accompaniment to the session “vSphere with Tanzu Kubernetes – Day 2 Operations for the VI Admin” created by myself and Simon Conyard, with special thanks to the VMware LiveFire Team for allowing us access to their lab environments to create the technical demo recordings.

You can see the full video with technical demos below (1hr 4 minutes). This blog post acts as a supplement to the recording.

This session recording was first shown at the Canada VMUG Usercon.

- You can watch the VMUG session on-demand here.

- This session is 44 minutes long (and is a little shorter than the one above).

The presentation was pitched at around a level-100/150 introduction to the Kubernetes world, marrying that to your knowledge of VMware vSphere as a VI Admin. It gives you an insight into most of the common areas you will need to think about when, all of a sudden, you are asked to deploy Tanzu Kubernetes and support a team of developers.

Scene Setting

So why are we talking about VMware and Kubernetes? Isn’t VMware the place where I run those legacy things called virtual machines?

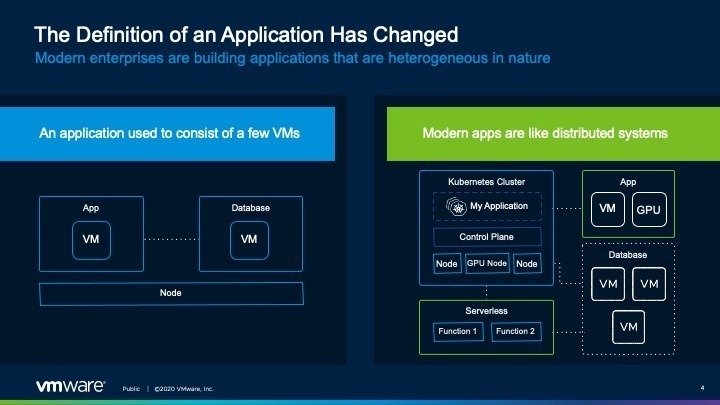

Essentially, the definition of an application has changed. On the left of the image, we have the typical application, where we usually talk about the three-tier model (Web, App, DB).

However, the landscape is moving towards the right-hand side, with applications running more like distributed systems, where the data you need to function is being served, serviced, recorded, and presented not only by virtual machines but by Kubernetes services as well. Kubernetes introduces its own architectures and frameworks, and finally there are the newer buzzwords, serverless and functions.

Although you may not be seeing this change happen in your workplace and infrastructure today, it is the direction of the industry.

Did you know that vRealize Automation 8 is built on a modern container-based microservices architecture?

VMware’s Kubernetes offerings

VMware has two core offerings:

- vSphere Native

- Multi-Cloud Aligned

Within vSphere there are two types of Kubernetes clusters that run natively within ESXi.

- Supervisor Kubernetes cluster control plane for vSphere

- Tanzu Kubernetes Cluster, sometimes also referred to as a “Guest Cluster.”

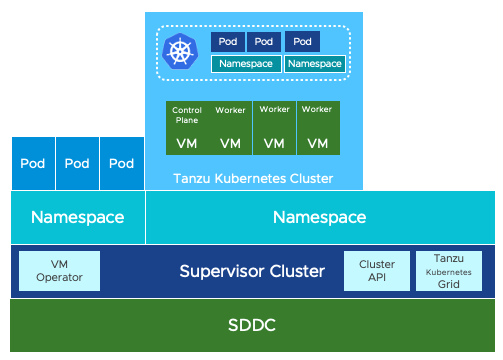

Supervisor Kubernetes Cluster

This is a special Kubernetes cluster that uses ESXi as its worker nodes instead of Linux.

This is achieved by integrating the Kubernetes worker agents, Spherelets, directly into the ESXi hypervisor. This cluster uses vSphere Pod Service to run container workloads natively on the vSphere host, taking advantage of the security, availability, and performance of the ESXi hypervisor.

- To learn more about how vSphere delivers Kubernetes natively

- vSphere 7 Blog – Introduction to the vSphere Pod Service

- vSphere 7 Blog – vSphere Pods Explained

The Supervisor Cluster is, by design, not a conformant Kubernetes cluster; it uses Kubernetes to enhance vSphere. This ultimately gives you the ability to run pods directly on the ESXi host alongside virtual machines, and it acts as the management layer for Tanzu Kubernetes Clusters.

Tanzu Kubernetes Cluster

To deliver Kubernetes clusters to your developers that are standards-aligned and fully conformant with upstream Kubernetes, you can use Tanzu Kubernetes Clusters (also referred to as “Guest” clusters).

A Tanzu Kubernetes Cluster is a Kubernetes cluster that runs inside virtual machines on the Supervisor layer and not on vSphere Pods.

As a fully upstream-compliant Kubernetes it is guaranteed to work with all your Kubernetes applications and tools. Tanzu Kubernetes Clusters in vSphere use the open source Cluster API project for lifecycle management, which in turn uses the VM Operator to manage the VMs that make up the cluster.
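To make the lifecycle idea a little more concrete, here is a minimal sketch of the kind of declarative manifest a Tanzu Kubernetes Cluster is requested with. It is written in Python and simply builds the manifest as a dictionary and prints it as JSON. The `apiVersion` and field layout are my assumption based on the `v1alpha1` TanzuKubernetesCluster API, so check them against the documentation for your vSphere release. The cluster name, namespace, version, VM class and storage class are all hypothetical values, and `build_tkc_manifest` is just an illustrative helper.

```python
import json


def build_tkc_manifest(name, namespace, version, vm_class, storage_class,
                       control_plane_count=1, worker_count=3):
    """Return a TanzuKubernetesCluster manifest as a plain dict.

    The schema here is assumed (v1alpha1-style); verify it against
    the vSphere documentation before using it for real.
    """
    node_settings = {"class": vm_class, "storageClass": storage_class}
    return {
        "apiVersion": "run.tanzu.vmware.com/v1alpha1",  # assumed API version
        "kind": "TanzuKubernetesCluster",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "distribution": {"version": version},
            "topology": {
                "controlPlane": {"count": control_plane_count, **node_settings},
                "workers": {"count": worker_count, **node_settings},
            },
        },
    }


# All values below are hypothetical placeholders.
manifest = build_tkc_manifest(
    name="demo-tkc",
    namespace="dev-apps",
    version="v1.18",
    vm_class="best-effort-small",
    storage_class="vsan-default-storage-policy",
)
print(json.dumps(manifest, indent=2))
```

The point of the sketch is that you describe the desired state (how many control plane and worker nodes, which VM class, which storage policy) and the Supervisor layer is responsible for creating and reconciling the virtual machines to match it.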

Supervisor Cluster or Tanzu Kubernetes Cluster, which one should I choose to run my application?

Supervisor Cluster:

- Has additional capabilities that are inherent in the vSphere environment and are available to Kubernetes via the kubectl command

- Provides the ability to manage containers just as you would manage virtual machines

- Provides stronger security and resource isolation due to the use of vSphere Pods

- Offers the performance advantages of vSphere Pods

Tanzu Kubernetes Cluster:

- Kubernetes clusters that are fully conformant with upstream Kubernetes

- Flexible cluster lifecycle management independent of vSphere, including upgrades

- Ability to add or customize open source & ecosystem tools like Helm Charts

- Broad support for open-source networking technologies such as Antrea

For further information check out the Whitepaper – VMware vSphere with Kubernetes 101

vSphere Native Deployment Options

The above information covers running Kubernetes on your vSphere platform natively. You can deploy as follows:

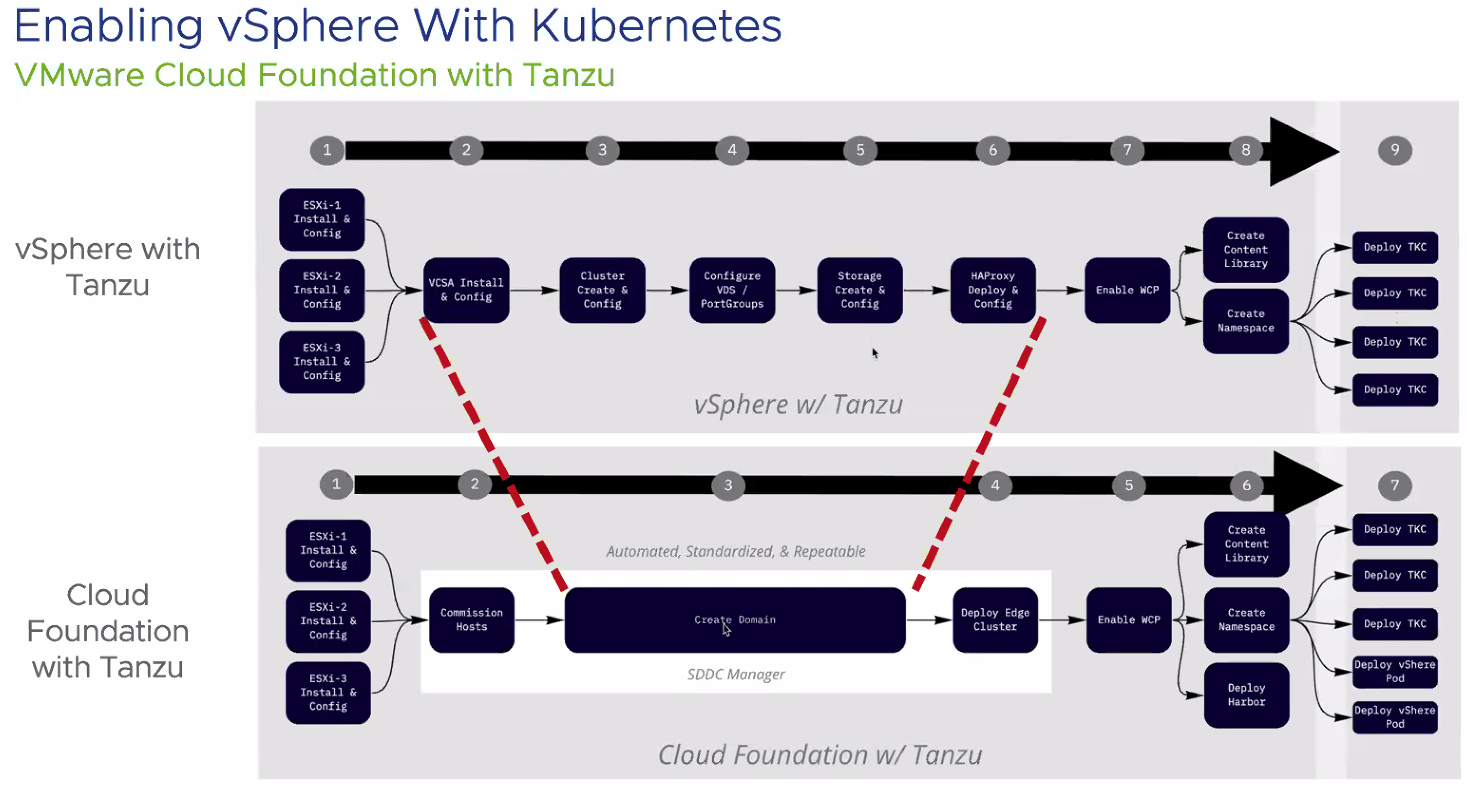

VMware Cloud Foundation is an integrated full stack solution, delivering customers a validated architecture bringing together vSphere, NSX for software defined networking, vSAN for software defined storage, and the vRealize Suite for Cloud Management automation and operation capabilities.

Deploying the vSphere Tanzu Kubernetes solution is as simple as a few clicks in a deployment wizard, providing you a fully integrated Kubernetes deployment into the VMware solutions.

The graphic below summarises the deployment steps for both options discussed.

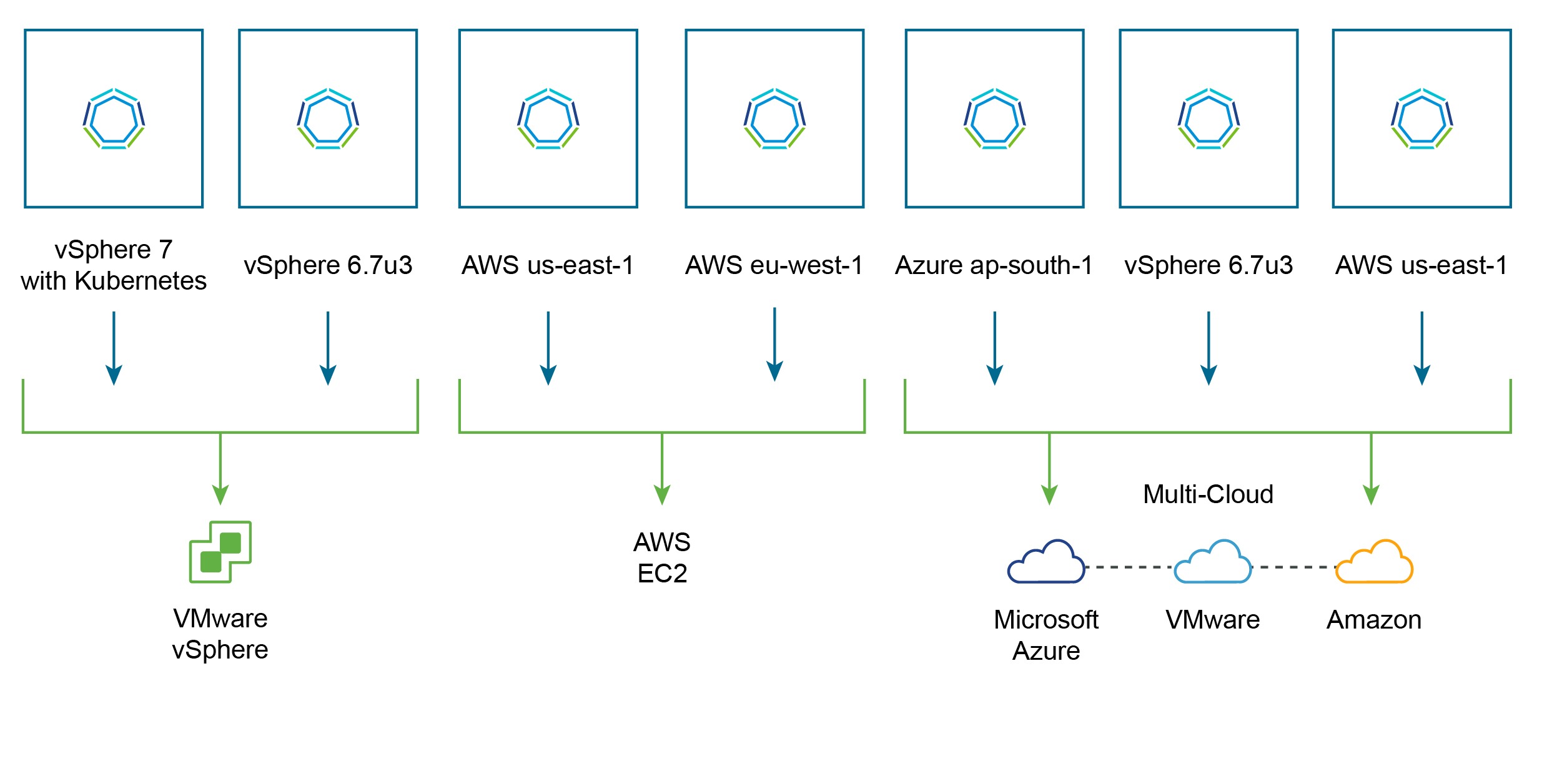

Multi-cloud Deployment Options

- Blog – Become a Modern Software Organization with VMware Tanzu

- Documentation – Tanzu Kubernetes Grid

Building on the Tanzu Kubernetes Cluster explained earlier, Tanzu Kubernetes Grid (TKG) is the same easy-to-upgrade, conformant Kubernetes, with pre-integrated and validated components. It is a multi-cloud Kubernetes offering that you can run both on-premises in vSphere and in the public cloud on Amazon and Microsoft Azure, fully supported by VMware.

- Tanzu Kubernetes Grid (TKG) is the name used for the deployment option which is multi-cloud focused.

- Tanzu Kubernetes Cluster (TKC) is the name used for a Tanzu Kubernetes deployment deployed and managed through a vSphere Namespace.

Introducing vSphere Namespaces

When enabling Kubernetes within a vSphere environment, a supervisor cluster is created within the VMware Data Center. This supervisor cluster is responsible for managing all Kubernetes objects within the VMware Data Center, including vSphere Namespaces. The supervisor cluster, communicating with ESXi, forms the Kubernetes control plane for enabled clusters.

A vSphere Namespace is a logical object that is created on the vSphere Kubernetes supervisor cluster. This object tracks and provides a mechanism to edit the assignment of resources (Compute, Memory, Storage & Network) and access control to Kubernetes resources, such as containers or virtual machines.

You can provide the URL of the Kubernetes control plane to developers as required, where they can then deploy containers to the vSphere Namespaces for which they have permissions.

Resources and permissions are defined on a vSphere Namespace both for Kubernetes containers that consume resources directly via vSphere and for virtual machines configured and provisioned to operate Tanzu Kubernetes Grid (TKG).
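If it helps to picture what a vSphere Namespace is tracking, the following is a small toy model in Python. It is not the vSphere API; the class name, the resource keys, and the "view"/"edit" permission labels are all assumptions for illustration. It only shows the idea that a namespace carries resource limits and access control, and that a deployment request is checked against both.

```python
from dataclasses import dataclass, field


@dataclass
class VSphereNamespace:
    """Toy model of a namespace: resource limits plus access control."""
    name: str
    limits: dict        # e.g. {"cpu_mhz": 4000, "memory_mb": 8192}
    permissions: dict   # principal -> "view" or "edit" (illustrative labels)
    used: dict = field(default_factory=dict)

    def admit(self, principal, request):
        """Accept a request only if the principal can edit and limits hold."""
        if self.permissions.get(principal) != "edit":
            return False
        for resource, amount in request.items():
            limit = self.limits.get(resource)
            # A resource with no configured limit is treated as unlimited.
            if limit is not None and self.used.get(resource, 0) + amount > limit:
                return False
        # Only record usage once every resource has passed the check.
        for resource, amount in request.items():
            self.used[resource] = self.used.get(resource, 0) + amount
        return True


# Hypothetical namespace and users.
ns = VSphereNamespace(
    name="dev-apps",
    limits={"cpu_mhz": 4000, "memory_mb": 8192, "storage_gb": 100},
    permissions={"alice": "edit", "bob": "view"},
)

first = ns.admit("alice", {"cpu_mhz": 1000, "memory_mb": 2048})   # fits, can edit
second = ns.admit("bob", {"cpu_mhz": 500, "memory_mb": 512})      # view only
third = ns.admit("alice", {"cpu_mhz": 3500, "memory_mb": 1024})   # exceeds CPU limit
print(first, second, third)  # True False False
```

In the real product the limits and permissions are set by the VI Admin on the namespace object in vCenter, and the enforcement is done by the platform rather than by anything you write yourself.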

- vSphere 7 Blog – Introduction to Kubernetes and vSphere Namespaces

- vSphere Documentation – vSphere with Tanzu Architecture

Access control

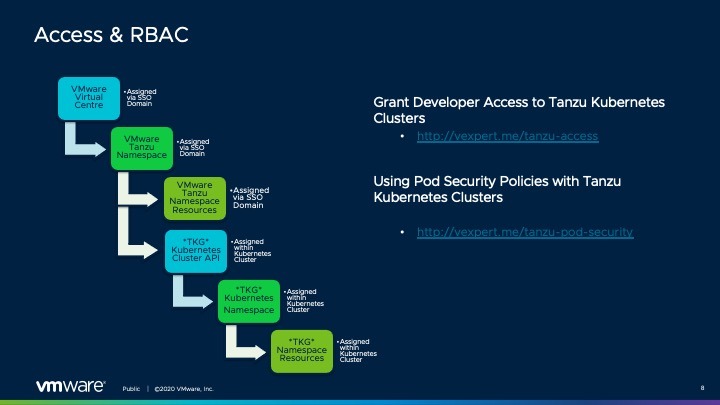

For a Virtual Administrator, the way access is assigned to the various Tanzu elements within the Virtual Infrastructure is very similar to any other logical object:

- Create Roles

- Assign Permissions to the Role

- Allocate the Role to Groups or Individuals

- Link the Group or Individual to inventory objects

With Tanzu, those inventory objects include Namespaces and Resources.
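The four steps above can be sketched as a tiny model in Python. This is purely illustrative and is not how vCenter stores permissions: the `Role` and `AccessModel` classes, the privilege strings, and the group, user and inventory object names are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Role:
    """Steps 1 and 2: a role is a named set of permissions (privileges)."""
    name: str
    privileges: frozenset


@dataclass
class AccessModel:
    group_members: dict = field(default_factory=dict)  # group -> set of users
    bindings: list = field(default_factory=list)       # (principal, role, object)

    def bind(self, principal, role, inventory_object):
        """Steps 3 and 4: give a role to a group or user on an inventory object."""
        self.bindings.append((principal, role, inventory_object))

    def is_allowed(self, user, privilege, inventory_object):
        """True if the user, directly or via a group, holds the privilege."""
        for principal, role, bound_object in self.bindings:
            if bound_object != inventory_object:
                continue
            if privilege not in role.privileges:
                continue
            if principal == user or user in self.group_members.get(principal, set()):
                return True
        return False


# Hypothetical role, group and namespace names.
ns_editor = Role("tanzu-namespace-editor",
                 frozenset({"namespace.view", "namespace.edit"}))
model = AccessModel()
model.group_members["dev-team"] = {"alice", "bob"}
model.bind("dev-team", ns_editor, "namespace/dev-apps")

alice_ok = model.is_allowed("alice", "namespace.edit", "namespace/dev-apps")
carol_ok = model.is_allowed("carol", "namespace.edit", "namespace/dev-apps")
print(alice_ok, carol_ok)  # True False
```

Binding the role to a group rather than to individuals keeps the model easier to maintain, which is the same reasoning you would apply to any other vSphere inventory object.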

I also wanted to highlight that if a Virtual Administrator gives someone administrative permissions to a Kubernetes cluster, this is similar to granting ‘root’ or ‘administrator’ access to a virtual machine. An individual with these permissions could create and grant permissions themselves, outside of the virtual infrastructure.

Documentation

Continue reading A guide to vSphere with Tanzu Kubernetes – Day 2 Operations for the VI Admin