The Issue

When upgrading to vRA SaltStack Config 8.9 using vRealize Suite Lifecycle Manager (vRSLCM), I hit an issue where the upgrade failed because the VAMI version of the appliance was already at the expected build number.

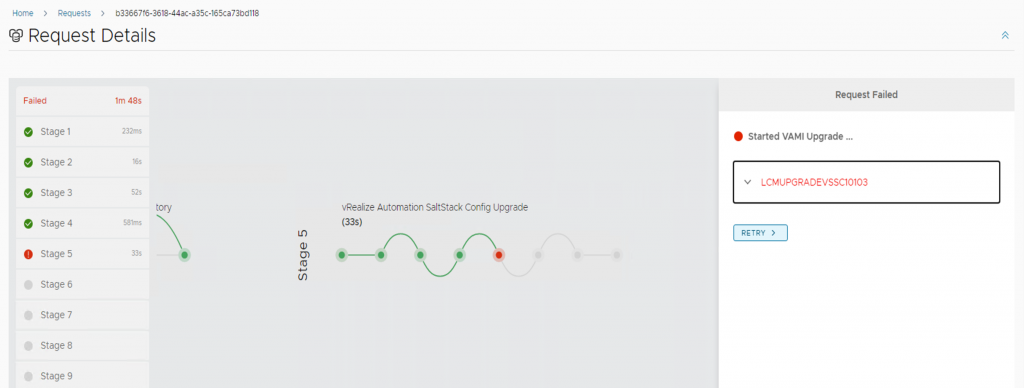

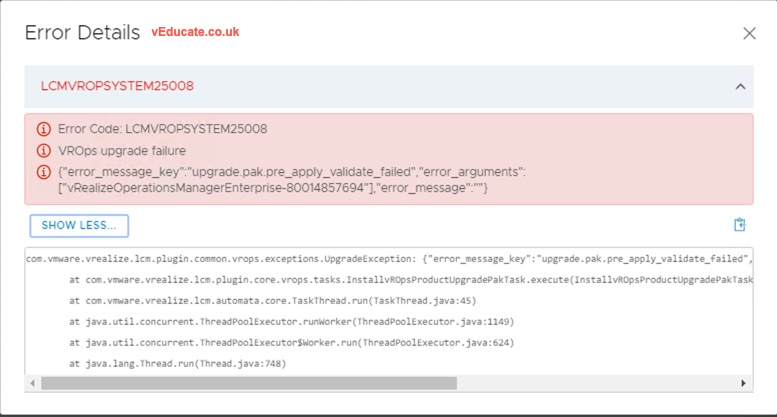

Below is a copy of the error message:

LCMUPGRADEVSSC10103 Error Code: LCMUPGRADEVSSC10103 VAMI upgrade for vRealize Automation SaltStack Config failed. Check vRealize Suite Lifecycle Manager logs for more information. VAMI is already at the version provided for upgrade. Retry the request by passing skipTask as 'true' to skip the VAMI upgrade and proceed further to RAAS upgrade. Check upgrade logs at /var/log/lcm-vami-upgrade.log on the vRealize Automation SaltStack Config host for more details. com.vmware.vrealize.lcm.vsse.common.exception.VsscUpgardeException: VAMI is already at the version provided for upgrade. Retry the request by passing skipTask as 'true' to skip the VAMI upgrade and proceed further to RAAS upgrade. Check upgrade logs at /var/log/lcm-vami-upgrade.log on the vRealize Automation SaltStack Config host for more details. at com.vmware.vrealize.lcm.vsse.core.task.VsscVamiUpgradeTask.execute(VsscVamiUpgradeTask.java:96) at com.vmware.vrealize.lcm

The Fix

Rather than follow the error message and retry the task with the VAMI upgrade skipped, I performed an inventory sync on the environment that SaltStack Config is part of, then retried the task without skipping anything.

This proved successful, which leads me to think that vRSLCM may have missed collecting a piece of information during the upgrade.

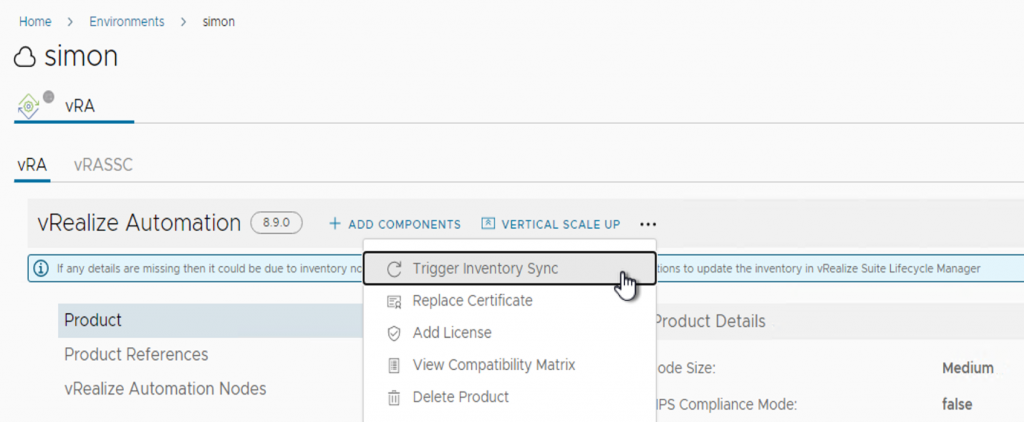

- Go to your environment with SaltStack Config installed

- Click the options to trigger the inventory sync

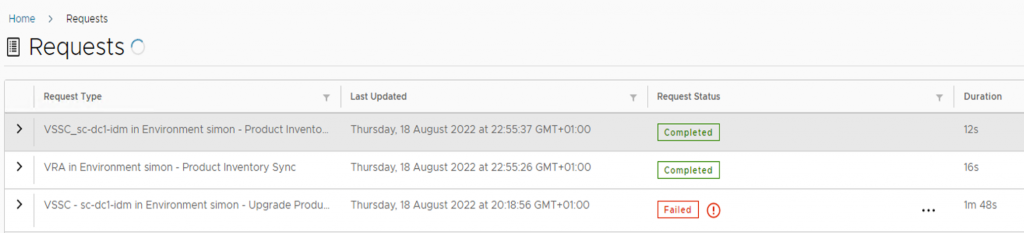

Keep an eye on the requests. Once the inventory sync has completed, click on your failed upgrade request.

Within the request, click to retry.

After that, you should hopefully see a successfully completed request.
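I did all of this through the UI, but if you wanted to script the same order of operations, a rough sketch might look like the one below. It only captures the sequence (sync, wait for the sync to finish, then retry) and deliberately does not call any real vRSLCM API. The three callables, the function name `sync_then_retry`, and the state strings `"COMPLETED"` and `"FAILED"` are all hypothetical placeholders that you would need to wire up to whatever your environment actually exposes.

```python
import time
from typing import Callable


def sync_then_retry(
    trigger_sync: Callable[[], str],
    get_state: Callable[[str], str],
    retry_request: Callable[[], None],
    poll_seconds: float = 10.0,
    max_polls: int = 60,
) -> bool:
    """Trigger an inventory sync, wait for it, then retry the failed upgrade.

    All three callables are hypothetical hooks supplied by the caller:
      trigger_sync  -> starts the inventory sync and returns its request id
      get_state     -> returns the current state string for a request id
      retry_request -> retries the failed upgrade request (no skipTask)
    The state names below are assumptions, not documented vRSLCM values.
    """
    sync_id = trigger_sync()
    for _ in range(max_polls):
        state = get_state(sync_id)
        if state == "COMPLETED":
            # Only retry once the inventory has been refreshed.
            retry_request()
            return True
        if state == "FAILED":
            # A failed sync needs investigating before retrying the upgrade.
            return False
        time.sleep(poll_seconds)
    # Gave up waiting; the upgrade request is left untouched.
    return False
```

The point of passing the callables in is that the retry never fires unless the sync has actually finished, which mirrors what I did by hand while watching the requests page.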

Regards