Recently, I was involved in some work to assist the VMware Tanzu Observability team to assist them in updating their deliverables for OpenShift. Now it’s generally available, I found some time to test it out in my lab.

For this blog post, I am going to pull in metrics from my VMware Cloud on AWS environment and the Red Hat OpenShift Cluster which is deployed upon it.

What is Tanzu Observability?

We should probably start with what is Observability, I could re-create the wheel, but instead VMware has you covered with this helpful page.

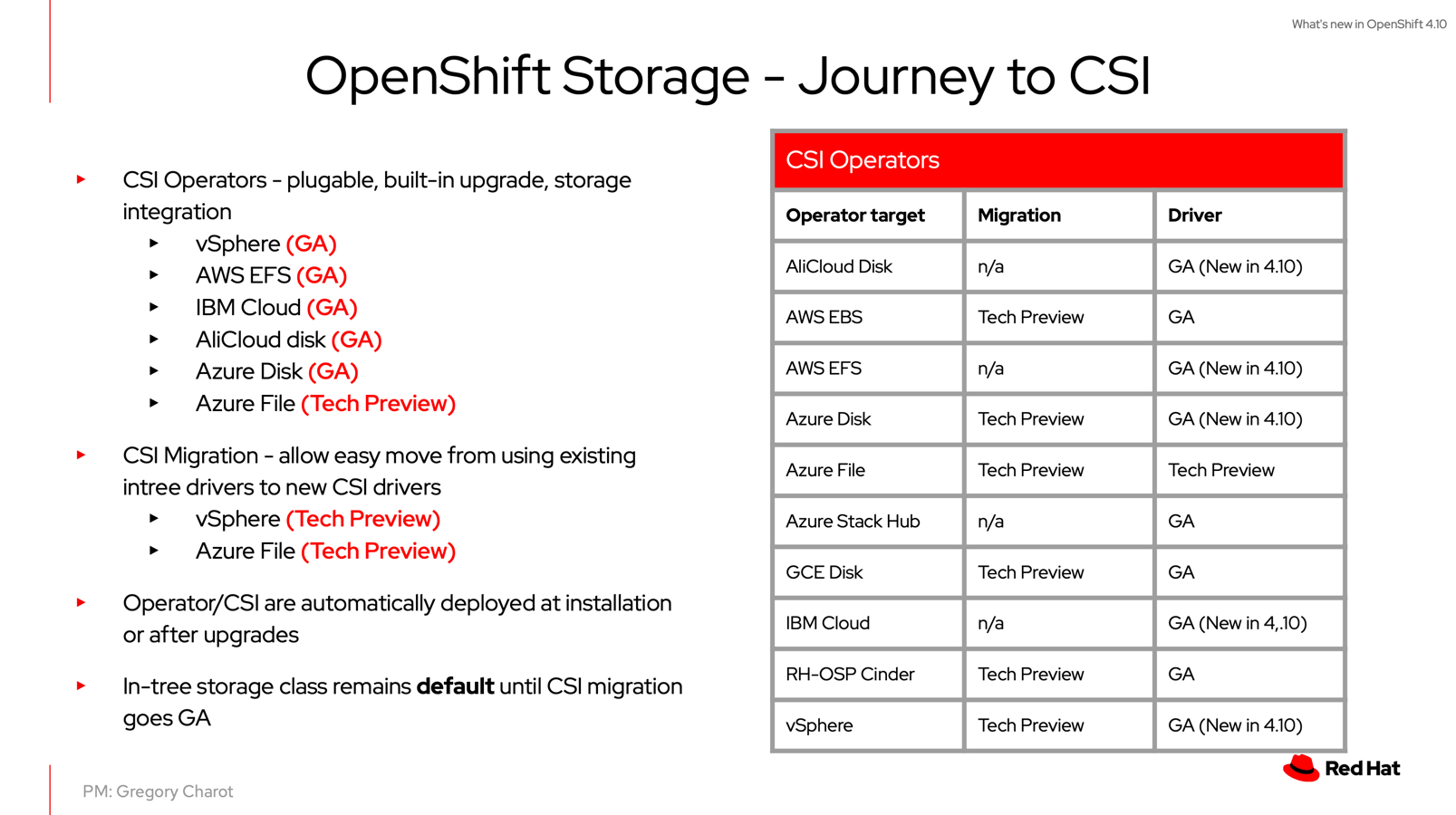

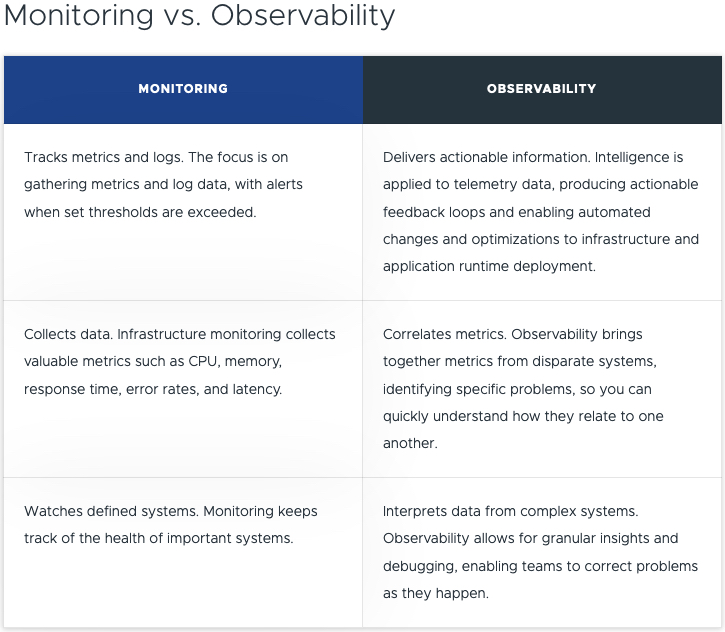

Below is the shortened table comparison.

As a developer you want to focus on developing the application, but you also do need to understand the rest of the stack to a point. In the middle, you have a Site Reliability Engineer (SRE), who covers the platform itself, and availability to ensure the app runs as best it can. And finally, we have the platform owner, where the applications and other services are located.

Somewhere in the middle, when it comes to tooling, you need to cover an example of the areas listed below:

- Application Observability & Root Cause Analysis

- App-aware Troubleshooting & Root Cause Analysis

- Distributed Tracing

- CI/CD Monitoring

- Analytics with Query Language and high reliability, granularity, cardinality, and retention

- Full-Stack Apps & Infra Telemetry as a Service

- Infra Monitoring

- Performance Optimization

- Capacity and Cost Optimization

- Configuration and Compliance

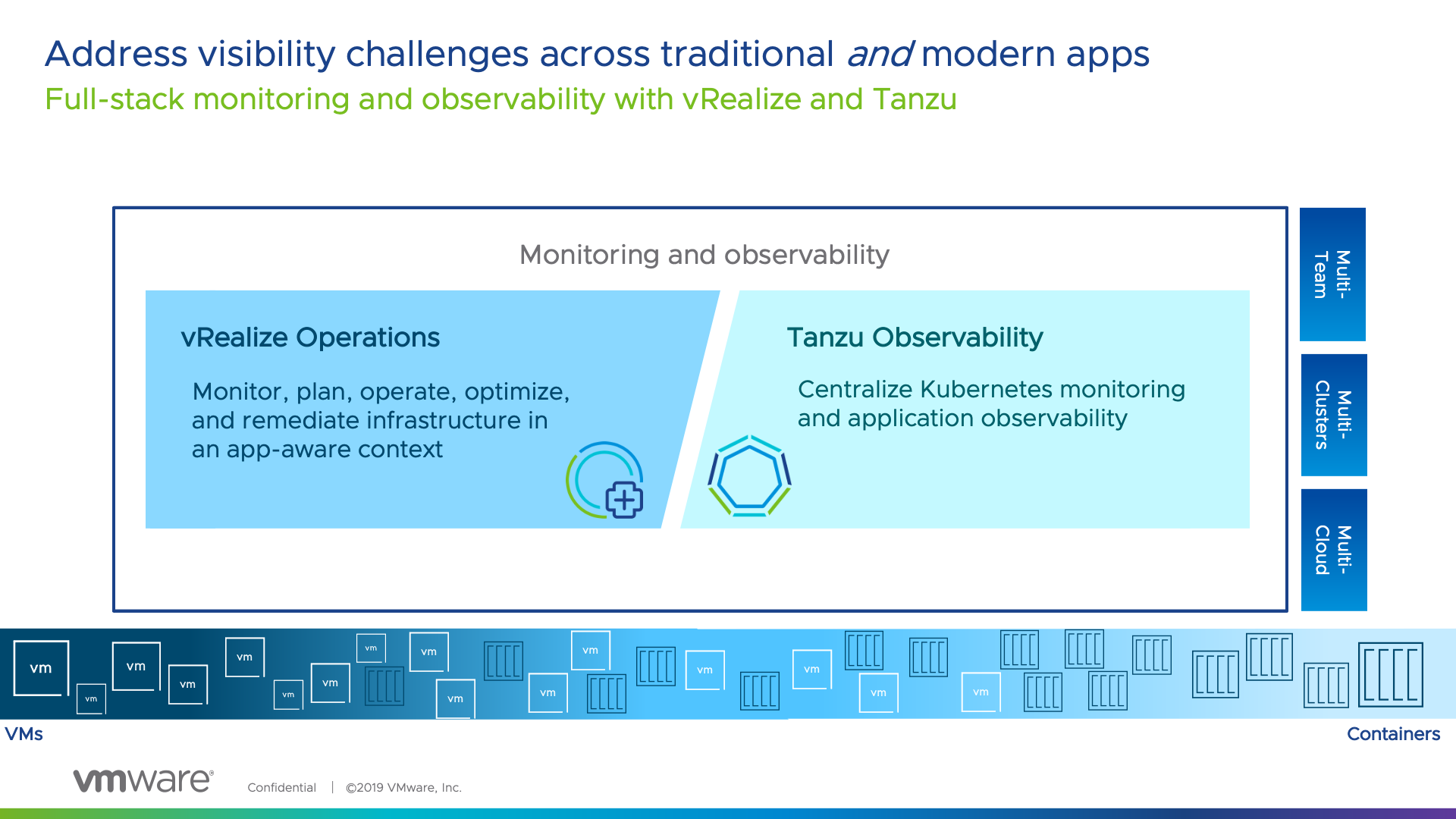

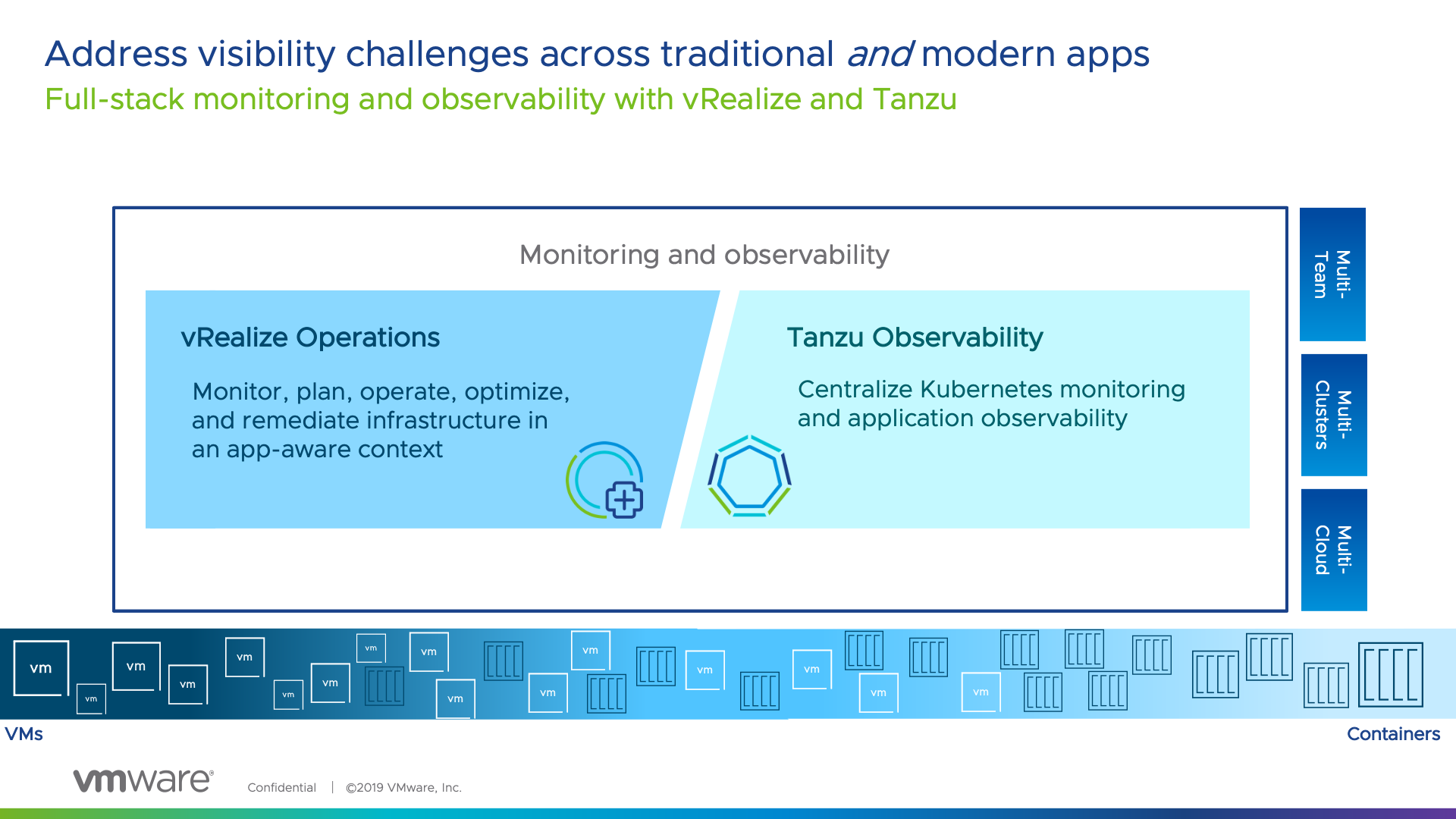

So now you are thinking, OK, but VMware has vRealize Operations that gives me a lot of data, so why is there a new product for this?

vRealize Operations and Tanzu Observability come together – delivering full stack monitoring and observability from both the infra-up and app-down perspective, equipping both teams in the org to meet common goals.

It is about the right tool for the right team and bringing together harmony between them. Which is why at VMware, the focus has been on covering the needs of team across the two products.

vRealize Operations is going to give you SLA metrics for your infrastructure and even application awareness. However Tanzu Observability brings more application focused features to allow you as a business, report on Application Experience of your end users/customers, at an SLA/SLO/KPI approach with extensibility to provide an Experience Level Agreement (XLA) type capability.

VMware Tanzu Observability by Wavefront delivers enterprise-grade observability and analytics at scale. Monitor everything from full-stack applications to cloud infrastructures with metrics, traces, event logs, and analytics.

High level features include:

To follow this blog, you can also easily get yourself access to Tanzu Observability.

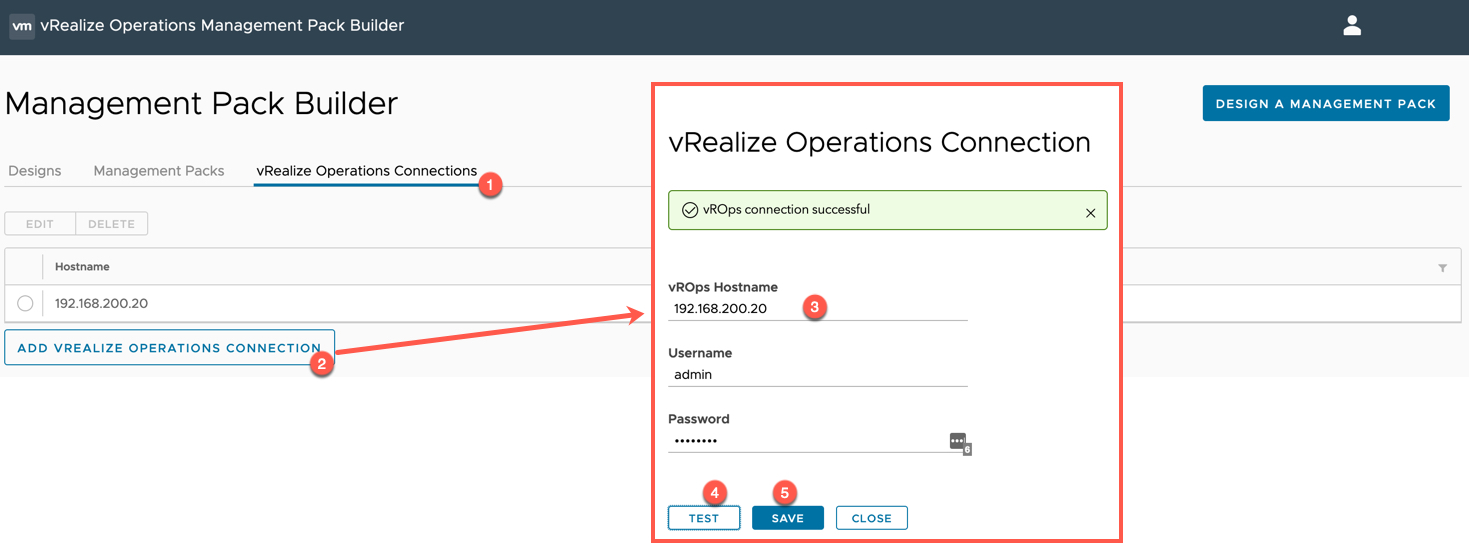

Configuring data ingestion into Tanzu Observability using the native integrations

Configuring the OpenShift (Kubernetes) Integration using Helm

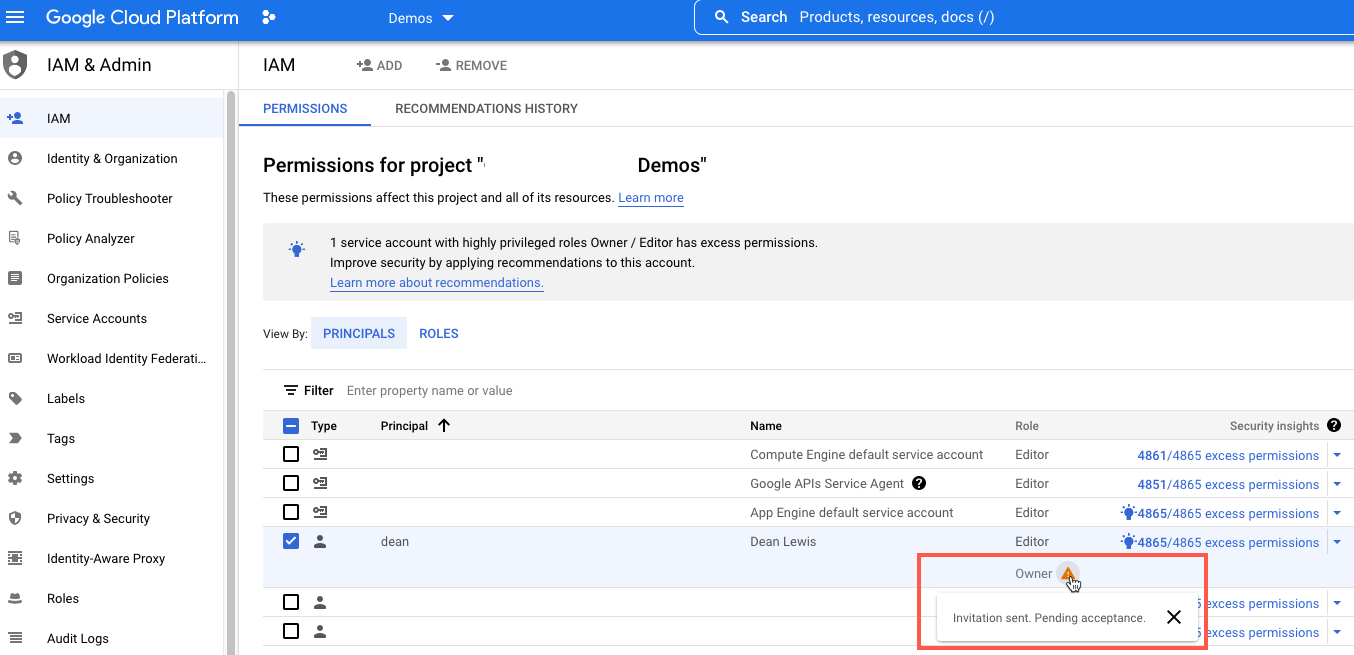

First, we need to create an API Key that we can use to connect our locally deployed wavefront services to the SaaS service to send data. Continue reading Tanzu Observability – First look at monitoring OpenShift & VMware Cloud on AWS →