This blog post came out of an internal query that I thought would be easy enough to answer. However, my experience with ELK environments is limited, so it was a good chance to dig in and learn something new.

In this blog post, I’m going to detail the configuration for pushing vRealize Operations alert notifications to the Elastic Stack (aka Elasticsearch, ELK) using the Notification Webhook feature.

Again, I am not an ELK expert, so there may be (read this as: probably are) better ways to configure this, especially when it comes to the date handling.

Configure an ingestion timestamp in ELK

One of the first issues I hit when testing all of this is that ELK doesn’t seem to like the date format that vROps alerts use. Once an index (a store of data records) is created and its fields have been parsed, the type assigned to a field cannot be changed. I went through various options to remedy this so that my logs could be searched by timestamp, but none of them seemed easily feasible. If anyone knows the best way to achieve this, let me know; see the end of this blog post for more details.

For those of you who do know Elasticsearch, vROps sends the date and time in the notification payload in the following format: "EEE LLL dd HH:mm:ss z uuuu"
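To make that pattern concrete, here is a minimal Python sketch (not part of my actual setup) that converts a string of that shape into ISO 8601, a format Elasticsearch date fields generally accept. The sample value and the function name `vrops_to_iso` are assumptions for illustration, and the sketch only handles a zone token of UTC or GMT.

```python
from datetime import datetime, timezone


def vrops_to_iso(value: str) -> str:
    """Convert e.g. 'Thu Mar 10 14:22:05 UTC 2022' to an ISO 8601 string.

    Assumption: the zone token is UTC or GMT; anything else is rejected
    rather than guessed at.
    """
    # Tokens follow the pattern: EEE LLL dd HH:mm:ss z uuuu
    _day_name, month, day, clock, zone, year = value.split()
    if zone not in ("UTC", "GMT"):
        raise ValueError(f"unsupported zone: {zone}")
    # %b expects English month abbreviations in the default C locale.
    parsed = datetime.strptime(f"{month} {day} {clock} {year}", "%b %d %H:%M:%S %Y")
    return parsed.replace(tzinfo=timezone.utc).isoformat()


# Hypothetical sample value, not taken from a real alert.
print(vrops_to_iso("Thu Mar 10 14:22:05 UTC 2022"))
```

A conversion like this would have to run somewhere between vROps and Elasticsearch, which is why I went with an ingestion timestamp instead.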

The best way I found around this is to have Elasticsearch add an ingestion timestamp to the data it receives, and to reference that rule in the settings of the created index.

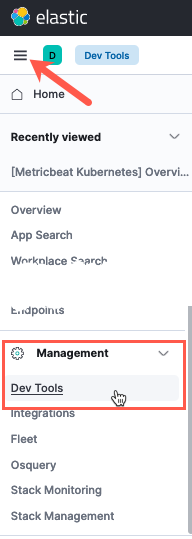

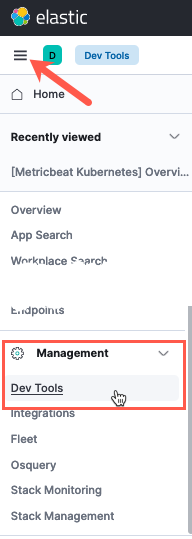
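As a rough sketch of the "add it to the index settings" step, the Python snippet below prints a Dev Tools console request that sets an index's default ingest pipeline through the `index.default_pipeline` setting. The index name `vrops-alerts` is hypothetical, the pipeline name `set-timestamp` is the one created later in this post, and whether this exactly matches what the screenshots show is an assumption.

```python
import json

INDEX_NAME = "vrops-alerts"      # hypothetical index name, adjust to your own
PIPELINE_NAME = "set-timestamp"  # pipeline name used later in this post


def default_pipeline_request(index: str, pipeline: str) -> str:
    """Return console text that sets the default ingest pipeline of an index."""
    body = {"index.default_pipeline": pipeline}
    return f"PUT {index}/_settings\n{json.dumps(body, indent=2)}"


# Print the request so it can be pasted into the Dev Tools console.
print(default_pipeline_request(INDEX_NAME, PIPELINE_NAME))
```

The pipeline has to exist before this setting is applied, so in practice this request would be run after the pipeline has been created.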

To create this ingestion rule, open your Elastic UI, click the three-line menu icon to open the navigation options, then click Dev Tools under Management.

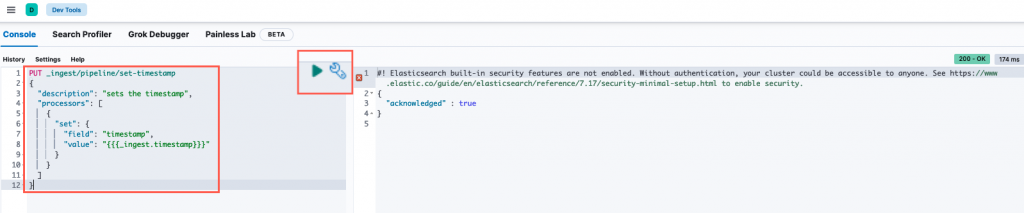

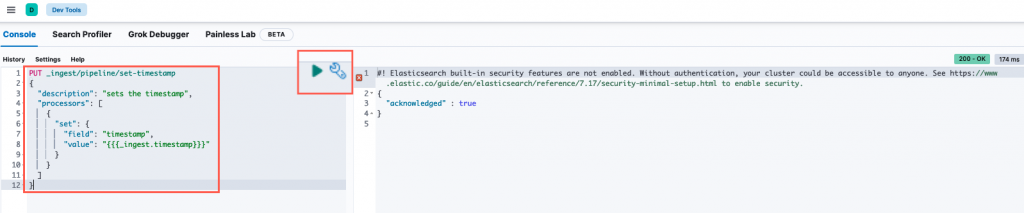

This gives you in-browser console access for sending configuration to the Elastic environment. When reading the documentation, you’ll notice that configuration for Elastic is very often provided as API commands and payloads. This seems to be the preferred way to configure the system, as the UI lacks the ability to make many of these changes.

Paste the request shown below into the console. It creates an ingest pipeline that processes the data as it comes into the system.

When the syntax is validated, a small green arrow appears; click it to apply the configuration. The right-hand console pane shows the output from running the API call and payload.

    PUT _ingest/pipeline/set-timestamp
    {
      "description": "sets the timestamp",
      "processors": [
        {
          "set": {
            "field": "timestamp",
            "value": "{{{_ingest.timestamp}}}"
          }
        }
      ]
    }

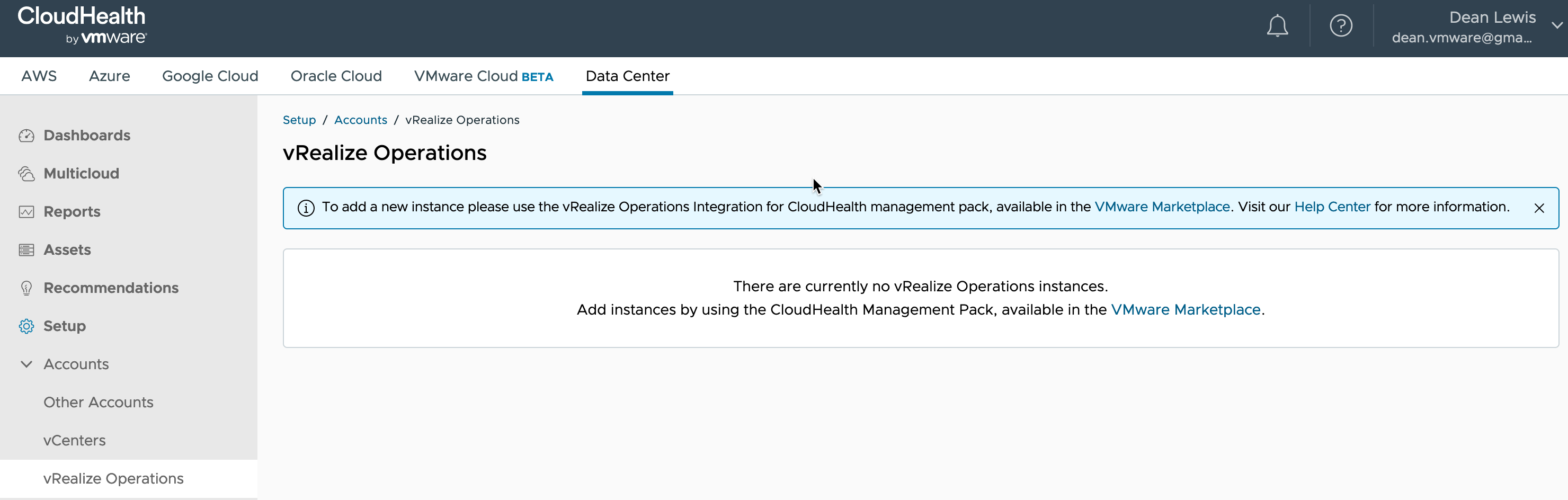
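If you want to check the pipeline without going through the console, the ingest simulate endpoint can run it against a sample document. The Python sketch below only builds that request; the URL `http://localhost:9200`, the lack of authentication, and the `alertName` sample field are all assumptions for illustration and not taken from the vROps payload.

```python
import json
import urllib.request

ES_URL = "http://localhost:9200"  # assumption: unauthenticated local node


def simulate_request(pipeline: str, sample_doc: dict) -> urllib.request.Request:
    """Build a POST to the ingest simulate endpoint for one sample document."""
    body = {"docs": [{"_source": sample_doc}]}
    return urllib.request.Request(
        url=f"{ES_URL}/_ingest/pipeline/{pipeline}/_simulate",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# "alertName" is a made-up field used only to have something in the document.
request = simulate_request("set-timestamp", {"alertName": "example alert"})
print(request.get_method(), request.full_url)

# To actually send it (requires a reachable cluster):
# with urllib.request.urlopen(request) as response:
#     print(json.loads(response.read()))
```

If the pipeline works, the simulated document in the response should contain a `timestamp` field alongside the sample field.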

Create the outbound webhook in vRealize Operations
