In this post, I will cover how to deploy Prometheus and the Telegraf exporter, and how to configure them so that the data can be collected by vRealize Operations.

Overview

vRealize Operations delivers intelligent operations management with application-to-storage visibility across physical, virtual, and cloud infrastructures. Using policy-based automation, operations teams can automate key processes and improve IT efficiency.

Prometheus is an open-source systems monitoring and alerting toolkit. It collects and stores its metrics as time series data, i.e. each metric value is stored with the timestamp at which it was recorded, alongside optional key-value pairs called labels.
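To make that data model concrete, here is a minimal sketch that renders a single sample as one line of the Prometheus text exposition format: metric name, optional labels, value, and an optional timestamp in milliseconds. The `Sample` class, the metric name and the labels are made up for illustration; they are not taken from the Telegraf exporter or from any Prometheus client library.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class Sample:
    """One point in a time series (illustrative model, not a Prometheus API)."""
    name: str
    value: float
    labels: Dict[str, str] = field(default_factory=dict)
    timestamp_ms: Optional[int] = None  # milliseconds since the Unix epoch

    def to_exposition(self) -> str:
        """Render the sample as one line of the text exposition format.

        Note: label values are not escaped here, so this sketch only
        handles values without quotes, backslashes or newlines.
        """
        line = self.name
        if self.labels:
            pairs = ",".join(f'{k}="{v}"' for k, v in sorted(self.labels.items()))
            line += "{" + pairs + "}"
        line += f" {self.value}"
        if self.timestamp_ms is not None:
            line += f" {self.timestamp_ms}"
        return line


# Hypothetical metric and labels, for illustration only.
sample = Sample(
    name="example_pod_cpu_usage",
    value=0.25,
    labels={"namespace": "default", "pod": "web-1"},
    timestamp_ms=1600000000000,
)
print(sample.to_exposition())
# example_pod_cpu_usage{namespace="default",pod="web-1"} 0.25 1600000000000
```

The same metric name with a different label set is a different time series, which is how one exporter can describe many pods or nodes.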

There are several libraries and servers which help in exporting existing metrics from third-party systems as Prometheus metrics. This is useful for cases where it is not feasible to instrument a given system with Prometheus metrics directly (for example, HAProxy or Linux system stats).

Telegraf is a plugin-driven server agent written by the folks over at InfluxData for collecting & reporting metrics. By using the Telegraf exporter, the following Kubernetes metrics are supported:

Why do it this way with three products?

You can actually achieve this with two products: vRealize Operations and a metric exporter in the Kubernetes cluster that the data can be collected from (vROps and cAdvisor, for example). By default, Kubernetes offers little in the way of metrics data until you install an appropriate package to provide it.

Many customers have now decided upon using Prometheus for their metrics needs in their Modern Applications world due to the flexibility it offers.

Therefore, this integration provides a way for vRealize Operations to collect the data through an existing Prometheus deployment and enrich it further by providing a context-aware relationship view between your virtualisation platform and the Kubernetes platform which runs on top of it.

The vRealize Operations Management Pack for Kubernetes supports a number of Prometheus exporters that can provide the relevant data. In this blog post we will focus on Telegraf.

You can view sample deployments here for all the supported types. This blog will show you an end-to-end setup and deployment.

Prerequisites

- Administrative access to a vRealize Operations environment

- Install the “vRealize Operations Management Pack for Kubernetes”

- Access to a Kubernetes cluster that you want to monitor

- Install Helm, if you have not already set it up, on the machine which has access to your Kubernetes cluster

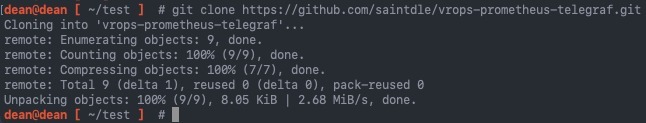

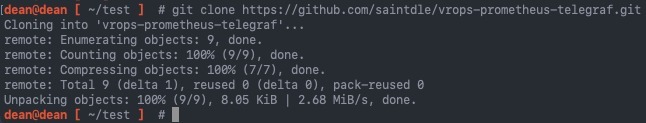

- Clone this GitHub repo to your machine to make life easier

git clone https://github.com/saintdle/vrops-prometheus-telegraf.git

Information Gathering

Note down the following information:

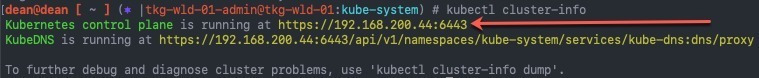

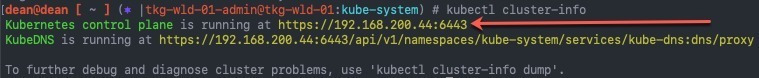

- Cluster API Server information

kubectl cluster-info

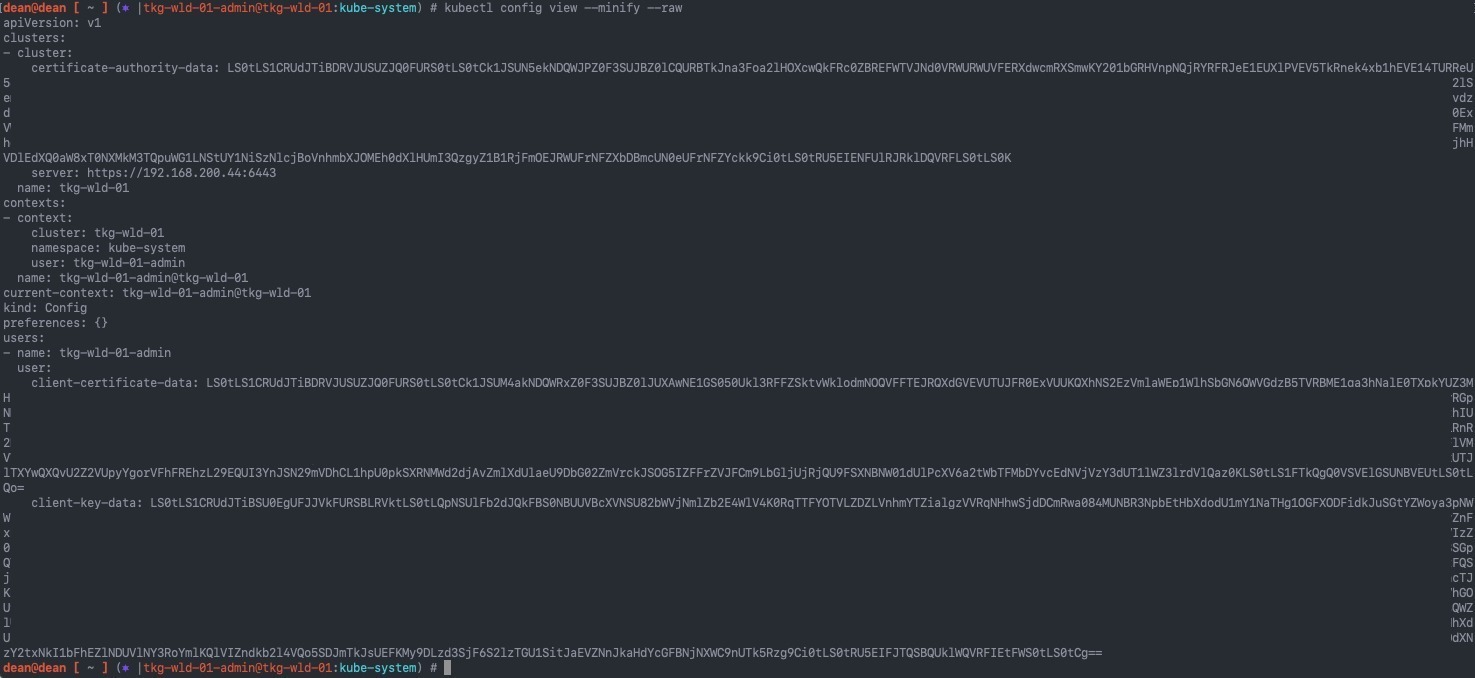

- Access details for the Kubernetes cluster, using one of the following authentication types:

  - Basic Authentication – Uses HTTP basic authentication to authenticate API requests through authentication plugins.

  - Client Certification Authentication – Uses client certificates to authenticate API requests through authentication plugins.

  - Token Authentication – Uses bearer tokens to authenticate API requests through authentication plugins.

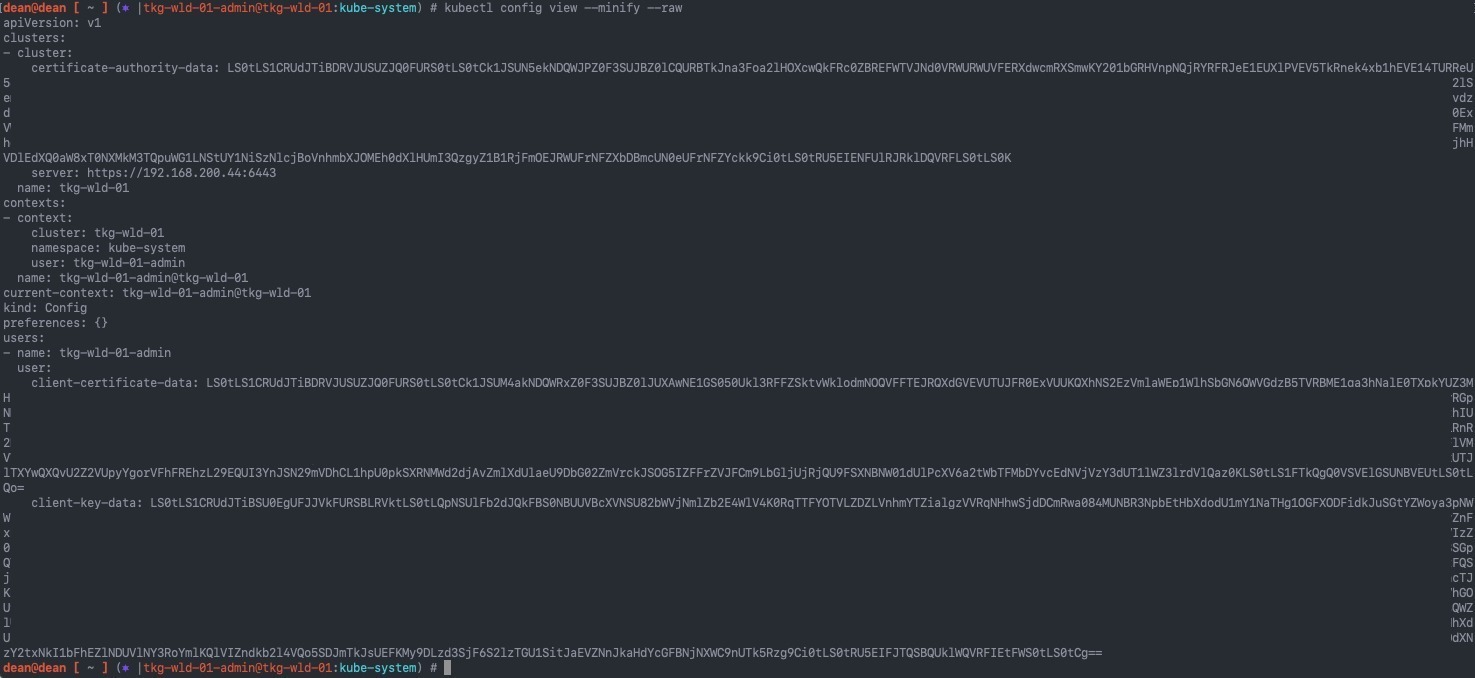

In this example I will use “Client Certification Authentication” with my currently authenticated user. The details can be retrieved by running:

kubectl config view --minify --raw

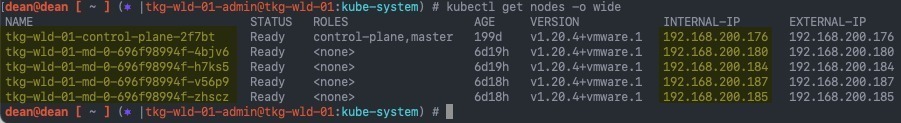

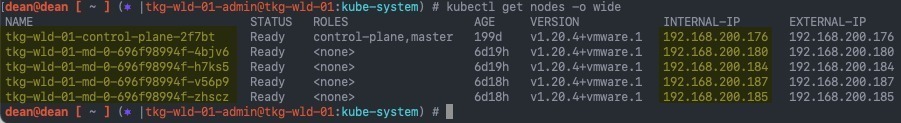
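If you would rather not copy the values out of the terminal by hand, the following is a minimal sketch that reads the same kubeconfig as JSON (by adding `-o json` to the command above) and base64-decodes the embedded certificate data. It assumes the credentials are embedded as `*-data` fields rather than referenced as file paths, and that the first cluster and user entries are the ones you want, which is what `--minify` should give you. The function names are my own, and whether the adapter's credential form expects the decoded PEM text is an assumption you should check against the management pack documentation.

```python
import base64
import json
import subprocess
from typing import Dict, Optional


def load_kubeconfig_from_kubectl() -> dict:
    """Run kubectl for the current context and parse its JSON output."""
    result = subprocess.run(
        ["kubectl", "config", "view", "--minify", "--raw", "-o", "json"],
        check=True,
        capture_output=True,
        text=True,
    )
    return json.loads(result.stdout)


def extract_client_cert_details(kubeconfig: dict) -> Dict[str, Optional[str]]:
    """Return the API server URL and decoded credentials from a minified kubeconfig.

    Fields missing from the kubeconfig (for example when it references
    certificate files instead of embedding them) are returned as None.
    """
    cluster = kubeconfig["clusters"][0]["cluster"]
    user = kubeconfig["users"][0]["user"]

    def decode(b64_text: Optional[str]) -> Optional[str]:
        if b64_text is None:
            return None
        return base64.b64decode(b64_text).decode("utf-8")

    return {
        "server": cluster.get("server"),
        "ca_cert": decode(cluster.get("certificate-authority-data")),
        "client_cert": decode(user.get("client-certificate-data")),
        "client_key": decode(user.get("client-key-data")),
    }


# Example usage (needs kubectl and a working context, so it is left commented out):
# details = extract_client_cert_details(load_kubeconfig_from_kubectl())
# print(details["server"])
```

Treat the decoded client key like a password: avoid printing it to shared terminals or writing it to world-readable files.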

- Get your node names and IP addresses

kubectl get nodes -o wide
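To capture the node names and addresses in a form you can paste elsewhere, here is a small sketch that works on the JSON form of the same command (`kubectl get nodes -o json`). The helper name `node_addresses` is hypothetical, and picking the `InternalIP` address type by default is my assumption; use `ExternalIP` or `Hostname` if that is what your environment needs.

```python
import json
from typing import Dict, Optional


def node_addresses(node_list: dict, address_type: str = "InternalIP") -> Dict[str, Optional[str]]:
    """Map each node name to its first address of the requested type.

    `node_list` is the parsed output of `kubectl get nodes -o json`.
    Nodes without a matching address are mapped to None.
    """
    result: Dict[str, Optional[str]] = {}
    for item in node_list.get("items", []):
        name = item["metadata"]["name"]
        addresses = item.get("status", {}).get("addresses", [])
        result[name] = next(
            (a["address"] for a in addresses if a.get("type") == address_type),
            None,
        )
    return result


# Example usage, assuming the output was saved first with:
#   kubectl get nodes -o json > nodes.json
# with open("nodes.json") as f:
#     for name, ip in node_addresses(json.load(f)).items():
#         print(name, ip)
```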

Install the Telegraf Kubernetes Plugin
