I was honoured to be a guest on the “Ask an OpenShift Admin” webinar recently, where I had the chance to talk about OpenShift on VMware, always a hot topic, and how we co-innovate and work together on solutions.

You can watch the full session below. Keep reading for the content I didn’t get to cover, which I’ve produced as a separate recording.

Ask an OpenShift Admin (Ep 54): OpenShift on VMware and the vSphere Kubernetes Drivers Operator

However, I had a number of topics and demos planned that we never got time to visit. So here is the full content I had prepared.

Some of the areas we covered in the webinar and my additional session were:

- Answering questions live from the viewers (anything on the table)

- OpenShift together with VMware

- Common issues and best practices for deploying OpenShift on VMware vSphere

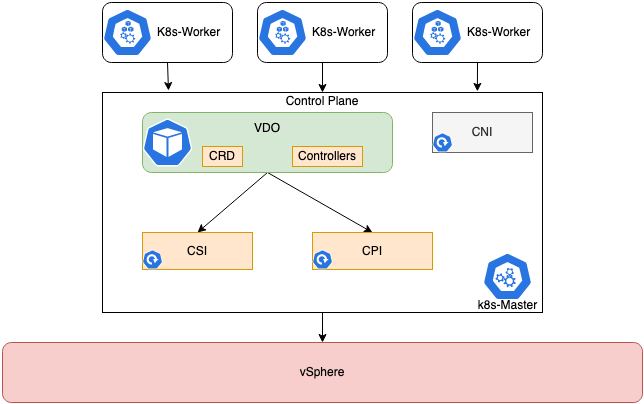

- Consuming your vSphere Storage in OpenShift

- Integrating with the VMware Network stack

- Infrastructure Up Monitoring

OpenShift on VMware – Integrating with vSphere Storage, Networking and Monitoring

Resources

- Compute

  - Red Hat and VMware Announce VMware Reference Architecture for OpenShift

  - VMware Validated Design – About Architecture and Design for a Red Hat OpenShift Workload Domain

  - Running Red Hat OpenShift Container Platform on VMware Cloud Foundation

  - Deployment on VMware Cloud on AWS is now supported as well

  - vMotion Support – vCenter requirements – Using OpenShift Container Platform with vMotion

  - How to specify your vSphere virtual machine resources when deploying Red Hat OpenShift

  - How to Scale and Edit your cluster deployments

- Storage

- Networking

  - VMware NSX Container plugin integration with OpenShift 4

  - VMworld CODE 2746: Networking and Security of Modern Apps with NSX-T (Antrea) and Openshift

  - Docs – NSX-T Container Plug-in for OpenShift – Installation and Administration Guide

  - Docs – Antrea – Try it out on OpenShift

- Monitoring

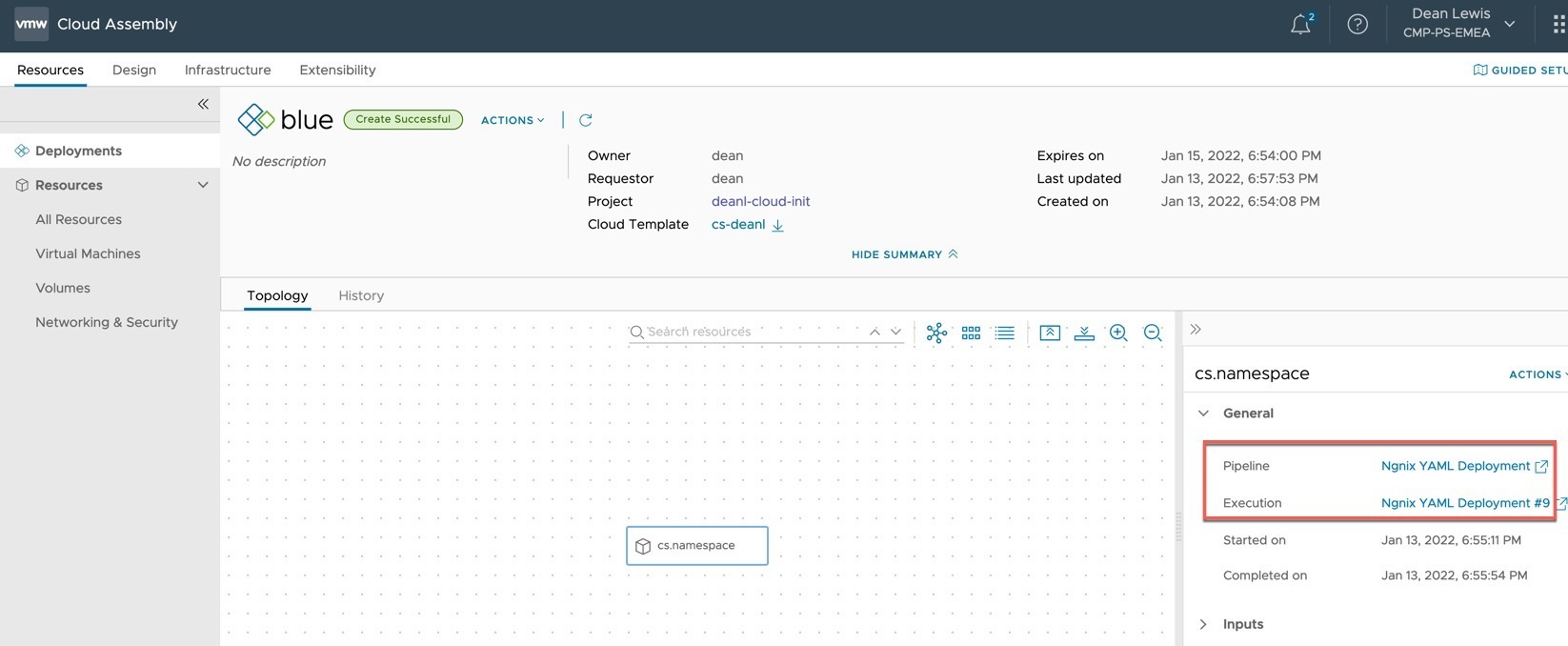

- Automation

- Other

Regards