Now that we understand how to deploy a Tanzu Kubernetes Cluster using Tanzu Mission Control, let’s look at the next lifecycle step: upgrading the Kubernetes version of the cluster.

Below are the other blog posts in the series.

Tanzu Mission Control

- Getting Started Tanzu Mission Control
- Cluster Inspections
- Workspaces and Policies
- Data Protection
- Deploying TKG clusters to AWS
- Upgrading a provisioned cluster
- Delete a provisioned cluster
- TKG Management support and provisioning new clusters
- TMC REST API - Postman Collection
- Using custom policies to ensure Kasten protects a deployed application

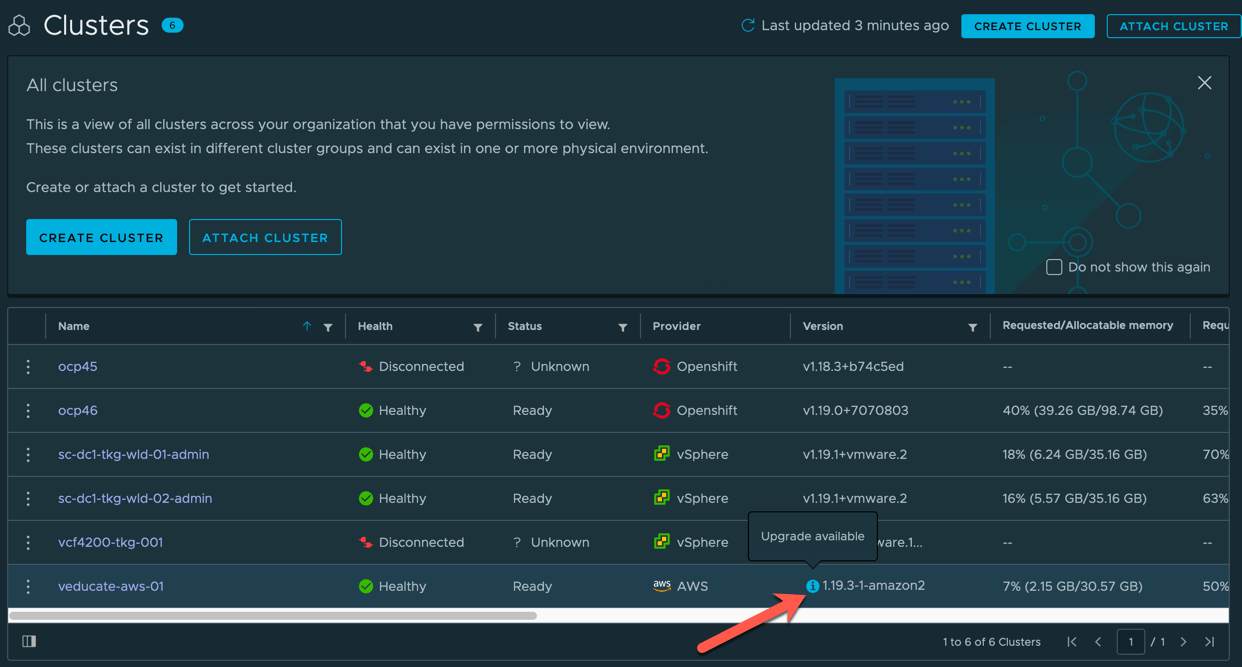

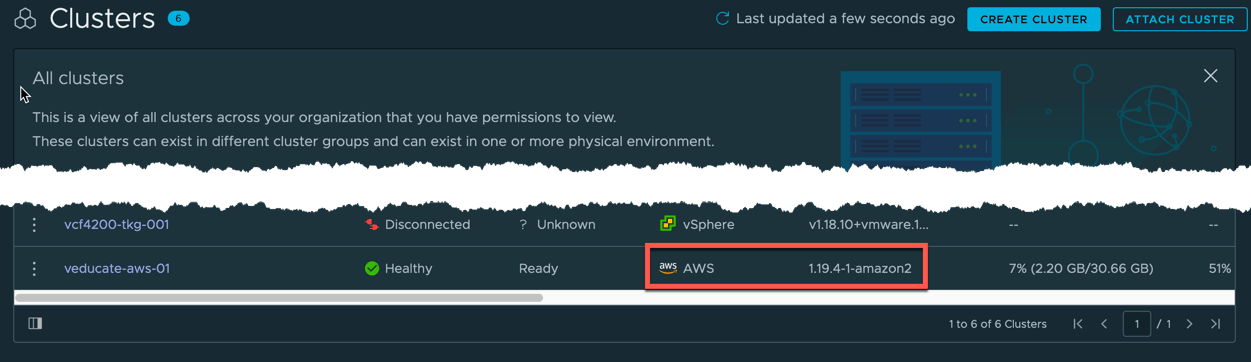

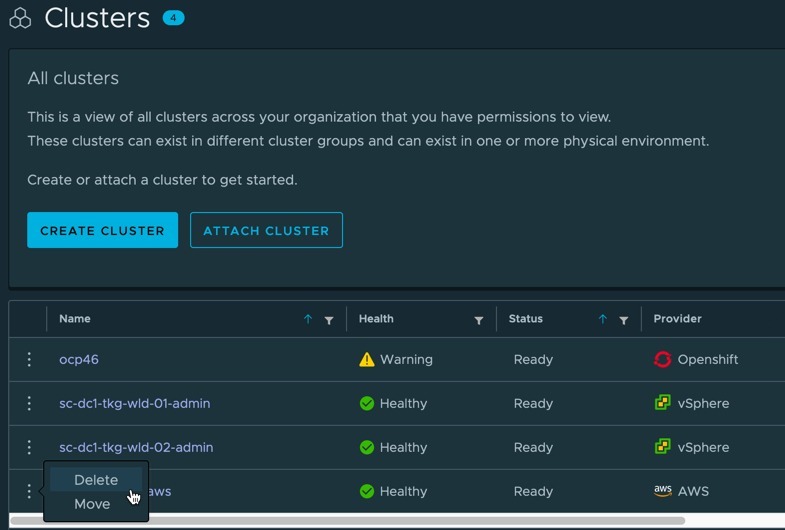

When a cluster that was provisioned by TMC (and is therefore managed by TMC) has an upgrade available, you will see an “i” icon next to its version in the clusters view of the UI. Hovering over the icon tells you that an upgrade is ready.

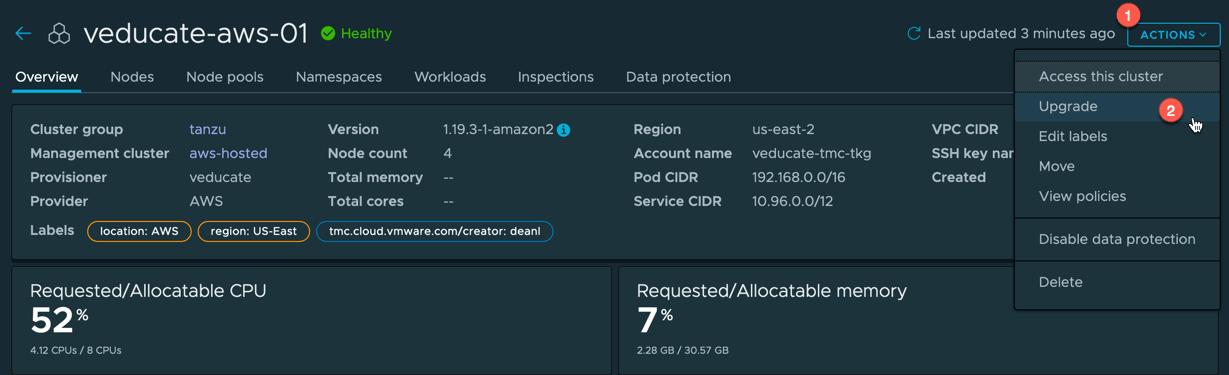
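To make the idea behind that “i” icon a bit more concrete, here is a minimal sketch of how you could work out which offered versions count as an upgrade for a cluster. This is not how TMC implements it; the function names and the version strings are hypothetical, and I am assuming each version string starts with a `major.minor.patch` Kubernetes version, optionally prefixed with `v`.

```python
import re
from typing import List, Tuple


def parse_k8s_version(version: str) -> Tuple[int, int, int]:
    """Extract (major, minor, patch) from the start of a version string.

    Assumes the string begins with 'major.minor.patch', optionally
    prefixed with 'v'. Any suffix (build or image details) is ignored.
    """
    match = re.match(r"v?(\d+)\.(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognised version string: {version!r}")
    major, minor, patch = match.groups()
    return int(major), int(minor), int(patch)


def available_upgrades(current: str, offered: List[str]) -> List[str]:
    """Return the offered versions newer than the current one, oldest first."""
    current_key = parse_k8s_version(current)
    newer = [v for v in offered if parse_k8s_version(v) > current_key]
    return sorted(newer, key=parse_k8s_version)


if __name__ == "__main__":
    # Hypothetical version strings, for illustration only.
    offered = ["1.18.16-example", "1.19.4-example", "1.20.5-example"]
    upgrades = available_upgrades("1.19.4-example", offered)
    if upgrades:
        print("Upgrade ready:", ", ".join(upgrades))
    else:
        print("Cluster is up to date")
```

Comparing the parsed tuples rather than the raw strings matters here: as plain text, `1.9.0` would sort after `1.10.0`, which is the wrong way round.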

Click the cluster name to take you into the cluster object to see the full details,

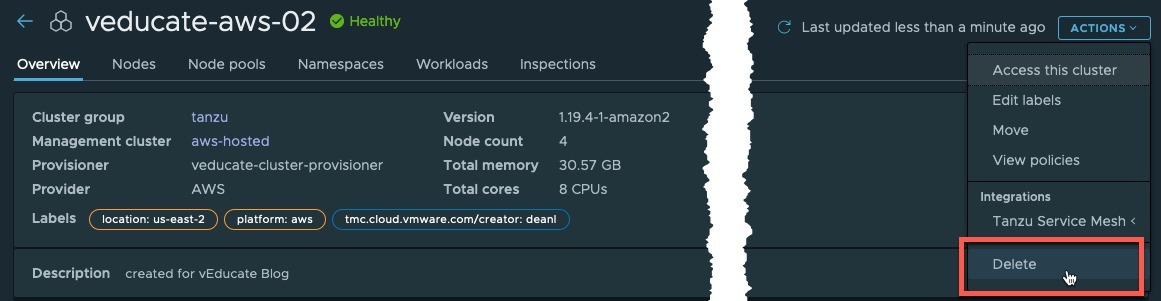

- click the actions button

- and select upgrade.

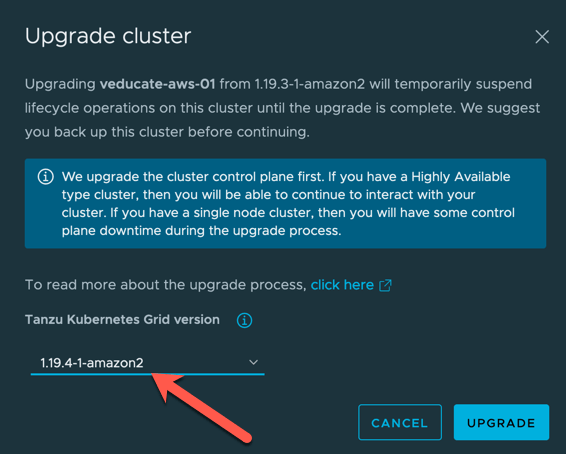

The Upgrade Cluster dialogue will appear. Select the version you want to upgrade to and click Upgrade.

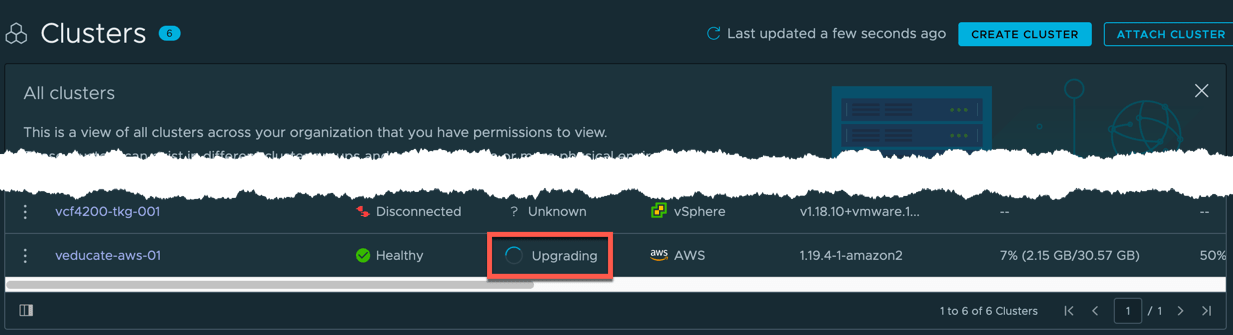

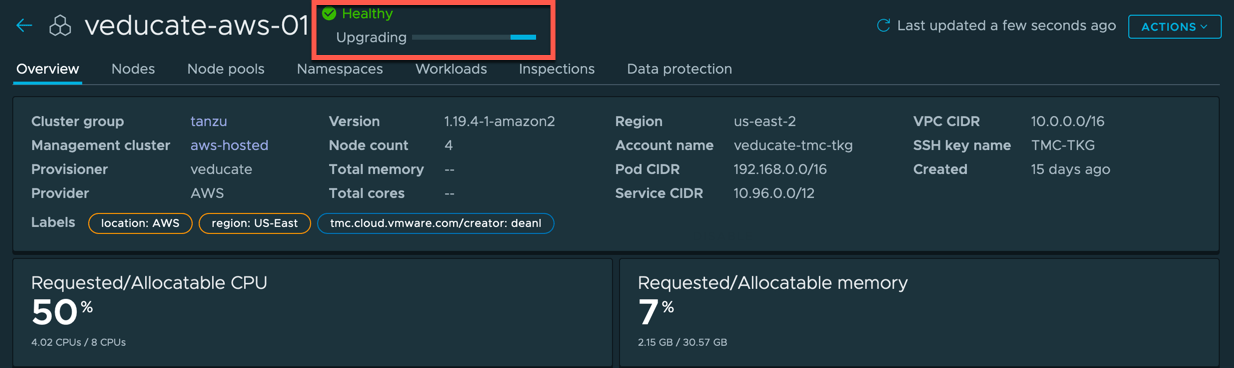

In both the cluster list and the cluster detail view, the status will change to upgrading.

Once the upgrade has completed, the cluster will change back to ready and show the updated version.
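If you would rather not watch the UI, the same status flow (ready, then upgrading, then ready again on the new version) can be followed with a small polling loop. The sketch below is only an illustration of that pattern: `get_state` is a hypothetical callable that you would have to implement yourself (for example on top of the TMC CLI or API), and the version strings are made up. It waits for both the ready status and the target version, because the cluster may still report ready on the old version for a moment after you start the upgrade.

```python
import time
from typing import Callable, Tuple


def wait_for_upgrade(
    get_state: Callable[[], Tuple[str, str]],
    target_version: str,
    poll_seconds: float = 30.0,
    timeout_seconds: float = 3600.0,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Poll until the cluster is ready on the target version.

    get_state is a hypothetical callable returning (status, version).
    Returns the number of polls made, or raises TimeoutError.
    """
    waited = 0.0
    polls = 0
    while True:
        status, version = get_state()
        polls += 1
        if status.lower() == "ready" and version == target_version:
            return polls
        if waited >= timeout_seconds:
            raise TimeoutError(
                f"cluster still {status!r} on {version!r} after {waited:.0f}s"
            )
        sleep(poll_seconds)
        waited += poll_seconds


if __name__ == "__main__":
    # Simulated status sequence, so the sketch runs without a real cluster.
    states = iter([
        ("ready", "1.19.4"),      # upgrade requested, not yet started
        ("upgrading", "1.19.4"),
        ("upgrading", "1.19.4"),
        ("ready", "1.20.5"),      # upgrade completed
    ])
    polls = wait_for_upgrade(lambda: next(states), "1.20.5", sleep=lambda s: None)
    print(f"Upgrade finished after {polls} polls")
```

The `sleep` parameter is injectable purely so the loop can be exercised without real waiting; in normal use you would leave it at the default.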

Wrap-up and Resources

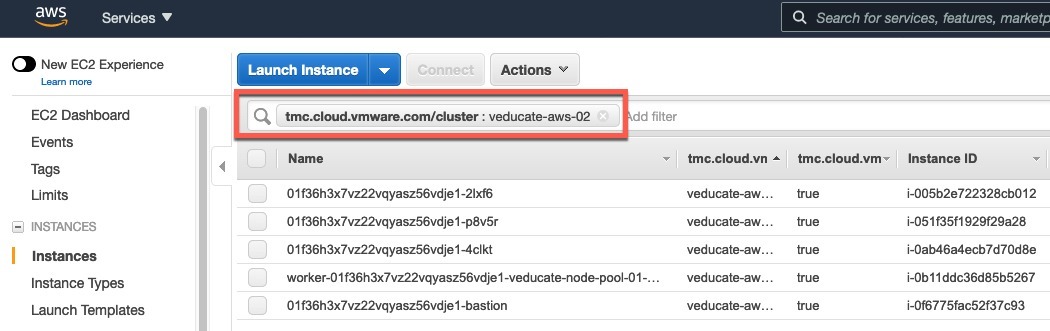

In this quick blog post, we used Tanzu Mission Control to upgrade a provisioned Tanzu Kubernetes Grid cluster which was running in AWS. All the steps provided in this blog post can be replicated using the TMC CLI as well.

As a reminder, to take real advantage of TMC I recommend you read the following posts:

Tanzu Mission Control

- Getting Started Tanzu Mission Control
- Cluster Inspections
- Workspaces and Policies
- Data Protection
- Deploying TKG clusters to AWS
- Upgrading a provisioned cluster
- Delete a provisioned cluster
- TKG Management support and provisioning new clusters
- TMC REST API - Postman Collection
- Using custom policies to ensure Kasten protects a deployed application

You can get hands-on experience with Tanzu Mission Control yourself on the VMware Hands-on Labs website, which is always free!

Regards