This post continues from the First Look blog post, where we created a distributed application across different public cloud Kubernetes deployments and connected them via Tanzu Service Mesh. We will now move on to some of the more advanced capabilities of Tanzu Service Mesh.

In this blog post, we’ll look at how to set up monitoring of our application components and their performance against a Service Level Objective, and then how Tanzu Service Mesh can act on violations of the SLO using its auto-scaling capabilities.

What is a Service Level Objective and how do we monitor our app?

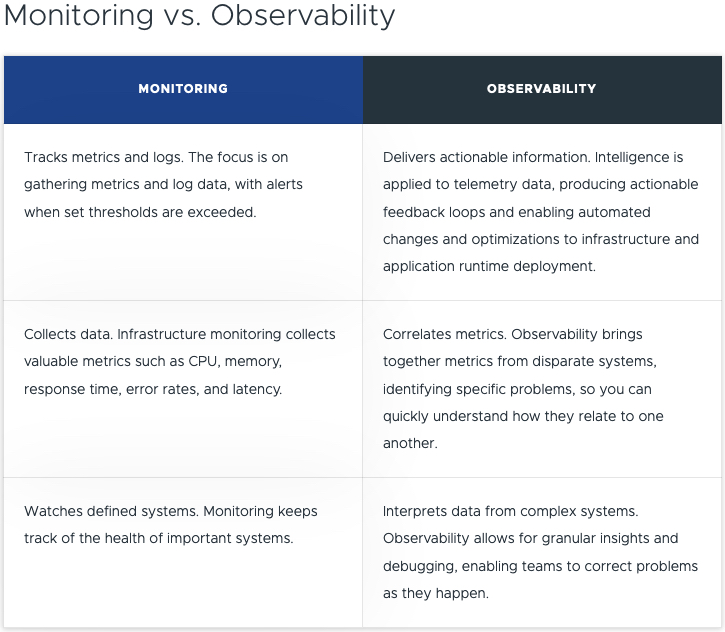

Service level objectives (SLOs) provide a structured way to describe, measure, and monitor the performance, quality, and reliability of micro-service apps.

An SLO describes the high-level objective for acceptable operation and health of one or more services over a length of time (for example, a week or a month).

- For example, Service X should be healthy 99.1% of the time.

In the provided example, Service X can be “unhealthy” 0.9% of the time, which is known as the “Error Budget”. This budget allows for downtime caused by acceptable errors (keeping an app up 100% of the time is hard and expensive to achieve) or by planned routine maintenance.
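To make the error budget concrete, here is a minimal Python sketch that converts an SLO target into minutes of allowed “unhealthy” time. The function name `error_budget_minutes` and the 30-day window are my own assumptions for illustration; this is not how Tanzu Service Mesh calculates anything internally.

```python
def error_budget_minutes(target_percent: float, window_days: int) -> float:
    """Return the minutes a service may be unhealthy within the window.

    Hypothetical helper for illustration only, not a Tanzu Service Mesh API.
    """
    if not 0 <= target_percent <= 100:
        raise ValueError("target_percent must be between 0 and 100")
    window_minutes = window_days * 24 * 60
    unhealthy_fraction = (100.0 - target_percent) / 100.0
    return window_minutes * unhealthy_fraction


# A 99.1% target over an assumed 30-day window.
budget = error_budget_minutes(99.1, 30)
print(f"Error budget: {budget:.1f} minutes")
```

For a 99.1% target over 30 days, this works out to roughly 389 minutes, or about six and a half hours of acceptable unhealthy time.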

The key is specifying which metrics or characteristics, and which associated thresholds, are used to define the health of the micro-service/application.

- For example:

  - Error rate is less than 2%

  - CPU average is less than 80%

This specification makes up the Service Level Indicators (SLIs), one or more of which can be used to define an overall SLO.
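The relationship between SLIs and an SLO can be sketched in a few lines of Python. This is a simplified model under my own assumptions: the `SLI` class, the metric names, and the rule that a sample is healthy only when every SLI is under its threshold are illustrative, and are not taken from the Tanzu Service Mesh implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SLI:
    """One indicator: the metric is healthy while it stays below max_value."""
    metric: str        # hypothetical metric name
    max_value: float   # threshold; observed values must be strictly below it


def is_healthy(sample: dict, slis: list) -> bool:
    """A sample is healthy only if every SLI is under its threshold."""
    return all(sample[sli.metric] < sli.max_value for sli in slis)


def slo_met(samples: list, slis: list, target_percent: float) -> bool:
    """The SLO is met if enough samples in the window were healthy."""
    if not samples:
        raise ValueError("at least one sample is required")
    healthy = sum(is_healthy(sample, slis) for sample in samples)
    return 100.0 * healthy / len(samples) >= target_percent


# The two example thresholds from above: error rate < 2%, CPU average < 80%.
slis = [SLI("error_rate_percent", 2.0), SLI("cpu_average_percent", 80.0)]

# Made-up measurements; the third one breaches the error-rate SLI.
samples = [
    {"error_rate_percent": 0.5, "cpu_average_percent": 40.0},
    {"error_rate_percent": 1.0, "cpu_average_percent": 65.0},
    {"error_rate_percent": 3.0, "cpu_average_percent": 50.0},
    {"error_rate_percent": 0.2, "cpu_average_percent": 70.0},
]

print(slo_met(samples, slis, target_percent=99.1))
```

With three of the four made-up samples healthy (75%), a 99.1% target is not met. In a real deployment the samples would come from the mesh's own telemetry rather than a hard-coded list.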

Tanzu Service Mesh SLO options

Before we configure, let’s quickly discuss what is available to be configured.

Tanzu Service Mesh (TSM) offers two SLO configurations:

- Monitored SLOs

  - These provide alerts and indicators on the performance of your services, and show whether they meet your target SLO conditions based on the SLIs configured for each specified service.

  - This kind of SLO can be configured for services that are part of a Global Namespace (GNS-scoped SLOs) or services that are part of a direct cluster (org-scoped SLOs).

- Actionable SLOs

  - These extend the capabilities of Monitored SLOs by adding actions such as auto-scaling for services based on the SLIs.

  - This kind of SLO can only be configured for services inside a Global Namespace (GNS-scoped SLO).

  - Each actionable SLO can have only one service, and a service can have only one actionable SLO.

The official documentation also takes you through some use-cases for SLOs. Alternatively, you can continue to follow this blog post for an example.

Quick overview of the demo environment

- Tanzu Service Mesh (of course)

  - Global Namespace configured for default namespace in clusters with domain “app.sample.com”

- Three Kubernetes Clusters with a scaled-out application deployed

  - AWS EKS Cluster

    - Running web front end (shopping) and cart instances

  - Azure AKS Cluster

    - Running Catalog Service that holds all the images for the Web front end

  - GCP GKE

    - Running full copy of the application


In this environment, I’m going to configure an SLO focused on the front-end service (Shopping), which will scale up the number of pods when the SLIs are breached.

Configure an SLO Policy and Autoscaler

- Under the Policies header, expand

- Select “SLOs”

- Select either of the New Policy options