What is the vSphere Kubernetes Driver Operator (VDO)?

This Kubernetes Operator has been designed and created as part of the VMware and IBM Joint Innovation Labs program. We also talked about this at VMworld 2021 in a joint session with IBM and Red Hat. With the aim of simplifying the deployment and lifecycle of VMware Storage and Networking Kubernetes driver plugins on any Kubernetes platform, including Red Hat OpenShift.

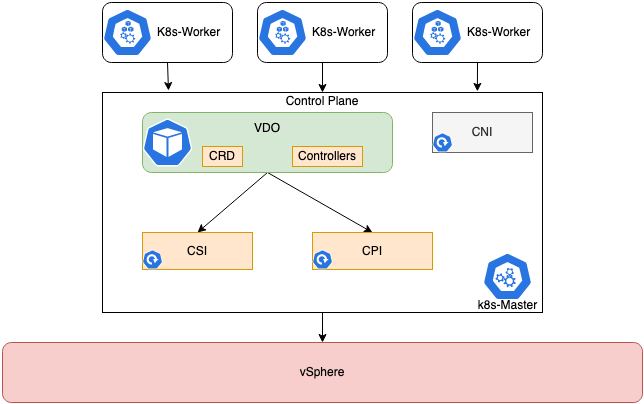

This vSphere Kubernetes Driver Operator (VDO) exposes custom resources to configure the CSI and CNS drivers, and using Go Lang based CLI tool, introduces validation and error checking as well. Making it simple for the Kubernetes Operator to deploy and configure.

The Kubernetes Operator currently covers the following existing CPI, CSI and CNI drivers, which are separately maintained projects found on GitHub.

This operator will remain CNI agnostic, therefore CNI management will not be included, and for example Antrea already has an operator.

Below is the high level architecture, you can read a more detailed deep dive here.

Installation Methods

You have two main installation methods, which will also affect the pre-requisites below.

If using Red Hat OpenShift, you can install the Operator via Operator Hub as this is a certified Red Hat Operator. You can also configure the CPI and CSI driver installations via the UI as well.

- Supported for OpenShift 4.9 currently.

Alternatively, you can install the manual way and use the vdoctl cli tool, this method would also be your route if using a Vanilla Kubernetes installation.

This blog post will cover the UI method using Operator Hub.