In this blog post I’ll take a deep dive into the end-to-end activation, deployment, and consumption of the managed Tanzu Services (Tanzu Kubernetes Grid Service, or TKGS) within a VMware Cloud on AWS SDDC. I’ll deploy a Tanzu cluster inside a vSphere Namespace, then deploy my trusty Pac-Man application and make it publicly accessible.

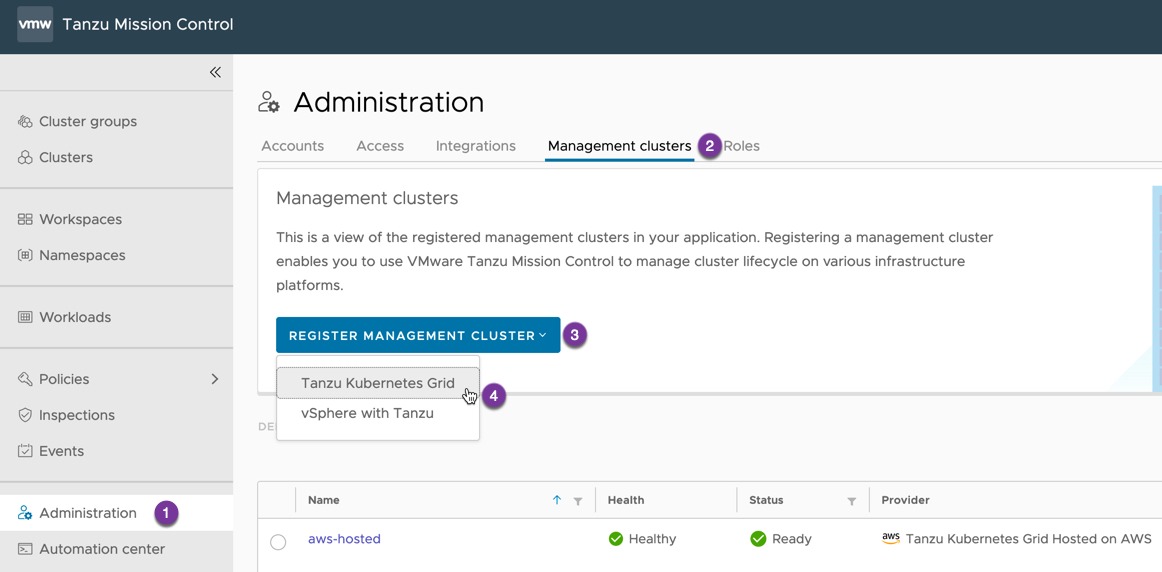

Prior to this capability, you would need to deploy Tanzu Kubernetes Grid to VMC as a Management Cluster, and then additional Tanzu clusters for your workloads. (See Terminology explanations here). This was a fully supported option; however, it did not provide all the integrated features you get by using TKGS as part of your on-premises vSphere environment.

What is Tanzu Services on VMC?

Tanzu Kubernetes Grid Service is a managed service built into the VMware Cloud on AWS vSphere environment.

This feature brings the integrated Tanzu Kubernetes Grid Service into vSphere itself. By coupling the platforms together, you can easily deploy new Tanzu clusters, use vCenter for administration and authentication, and apply governance and policies from vCenter.

Note: VMware Cloud on AWS does not enable activation of Tanzu Kubernetes Grid by default. Contact your account team for more information.

Note 2: In VMware Cloud on AWS, the Tanzu workload control plane can be activated only through the VMC Console.

- Official Documentation

But wait, couldn’t I already install a Tanzu Kubernetes Grid Cluster onto VMC anyway?

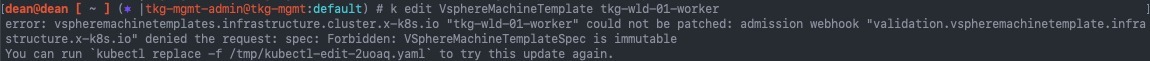

Tanzu Kubernetes Grid is a multi-cloud solution that deploys and manages Kubernetes clusters on your selected cloud provider. Prior to the vSphere-integrated Tanzu offering for VMC that we are discussing today, you would deploy the general TKG option to your SDDC vCenter.

What differences should I know about this Tanzu Services offering in VMC versus the other Tanzu Kubernetes offering?

- When activated, Tanzu Kubernetes Grid for VMware Cloud on AWS is pre-provisioned with a VMC-specific content library that you cannot modify.

- Tanzu Kubernetes Grid for VMware Cloud on AWS does not support vSphere Pods.

- Creation of Tanzu Supervisor Namespace templates is not supported by VMware Cloud on AWS.

- vSphere namespaces for Kubernetes releases are configured automatically during Tanzu Kubernetes Grid activation.

Activating Tanzu Kubernetes Grid Service in a VMC SDDC

Reminder: Tanzu Services activation is not enabled by default. Contact your account team for more information.

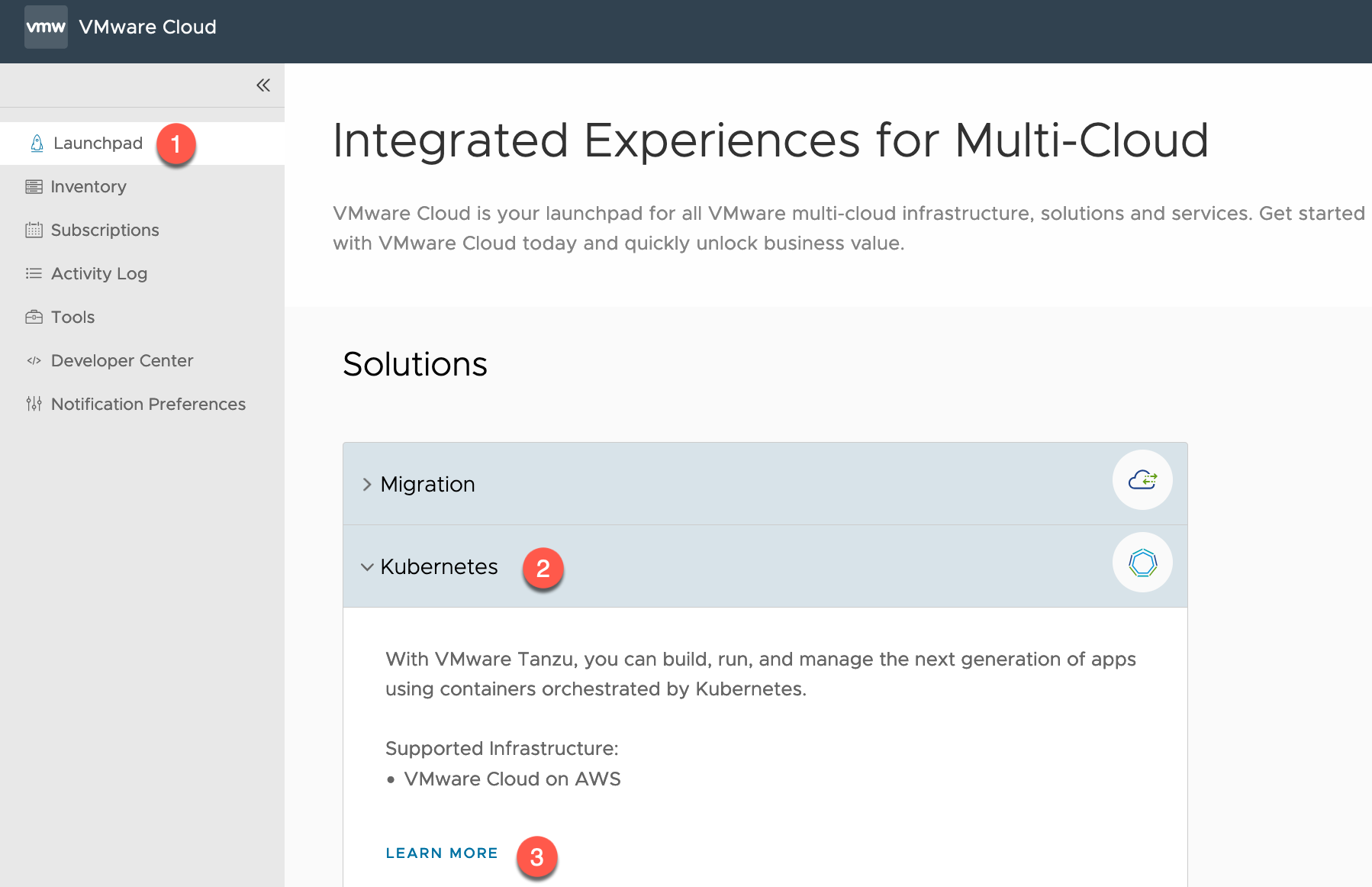

Within your VMC Console, you can either go via the Launchpad method or via the SDDC inventory item. I’ll cover both:

- Click on Launchpad

- Open the Kubernetes Tab

- Click Learn More

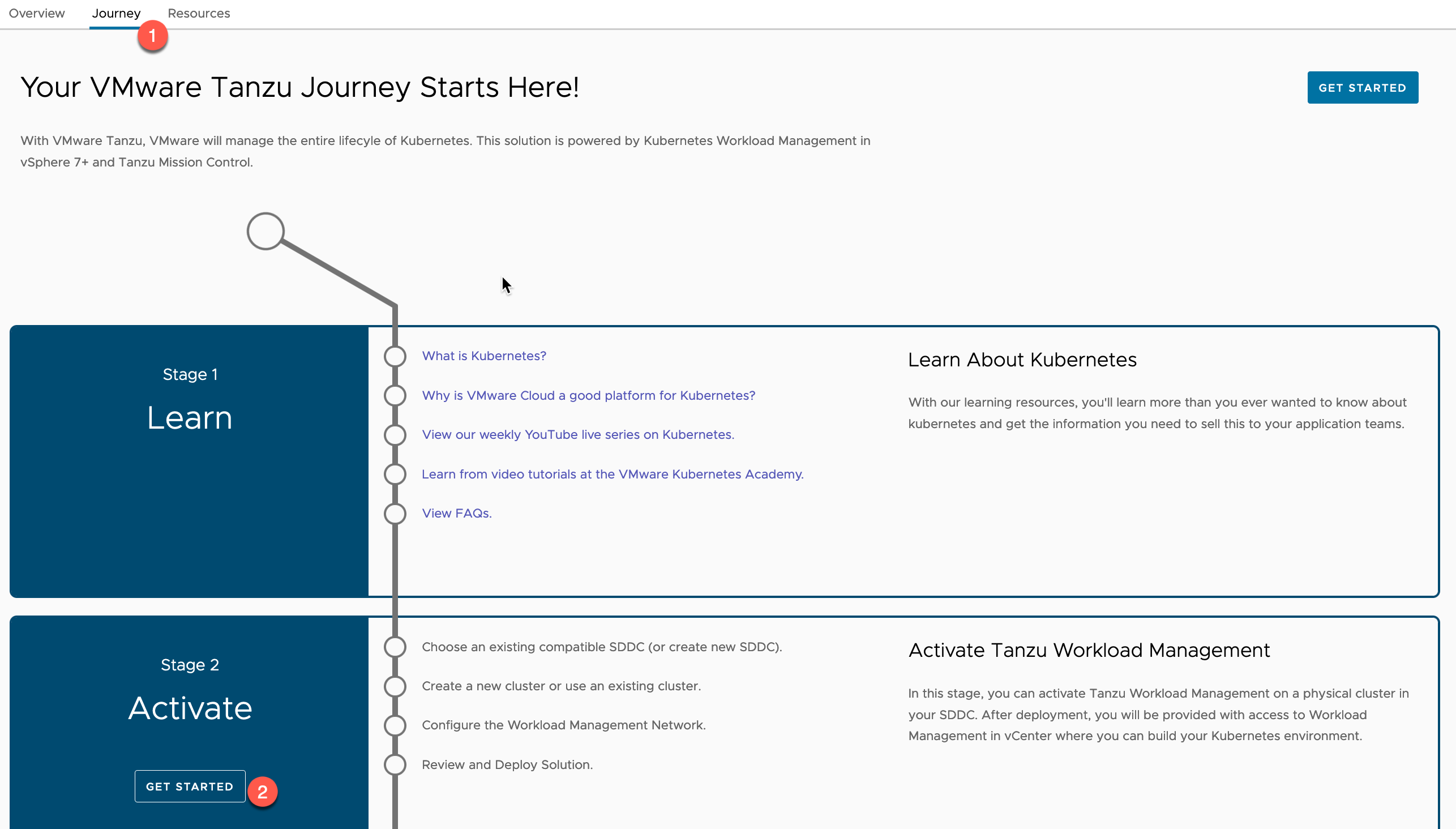

- Select the Journey Tab

- Under Stage 2 – Activate > Click Get Started

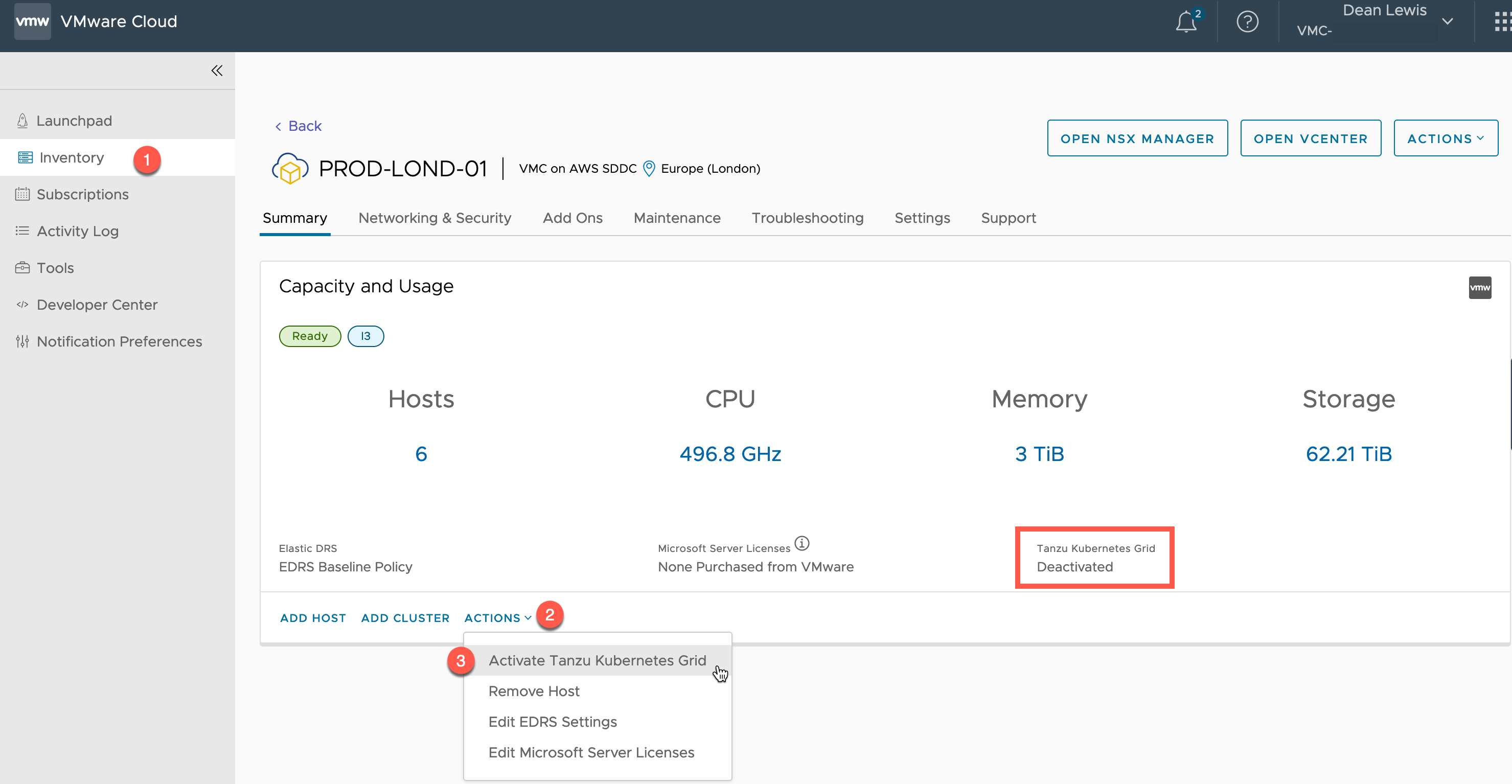

Alternatively, from the SDDC object in the Inventory view:

- Click Actions

- Click “Activate Tanzu Kubernetes Grid”

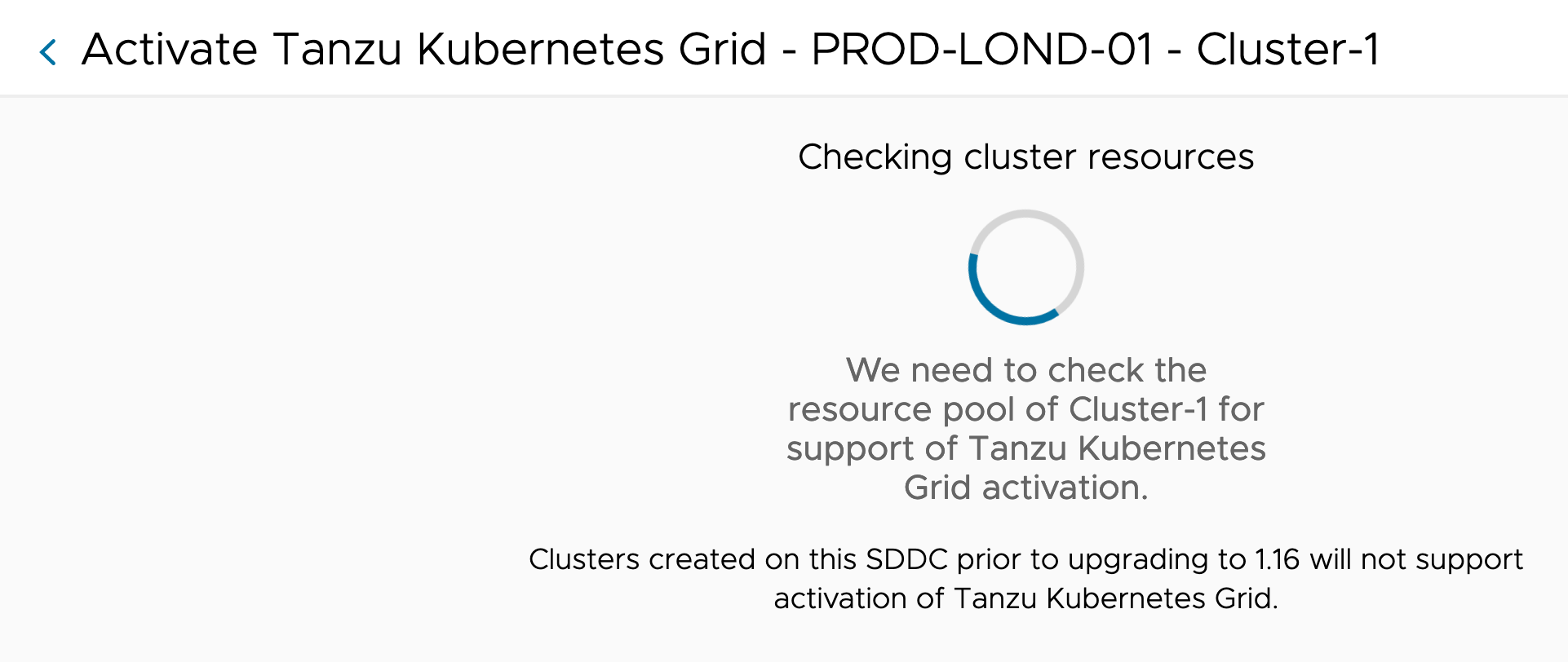

You will now be shown a status dialog while VMC checks that Tanzu Kubernetes Grid Service can be activated in your cluster.

This check confirms that you have the correct configuration and compute resources available.

If the check is successful, you will be presented with the configuration wizard. Essentially, all you must provide is your configuration for four networks.
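Before filling in the wizard, it can help to sanity-check the ranges you plan to use. The sketch below is only an illustration: the network names (`service`, `namespace`, `ingress`, `egress`) and the CIDR values are my assumptions for the example, not values taken from the wizard, and `find_overlaps` is a hypothetical helper. It uses Python's standard `ipaddress` module to flag any pair of planned ranges that overlap.

```python
import ipaddress
from itertools import combinations


def find_overlaps(networks: dict[str, str]) -> list[tuple[str, str]]:
    """Return pairs of network names whose CIDR ranges overlap.

    ip_network() raises ValueError if a CIDR is malformed or has host bits set.
    """
    parsed = {name: ipaddress.ip_network(cidr) for name, cidr in networks.items()}
    return [
        (name_a, name_b)
        for (name_a, net_a), (name_b, net_b) in combinations(parsed.items(), 2)
        if net_a.overlaps(net_b)
    ]


# Hypothetical names and ranges, for illustration only.
planned = {
    "service": "10.96.0.0/24",
    "namespace": "10.244.0.0/20",
    "ingress": "10.10.10.0/24",
    "egress": "10.10.11.0/24",
}

overlaps = find_overlaps(planned)
if overlaps:
    print("Overlapping ranges:", overlaps)
else:
    print("No overlaps between the planned ranges")
```

You would probably also want to add your existing SDDC management and compute ranges to the dictionary, so the same check covers them too.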