This blog post takes you through the steps for installing and configuring Kasten, the container-based enterprise backup software now owned by Veeam Software.

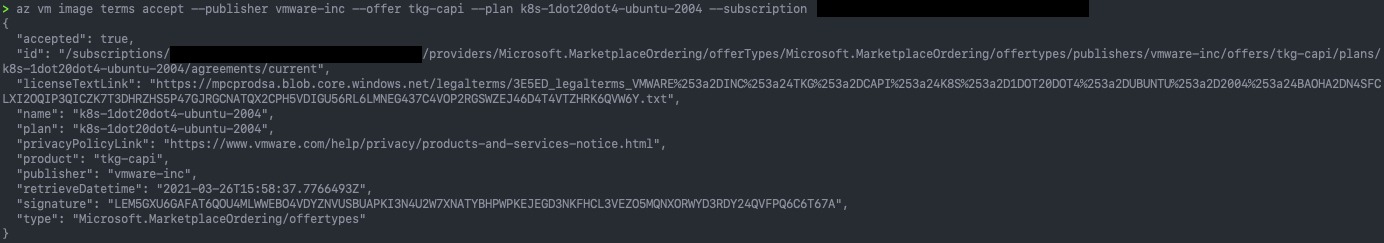

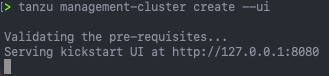

This deployment targets VMware Tanzu Kubernetes Grid running on top of VMware vSphere.

You can read how to create backup policies and restore your data in this blog post.

For the data protection demo, I’ll be using my trusty Pac-Man application that has data persistence using MongoDB.

Installing Kasten on Tanzu Kubernetes Grid

In this guide, I am going to use Helm; you can learn how to install it here.

Add the Kasten Helm charts repo.

helm repo add kasten https://charts.kasten.io/
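After adding the repo, it can help to refresh the local chart index and confirm that the K10 chart is visible before installing. A minimal sketch, assuming the repo was added under the name `kasten` as above; the function name `refresh_kasten_repo` is my own and not part of Helm or Kasten:

```shell
# Refresh the local chart index, then list the K10 chart from the "kasten" repo.
# refresh_kasten_repo is a hypothetical helper name used only in this sketch.
refresh_kasten_repo() {
  helm repo update && helm search repo kasten/k10
}
```

Run `refresh_kasten_repo` and check that `kasten/k10` appears in the results.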

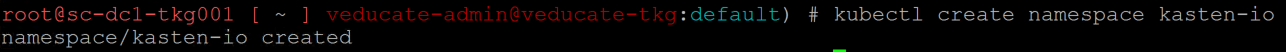

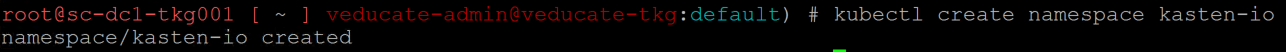

Create a Kubernetes namespace called “kasten-io”.

kubectl create namespace kasten-io

Next we are going to use Helm to install the Kasten software into our Tanzu Kubernetes Grid cluster.

helm install k10 kasten/k10 --namespace=kasten-io \
  --set externalGateway.create=true \
  --set auth.tokenAuth.enabled=true \
  --set global.persistence.storageClass=<storage-class-name>

Breaking down the command arguments:

- `--set externalGateway.create=true`
  - This creates an external service of type LoadBalancer, which allows access to the Kasten K10 Dashboard from outside your cluster.
- `--set auth.tokenAuth.enabled=true`
  - This enables token-based authentication for the Kasten K10 Dashboard.
- `--set global.persistence.storageClass=<storage-class-name>`
  - This sets the storage class used for the PVs/PVCs created by the Kasten install. (In a TKG guest cluster there may not be a default storage class.)
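Since the storage class name differs between clusters, you can list the available classes with `kubectl get storageclass` and then substitute the one you want. The sketch below only prints the install command for a given storage class so you can review it before running it; `k10_install_cmd` is a hypothetical helper name, not something shipped with Helm or Kasten:

```shell
# Print the Helm install command from this post for a given storage class.
# k10_install_cmd is a hypothetical helper; it does not run the install itself.
k10_install_cmd() {
  if [ -z "$1" ]; then
    echo "usage: k10_install_cmd <storage-class-name>" >&2
    return 1
  fi
  printf 'helm install k10 kasten/k10 --namespace=kasten-io'
  printf ' --set externalGateway.create=true'
  printf ' --set auth.tokenAuth.enabled=true'
  printf ' --set global.persistence.storageClass=%s\n' "$1"
}
```

For example, `k10_install_cmd my-storage-class` prints the full command with `my-storage-class` filled in (that class name is a placeholder).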

You will see output similar to the following.

NAME: k10

LAST DEPLOYED: Fri Feb 26 01:17:55 2021

NAMESPACE: kasten-io

STATUS: deployed

REVISION: 1

TEST SUITE: None

NOTES:

Thank you for installing Kasten’s K10 Data Management Platform!

Documentation can be found at https://docs.kasten.io/.

How to access the K10 Dashboard:

The K10 dashboard is not exposed externally. To establish a connection to it use the following

`kubectl --namespace kasten-io port-forward service/gateway 8080:8000`

The Kasten dashboard will be available at: `http://127.0.0.1:8080/k10/#/`

The K10 Dashboard is accessible via a LoadBalancer. Find the service's EXTERNAL IP using:

`kubectl get svc gateway-ext --namespace kasten-io -o wide`

And use it in following URL

`http://SERVICE_EXTERNAL_IP/k10/#/`

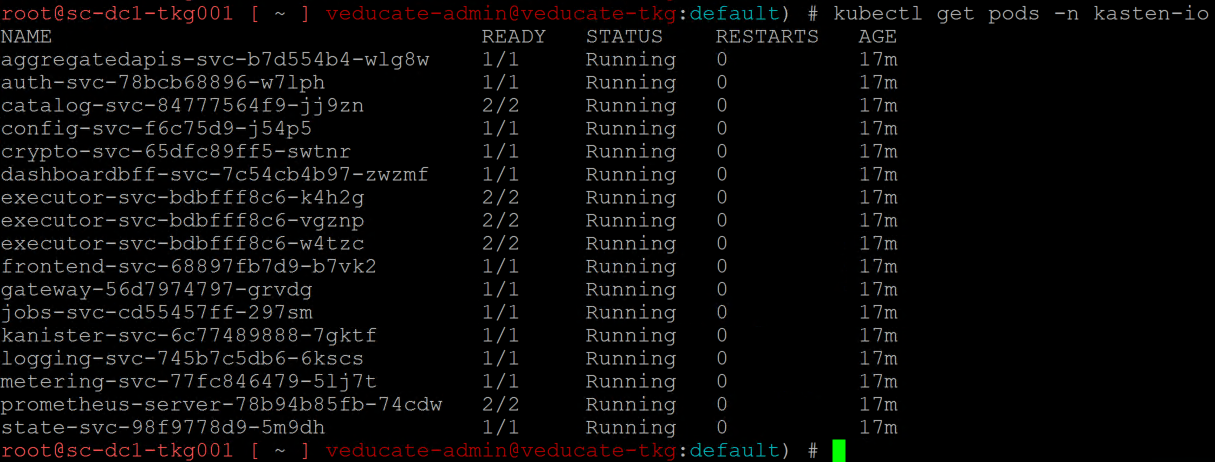

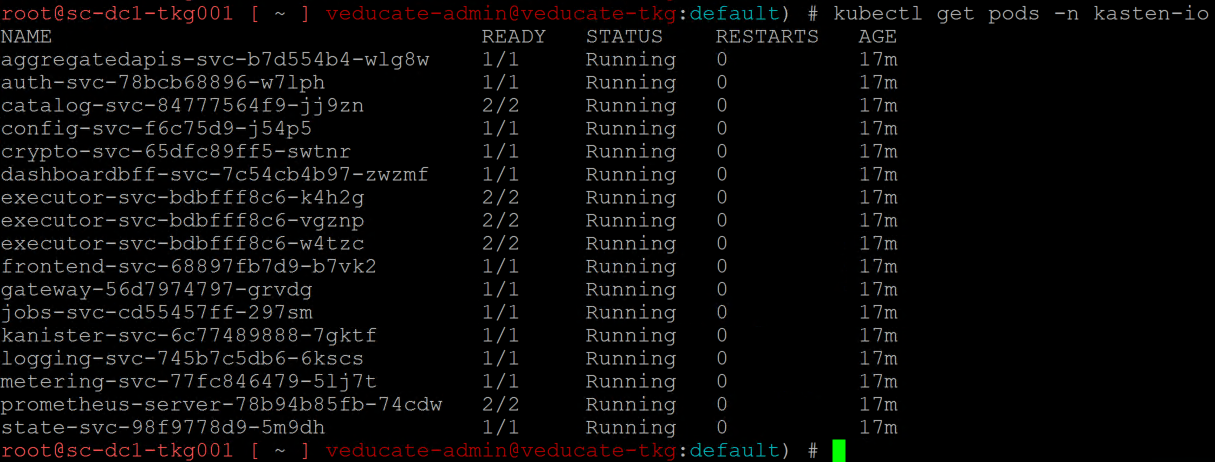

It will take a few minutes for your pods to reach the Running state; you can check their status with the following command:

kubectl get pods -n kasten-io
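Rather than re-running the command by hand, you could let `kubectl wait` block until the pods report Ready. This is a sketch under a few assumptions: the helper name `wait_for_k10` and the 300s default timeout are my own choices, and `--all` may not suit a namespace that also contains completed (non-Ready) pods:

```shell
# Block until every pod in the kasten-io namespace reports Ready,
# or until the timeout expires (default 300s, an arbitrary choice for this sketch).
wait_for_k10() {
  k10_timeout="${1:-300s}"
  kubectl wait --namespace kasten-io --for=condition=Ready pods --all --timeout="$k10_timeout"
}
```

Call `wait_for_k10` for the default, or `wait_for_k10 600s` to allow more time on a slower cluster.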

Next we need to get our LoadBalancer IP address for the External Web Front End, so that we can connect to the Kasten K10 Dashboard.

kubectl get svc -n kasten-io
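If you would rather pull out just the address than read it from the table, a JSONPath query against the `gateway-ext` service named in the Helm notes above should work. This is a rough sketch: the helper names are hypothetical, and it assumes your load balancer reports an IP address (some report a hostname instead, in which case the `ip` field will be empty):

```shell
# Print the external IP of the gateway-ext service (empty if none is assigned yet).
# Both helper names are hypothetical and used only for this sketch.
k10_external_ip() {
  kubectl get svc gateway-ext --namespace kasten-io \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Build the dashboard URL from an address, following the pattern in the Helm notes.
k10_dashboard_url() {
  echo "http://$1/k10/#/"
}
```

Running `k10_dashboard_url "$(k10_external_ip)"` then prints the URL to open in your browser.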
