Just a quick blog post on how to authenticate Docker to a Harbor Image Registry using a Robot Account, which is useful for programmatic access to push/pull images from your registry.

Harbor introduced the capability for administrators to create system robot accounts that you can use to run automated actions in your Harbor instances. System robot accounts allow you to perform maintenance or repeated tasks across all or a subset of projects in your Harbor instance.

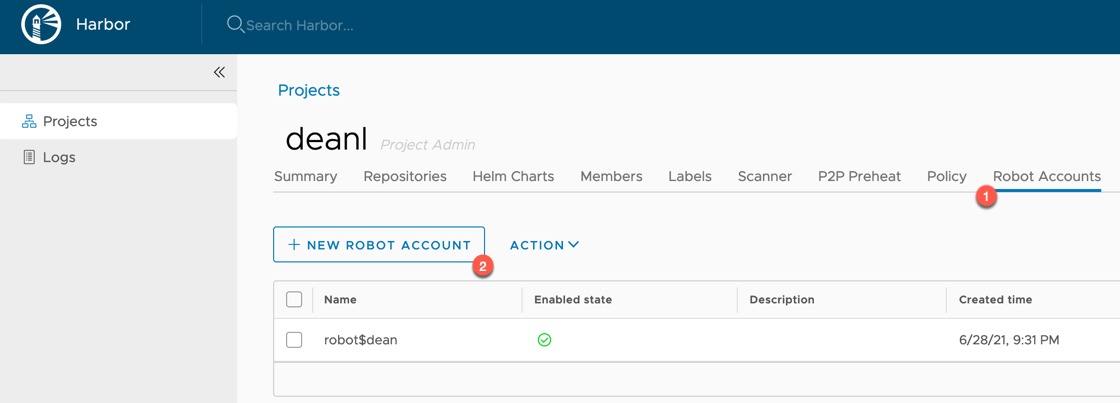

In Harbor, to create your robot account, within your project:

- Click the Robot Accounts tab

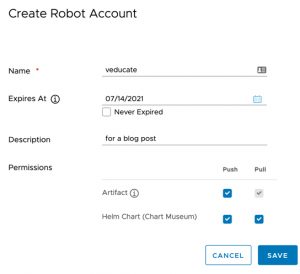

- Click New Robot Account

- Enter your details as necessary and click save

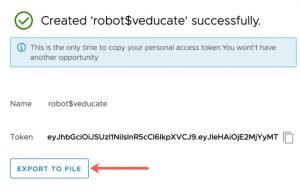

- Select export to file and save the JSON file.

This file will be saved with a “$” in the name. You can either change the name of the file, or escape the character in the terminal, which is what I’ll be doing below.
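If you would rather rename the file than escape the character every time, a small sketch like the one below could do it. The helper names `safe_name` and `rename_robot_file` are made up for illustration, and replacing “$” with “-” is just one possible naming choice.

```shell
# safe_name (hypothetical helper): print a file name with every "$" replaced by "-".
safe_name() {
  printf '%s\n' "$1" | tr '$' '-'
}

# rename_robot_file (hypothetical helper): rename the exported file on disk.
# Pass the name in single quotes so the shell does not try to expand "$...".
rename_robot_file() {
  mv -- "$1" "$(safe_name "$1")"
}
```

For example, `rename_robot_file 'robot$veducate.json'` would leave you with `robot-veducate.json`. Single quotes are the simplest way to pass the original name, since nothing inside them is expanded by the shell.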

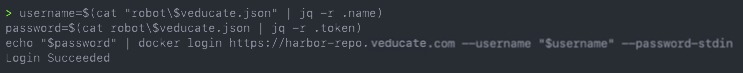

Within your terminal run the following commands:

    username=$(cat file.json | jq -r .name)
    password=$(cat file.json | jq -r .token)   # see the October 2021 update below
    echo "$password" | docker login https://URL --username "$username" --password-stdin

Example:

    username=$(cat "robot\$veducate.json" | jq -r .name)
    password=$(cat "robot\$veducate.json" | jq -r .token)
    echo "$password" | docker login https://harbor-repo.veducate.com --username "$username" --password-stdin

Update October 2021: since the Harbor 2.2 release, I found that the key name within the JSON file has changed from token to secret, so this is the updated example:

    username=$(cat robot-veducate.json | jq -r .name)
    password=$(cat robot-veducate.json | jq -r .secret)
    echo "$password" | docker login https://harbor-repo.veducate.com --username "$username" --password-stdin
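Since the key name differs between the older (token) and newer (secret) export files, one option is a small wrapper that handles both. This is only a sketch: the function names `robot_name`, `robot_secret` and `harbor_login` are my own, and it assumes the exported file contains a `name` key plus either `secret` or `token`, as in the examples above.

```shell
# robot_name (hypothetical helper): print the robot account name from the exported JSON file.
robot_name() {
  jq -r '.name' "$1"
}

# robot_secret (hypothetical helper): print the credential from the exported JSON file.
# jq's alternative operator (//) falls back to .token when .secret is missing or null.
robot_secret() {
  jq -r '.secret // .token' "$1"
}

# harbor_login (hypothetical helper): log Docker in to a registry.
#   $1 = path to the exported JSON file
#   $2 = registry URL
# The credential is piped straight to docker login so it never appears on the command line.
harbor_login() {
  robot_secret "$1" | docker login "$2" \
    --username "$(robot_name "$1")" --password-stdin
}
```

Usage would look like `harbor_login 'robot$veducate.json' https://harbor-repo.veducate.com`. Wrapping the file name in single quotes avoids having to escape the “$”.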

Now you can pull/push images to your repository using these authentication details.

Regards