In this blog post we will look at how to delete a Tanzu Kubernetes Grid cluster that has been provisioned by Tanzu Mission Control. We will cover the following areas:

Below are the other blog posts in the series.

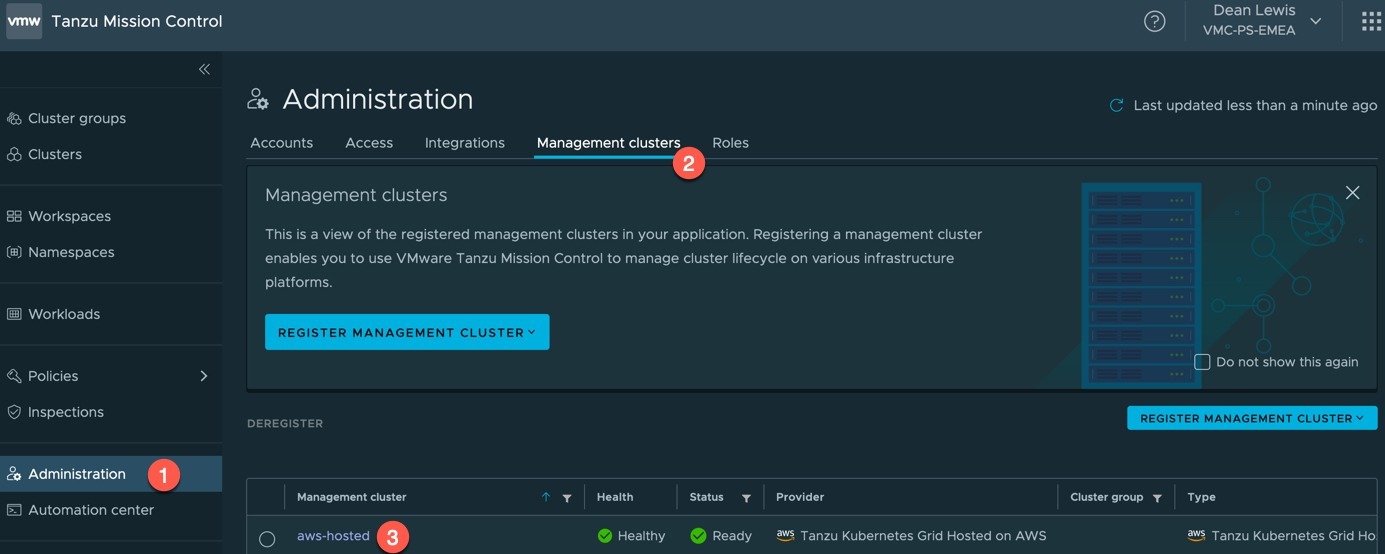

Tanzu Mission Control

- Getting Started Tanzu Mission Control
- Cluster Inspections
- Workspaces and Policies
- Data Protection
- Deploying TKG clusters to AWS
- Upgrading a provisioned cluster
- Delete a provisioned cluster
- TKG Management support and provisioning new clusters
- TMC REST API - Postman Collection
- Using custom policies to ensure Kasten protects a deployed application

We are going to use the cluster I created in my last blog post.

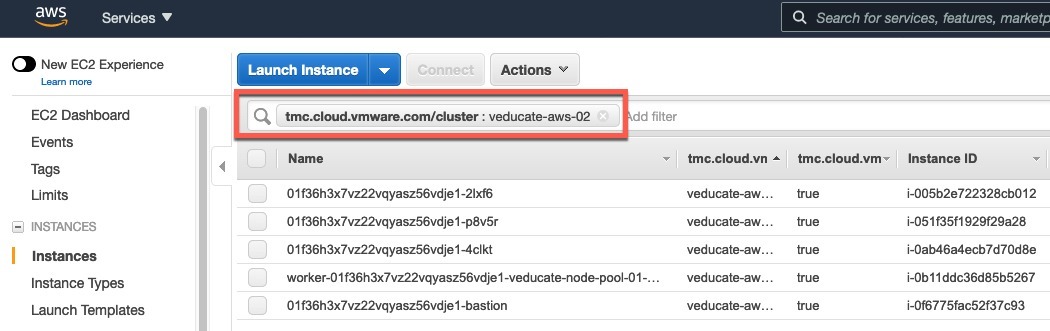

Below are the EC2 instances that make up my TMC-provisioned cluster. Here I have filtered the view using the field “tmc.cloud.vmware.com/cluster” plus the cluster name.

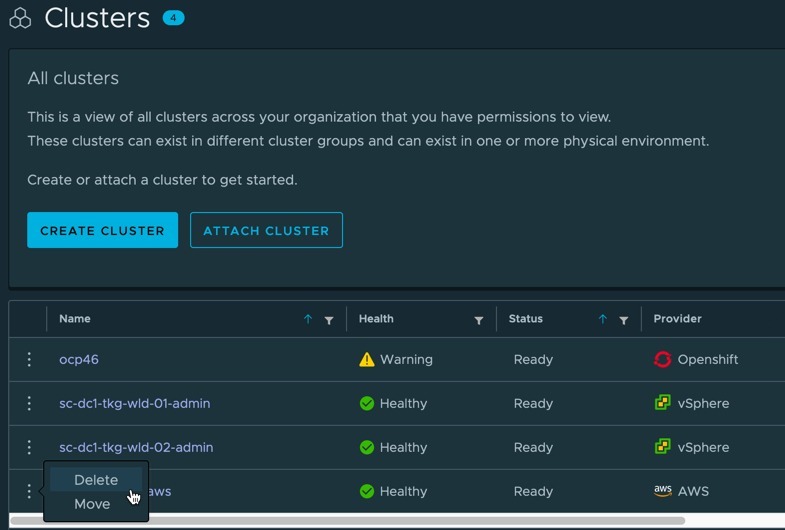
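If you would rather check the same thing from a script than from the EC2 console, the filter can be sketched in Python with boto3. This is a minimal sketch, not something from the TMC documentation. It assumes the field above is an EC2 tag whose key is `tmc.cloud.vmware.com/cluster` and whose value is the cluster name, that boto3 is installed, and that AWS credentials are already configured. The function names are my own, for illustration only.

```python
from typing import Dict, List

# Assumption: the instances carry an EC2 tag with this key, and the tag value
# is the cluster name. Check the Tags tab on one of your instances first.
TMC_CLUSTER_TAG = "tmc.cloud.vmware.com/cluster"


def cluster_tag_filter(cluster_name: str) -> List[Dict[str, object]]:
    """Build the EC2 filter matching instances tagged with the cluster name."""
    if not cluster_name:
        raise ValueError("cluster_name must not be empty")
    return [{"Name": f"tag:{TMC_CLUSTER_TAG}", "Values": [cluster_name]}]


def list_cluster_instances(cluster_name: str, region: str) -> List[Dict[str, str]]:
    """Return the ID and state of every EC2 instance matching the cluster tag."""
    # Imported here so the filter helper above works without boto3 installed.
    import boto3

    ec2 = boto3.client("ec2", region_name=region)
    paginator = ec2.get_paginator("describe_instances")

    instances = []
    for page in paginator.paginate(Filters=cluster_tag_filter(cluster_name)):
        for reservation in page["Reservations"]:
            for instance in reservation["Instances"]:
                instances.append(
                    {
                        "id": instance["InstanceId"],
                        "state": instance["State"]["Name"],
                    }
                )
    return instances
```

Calling `list_cluster_instances("my-cluster", "eu-west-1")` (both values are placeholders) before and after the deletion is a quick way to see the instance states change. Terminated instances usually stay visible in the results for a short while before they disappear.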

Deleting a provisioned cluster in the TMC UI

In the TMC UI, go to the Clusters view, click the three dots next to the cluster you want to remove, and select Delete.

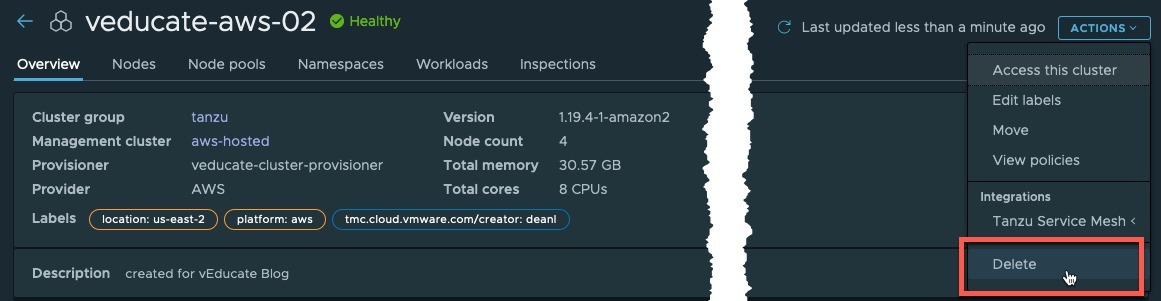

Alternatively, within the cluster object view, click Actions, then Delete.

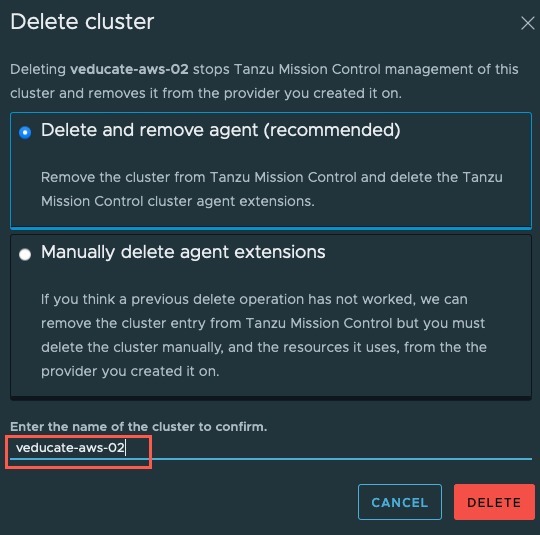

Both options bring up the confirmation dialog box below.

Select one of the following options:

- Delete and remove agent (recommended)
  - Removes the cluster from TMC and deletes the agent extensions

- Manually delete agent extensions
  - A secondary option for when a cluster delete fails and a manual removal is needed

To confirm the deletion, enter the name of the cluster you want to delete.
