In my previous blog post, I provided a full end-to-end guide to deploying and configuring the managed Tanzu Kubernetes Service offering as part of VMware Cloud on AWS (VMC). It finished with some example application deployments and configurations.

In this blog post, I move on to show you how to integrate this environment with Tanzu Mission Control (TMC), which provides fleet management for your Kubernetes instances. I’ve written several blog posts on TMC previously, which you can find below:

Tanzu Mission Control:

- Getting Started

- Cluster Inspections

- Workspaces and Policies

- Data Protection

- Deploying TKG clusters to AWS

- Upgrading a provisioned cluster

- Delete a provisioned cluster

- TKG Management support and provisioning new clusters

- TMC REST API - Postman Collection

- Using custom policies to ensure Kasten protects a deployed application

Management with Tanzu Mission Control

The first step is to connect the Supervisor cluster running in VMC to our Tanzu Mission Control environment.

Connecting the Supervisor Cluster to TMC

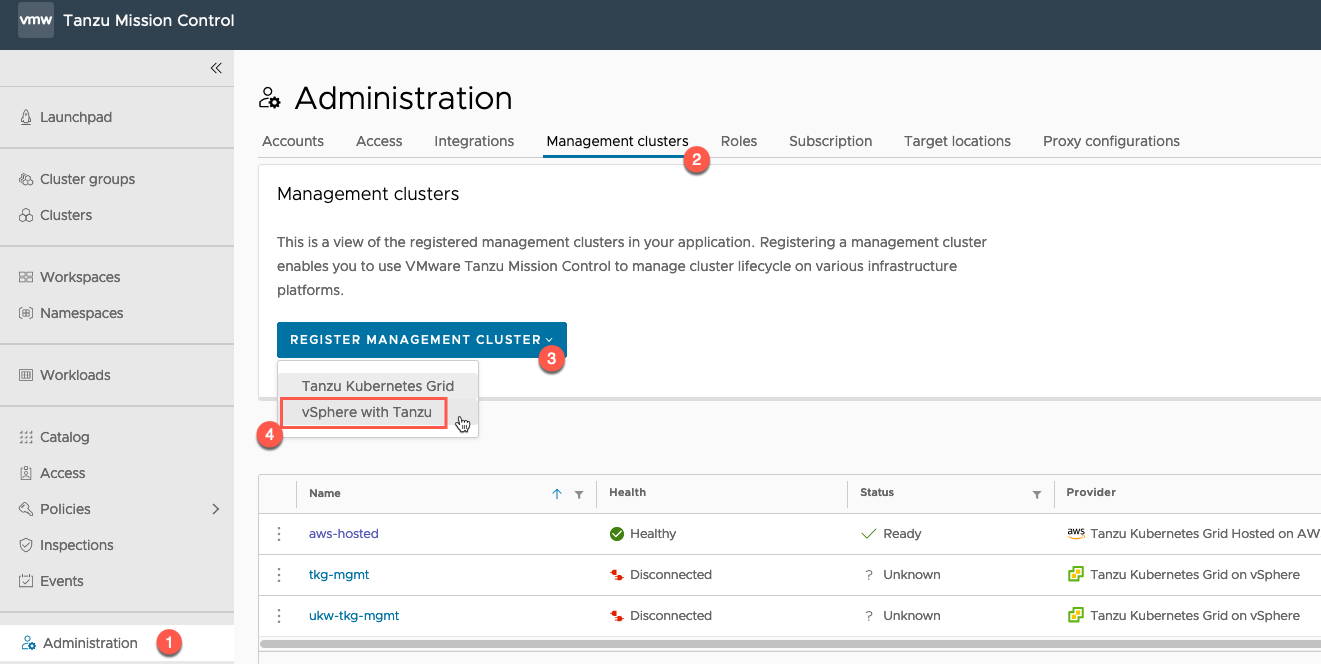

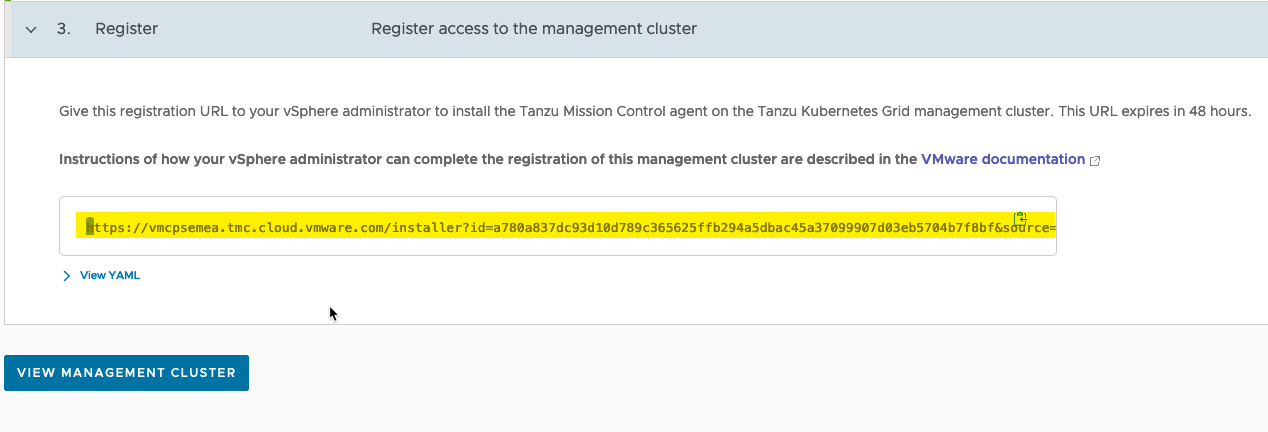

Within the TMC console, go to:

- Administration

- Management Clusters

- Register Management Cluster

- Select “vSphere with Tanzu”

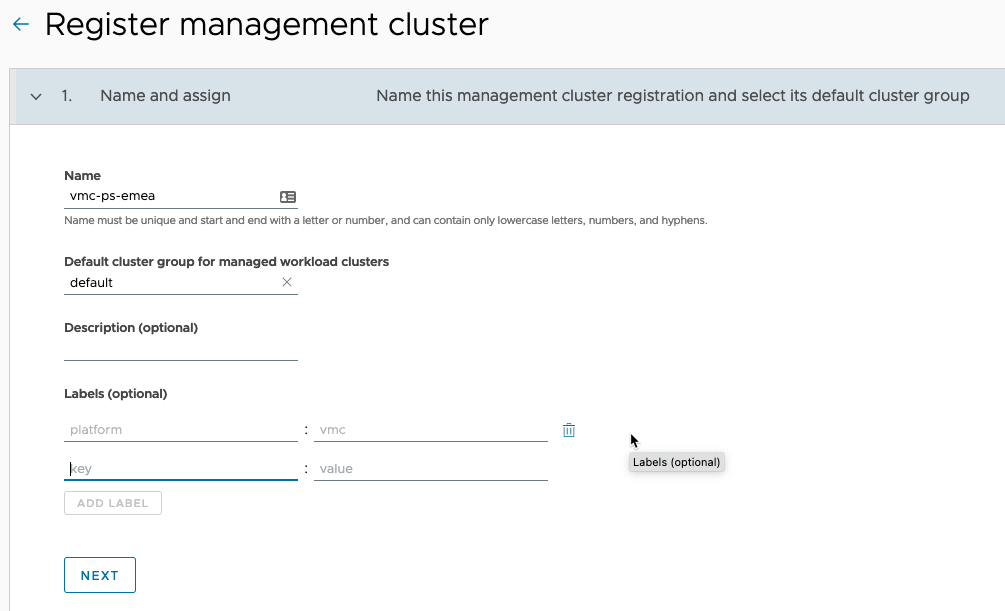

On the Register Management Cluster page:

- Set the friendly name for the cluster in TMC

- Select the default cluster group that managed workload clusters will be added to

- Set any description and labels as necessary

- Proxy settings for a Supervisor Cluster running in VMC are not supported, so ignore Step 2.

- Copy the registration URL.

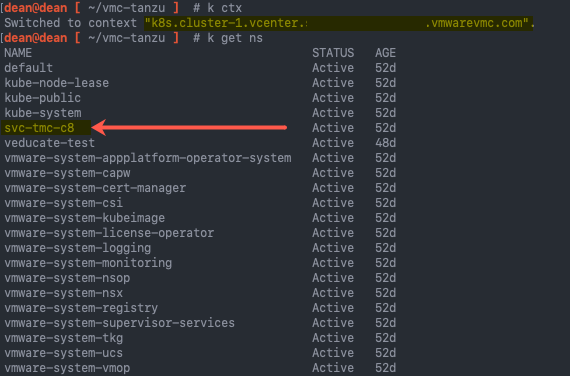

- Log in to your vSphere with Tanzu Supervisor cluster.

- Find the namespace that identifies your cluster for TMC configuration by running `kubectl get ns`.

- Its name will follow the pattern “svc-tmc-xx”.

- Copy this namespace name
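The namespace lookup above, together with the registration URL copied from TMC, can also be scripted. Below is a minimal Python sketch. It assumes that `kubectl` is already logged in to the Supervisor cluster context, and that the next step is to apply an `AgentInstall` resource (`installers.tmc.cloud.vmware.com/v1alpha1`, named `tmc-agent-installer-config`) in that namespace, as described in VMware's documentation for registering a Supervisor cluster with TMC. Check those field names against the docs for your version. The helper names `find_tmc_namespace` and `agent_install_manifest` are hypothetical.

```python
import json
import subprocess
import sys

# The TMC namespace on the Supervisor cluster follows the "svc-tmc-xx" pattern.
TMC_NS_PREFIX = "svc-tmc-"


def find_tmc_namespace(ns_list: dict) -> str:
    """Return the single namespace whose name starts with "svc-tmc-".

    `ns_list` is the parsed JSON output of `kubectl get ns -o json`.
    """
    names = [item["metadata"]["name"] for item in ns_list.get("items", [])]
    matches = [name for name in names if name.startswith(TMC_NS_PREFIX)]
    if len(matches) != 1:
        raise ValueError(f"expected exactly one {TMC_NS_PREFIX}* namespace, found {matches}")
    return matches[0]


def agent_install_manifest(namespace: str, registration_url: str) -> dict:
    """Build the AgentInstall resource (assumed schema) that triggers the TMC agent install."""
    return {
        "apiVersion": "installers.tmc.cloud.vmware.com/v1alpha1",
        "kind": "AgentInstall",
        "metadata": {"name": "tmc-agent-installer-config", "namespace": namespace},
        "spec": {"operation": "INSTALL", "registrationLink": registration_url},
    }


def register(registration_url: str) -> None:
    """Look up the TMC namespace and apply the AgentInstall resource with kubectl."""
    raw = subprocess.run(
        ["kubectl", "get", "ns", "-o", "json"],
        check=True, capture_output=True, text=True,
    ).stdout
    namespace = find_tmc_namespace(json.loads(raw))
    manifest = json.dumps(agent_install_manifest(namespace, registration_url))
    # "kubectl apply -f -" reads the manifest (JSON or YAML) from stdin.
    subprocess.run(["kubectl", "apply", "-f", "-"], input=manifest, check=True, text=True)


if __name__ == "__main__" and len(sys.argv) == 2:
    # Usage: python <script>.py '<registration URL copied from TMC>'
    register(sys.argv[1])
```

Keeping the lookup and manifest-building steps as pure functions separates them from the `kubectl` calls, so you can check them without a cluster. The script refuses to continue if it finds zero or several `svc-tmc-` namespaces rather than guessing.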

Continue reading VMware Cloud on AWS – Managed Tanzu Kubernetes Grid with Tanzu Mission Control