This is probably one of the simplest blog posts I’ll publish.

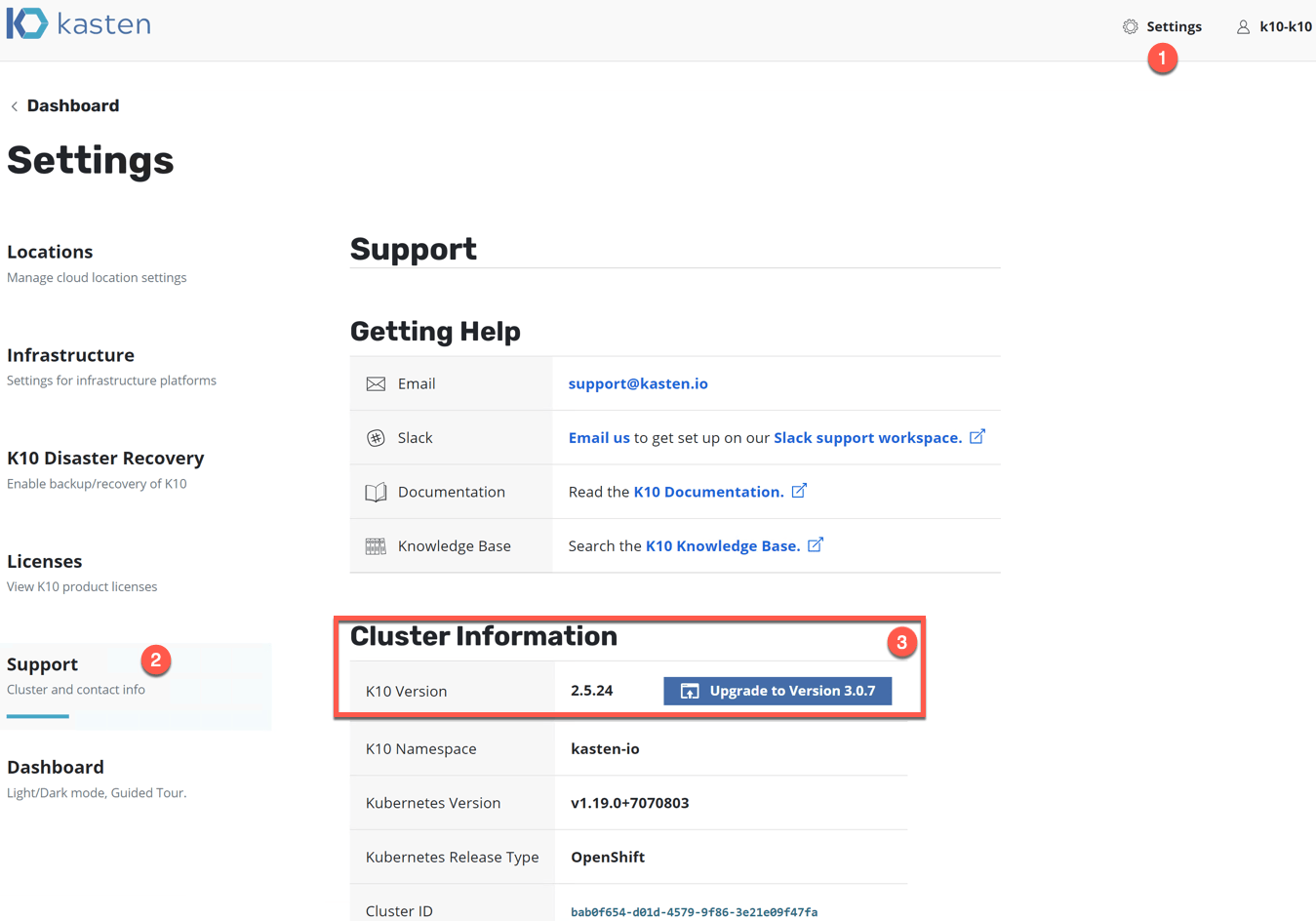

To check whether an update is available for your Kasten install, do the following.

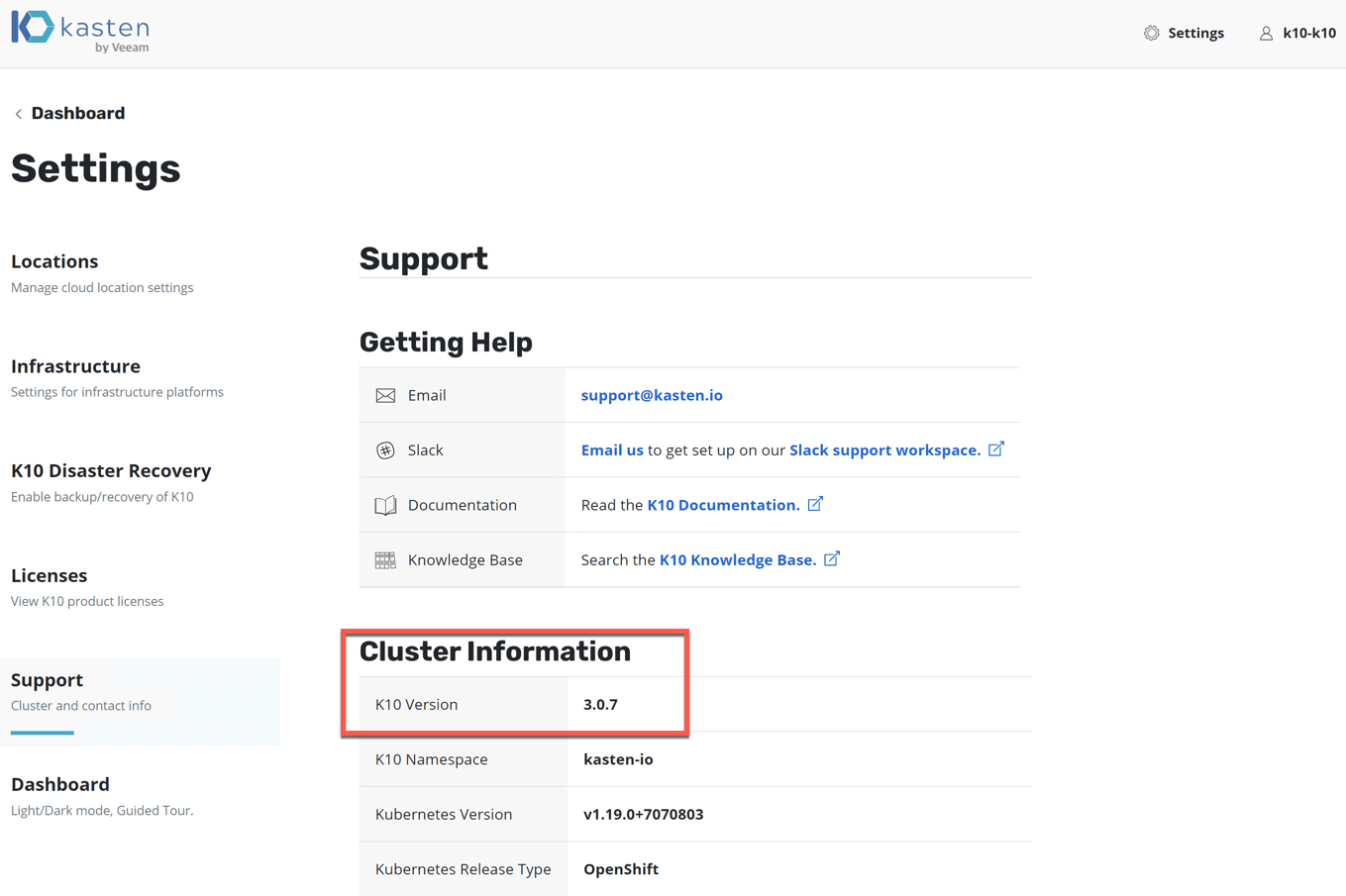

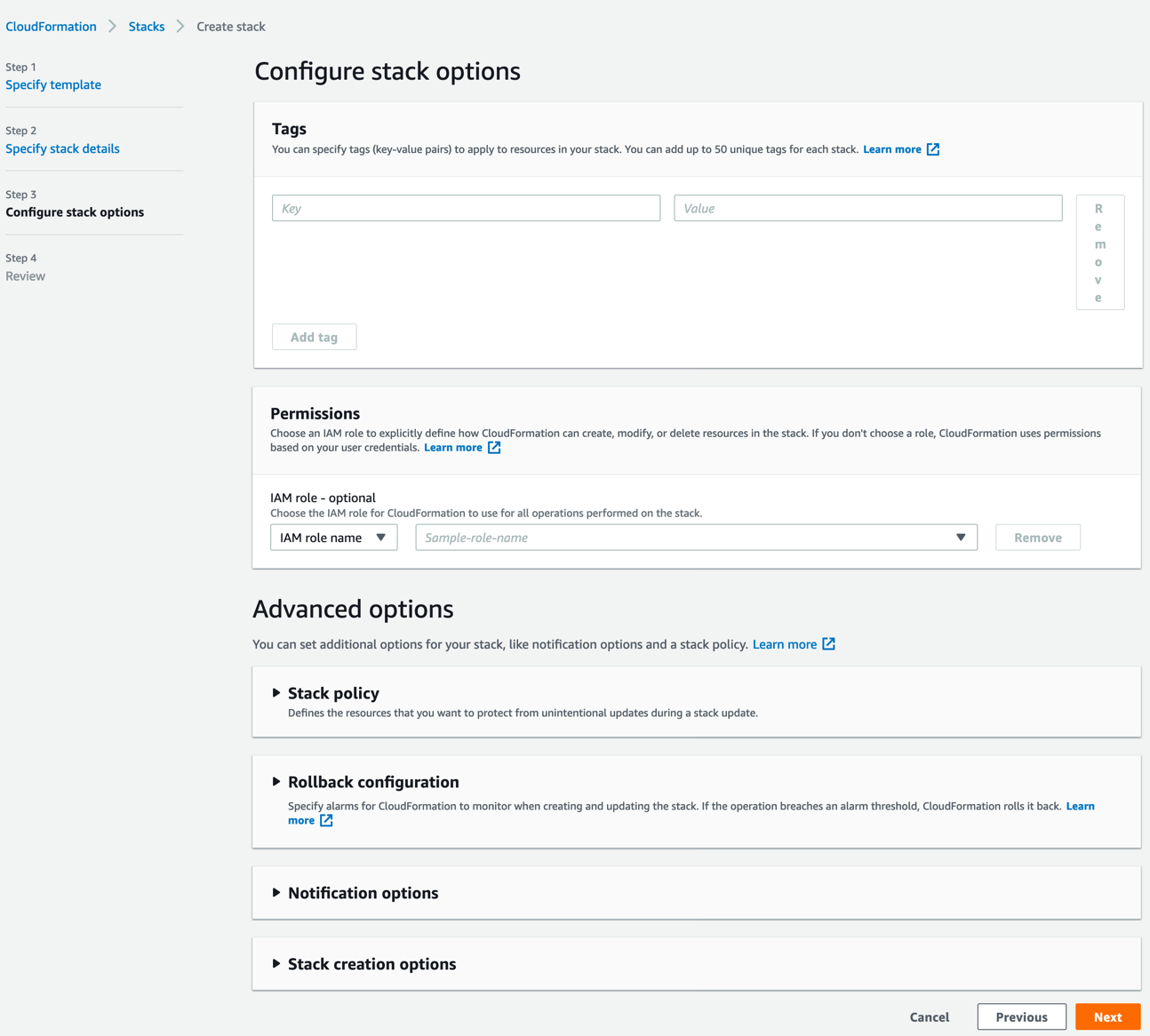

- In the dashboard, click Settings

- Click on Support

See if there is a notification under the Cluster Information heading.

Clicking the “upgrade to version x.x.x” button takes you to the upgrade page in the Kasten documentation.

Alternatively, you can follow the same instructions below, with real-life screenshots.

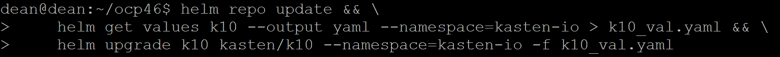

To upgrade, run the following with Helm:

```bash
helm repo update && \
  helm get values k10 --output yaml --namespace=kasten-io > k10_val.yaml && \
  helm upgrade k10 kasten/k10 --namespace=kasten-io -f k10_val.yaml
```
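If you upgrade often, the same three steps can be wrapped in a small Bash function. This is a minimal sketch rather than anything from the Kasten docs: the function name `upgrade_k10` and its optional arguments are my own, and it assumes the release is called `k10`, lives in the `kasten-io` namespace, and that the `kasten` Helm repo has already been added. Like the one-liner, it stops at the first step that fails.

```bash
# Hypothetical helper wrapping the upgrade command above; source it, then call it.
# Assumes release "k10", namespace "kasten-io" and a Helm repo named "kasten".
upgrade_k10() {
  local release="${1:-k10}"
  local namespace="${2:-kasten-io}"
  local values_file="${3:-k10_val.yaml}"

  # Refresh the local chart index so the newest k10 chart is available.
  helm repo update || return 1

  # Save the current release's user-supplied values so the upgrade keeps
  # your existing configuration.
  helm get values "$release" --output yaml --namespace="$namespace" \
    > "$values_file" || return 1

  # Upgrade the release to the latest kasten/k10 chart with those values.
  helm upgrade "$release" kasten/k10 --namespace="$namespace" -f "$values_file"
}
```

Run `upgrade_k10` with no arguments for the defaults, or pass a different release, namespace and values file, e.g. `upgrade_k10 k10 kasten-io my_values.yaml`.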

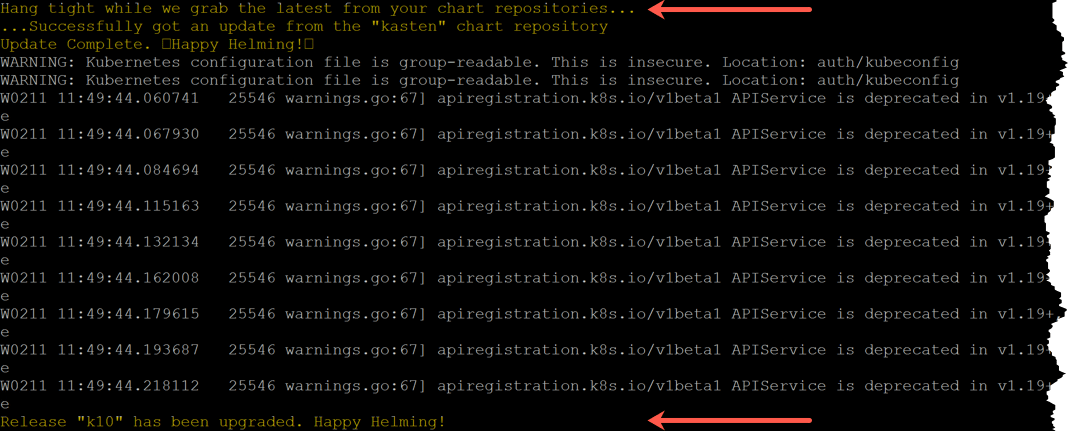

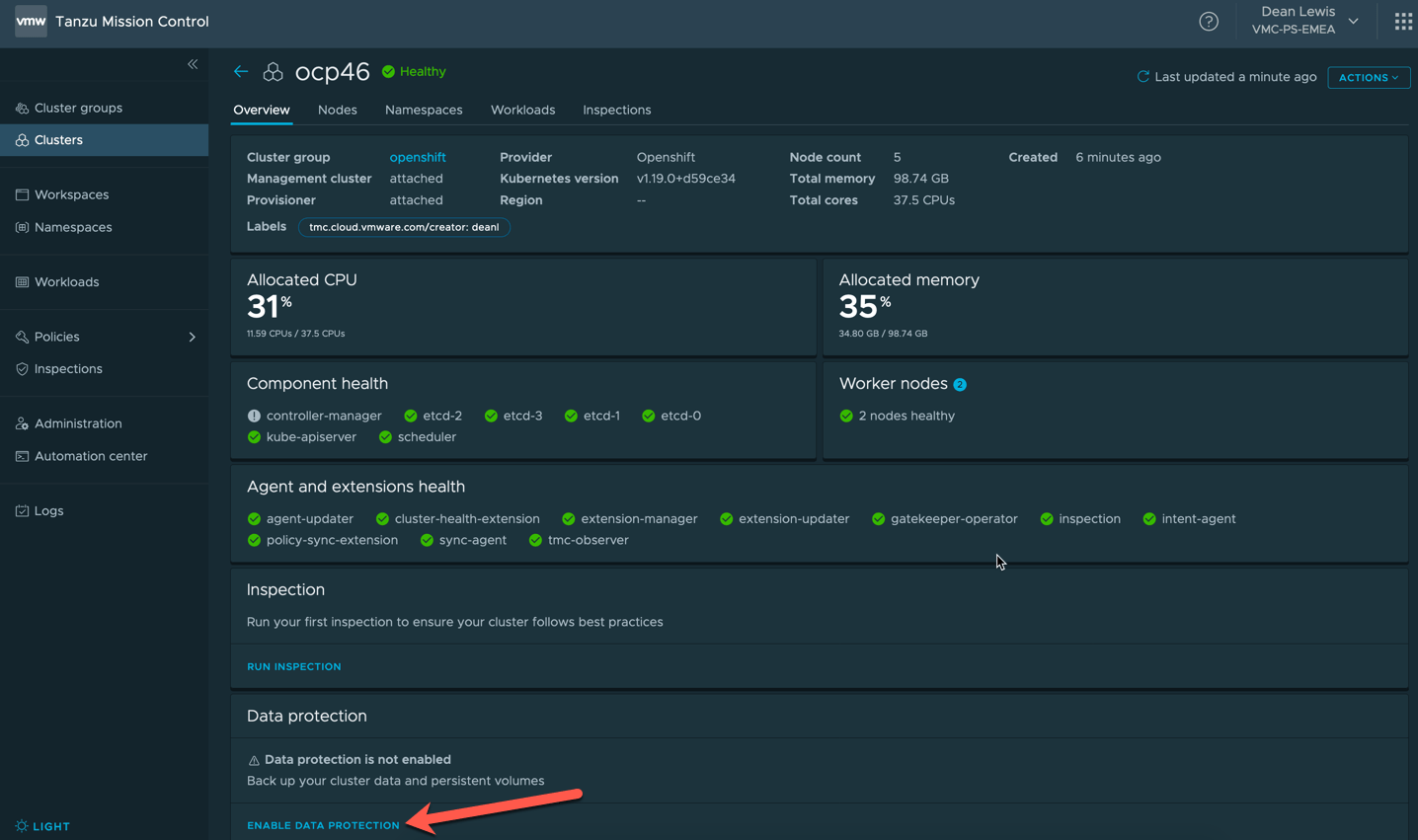

You will see output similar to the following.

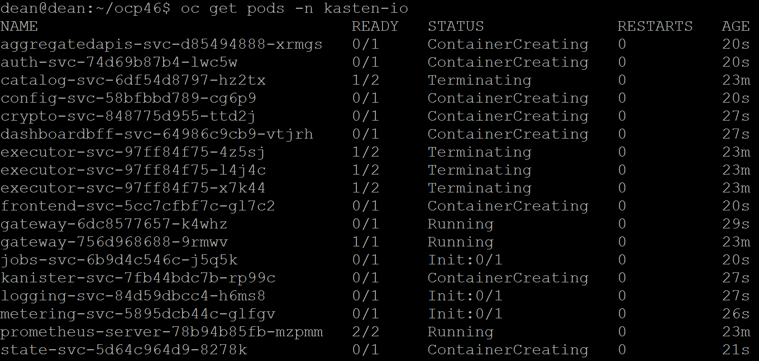

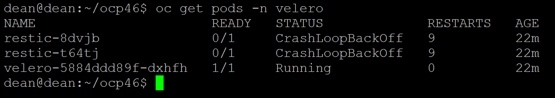

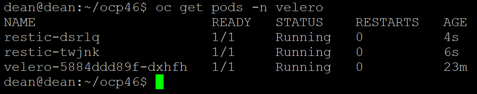

If I now look at the pods in my “kasten-io” namespace, I can see they are being recreated, because the deployments have been updated with the new configuration, including the new container images.
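Rather than eyeballing the pod list, you can block until the rollout has finished. A minimal sketch, assuming the K10 components you care about run as Deployments in `kasten-io`; the function name `wait_for_k10_rollout` and the default timeout are my own choices. It runs `kubectl rollout status` against each Deployment in turn.

```bash
# Hypothetical helper: wait for every Deployment in the namespace to finish rolling out.
# Assumes the K10 components run as Deployments in "kasten-io".
wait_for_k10_rollout() {
  local namespace="${1:-kasten-io}"
  local timeout="${2:-300s}"
  local deployments deploy

  # Names come back as "deployment.apps/<name>", ready to pass to rollout status.
  deployments=$(kubectl get deployments --namespace "$namespace" --output name) || return 1

  for deploy in $deployments; do
    # Blocks until this Deployment's new pods are ready, or the timeout expires.
    kubectl rollout status "$deploy" --namespace "$namespace" --timeout="$timeout" || return 1
  done
}
```

For a live view like the one in the screenshot, `kubectl get pods --namespace kasten-io --watch` also works.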

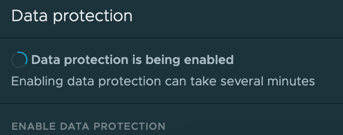

Finally, looking back at the Cluster Information in my Kasten dashboard, I can see I am now on the latest version.
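You can also confirm the version from the command line. A minimal sketch, assuming the same release name and namespace as above (the function name `show_k10_versions` is hypothetical): it prints the deployed release, whose CHART column includes the chart version, alongside the newest `kasten/k10` chart in your local repo index. Running it before an upgrade gives a CLI alternative to the dashboard notification.

```bash
# Hypothetical helper: print the deployed K10 release next to the newest chart.
# Assumes release "k10", namespace "kasten-io" and a Helm repo named "kasten".
show_k10_versions() {
  local namespace="${1:-kasten-io}"

  # Refresh the chart index quietly so the search sees the latest release.
  helm repo update > /dev/null || return 1

  echo "Deployed release (chart version is in the CHART column):"
  helm list --namespace "$namespace" --filter '^k10$' || return 1

  echo "Newest chart in the kasten repo:"
  helm search repo kasten/k10
}
```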

Regards